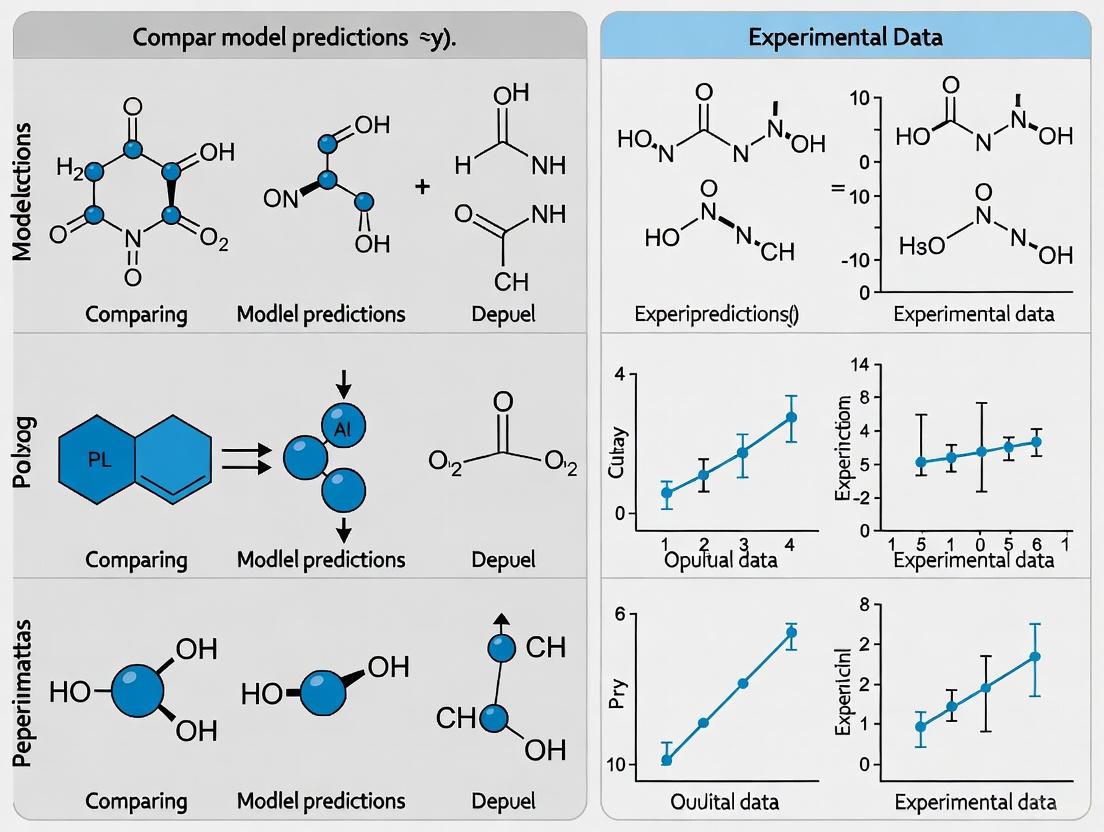

Bridging the Digital and Physical: A Strategic Framework for Comparing Model Predictions with Experimental Data in Biomedical Research

Abstract

This article provides a comprehensive guide for researchers and drug development professionals on the critical process of validating computational models against experimental data. It explores the foundational importance of this comparison for ensuring model reliability and generalizability. The content delves into practical validation methodologies, from basic hold-out techniques to advanced cross-validation, specifically within contexts like Model-Informed Drug Development (MIDD). It addresses common challenges such as data mismatch and overfitting, offering optimization strategies. Furthermore, it outlines a rigorous framework for the comparative analysis of models using robust statistical measures and benchmarks, empowering scientists to build more accurate, trustworthy, and impactful predictive tools for accelerating biomedical discovery.

The Critical Bridge: Why Validating Models Against Experimental Data is Non-Negotiable in Science

In computational sciences and drug development, a model's value is determined not by its sophistication but by its validated performance. Model validation is the critical process of assessing a model's ability to generalize to new, unseen data from the population of interest, ensuring its reliability and real-world impact [1]. This process moves beyond theoretical performance to demonstrate how well a model will function in practice, particularly when its predictions will inform high-stakes decisions in clinical trials, therapeutic development, and regulatory submissions.

For researchers, scientists, and drug development professionals, validation provides the evidentiary foundation for trusting model predictions. The framework of model validation rests on three interconnected pillars: generalizability (performance across diverse populations and settings), reliability (consistent performance under varying conditions), and real-world impact (demonstrable utility in practical applications). Within Model-Informed Drug Discovery and Development (MID3), validation transforms quantitative models from research tools into assets that can optimize clinical trial design, inform regulatory decisions, and ultimately accelerate patient access to new therapies [2] [3].

Core Principles: Generalizability, Reliability, and Real-World Impact

Generalizability: Beyond the Training Data

Generalizability refers to a model's ability to maintain performance when applied to data outside its original training set, particularly to new populations, settings, or conditions [4]. In clinical research, this concept is analogous to the generalizability of randomized controlled trial (RCT) results to real-world patient populations [5]. The assessment often involves comparing a study sample to the broader target population to evaluate how representative the study population is.

Two temporal perspectives exist for generalizability assessment:

- A priori generalizability: Assessed before a trial begins using only study design information (primarily eligibility criteria), giving investigators an opportunity to adjust the study design before the trial starts [5].

- A posteriori generalizability: Assessed after trial completion, comparing enrolled participants to the target population [5].

Quantitative assessment of generalizability is increasingly important, with informatics approaches leveraging electronic health records (EHRs) and other real-world data to profile target populations and evaluate how well a study population represents them [5].

Reliability: Consistency and Robustness

Reliability encompasses a model's consistency, stability, and robustness. A reliable model produces similar performance across different subsets of data, under varying conditions, and over time. Key aspects include:

- Consistency: Minimal variance in performance metrics across validation runs

- Robustness: Resistance to performance degradation from noisy, incomplete, or slightly perturbed inputs

- Stability: Maintained performance over time as data distributions evolve

In machine learning, techniques like cross-validation and bootstrap resampling help assess reliability by evaluating performance across multiple data partitions [4].
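As a minimal sketch of bootstrap resampling (standard-library Python; the function name, the 2,000 resamples, and the fixed seed are illustrative assumptions), the snippet below resamples hypothetical per-case correctness flags from one validation set with replacement and reports a percentile confidence interval for accuracy. A wide interval signals that a single accuracy figure may not be a reliable summary of the model.

```python
import random
from statistics import mean

def bootstrap_ci(values, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the mean of `values`.

    `values` is assumed to hold one score per case, e.g. 1/0 correctness
    flags, so the mean is the accuracy on the validation set.
    """
    rng = random.Random(seed)  # fixed seed for a reproducible interval
    n = len(values)
    # Resample cases with replacement and record the metric each time.
    stats = sorted(mean(rng.choices(values, k=n)) for _ in range(n_resamples))
    lo_idx = int((alpha / 2) * n_resamples)
    hi_idx = int((1 - alpha / 2) * n_resamples) - 1
    return mean(values), (stats[lo_idx], stats[hi_idx])

# Hypothetical validation outcomes: 85 correct, 15 incorrect predictions.
flags = [1] * 85 + [0] * 15
point, (low, high) = bootstrap_ci(flags)
print(f"accuracy = {point:.2f}, 95% CI = [{low:.2f}, {high:.2f}]")
```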

Real-World Impact: From Validation to Value

Real-world impact represents the ultimate measure of a model's success—its ability to deliver tangible benefits in practical applications. For healthcare applications, this might include improving diagnostic accuracy, optimizing treatment decisions, or streamlining drug development. Demonstrating real-world impact requires moving beyond laboratory settings to evaluate performance in environments that reflect actual use conditions [6].

The case of intracranial hemorrhage (ICH) detection on head CT scans illustrates this principle: an ML model maintained high performance (AUC 95.4%, sensitivity 91.3%, specificity 94.1%) when validated on real-world emergency department data, supporting its potential for clinical implementation [6].

Quantitative Metrics and Evaluation Frameworks

Classification Model Metrics

For classification models, multiple metrics provide complementary views of performance:

Table 1: Key Evaluation Metrics for Classification Models

| Metric | Formula | Interpretation | Use Case Focus |
|---|---|---|---|
| Accuracy | (TP + TN) / (TP + TN + FP + FN) | Overall correctness | Balanced classes |
| Precision | TP / (TP + FP) | Quality of positive predictions | When FP costs are high |
| Recall (Sensitivity) | TP / (TP + FN) | Coverage of actual positives | When FN costs are high |
| F1 Score | 2 × (Precision × Recall) / (Precision + Recall) | Harmonic mean of precision and recall | Balanced view |
| AUC-ROC | Area under ROC curve | Discrimination ability across thresholds | Overall ranking |
| Log Loss | −(1/N) × Σ[yᵢ log(pᵢ) + (1 − yᵢ) log(1 − pᵢ)] | Calibration of probability estimates | Probabilistic interpretation |

These metrics should be selected based on the specific application and consequences of different error types. For example, in medical diagnostics, high recall is often prioritized to minimize missed cases, while in spam detection, high precision is valued to avoid filtering legitimate emails [7] [8].
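The Table 1 formulas translate directly into code. This is a minimal sketch in plain Python, assuming a binary task whose counts have already been tallied from a confusion matrix; the function names and example counts are hypothetical. The division-by-zero guards return 0.0 by convention, and predicted probabilities are clipped before taking logarithms.

```python
import math

def classification_metrics(tp, tn, fp, fn):
    """Table 1 metrics computed from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0  # guard: no positive predictions
    recall = tp / (tp + fn) if tp + fn else 0.0     # guard: no actual positives
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total / len(y_true)

# Hypothetical confusion matrix for a diagnostic classifier.
print(classification_metrics(tp=80, tn=90, fp=10, fn=20))
print(round(log_loss([1, 0, 1], [0.9, 0.2, 0.6]), 4))
```

With these counts accuracy is 0.85 while recall is only 0.80, showing how a single accuracy figure can hide missed positive cases.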

Validation Techniques and Protocols

Table 2: Model Validation Techniques and Applications

| Technique | Methodology | Primary Advantage | Limitations |
|---|---|---|---|
| Train-Validation-Test Split | Single split into training, validation, and test sets | Simple, computationally efficient | High variance based on single split |
| K-Fold Cross-Validation | Data divided into K folds; each fold serves as validation once | Reduces variance, uses all data for validation | Computationally intensive |
| Stratified K-Fold | K-fold with preserved class distribution in each fold | Maintains class balance in imbalanced datasets | Complex implementation |
| External Validation | Validation on completely independent dataset from different source | Best assessment of generalizability | Requires additional data collection |
| Temporal Validation | Training on past data, validation on future data | Simulates real-world temporal performance | Requires longitudinal data |

External validation represents the gold standard for assessing generalizability, using data that is temporally and geographically distinct from training data [4] [6]. The convergent-divergent validation framework extends this approach, using multiple external datasets to better understand a model's domain limitations and true performance boundaries [4].
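Table 2 lists implementation complexity as a limitation of stratified k-fold, but the fold-assignment step itself can be short. A minimal standard-library sketch, assuming class labels are hashable and sortable; dealing each class's shuffled indices round-robin across folds is one simple strategy among several, and the function name and labels are hypothetical.

```python
import random
from collections import defaultdict

def stratified_k_fold(labels, k=5, seed=0):
    """Assign each sample index to one of k folds, preserving class ratios.

    Indices of each class are shuffled and dealt round-robin across folds,
    so every fold receives roughly the same share of each class.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    offset = 0
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        for i, idx in enumerate(indices):
            folds[(offset + i) % k].append(idx)
        offset += len(indices)  # continue dealing where the last class stopped
    return folds

# Hypothetical imbalanced labels: 90 negatives, 10 positives.
labels = [0] * 90 + [1] * 10
for fold_id, fold in enumerate(stratified_k_fold(labels, k=5)):
    positives = sum(labels[i] for i in fold)
    print(f"fold {fold_id}: {len(fold)} samples, {positives} positive")
```

In this example each of the five folds receives 18 negatives and 2 positives, so the 10% positive rate is preserved in every validation fold.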

Experimental Protocols for Validation

A Priori vs. A Posteriori Generalizability Assessment

The experimental design for assessing generalizability depends on whether the evaluation occurs before or after trial completion:

Table 3: Protocols for Generalizability Assessment

| Assessment Type | Data Requirements | Methodological Approach | Outcome Measures |
|---|---|---|---|
| A Priori (Eligibility-Driven) | Eligibility criteria + observational cohort data (e.g., EHRs) | Compare eligible patients (study population) to target population | Population representativeness scores, characteristic comparisons |
| A Posteriori (Sample-Driven) | Enrolled participant data + observational cohort data | Compare actual participants (study sample) to target population | Difference in outcomes, effect size variations, subgroup analyses |

A systematic review of generalizability assessment practices found that less than 40% of studies assessed a priori generalizability, despite its value in optimizing study design before trial initiation [5].
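One common way to make the "characteristic comparisons" in Table 3 quantitative is the standardized mean difference (SMD) for each baseline variable. The sketch below is an illustration, not the method of the cited review: the variable names and ages are hypothetical, and the |SMD| > 0.1 imbalance threshold is a widely used rule of thumb rather than a fixed standard.

```python
from statistics import mean, variance

def standardized_mean_difference(study, target):
    """SMD between a study group and the target population for one variable.

    Uses the pooled standard deviation sqrt((s1^2 + s2^2) / 2).
    """
    pooled_sd = ((variance(study) + variance(target)) / 2) ** 0.5
    return (mean(study) - mean(target)) / pooled_sd

# Hypothetical ages: trial-eligible patients vs. an EHR-derived target population.
eligible_ages = [52, 55, 58, 60, 61, 63, 65]
target_ages = [48, 55, 62, 67, 70, 74, 79, 81]
smd = standardized_mean_difference(eligible_ages, target_ages)
flag = "imbalanced" if abs(smd) > 0.1 else "balanced"
print(f"SMD(age) = {smd:.2f} -> {flag}")
```

Here the eligible group is about 0.9 pooled standard deviations younger than the target population, which would flag age as a representativeness gap before enrollment begins.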

External Validation Protocol for Medical AI Models

The following workflow illustrates a rigorous external validation protocol for assessing model generalizability, based on the ICH detection case study [6]:

Key Experimental Considerations:

- Temporal Separation: External data should be collected from a time period distinct from training data

- Geographical Separation: External data should come from different institutions or regions

- Minimal Exclusion: Apply broad inclusion criteria to reflect real-world use

- Protocol Alignment: Ensure consistent preprocessing and evaluation metrics

In the ICH detection study, this protocol revealed a modest performance drop from internal (AUC 98.4%) to external validation (AUC 95.4%), demonstrating achievable but imperfect generalizability in medical imaging AI [6].
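AUC, the metric used here to compare internal and external performance, equals the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case, with ties counted as one half. A minimal sketch using direct pairwise comparison follows; it is O(n_pos × n_neg), fine for illustration but slow for large validation sets, where a rank-based formula or a library routine is preferable. The labels and scores are hypothetical.

```python
def auc_pairwise(labels, scores):
    """ROC AUC as P(score of random positive > score of random negative)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties contribute half a concordant pair
    return wins / (len(pos) * len(neg))

# Hypothetical model scores on a small internal and external test set.
internal = auc_pairwise([1, 1, 1, 0, 0, 0], [0.95, 0.90, 0.80, 0.30, 0.20, 0.10])
external = auc_pairwise([1, 1, 1, 0, 0, 0], [0.90, 0.60, 0.40, 0.50, 0.20, 0.10])
print(f"internal AUC = {internal:.3f}, external AUC = {external:.3f}")
```

In this toy example a single positive case scoring below one negative case lowers the external AUC from 1.000 to about 0.889.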

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 4: Essential Research Reagents and Computational Tools for Model Validation

| Tool/Reagent | Function | Application Context | Implementation Considerations |
|---|---|---|---|
| Electronic Health Records (EHRs) | Profile real-world target populations | A priori generalizability assessment | Data quality, standardization, interoperability |
| Stratified K-Fold Cross-Validation | Assess model reliability | Internal validation during development | Computational resources, class imbalance handling |
| SHAP/LIME | Model interpretability and explainability | Understanding feature importance | Computational complexity, faithfulness to model |
| Bayesian Optimization | Hyperparameter tuning | Model development and validation | Search space definition, convergence criteria |
| Gradient Boosting Models (LightGBM, XGBoost, CatBoost) | Ensemble modeling for structured data | Tabular data tasks | Training time, memory requirements, regularization |
| Deep CNN Architectures (ResNeXt) | Feature extraction from images | Computer vision tasks | GPU requirements, pretrained model availability |
| PBPK/PD Models | Mechanistic modeling of drug effects | MID3 for drug development | Physiological parameter estimation, system-specific data |
| Quantitative Systems Pharmacology (QSP) | Integrative biological system modeling | Drug target identification and validation | Multiscale data integration, model complexity management |
| Fairness Audit Tools | Bias detection and mitigation | Ensuring equitable model performance | Protected attribute definition, fairness metric selection |

Comparative Analysis: Validation Across Domains

Drug Development vs. Healthcare AI Validation

The application of model validation principles varies significantly across domains, reflecting different regulatory requirements, data characteristics, and consequence profiles:

Drug Development (MID3 Context):

- Employs physiologically-based pharmacokinetic (PBPK) and exposure-response models [2]

- Validation focuses on predictive accuracy for clinical outcomes

- Regulatory acceptance requires thorough qualification and verification

- Real-world impact measured through improved trial success rates and optimized dosing

Healthcare AI (Clinical Implementation):

- Utilizes diverse ML architectures from CNNs to gradient boosting [6]

- Validation emphasizes generalizability across institutions and patient populations

- Regulatory clearance requires robust performance across subpopulations

- Real-world impact measured through clinical workflow improvements and patient outcomes

Performance Comparison: Validation Metrics in Practice

Table 5: Performance Comparison Across Model Types and Validation Approaches

| Model Type | Internal Validation Performance (AUC) | External Validation Performance (AUC) | Performance Drop (percentage points) | Key Generalizability Factors |
|---|---|---|---|---|
| ICH Detection CNN [6] | 98.4% | 95.4% | 3.0 | Scanner variability, patient population differences |
| Typical ML Model (Literature) | 85-95% | 75-85% | 5-15 | Data quality, population shift, contextual factors |
| PBPK Models [2] | N/A (Mechanistic) | N/A (Mechanistic) | Protocol-dependent | Physiological parameter accuracy, system-specific data |
| Logistic Regression (Structured Data) | 80-90% | 75-85% | 3-8 | Feature distribution stability, temporal drift |
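For the ICH model, the tabulated drop is the absolute difference between the internal and external AUC values. Expressed relative to internal performance, the drop is slightly larger, a distinction worth stating explicitly when comparing models with different baselines:

$$
\Delta_{\text{abs}} = 98.4 - 95.4 = 3.0 \ \text{percentage points}, \qquad
\Delta_{\text{rel}} = \frac{98.4 - 95.4}{98.4} \approx 3.05\%
$$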

Model validation represents the critical bridge between theoretical model development and practical real-world implementation. For researchers, scientists, and drug development professionals, rigorous validation protocols that assess generalizability, reliability, and real-world impact are not optional—they are fundamental to responsible model deployment.

Evidence such as the ICH detection study shows that models performing well in controlled development settings can lose performance when applied to external datasets [6]. This reality underscores the necessity of comprehensive validation strategies that include external testing on temporally and geographically distinct data. The emerging paradigms of "fit-for-purpose" modeling in drug development [2] and convergent-divergent validation in machine learning [4] represent important advances in validation methodology.

As computational models play increasingly prominent roles in high-stakes decisions—from therapeutic development to clinical diagnostics—the validation standards must evolve accordingly. This includes greater emphasis on reproducibility, transparency, and ongoing performance monitoring in production environments. By embracing these comprehensive validation approaches, the research community can ensure that models deliver not only statistical performance but also genuine real-world impact.

Validation is a critical step in the development of robust machine learning models, especially in scientific fields like drug development. It provides the empirical evidence needed to trust a model's predictions and is the primary defense against the twin pitfalls of overfitting and underfitting. This guide objectively compares the performance of different validation approaches and the models they assess, providing the experimental data and protocols to inform rigorous research.

What is the Model's True Predictive Power?

A model's predictive power is not its performance on the data it was trained on, but its ability to generalize to new, unseen data. Validation quantifies this power using specific metrics, providing a realistic performance estimate that guards against over-optimistic results from the training set.

Core Evaluation Metrics by Task

The choice of evaluation metric is fundamental to assessing predictive power and depends entirely on the type of machine learning task. The table below summarizes the most critical metrics for classification and regression problems, which are prevalent in scientific research.

Table 1: Key Evaluation Metrics for Supervised Learning Tasks

| Task | Metric | Formula | Interpretation & Use Case |
|---|---|---|---|
| Classification | Accuracy | (TP+TN)/(TP+TN+FP+FN) | Overall correctness; can be misleading with imbalanced data [9] [10]. |
| | Precision | TP/(TP+FP) | The proportion of positive predictions that are correct. Crucial when the cost of false positives is high (e.g., predicting a drug candidate as effective when it is not) [10] [7]. |
| | Recall (Sensitivity) | TP/(TP+FN) | The proportion of actual positives that are correctly identified. Vital when missing a positive case is costly (e.g., failing to identify a promising drug candidate) [10] [7]. |
| | F1 Score | 2 × (Precision × Recall)/(Precision + Recall) | The harmonic mean of precision and recall. Provides a single score to balance both concerns [9] [10]. |
| | AUC-ROC | Area under the ROC curve | Measures the model's ability to distinguish between classes across all thresholds. A value of 1 indicates perfect separation; 0.5 indicates performance no better than random [9] [10] [7]. |
| Regression | R² (R-squared) | 1 − Σ(yⱼ − ŷⱼ)² / Σ(yⱼ − ȳ)² | The proportion of variance in the outcome explained by the model. Closer to 1 is better [10] [11]. |
| | Mean Squared Error (MSE) | (1/N) × Σ(yⱼ − ŷⱼ)² | The average of squared errors. Heavily penalizes large errors [10] [11]. |
| | Mean Absolute Error (MAE) | (1/N) × Σ\|yⱼ − ŷⱼ\| | The average of absolute errors. More easily interpretable because it is in the target variable's units [10]. |
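The regression formulas in Table 1 can be checked with a short plain-Python sketch; the function name and the example values are hypothetical. Note that on a held-out test set R² can be negative when the model predicts worse than simply using the mean of the observed values.

```python
def regression_metrics(y_true, y_pred):
    """R², MSE and MAE as defined in Table 1."""
    n = len(y_true)
    y_bar = sum(y_true) / n
    residuals = [y - y_hat for y, y_hat in zip(y_true, y_pred)]
    ss_res = sum(r * r for r in residuals)          # Σ(y_j − ŷ_j)²
    ss_tot = sum((y - y_bar) ** 2 for y in y_true)  # Σ(y_j − ȳ)²
    return {
        "r2": 1 - ss_res / ss_tot,
        "mse": ss_res / n,
        "mae": sum(abs(r) for r in residuals) / n,
    }

# Hypothetical measured vs. predicted values.
print(regression_metrics([2.0, 4.0, 6.0, 8.0], [2.5, 3.5, 6.0, 9.0]))
```

For these values the residual sum of squares is 1.5 against a total sum of squares of 20, giving R² = 0.925, MSE = 0.375, and MAE = 0.5.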

Experimental Protocol: Measuring Predictive Performance

To generate the metrics in Table 1, a standard experimental protocol for model training and evaluation must be followed.

- Data Splitting: The dataset is initially split into a training set (e.g., 70-80%) and a hold-out test set (e.g., 20-30%). The test set is locked away and must not be used for any aspect of model training or tuning [11].

- Model Training: The model is trained exclusively on the training set.

- Prediction and Calculation: The trained model is used to make predictions on the held-out test set. These predictions are compared against the ground-truth values, and the relevant metrics from Table 1 are calculated [9]. This final evaluation on the test set provides the best estimate of the model's predictive power on new data.
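The splitting step of this protocol can be sketched as follows, assuming each sample is independent (when one patient contributes several samples, the split should be made at the patient level instead). The function name, the 25% test fraction, and the seed are illustrative choices.

```python
import random

def holdout_split(n_samples, test_fraction=0.2, seed=42):
    """Shuffle indices once and split them into train and locked test sets."""
    if not 0 < test_fraction < 1:
        raise ValueError("test_fraction must be between 0 and 1")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # seeded for reproducibility
    n_test = round(n_samples * test_fraction)
    return indices[n_test:], indices[:n_test]  # (train, test)

train_idx, test_idx = holdout_split(100, test_fraction=0.25)
# The test indices are set aside and used exactly once, after all tuning.
print(len(train_idx), len(test_idx))
```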

Is the Model Overfitting or Underfitting?

Validation is the primary tool for diagnosing a model's fundamental failure modes: overfitting and underfitting. These concepts are directly linked to bias (error from overly simplistic assumptions) and variance (error from sensitivity to small fluctuations in the training set) [12] [13].

Table 2: Diagnostic Guide to Overfitting and Underfitting

| Aspect | Underfitting (High Bias) | Overfitting (High Variance) |
|---|---|---|
| Definition | Model is too simple to capture underlying patterns in the data [14] [12]. | Model is too complex, learning noise and details in the training data that do not generalize [14] [12]. |
| Performance on Training Data | Poor performance, high error [14] [13]. | Excellent performance, very low error [14] [13]. |
| Performance on Test/Validation Data | Poor performance, high error (similar to training error) [14] [13]. | Poor performance; error significantly higher than training error [14] [13]. |
| Common Causes | Excessively simple model [12]; insufficient training time [14]; excessive regularization [12]; poor feature selection [14]. | Excessively complex model [14]; training for too many epochs (overtraining) [14]; small or noisy training dataset [13]; too many features relative to the amount of data [14]. |
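Table 2 can be turned into a rough rule of thumb. In the sketch below, `target_error` (the error level considered acceptable for the task) and `max_gap` (the largest tolerated gap between validation and training error) are hypothetical, user-chosen thresholds; in practice they depend on the domain and on the noise level of the data. The underfitting check runs first, so a model that fails both checks is reported as underfitting.

```python
def diagnose_fit(train_error, val_error, target_error, max_gap):
    """Rough bias/variance diagnosis following Table 2.

    target_error and max_gap are user-chosen thresholds (assumptions),
    expressed in the same units as the error metric.
    """
    if train_error > target_error:
        # Cannot even fit the training data well: high bias.
        return "underfitting (high bias)"
    if val_error - train_error > max_gap:
        # Fits the training data but generalizes poorly: high variance.
        return "overfitting (high variance)"
    return "acceptable fit"

print(diagnose_fit(train_error=0.40, val_error=0.42, target_error=0.15, max_gap=0.05))
print(diagnose_fit(train_error=0.01, val_error=0.30, target_error=0.15, max_gap=0.05))
print(diagnose_fit(train_error=0.10, val_error=0.12, target_error=0.15, max_gap=0.05))
```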

The following diagram illustrates the conceptual relationship between model complexity, error, and the occurrence of underfitting and overfitting, guiding the search for the optimal model.

How to Validate: Experimental Protocols for Robustness

Choosing the right validation strategy is an experiment in itself. Different protocols offer varying degrees of reliability and are suited to different dataset sizes, as compared in the table below.

Table 3: Comparison of Model Validation Strategies

| Validation Strategy | Methodology | Key Experimental Output | Advantages | Disadvantages | Recommended Data Size |
|---|---|---|---|---|---|
| Hold-Out Validation | Single split into training and test sets [13]. | Performance metrics on the test set. | Simple, fast, low computational cost [13]. | Performance estimate can be highly dependent on a single data split; unstable [15]. | Very Large |
| K-Fold Cross-Validation | Data is randomly split into k equal-sized folds. The model is trained k times, each time using k-1 folds and validated on the remaining fold. The final performance is the average of the k results [14] [11]. | Average performance metric across all k folds, plus variance. | More reliable and stable estimate of performance; makes efficient use of all data [14] [15]. | k times more computationally expensive than hold-out. | Medium to Large |
| Nested Cross-Validation | An outer k-fold loop estimates generalization error, while an inner loop (e.g., another k-fold) performs hyperparameter tuning on the training set of the outer loop [13]. | An unbiased estimate of model performance after hyperparameter tuning. | Provides a nearly unbiased performance estimate; rigorous separation of tuning and evaluation [13]. | Computationally very expensive. | Small to Medium |
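A compact nested cross-validation sketch in standard-library Python follows. To stay self-contained it assumes a hypothetical one-feature ridge regression through the origin, w = Σxy / (Σx² + λ), tunes the penalty λ over a small grid, and uses synthetic data; in a real study the model, search space, and fold counts would come from the study design. The inner folds are fixed per outer fold so that every candidate λ is compared on the same splits.

```python
import random
from statistics import mean

def k_fold_indices(n, k, rng):
    """Shuffle 0..n-1 and deal them into k roughly equal folds."""
    idx = list(range(n))
    rng.shuffle(idx)
    return [idx[i::k] for i in range(k)]

def fit_ridge_1d(xs, ys, lam):
    """Hypothetical model: ridge regression through the origin."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def mse(w, xs, ys):
    return mean((y - w * x) ** 2 for x, y in zip(xs, ys))

def split(xs, ys, held_out):
    """Return (train_x, train_y, test_x, test_y) for a list of held-out indices."""
    held = set(held_out)
    tr = [i for i in range(len(xs)) if i not in held]
    return ([xs[i] for i in tr], [ys[i] for i in tr],
            [xs[i] for i in held_out], [ys[i] for i in held_out])

def cv_mse(xs, ys, lam, folds):
    """Mean validation MSE of one penalty value over fixed folds."""
    scores = []
    for fold in folds:
        x_tr, y_tr, x_va, y_va = split(xs, ys, fold)
        scores.append(mse(fit_ridge_1d(x_tr, y_tr, lam), x_va, y_va))
    return mean(scores)

def nested_cv(xs, ys, lambdas, k_outer=5, k_inner=3, seed=0):
    rng = random.Random(seed)
    outer_scores, chosen = [], []
    for fold in k_fold_indices(len(xs), k_outer, rng):
        x_tr, y_tr, x_te, y_te = split(xs, ys, fold)
        # Inner loop: tune the penalty using only the outer training data.
        inner_folds = k_fold_indices(len(x_tr), k_inner, rng)
        best = min(lambdas, key=lambda lam: cv_mse(x_tr, y_tr, lam, inner_folds))
        # Outer loop: score the tuned model on data never used for tuning.
        w = fit_ridge_1d(x_tr, y_tr, best)
        outer_scores.append(mse(w, x_te, y_te))
        chosen.append(best)
    return mean(outer_scores), chosen

# Synthetic data: y = 2x + Gaussian noise (illustrative only).
data_rng = random.Random(1)
xs = [data_rng.uniform(0, 1) for _ in range(40)]
ys = [2.0 * x + data_rng.gauss(0, 0.3) for x in xs]
score, lams = nested_cv(xs, ys, lambdas=[0.0, 0.1, 1.0, 10.0])
print(f"nested-CV MSE = {score:.3f}; penalty chosen per outer fold: {lams}")
```

Comparing the λ chosen in each outer fold is a quick stability check: if the selected value changes from fold to fold, the tuning itself is sensitive to the particular training data.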

The following diagram outlines the workflow for a robust model validation experiment, integrating the concepts of data splitting, training, and evaluation to answer the key questions of predictive power and model fit.

The Scientist's Toolkit: Essential Reagents for Validation Experiments

Just as a lab requires specific reagents, a robust validation workflow requires a set of core computational tools and techniques.

Table 4: Essential Research Reagent Solutions for Model Validation

| Category | Tool / Technique | Primary Function in Experiment |
|---|---|---|
| Validation Protocols | K-Fold Cross-Validation [14] [11] | Provides a robust, averaged estimate of model performance and helps detect overfitting. |
| | Hold-Out Test Set [11] | Serves as the final, unbiased arbiter of model performance before deployment. |
| Prevention & Mitigation | L1 / L2 Regularization [14] [13] | Penalizes model complexity to prevent overfitting. |
| | Dropout (for Neural Networks) [14] [13] | Randomly deactivates neurons during training to force redundant, robust representations. |
| | Early Stopping [14] [13] | Monitors validation performance and halts training when it stops improving, a sign that overfitting has begun. |
| | Data Augmentation [13] [16] | Artificially expands the training set by creating modified copies of existing data (e.g., image rotations). |
| Performance Analysis | ROC Curve Analysis [9] [11] | Visualizes the trade-off between sensitivity and specificity across classification thresholds. |
| | Learning Curves [13] [16] | Plots training and validation error against training iterations or sample size to diagnose bias and variance. |
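Early stopping can be sketched as a patience rule applied to the sequence of validation losses. For clarity the example below replays a precomputed, hypothetical loss history instead of running a training loop; the function name and the `patience` and `min_delta` values are illustrative.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    min_delta for `patience` consecutive epochs; the weights from
    best_epoch are the ones that would be restored.
    """
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1  # ran to completion

# Hypothetical validation losses: improvement, then overfitting sets in.
losses = [0.90, 0.70, 0.55, 0.50, 0.51, 0.53, 0.56, 0.60]
print(early_stopping_epoch(losses, patience=3))
```

With `patience=3`, the validation loss last improves at epoch 3 (0.50), so training halts at epoch 6 and the epoch-3 weights would be restored.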

Systematic validation, not merely high performance on training data, is the foundation of trustworthy predictive modeling. By applying the metrics, diagnostic guides, and experimental protocols detailed in this guide, researchers can confidently answer the key questions: a model's true predictive power is defined by its performance on a rigorously held-out test set; overfitting and underfitting are identified through the performance gap between training and validation sets; and robustness is ensured through careful strategies like k-fold cross-validation. This empirical, data-driven approach is essential for building models that deliver reliable predictions in real-world scientific applications.

The biomedical landscape is undergoing a profound transformation, shifting from traditional, labor-intensive drug discovery processes to artificially intelligent, data-driven approaches. By 2025, artificial intelligence (AI) has evolved from experimental curiosity to clinical utility, with AI-designed therapeutics now in human trials across diverse therapeutic areas [17]. This transition represents nothing less than a paradigm shift, replacing human-driven workflows with AI-powered discovery engines capable of compressing traditional timelines, expanding chemical and biological search spaces, and redefining the speed and scale of modern pharmacology [17].

The stakes in biomedicine have never been higher. With chronic diseases like diabetes, osteoarthritis, and drug-use disorders demonstrating the highest gaps between public health burden and biomedical innovation [18], the pressure to accelerate and improve drug discovery is intense. The industry response has been a rapid adoption of hybrid intelligence models that combine computational power with human expertise [19]. This review objectively compares leading AI-driven drug discovery platforms, their performance metrics, experimental methodologies, and implications for clinical translation, providing researchers and drug development professionals with a critical analysis of this rapidly evolving landscape.

Comparative Analysis of Leading AI Drug Discovery Platforms

Platform Architectures and Technical Approaches

The current AI drug discovery ecosystem encompasses several distinct technological architectures, each with its own methodologies and applications. The five dominant platform types are generative chemistry, phenomics-first systems, integrated target-to-design pipelines, knowledge-graph repurposing, and physics-plus-machine-learning design [17]. Each approach applies AI and computation to a different challenge in the drug discovery pipeline.

Generative chemistry platforms, exemplified by Exscientia, utilize deep learning models trained on vast chemical libraries and experimental data to propose novel molecular structures that satisfy precise target product profiles, including potency, selectivity, and ADME properties [17]. These systems employ a "design-make-test-learn" cycle where AI iteratively proposes compounds that are synthesized and tested, with results feeding back to improve subsequent design cycles.

Phenomics-first systems, such as Recursion's platform, leverage high-content cellular imaging and AI analysis to identify novel biological insights and drug candidates based on changes in cellular phenotypes [17]. This approach generates massive datasets of cellular images which are analyzed using machine learning to detect subtle patterns indicating potential therapeutic effects.

Integrated target-to-design pipelines, used by companies like Insilico Medicine, aim to unify the entire discovery process from target identification to candidate optimization [17]. These platforms often employ multiple AI approaches in sequence, beginning with target discovery using biological data analysis, followed by generative chemistry for compound design, and predictive models for optimization.

Quantitative Performance Metrics of Leading Platforms

Table 1: Comparative Performance of AI Drug Discovery Platforms

| Platform/Company | Primary Approach | Discovery Timeline | Clinical Stage Candidates | Key Differentiators |
|---|---|---|---|---|
| Exscientia | Generative Chemistry | ~70% faster design cycles [17] | 8 clinical compounds designed [17] | Patient-derived biology integration; "Centaur Chemist" approach [17] |
| Insilico Medicine | Integrated Target-to-Design | 18 months (target to Phase I) [17] | ISM001-055 (Phase IIa) [17] | Full pipeline integration; quantum-classical hybrid models [20] |
| Recursion | Phenomics-First | Not specified | Multiple candidates in clinical trials [17] | Massive cellular phenomics database; merger with Exscientia [17] |
| Schrödinger | Physics-Plus-ML | Not specified | TAK-279 (Phase III) [17] | Physics-based simulations combined with machine learning [17] |
| BenevolentAI | Knowledge-Graph Repurposing | Not specified | Multiple candidates in clinical trials [17] | Knowledge graphs for target identification and drug repurposing [17] |

Table 2: Experimental Hit Rates Across Discovery Approaches

| Discovery Approach | Screened Candidates | Experimental Hit Rate | Notable Achievements |
|---|---|---|---|
| Traditional HTS | Millions | Typically <0.01% | Industry standard for decades |
| Generative AI (GALILEO) | 12 compounds | 100% in vitro [20] | All 12 showed antiviral activity [20] |
| Quantum-Enhanced AI | 15 compounds synthesized | 13.3% (2/15 with biological activity) [20] | KRAS-G12D inhibition (1.4 μM) [20] |
| Exscientia AI | 10× fewer compounds [17] | Not specified | Faster design cycles with fewer synthesized compounds [17] |

The performance data reveals significant advantages for AI-driven approaches over traditional methods. Insilico Medicine demonstrated the potential for radical timeline compression, advancing an idiopathic pulmonary fibrosis drug from target discovery to Phase I trials in just 18 months—a fraction of the typical 5-year timeline for traditional discovery [17]. Exscientia reports design cycles approximately 70% faster than industry standards while requiring 10 times fewer synthesized compounds [17].

Model Medicines' GALILEO platform reported a 100% hit rate in validated in vitro assays, with all 12 generated compounds showing antiviral activity against either Hepatitis C Virus or human Coronavirus 229E [20]. Although based on a small number of compounds, this result suggests that targeted AI approaches can improve success rates while reducing the number of compounds that need to be synthesized and tested.
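Hit rates from small synthesis batches carry wide statistical uncertainty. As a sketch (the Wilson score interval is our choice for illustration, not a method used in the cited source), the 95% interval for 12 of 12 actives still extends down to roughly 76%, and for 2 of 15 it spans roughly 4% to 38%.

```python
import math

def wilson_interval(hits, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 ≈ 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = hits / n
    denom = 1 + z * z / n
    center = (p_hat + z * z / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)

for label, hits, n in [("GALILEO", 12, 12), ("Quantum-enhanced", 2, 15)]:
    low, high = wilson_interval(hits, n)
    print(f"{label}: {hits}/{n} = {hits / n:.1%}, 95% CI {low:.1%} to {high:.1%}")
```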

Experimental Protocols and Methodologies

Quantum-Enhanced Drug Discovery Workflow

The quantum-classical hybrid approach represents one of the most advanced methodologies in AI-driven drug discovery. Insilico Medicine's protocol for tackling the challenging KRAS-G12D oncology target exemplifies this workflow [20]:

Step 1: Molecular Generation with Quantum Circuit Born Machines (QCBMs)

- Initialize quantum-inspired generative models with chemical space priors

- Generate diverse molecular libraries targeting specific binding pockets

- Screen 100+ million virtual molecules using hybrid quantum-classical algorithms

Step 2: AI-Enabled Molecular Filtering

- Apply deep learning models to predict binding affinities

- Filter candidates based on multi-parameter optimization (potency, selectivity, ADME)

- Select 1.1 million candidates for further computational analysis

Step 3: Synthesis and Experimental Validation

- Synthesize top 15 predicted compounds

- Evaluate binding affinity using surface plasmon resonance (SPR) or similar biophysical methods

- Confirm cellular activity in relevant disease models

This workflow yielded ISM061-018-2, a compound exhibiting 1.4 μM binding affinity to KRAS-G12D—a notable achievement for this challenging cancer target [20]. The quantum-enhanced approach demonstrated a 21.5% improvement in filtering out non-viable molecules compared to AI-only models [20].

Figure 1: Quantum-enhanced AI drug discovery workflow, combining quantum-inspired molecular generation with classical AI filtering and experimental validation.
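The multi-parameter filtering in Step 2 can be illustrated with hard thresholds followed by ranking. This is a hypothetical sketch: the `Candidate` fields, thresholds, and molecule names are invented, and the scoring rules of the cited workflow [20] are not reproduced here. Real multi-parameter optimization often uses weighted desirability scores rather than hard cutoffs.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    # Hypothetical per-molecule predictions from upstream models.
    name: str
    potency: float      # predicted pIC50 (higher is better)
    selectivity: float  # predicted selectivity ratio vs. an off-target
    adme_score: float   # composite ADME score in [0, 1]

def mpo_filter(candidates, min_potency, min_selectivity, min_adme, top_n):
    """Keep candidates passing every threshold, then rank by potency."""
    passing = [
        c for c in candidates
        if c.potency >= min_potency
        and c.selectivity >= min_selectivity
        and c.adme_score >= min_adme
    ]
    passing.sort(key=lambda c: c.potency, reverse=True)
    return passing[:top_n]

pool = [
    Candidate("mol-A", 7.8, 50.0, 0.82),
    Candidate("mol-B", 8.4, 5.0, 0.90),    # fails selectivity
    Candidate("mol-C", 6.9, 120.0, 0.75),  # fails potency
    Candidate("mol-D", 8.1, 80.0, 0.40),   # fails ADME
    Candidate("mol-E", 7.2, 30.0, 0.66),
]
shortlist = mpo_filter(pool, min_potency=7.0, min_selectivity=10.0,
                       min_adme=0.6, top_n=2)
print([c.name for c in shortlist])
```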

Generative AI Protocol for Antiviral Discovery

Model Medicines' GALILEO platform employs a distinct methodology focused on one-shot prediction for antiviral development [20]:

Step 1: Chemical Space Expansion

- Begin with 52 trillion molecules in virtual chemical space

- Apply geometric graph convolutional networks (ChemPrint) for molecular representation

- Generate inference library of 1 billion candidates

Step 2: Target-Focused Filtering

- Employ deep learning models trained on viral target structures

- Focus on Thumb-1 pocket of viral RNA polymerases

- Select 12 highly specific antiviral compounds for synthesis

Step 3: Experimental Validation

- Test compounds in cell-based antiviral assays

- Measure inhibition of viral replication (HCV and Coronavirus 229E)

- Confirm chemical novelty through Tanimoto similarity analysis

This protocol achieved a remarkable 100% hit rate, with all 12 compounds showing antiviral activity [20]. The generated compounds demonstrated minimal structural similarity to known antiviral drugs, confirming the platform's ability to create first-in-class molecules.
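The Tanimoto (Jaccard) coefficient used for the novelty check compares two binary molecular fingerprints as the size of their intersection divided by the size of their union, so 1.0 means identical fingerprints and values near 0 mean little shared substructure. A minimal sketch, assuming fingerprints are represented as Python sets of "on" bit indices (in practice they would be generated by a cheminformatics toolkit); the helper `max_similarity_to_reference` and the toy fingerprints are hypothetical:

```python
from typing import Iterable, Set


def tanimoto(fp_a: Set[int], fp_b: Set[int]) -> float:
    """Tanimoto coefficient for two binary fingerprints given as sets of on-bit indices."""
    union = fp_a | fp_b
    if not union:
        return 0.0  # convention assumed here for two empty fingerprints
    return len(fp_a & fp_b) / len(union)


def max_similarity_to_reference(query: Set[int], references: Iterable[Set[int]]) -> float:
    """Highest Tanimoto similarity between a generated molecule and any known drug."""
    return max((tanimoto(query, ref) for ref in references), default=0.0)


if __name__ == "__main__":
    # Toy fingerprints (hypothetical bit indices, not real molecules)
    generated = {1, 4, 9, 15, 22}
    known_drugs = [{1, 4, 30, 31}, {2, 5, 9, 40, 41, 42}]
    print(round(max_similarity_to_reference(generated, known_drugs), 3))  # prints 0.286
```

A low maximum similarity to every reference compound is what "minimal structural similarity to known antiviral drugs" means operationally; the threshold that counts as "novel" is a study-specific choice.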

Automation-Integrated Biological Validation

Modern AI discovery platforms increasingly integrate automated laboratory systems for biological validation:

Automated High-Content Screening (as implemented by Recursion)

- Utilize robotic systems for cell seeding, treatment, and imaging

- Apply AI-based image analysis to detect phenotypic changes

- Generate massive datasets linking compound structures to biological effects

Automated 3D Cell Culture and Organoid Systems (exemplified by mo:re)

- Implement MO:BOT platform for standardized 3D cell culture

- Automate seeding, media exchange, and quality control

- Reject sub-standard organoids before screening to improve data quality

Integrated Protein Expression and Purification (as seen with Nuclera)

- Utilize eProtein Discovery System for DNA-to-protein workflow

- Screen up to 192 construct and condition combinations in parallel

- Generate purified, soluble protein in under 48 hours, compared with the weeks typically required by traditional methods [21]

These automated workflows enhance reproducibility and scalability while generating the high-quality data necessary to train more accurate AI models.

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 3: Key Research Reagent Solutions for AI-Driven Drug Discovery

| Reagent/Technology | Function | Application in AI Workflows |
|---|---|---|
| AlphaFold Algorithm | Protein structure prediction | Enables antibody discovery and optimization by predicting protein structures [19] |
| Agilent SureSelect Max DNA Library Prep Kits | Target enrichment for genomic sequencing | Automated library preparation integrated with firefly+ platform [21] |
| Multiplex Imaging Assays | Simultaneous detection of multiple biomarkers | Generates high-content data for AI analysis of disease mechanisms [21] |
| Patient-Derived Organoids | 3D cell cultures mimicking human tissue | Provides human-relevant models for compound validation [21] |
| Surface Plasmon Resonance (SPR) | Biomolecular interaction analysis | Validates binding affinities of AI-predicted compounds [17] |
| Cenevo/Labguru AI Assistant | Experimental design and data management | Supports smarter search, experiment comparison, and workflow generation [21] |

The integration of these tools within AI-driven platforms creates a powerful ecosystem for predictive molecule invention. As noted by Bristol Myers Squibb scientists, "We have integrated AI, machine learning, and the human component as a part of our drug discovery fabric. We view these technologies as an extension of our labs" [19].

Experimental Validation Workflows

The translation of AI predictions to experimentally validated results requires rigorous workflows that maintain the connection between computational predictions and laboratory verification.

Figure 2: Multi-stage experimental validation workflow for AI-generated compounds, progressing from initial in vitro testing through human-relevant models to in vivo validation.

The validation workflow emphasizes the critical importance of human-relevant models in the AI-driven discovery process. As highlighted at ELRIG's Drug Discovery 2025, technologies like mo:re's MO:BOT platform standardize 3D cell culture to improve reproducibility and reduce the need for animal models [21]. By producing consistent, human-derived tissue models, these systems provide clearer, more predictive safety and efficacy data before advancing to clinical trials.

The integration of artificial intelligence into drug discovery represents a fundamental shift in how we approach biomedical innovation. The quantitative evidence demonstrates that AI-driven platforms can significantly compress discovery timelines, improve hit rates, and tackle previously undruggable targets. However, the ultimate validation of these approaches will come from clinical success.

As the field progresses, key challenges remain: ensuring data quality and integration [21], maintaining transparency in AI decision-making [21], and developing regulatory frameworks for AI-derived therapies [17]. The convergence of generative AI with emerging technologies like quantum computing suggests that the current rapid evolution will continue, potentially leading to even more profound transformations in how we discover and develop medicines.

The high stakes in biomedicine demand nothing less than these innovative approaches. With chronic diseases continuing to impose massive public health burdens [18], the efficient, targeted discovery made possible by AI technologies offers hope for addressing unmet medical needs through smarter, faster, and more effective drug development.

Table of Contents

- Introduction

- Quantitative Comparisons of Modeling Approaches

- Experimental Protocols for Model Evaluation

- Visualizing Workflows and Relationships

- The Scientist's Toolkit: Key Research Reagents and Solutions

- Conclusion

In the high-stakes field of drug discovery and development, the choice of a predictive model is a critical strategic decision. The pursuit of ever more complex models is not always the most effective path. Instead, embracing a 'fit-for-purpose' philosophy—where model complexity is deliberately aligned with specific research objectives—is essential for enhancing credibility, improving decision-making, and conserving resources [22]. This approach prioritizes practical utility and biological plausibility over purely theoretical sophistication. Success in predictive modeling hinges on a strong foundation in traditional disciplines such as physiology and pharmacology, coupled with the strategic application of modern computational tools [22]. This guide provides a comparative analysis of modeling approaches, supported by experimental data and practical methodologies, to help researchers select and implement the most appropriate models for their specific goals within the drug development pipeline.

Quantitative Comparisons of Modeling Approaches

Selecting a modeling approach often involves weighing traditional statistical methods against more advanced computational models. The following comparisons highlight the performance trade-offs in different drug development scenarios.

Table 1: Comparison of Sample Sizes Required for 80% Power in Proof-of-Concept Trials

| Therapeutic Area | Primary Endpoint | Conventional Analysis | Pharmacometric Model-Based Analysis | Fold Reduction in Sample Size | Source/Study Context |
|---|---|---|---|---|---|
| Acute Stroke | Change in NIHSS score at day 90 | 388 patients | 90 patients | 4.3-fold | Parallel design (Placebo vs. Active) [23] |
| Type 2 Diabetes | Glycemic Control (HbA1c) | 84 patients | 10 patients | 8.4-fold | Parallel design (Placebo vs. Active) [23] |
| Acute Stroke (Dose-Ranging) | Change in NIHSS score | 776 patients | 184 patients | 4.2-fold | Multiple active dose arms [23] |
| Type 2 Diabetes (Dose-Ranging) | Glycemic Control (HbA1c) | 168 patients | 12 patients | 14-fold | Multiple active dose arms & follow-up [23] |
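As a quick arithmetic check, the fold-reduction column is the conventional sample size divided by the model-based sample size. A short sketch using only the values in Table 1 (the dictionary and helper names are illustrative):

```python
# Sample sizes for 80% power copied from Table 1: (conventional, model-based)
SAMPLE_SIZES = {
    "Acute Stroke": (388, 90),
    "Type 2 Diabetes": (84, 10),
    "Acute Stroke (Dose-Ranging)": (776, 184),
    "Type 2 Diabetes (Dose-Ranging)": (168, 12),
}


def fold_reduction(conventional: int, model_based: int) -> float:
    """How many times fewer patients the model-based analysis requires."""
    return conventional / model_based


for scenario, (conv, model) in SAMPLE_SIZES.items():
    print(f"{scenario}: {fold_reduction(conv, model):.1f}-fold")
```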

Table 2: Performance Comparison of Drug Response Prediction Models for Individual Drugs

| Model Category | Specific Models Tested | Performance Range (RMSE) | Performance Range (R²) | Best Performing Model Example | Key Finding |
|---|---|---|---|---|---|
| Deep Learning (DL) | CNN, ResNet | 0.284 to 3.563 | -2.763 to 0.331 | - | For 24 individual drugs, no significant difference in prediction performance was found between DL and traditional ML models when using gene expression data as input [24]. |
| Traditional Machine Learning (ML) | Lasso, Ridge, SVR, RF, XGBoost | 0.274 to 2.697 | -8.113 to 0.470 | Ridge model for Panobinostat (R²: 0.470, RMSE: 0.623) [24] | |
| Model with Mutation Input | Various DL and ML | Poor correlation with actual ln(IC50) values | Poor correlation with actual ln(IC50) values | - | Models using mutation profiles alone failed to show strong predictive power, underscoring the importance of input data type [24]. |

Experimental Protocols for Model Evaluation

Implementing and validating a 'fit-for-purpose' model requires rigorous methodology. Below are detailed protocols for key experiments cited in this guide.

Protocol 1: Pharmacometric Model-Based Analysis for Proof-of-Concept (POC) Trials

This protocol is adapted from studies that demonstrated significant sample size reductions in stroke and diabetes trials [23].

- Objective: To detect a defined drug effect with sufficient power using longitudinal data and a mechanistic model.

- Data Collection:
  - Collect repeated measurements over time from all subjects (e.g., multiple NIHSS scores post-stroke or multiple FPG/HbA1c measurements in diabetes).
  - Record precise dosing information and exposure data.

- Model Building:
  - Develop or select a pre-validated pharmacometric model for the disease endpoint (e.g., a mechanistic model of the interplay between FPG, HbA1c, and red blood cells for diabetes [23]).
  - The model should incorporate the disease's natural progression and the drug's mechanism of action.

- Primary Analysis:
  - Use a mixed-effects modeling framework to analyze all longitudinal data simultaneously.
  - The primary test is whether the model can identify a statistically significant drug effect, often evaluated via likelihood ratio tests or similar methods, which leverage all data points rather than only endpoint measurements.

- Power Analysis:
  - Use clinical trial simulations based on the developed model to estimate the sample size required to achieve 80% power, which is typically substantially lower than what is required for conventional endpoint analyses.
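The primary-analysis and power-analysis steps can be illustrated with a deliberately simplified simulation. This sketch is not a pharmacometric model: it assumes a hypothetical linear disease-progression model with independent residual error (no random effects), fits nested models with and without a drug effect by ordinary least squares, and tests the drug effect with a likelihood ratio test. Power is estimated as the fraction of simulated trials in which the test is significant. All parameter values and function names are illustrative assumptions.

```python
import math

import numpy as np


def lrt_pvalue_1df(rss_reduced: float, rss_full: float, n_obs: int) -> float:
    """Likelihood ratio test p-value for one extra parameter in nested Gaussian models.

    For maximum-likelihood fits of linear models with Gaussian error,
    -2 * (LL_reduced - LL_full) simplifies to n * log(RSS_reduced / RSS_full).
    """
    stat = n_obs * math.log(rss_reduced / rss_full)
    # Survival function of a chi-square distribution with 1 degree of freedom
    return math.erfc(math.sqrt(max(stat, 0.0) / 2.0))


def rss(X: np.ndarray, y: np.ndarray) -> float:
    """Residual sum of squares of an ordinary least-squares fit."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    return float(resid @ resid)


def simulated_power(n_per_arm, drug_effect, n_trials=500, visits=(0, 1, 2, 3, 4),
                    residual_sd=1.0, alpha=0.05, seed=0):
    """Fraction of simulated trials in which the LRT detects a drug effect on progression."""
    rng = np.random.default_rng(seed)
    t = np.tile(np.asarray(visits, dtype=float), 2 * n_per_arm)  # visit time, one row per subject-visit
    active = np.repeat([0.0, 1.0], n_per_arm * len(visits))      # 0 = placebo, 1 = active
    X_reduced = np.column_stack([np.ones_like(t), t])            # baseline + natural progression
    X_full = np.column_stack([X_reduced, active * t])            # + drug effect on progression rate
    significant = 0
    for _ in range(n_trials):
        # Hypothetical disease model: score worsens 0.5/visit; the drug slows this by drug_effect/visit
        y = 10.0 + 0.5 * t - drug_effect * active * t + rng.normal(0.0, residual_sd, t.size)
        if lrt_pvalue_1df(rss(X_reduced, y), rss(X_full, y), y.size) < alpha:
            significant += 1
    return significant / n_trials


if __name__ == "__main__":
    for effect in (0.0, 0.1, 0.3):
        print(f"drug effect {effect}: estimated power {simulated_power(20, effect):.2f}")
```

With no drug effect, the rejection rate should sit near the nominal 5%, which is a useful sanity check on any simulation-based power analysis. In a real application, the simulation and estimation model would be the validated mixed-effects model, and the sample size would be increased until the estimated power reaches 80%.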

Protocol 2: Evaluating Drug Response Prediction Models Using Cancer Cell Line Data

This protocol is based on a performance evaluation of ML and DL models for predicting drug sensitivity [24].

- Objective: To construct and validate a model that predicts the half-maximal inhibitory concentration (IC50) of a drug based on cancer cell line genomics.

- Data Curation:
  - Input Features: Obtain gene expression profiles and/or mutation data for a panel of cancer cell lines from databases like CCLE or GDSC.
  - Output Variable: Obtain corresponding drug response data (ln(IC50)) for the specific drug of interest across the same cell lines.
  - Data Preprocessing: Split the data into training and test sets, ensuring a representative distribution of cancer types and IC50 values in both sets.

- Model Training and Comparison:
  - Train multiple model types on the same training data. This typically includes:
    - Deep Learning: Convolutional Neural Networks (CNN), ResNet.
    - Traditional Machine Learning: Ridge regression, Lasso, Random Forest, Support Vector Regression (SVR).
  - Optimize hyperparameters for each model using cross-validation on the training set.

- Model Validation:
  - Predict ln(IC50) values for the held-out test set using all trained models.
  - Performance Metrics: Calculate Root Mean Squared Error (RMSE) and R-squared (R²) for each model's predictions against the observed ln(IC50) values.
  - Compare the performance of DL and ML models to determine the best 'fit-for-purpose' approach for the specific drug.
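The training, tuning, and validation steps can be sketched with scikit-learn. This sketch assumes synthetic data from `make_regression` as a stand-in for gene-expression features and ln(IC50) values from CCLE or GDSC; the two model types (Ridge and random forest), the hyperparameter grids, and the quartile-based stratification of the target are illustrative choices, not the cited study's exact setup.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import RandomForestRegressor
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import GridSearchCV, train_test_split


def compare_models(seed: int = 0) -> dict:
    # Synthetic stand-in: 300 "cell lines" x 100 "gene expression" features -> "ln(IC50)"
    X, y = make_regression(n_samples=300, n_features=100, n_informative=15,
                           noise=10.0, random_state=seed)
    # Stratify on target quartiles so train and test cover a similar ln(IC50) range
    quartile = np.digitize(y, np.quantile(y, [0.25, 0.5, 0.75]))
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, stratify=quartile, random_state=seed)

    candidates = {
        "Ridge": (Ridge(), {"alpha": [0.1, 1.0, 10.0, 100.0]}),
        "RandomForest": (RandomForestRegressor(n_estimators=100, random_state=seed),
                         {"max_depth": [None, 8]}),
    }
    results = {}
    for name, (estimator, grid) in candidates.items():
        # Hyperparameters are tuned with 5-fold CV on the training set only
        search = GridSearchCV(estimator, grid, cv=5, scoring="neg_root_mean_squared_error")
        search.fit(X_train, y_train)
        y_pred = search.predict(X_test)  # held-out test set is used once, after tuning
        results[name] = {
            "RMSE": float(np.sqrt(mean_squared_error(y_test, y_pred))),
            "R2": float(r2_score(y_test, y_pred)),
        }
    return results


if __name__ == "__main__":
    for model_name, metrics in compare_models().items():
        print(f"{model_name}: RMSE={metrics['RMSE']:.3f}, R2={metrics['R2']:.3f}")
```

Note that R² on a test set becomes negative whenever a model predicts worse than simply using the mean of the test targets, which is how the negative R² values in Table 2 can arise.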

Protocol 3: Active Learning for Molecular Optimization

This protocol outlines the use of active learning to improve the efficiency of optimizing molecular properties [25].

- Objective: To selectively test molecules that are most informative for improving a predictive model of a molecular property (e.g., solubility, affinity, toxicity).

- Initial Model Training: Train an initial model (e.g., a Graph Neural Network) on a small, labeled dataset of molecules.

- Iterative Batch Selection:
  - Uncertainty & Diversity Estimation: Use the current model to predict on a large pool of unlabeled molecules. Calculate a covariance matrix between predictions to quantify both the uncertainty and diversity of the unlabeled samples.
  - Batch Query: Select a batch of molecules that maximizes the joint entropy of their predictions; under a Gaussian approximation, this amounts to maximizing the log-determinant of the batch's epistemic covariance matrix. This favors a batch that is both uncertain and non-redundant.
  - Experimental Testing: Synthesize and test the selected molecules in the lab to obtain their experimental property values (e.g., affinity measurement).
  - Model Update: Add the new data to the training set and retrain the model.

- Cycle Continuation: Repeat the iterative batch-selection steps (uncertainty and diversity estimation, batch query, experimental testing, and model update) until the model reaches a pre-defined performance threshold, thereby minimizing the total number of wet-lab experiments required.
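The batch-query step can be sketched as a greedy approximation to joint-entropy maximization: starting from an empty batch, repeatedly add the candidate that gives the largest log-determinant of the batch's predictive covariance. This sketch assumes epistemic uncertainty is available as a matrix of sampled predictions (for example, from Monte Carlo dropout or an ensemble); here those samples are synthetic, and the function name and jitter value are hypothetical.

```python
import numpy as np


def select_batch(pred_samples: np.ndarray, batch_size: int, jitter: float = 1e-6) -> list:
    """Greedily choose pool indices that maximize the log-determinant of their covariance.

    pred_samples has shape (n_samples, n_pool): each row is one stochastic forward
    pass (or ensemble member) predicting every molecule in the unlabeled pool.
    """
    cov = np.cov(pred_samples, rowvar=False)        # (n_pool, n_pool) epistemic covariance
    cov = cov + jitter * np.eye(cov.shape[0])       # keep submatrices numerically positive definite
    selected: list = []
    for _ in range(min(batch_size, cov.shape[0])):
        best_idx, best_logdet = None, -np.inf
        for idx in range(cov.shape[0]):
            if idx in selected:
                continue
            subset = selected + [idx]
            sign, logdet = np.linalg.slogdet(cov[np.ix_(subset, subset)])
            if sign > 0 and logdet > best_logdet:
                best_idx, best_logdet = idx, logdet
        selected.append(best_idx)
    return selected


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    base = rng.normal(0.0, 2.0, size=200)
    samples = np.column_stack([
        base,                            # molecule 0: high uncertainty
        base,                            # molecule 1: exact duplicate of molecule 0
        rng.normal(0.0, 1.5, size=200),  # molecule 2: uncertain and independent
        rng.normal(0.0, 0.5, size=200),  # molecule 3: confident prediction
    ])
    # The redundant pair (0, 1) is not selected together
    print(select_batch(samples, batch_size=2))
```

Ranking by individual variance alone would pick molecules 0 and 1, which carry the same information; the log-determinant penalizes that redundancy, which is the point of the joint-entropy criterion.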

Visualizing Workflows and Relationships

Visual representations are key to understanding the relationships in complex systems and the workflows of advanced methodologies.

Diagram 1: The 'Fit-for-Purpose' Model Selection Strategy

This diagram illustrates the decision process for aligning model complexity with research objectives.

Diagram 2: Active Learning Cycle for Drug Discovery

This flowchart details the iterative workflow of a batch active learning process for optimizing molecular properties.

The Scientist's Toolkit: Key Research Reagents and Solutions

The following tools and resources are essential for conducting the experiments and building the models discussed in this guide.

Table 3: Essential Research Reagents and Computational Tools

| Item Name | Type | Function / Application | Key Features / Notes |
|---|---|---|---|
| Cancer Cell Line Encyclopedia (CCLE) | Database | Provides public genomic data (gene expression, mutations) and drug sensitivity data for a large panel of cancer cell lines. | Foundational resource for training and validating drug response prediction models [24]. |
| Genomics of Drug Sensitivity in Cancer (GDSC) | Database | Another major public resource linking drug sensitivity in cancer cell lines to genomic features. | Often used in conjunction with or for comparison to CCLE data [24]. |
| DeepChem | Software Library | An open-source toolkit for applying deep learning to drug discovery, biology, and materials science. | Supports the implementation of graph neural networks and other DL architectures for molecular modeling [25]. |
| Pharmacometric Model | Software / Model | A mathematical model describing the relationship between drug exposure, biomarkers, and disease progression. | Implemented in software like NONMEM or R. Crucial for model-based trial analysis and simulation [23]. |
| Active Learning Framework (e.g., COVDROP) | Algorithm | A method for selecting the most informative batch of samples for experimental testing to optimize a model. | Reduces the number of experiments needed by prioritizing data that improves model performance [25]. |
| Explainable AI (XAI) Tools | Algorithm | Techniques to interpret complex ML/DL models and identify features driving predictions. | Critical for building trust and generating biological insights from black-box models (e.g., identifying key genomic features for drug response) [24]. |

The empirical data and methodologies presented in this guide underscore a central tenet of modern drug development: the most powerful model is the one that is most fit for its intended purpose. As the comparisons show, advanced pharmacometric and machine learning models can dramatically increase efficiency and predictive power, but their success is contingent on a thoughtful integration of biomedical knowledge, appropriate data, and a clear research objective [22]. The future of predictive modeling lies not in a universal, one-size-fits-all solution, but in a principled and pragmatic selection from a growing and integrated toolkit. By aligning model complexity with specific research goals, scientists can enhance the credibility of their models, accelerate the drug development process, and increase the likelihood of delivering effective therapies to patients.

In the high-stakes fields of oncology and Model-Informed Drug Development (MIDD), the transition from a predictive model to a trusted decision-making tool hinges entirely on the rigor of its validation. As computational models grow more complex, robust validation frameworks are what separate speculative tools from those capable of guiding clinical strategies and therapeutic development. This guide examines the critical role of validation by comparing the performance and methodological rigor of different AI/ML models across key oncology applications, from drug discovery to clinical prognosis.

Comparative Performance of Validated Oncology Models

The table below summarizes the performance outcomes of several machine learning models following rigorous validation in real-world oncology scenarios.

Table 1: Comparative Performance of Validated Oncology AI/ML Models

| Application Area | Model Type | Key Performance Metrics | Validation Method | Reference Study |
|---|---|---|---|---|
| Colon Cancer Survival Prediction | Random Survival Forest & LASSO | Concordance Index: 0.8146 (overall); Identified key risk factors (e.g., no treatment: 3.24x higher mortality risk). | Retrospective analysis of 33,825 cases from Kentucky Cancer Registry; Leave-one-out cross-validation. | [26] |
| Multi-Cancer Early Detection (MCED) | AI-Empowered Blood Test (OncoSeek) | AUC: 0.829; Sensitivity: 58.4%; Specificity: 92.0%; Accurate Tissue-of-Origin prediction in 70.6% of true positives. | Large-scale multi-centre validation across 15,122 participants, 7 cohorts, 3 countries, and 4 platforms. | [27] |
| Cancer DNA Classification | Blended Logistic Regression & Gaussian Naive Bayes | 100% accuracy for BRCA1, KIRC, COAD; 98% for LUAD, PRAD; ROC AUC: 0.99. | 10-fold cross-validation; Independent hold-out test set (20% of cohort). | [28] |
| Preoperative STAS Prediction in Lung Adenocarcinoma | XGBoost | AUC: 0.889 (Training), 0.856 (External Validation). | Internal cross-validation and external validation on a cohort from a separate medical center (n=120). | [29] |
| Radiation Dermatitis Prediction in Breast Cancer | Random Forest | AUC: 0.84 (Training), 0.748 (Testing); Sensitivity: 0.747; Specificity: 0.576. | Internal hold-out test set; model interpretability ensured via SHAP analysis. | [30] |

Experimental Protocols and Methodologies

A critical component of model validation is the transparency of the experimental workflow. The following diagram outlines the multi-stage validation process common to robust oncology model development.

Diagram: Multi-Stage Model Validation Workflow

The methodologies from the featured case studies exemplify this workflow:

Colon Cancer Survival Estimation

This study compared multiple models, including Cox proportional hazards, random survival forests, and LASSO, to estimate survival probabilities for 33,825 colon cancer cases [26]. The protocol involved:

- Data Source: Kentucky Cancer Registry data linked to mortality records [26].

- Handling Missing Data: Using multiple imputation techniques to preserve dataset integrity [26].

- Validation Technique: Employing leave-one-out cross-validation to assess model generalizability and detect overfitting, rather than relying on performance measured on the training data [26].

- Performance Metrics: Using the Brier score and concordance index to compare model performance, with random survival forest and LASSO models outperforming traditional statistical methods [26].
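Harrell's concordance index, used here to compare survival models, is the fraction of comparable patient pairs whose predicted risks are ordered consistently with their observed survival. A pair is comparable when the patient with the shorter follow-up time actually experienced the event. A value of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which puts the reported 0.8146 in context. A minimal pure-Python sketch, assuming higher scores mean higher predicted risk and counting tied risk predictions as half-concordant (a common convention); it omits refinements such as special handling of tied event times:

```python
from typing import Sequence


def concordance_index(times: Sequence[float], events: Sequence[int],
                      risk_scores: Sequence[float]) -> float:
    """Harrell's C-index for right-censored survival data.

    times: observed follow-up time per patient
    events: 1 if the event (e.g. death) was observed, 0 if censored
    risk_scores: model output where a higher value means higher predicted risk
    """
    concordant = 0.0
    comparable = 0
    n = len(times)
    for i in range(n):
        if not events[i]:
            continue  # a censored patient cannot anchor a comparable pair
        for j in range(n):
            if times[i] < times[j]:  # patient i had the event before j's follow-up ended
                comparable += 1
                if risk_scores[i] > risk_scores[j]:
                    concordant += 1.0
                elif risk_scores[i] == risk_scores[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


if __name__ == "__main__":
    # Toy cohort: follow-up times, event indicators (patient 3 censored), predicted risks
    print(concordance_index([2, 4, 6, 8], [1, 1, 0, 1], [0.9, 0.7, 0.3, 0.1]))  # prints 1.0
```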

Preoperative STAS Prediction in Lung Cancer

This research focused on predicting Spread Through Air Spaces (STAS) preoperatively to guide surgical decisions [29]. The experimental design included:

- Feature Selection: A two-step process using Maximum Relevance Minimum Redundancy (mRMR) for initial dimensionality reduction, followed by LASSO regression to identify the seven most predictive features (e.g., CEA, vascular convergence) [29].

- Model Training & Comparison: Seven different machine learning models were constructed and evaluated [29].

- Validation Rigor: External validation was performed using a cohort of 120 patients from an independent medical center, providing a strong, real-world test of the model's generalizability [29].
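The two-step feature selection can be sketched as follows. Note the assumptions: this is a correlation-based variant of mRMR (relevance and redundancy measured with absolute Pearson correlation rather than mutual information, combined as relevance minus mean redundancy), followed by L1-penalized (LASSO) logistic regression from scikit-learn. The synthetic cohort, the helper names `mrmr_select` and `two_step_selection`, and the number of features kept by the filter are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegressionCV
from sklearn.preprocessing import StandardScaler


def mrmr_select(X: np.ndarray, y: np.ndarray, k: int) -> list:
    """Greedy mRMR using absolute Pearson correlation for relevance and redundancy."""
    n_features = X.shape[1]
    k = min(k, n_features)
    corr = np.abs(np.corrcoef(np.column_stack([X, y]), rowvar=False))
    relevance = corr[:n_features, n_features]       # |corr(feature, label)|
    feature_corr = corr[:n_features, :n_features]   # |corr(feature, feature)|
    selected = [int(np.argmax(relevance))]
    while len(selected) < k:
        remaining = [f for f in range(n_features) if f not in selected]
        # Reward relevance, penalize average redundancy with already-selected features
        scores = [relevance[f] - feature_corr[f, selected].mean() for f in remaining]
        selected.append(remaining[int(np.argmax(scores))])
    return selected


def two_step_selection(X: np.ndarray, y: np.ndarray, k_mrmr: int = 20) -> list:
    """Step 1: mRMR filter; step 2: LASSO keeps features with non-zero coefficients."""
    prefiltered = mrmr_select(X, y, k_mrmr)
    X_pre = StandardScaler().fit_transform(X[:, prefiltered])
    lasso = LogisticRegressionCV(penalty="l1", solver="liblinear", Cs=10, cv=5, random_state=0)
    lasso.fit(X_pre, y)
    return [f for f, coef in zip(prefiltered, lasso.coef_.ravel()) if coef != 0.0]


if __name__ == "__main__":
    # Hypothetical cohort: 300 patients, 60 candidate clinical/radiological features
    X, y = make_classification(n_samples=300, n_features=60, n_informative=7,
                               n_redundant=5, random_state=0)
    print(two_step_selection(X, y))
```

The cheap filter shrinks the candidate set before the penalized model decides which features survive; in a real study both steps would be run inside the training data only, so the external validation cohort stays untouched.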

The Scientist's Toolkit: Essential Research Reagents & Solutions

The development and validation of predictive models in oncology rely on a suite of computational and methodological "reagents."

Table 2: Key Research Reagent Solutions in AI/ML Oncology Model Validation

| Tool Category | Specific Tool/Technique | Primary Function in Validation |
|---|---|---|
| Validation Frameworks | k-Fold Cross-Validation [28] | Robustly assesses model performance by iteratively partitioning data into training and validation sets. |
| | External Validation [29] | The gold standard for testing model generalizability on data from a completely independent source. |
| | Large-Scale, Multi-Centre Validation [27] | Establishes model robustness across diverse populations, platforms, and clinical settings. |
| Feature Selection & Interpretability | LASSO Regression [26] [29] | Identifies the most predictive features while preventing overfitting by penalizing model complexity. |
| | SHAP (Shapley Additive exPlanations) [30] [29] | Provides "explainable AI" by quantifying the contribution of each feature to an individual prediction. |
| | mRMR (Maximum Relevance Minimum Redundancy) [29] | Filters features to find a subset that is maximally informative and non-redundant. |
| Performance Metrics | Concordance Index (C-Index) [26] | Evaluates the ranking accuracy of survival models. |
| | AUC (Area Under the ROC Curve) [30] [27] [29] | Measures the overall ability of the model to discriminate between classes across all thresholds. |
| | Brier Score [26] | Assesses the accuracy of probabilistic predictions (lower scores indicate better accuracy). |
| Data Handling | Multiple Imputation [26] | Handles missing data by generating multiple plausible values to account for uncertainty. |
| | SMOTE [31] | Addresses class imbalance in datasets by generating synthetic samples of the minority class. |
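Several of the performance metrics in this table can be computed directly with scikit-learn. A small sketch on hypothetical labels and predicted probabilities (the data and the 0.5 decision threshold are illustrative assumptions) computing AUC and the Brier score, plus the threshold-dependent sensitivity and specificity that studies such as those in Table 1 report alongside AUC:

```python
from sklearn.metrics import brier_score_loss, confusion_matrix, roc_auc_score

# Hypothetical outcomes (1 = event) and predicted event probabilities
y_true = [0, 0, 1, 1]
y_prob = [0.1, 0.4, 0.35, 0.8]

auc = roc_auc_score(y_true, y_prob)        # threshold-free discrimination
brier = brier_score_loss(y_true, y_prob)   # mean squared error of the predicted probabilities

# Sensitivity and specificity depend on the chosen decision threshold
y_pred = [int(p >= 0.5) for p in y_prob]
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
sensitivity = tp / (tp + fn)
specificity = tn / (tn + fp)

print(f"AUC={auc:.3f} Brier={brier:.4f} sensitivity={sensitivity:.2f} specificity={specificity:.2f}")
```

Because AUC summarizes all thresholds while sensitivity and specificity reflect one operating point, a model can report a respectable AUC alongside a modest specificity, as in the radiation dermatitis study.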

Pathway to Clinical Decision-Making

The ultimate goal of model validation is to create a reliable bridge from computational output to actionable clinical or developmental decisions. The following diagram illustrates how interpretability tools like SHAP integrate into this decision pathway.

Diagram: From Model Prediction to Clinical Decision

This pathway is activated in various clinical contexts:

- In Drug Development: Validated AI models can identify novel drug targets and predict the efficacy of drug candidates, as demonstrated by the AI-driven discovery of the anticancer drug candidate Z29077885, which targets STK33 [32]. This helps prioritize the most promising compounds for expensive and time-consuming in vivo studies [33].

- In Radiotherapy Planning: The model for radiation dermatitis provides not just a risk score but, through SHAP, identifies the specific clinical factors (e.g., CTVsc, diabetic status) driving that risk. This gives clinicians a rationale to personalize radiotherapy plans for high-risk patients [30].

- In Surgical Strategy: The high performance of the XGBoost model in predicting STAS status preoperatively, validated across institutions, gives thoracic surgeons a data-driven basis for opting for a lobectomy over a sublobar resection in high-risk patients, potentially improving long-term survival [29].
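SHAP attributions are built on Shapley values from cooperative game theory. For a model with only a handful of features, exact Shapley values can be computed by brute force, which makes the idea concrete. This sketch assumes a simple interventional definition in which "absent" features are replaced by a single baseline value (for example, the cohort mean); the `shap` library itself uses more efficient and more general estimators, and the toy risk model and feature names below are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence


def exact_shapley(predict: Callable[[Sequence[float]], float],
                  x: Sequence[float], baseline: Sequence[float]) -> list:
    """Exact Shapley value of each feature for one prediction (2^n model evaluations per feature)."""
    n = len(x)

    def value(coalition: frozenset) -> float:
        # Features in the coalition take the patient's values; the rest take baseline values
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(point)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                contribution += weight * (value(s | {i}) - value(s))
        phi.append(contribution)
    return phi


if __name__ == "__main__":
    # Hypothetical risk score: a dose-volume term, a diabetes flag, and their interaction
    def risk(features):
        dose_volume, diabetic = features
        return 0.02 * dose_volume + 0.3 * diabetic + 0.01 * dose_volume * diabetic

    patient, cohort_mean = [40.0, 1.0], [25.0, 0.2]
    contributions = exact_shapley(risk, patient, cohort_mean)
    # Efficiency property: contributions sum to risk(patient) - risk(cohort_mean)
    print(contributions, sum(contributions), risk(patient) - risk(cohort_mean))
```

The per-feature contributions add up exactly to the gap between this patient's predicted risk and the baseline prediction, which is what lets a clinician read off which factors pushed a given patient into the high-risk group.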

Key Insights for Practitioners

The cross-comparison of validated models yields several critical insights for researchers and drug development professionals:

- No Single "Best" Model: The optimal model is highly context-dependent. While XGBoost excelled in predicting STAS in lung cancer [29], a blended ensemble was superior for DNA-based cancer classification [28], and random forest was top-performing for predicting radiation dermatitis [30]. This underscores the need to train and compare multiple algorithms for each unique problem.

- Validation Scale Dictates Trust: The level of validation directly correlates with a model's potential for clinical adoption. A model like OncoSeek, validated across 15,000+ participants and multiple platforms [27], carries far greater persuasive power and evidence of robustness than a model only validated internally.

- Interpretability is Non-Negotiable: For models to be integrated into decision-making processes, they cannot be "black boxes." The use of tools like SHAP [30] [29] to explain the driving factors behind a prediction is essential for building trust with clinicians and regulators.

- The Gold Standard is External Validation: Internal cross-validation is a necessary first step, but the most compelling evidence for a model's utility is successful external validation on a cohort from a completely independent institution, as seen in the lung adenocarcinoma STAS study [29]. This is the strongest guard against over-optimistic performance estimates.

From Theory to Lab Bench: A Practical Toolkit for Model Validation Techniques

In the scientific pursuit of developing robust predictive models, the fundamental challenge lies in creating systems that generalize effectively to new, unseen data, rather than merely memorizing the dataset on which they were trained. Hold-out methods provide a foundational solution to this challenge by strategically partitioning available data into distinct subsets for different phases of model development and evaluation [34]. These methods are particularly crucial in fields like drug development, where model performance has direct implications for research outcomes and patient safety [35].

The core principle of hold-out validation is simple yet powerful: by testing a model on data it has never encountered during training, researchers can obtain a more realistic estimate of how it will perform in real-world scenarios [36]. This process helps answer critical questions: Does the model capture genuine underlying patterns or merely noise? How will it perform on future data samples? Which of several candidate models demonstrates the best generalization capability? [34] As we explore the two primary hold-out approaches—simple train-test splitting and train-validation-test splitting—we will examine their methodological differences, applications, and performance implications within the context of model prediction research.

Understanding Simple Train-Test Splitting

Conceptual Framework and Implementation

The simple train-test split represents the most fundamental hold-out method, where the available dataset is partitioned into two mutually exclusive subsets: a training set used to fit the model and a test set used to evaluate its performance [36]. This approach ensures that the model is evaluated on data it has never seen during the training process, providing an estimate of its generalization capability [34].

The typical workflow involves first shuffling the dataset to reduce potential bias, then splitting it according to a predetermined ratio, with common splits being 70:30, 80:20, or 60:40 depending on the dataset size and characteristics [36]. A larger training set generally helps the model learn better patterns, while a larger test set provides a more reliable estimate of performance [36]. The model is trained exclusively on the training data, and its final evaluation is performed once on the separate test set.

Experimental Protocol and Best Practices

Implementing a simple train-test split requires careful consideration of several factors to ensure valid results. In Python, a standard implementation uses scikit-learn's train_test_split function.
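A minimal sketch of the simple hold-out split with scikit-learn's `train_test_split`, assuming a synthetic, imbalanced dataset from `make_classification` and a logistic regression model as placeholders. It fixes `random_state` for reproducibility, shuffles before splitting, and stratifies on the labels:

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Placeholder dataset: 1,000 samples with roughly a 90:10 class imbalance
X, y = make_classification(n_samples=1000, n_features=20, weights=[0.9, 0.1], random_state=42)

# 80:20 split; shuffle=True is the default, stratify keeps class proportions equal in both sets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, shuffle=True, stratify=y, random_state=42)

model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)  # fit on the training data only

# Single, final evaluation on the untouched test set
test_accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"Test accuracy: {test_accuracy:.3f}")
```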

Key considerations for implementation include setting a random state for reproducibility, shuffling data before splitting to ensure representative distribution, and adjusting the test size based on dataset characteristics [36] [37]. For datasets with class imbalance, stratified sampling should be employed to maintain similar class distributions in both training and test sets [38].

Understanding Train-Validation-Test Splitting

Conceptual Framework and Implementation

The train-validation-test split extends the simple hold-out method by introducing a third subset, creating separate partitions for training, validation, and testing [34] [39]. This approach addresses a critical limitation of the simple train-test method: a single test set cannot both guide model development, such as hyperparameter tuning, and provide an unbiased final evaluation.

In this paradigm, each data subset serves a distinct purpose. The training set is used for model fitting, the validation set for hyperparameter tuning and model selection, and the test set for final performance evaluation [38] [39]. This separation is particularly important when comparing multiple algorithms or tuning hyperparameters, as it prevents information from the test set indirectly influencing model development [34].

Experimental Protocol and Best Practices

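The three-way split requires careful partitioning to ensure each subset serves its intended purpose effectively. A common Python implementation applies scikit-learn's train_test_split twice: once to set aside the test set, and again to divide the remainder into training and validation sets.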
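A sketch of the three-way split, assuming scikit-learn and placeholder synthetic data. Two successive calls to `train_test_split` (with absolute sizes, to avoid rounding surprises) give a 70:15:15 partition; the validation set is used to choose a hyperparameter (here the regularization strength `C` of a logistic regression, an illustrative choice), and the test set is touched exactly once, at the end. Refitting the chosen model on training plus validation data is a common but optional final step.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

# First carve off the test set (150 samples = 15%), then split the rest into train (700) and validation (150)
X_rest, X_test, y_rest, y_test = train_test_split(X, y, test_size=150, stratify=y, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X_rest, y_rest, test_size=150, stratify=y_rest, random_state=0)

# Model selection: compare candidate hyperparameters on the validation set only
best_C, best_val_acc = None, -1.0
for C in [0.01, 0.1, 1.0, 10.0]:
    candidate = LogisticRegression(C=C, max_iter=1000).fit(X_train, y_train)
    val_acc = accuracy_score(y_val, candidate.predict(X_val))
    if val_acc > best_val_acc:
        best_C, best_val_acc = C, val_acc

# Final model: refit on train + validation, then evaluate once on the untouched test set
final_model = LogisticRegression(C=best_C, max_iter=1000).fit(X_rest, y_rest)
test_acc = accuracy_score(y_test, final_model.predict(X_test))
print(f"best C={best_C}, validation accuracy={best_val_acc:.3f}, test accuracy={test_acc:.3f}")
```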

Common split ratios for the three-way partition typically allocate 70-80% for training, 10-15% for validation, and 10-15% for testing, though these proportions should be adjusted based on dataset size and model complexity [37]. Models with numerous hyperparameters generally require larger validation sets for reliable tuning [38] [37]. The key advantage of this method is that it provides an unbiased final evaluation through the test set, which has played no role in model development or selection [39].

Comparative Analysis: Performance and Applications

Quantitative Comparison of Methodologies

The choice between simple train-test and train-validation-test splitting has significant implications for model assessment reliability. Research comparing data splitting methods has revealed important patterns in how these approaches perform under different conditions.

Table 1: Performance Comparison of Hold-Out Methods Based on Empirical Studies

| Evaluation Metric | Simple Train-Test Split | Train-Validation-Test Split | Key Research Findings |
|---|---|---|---|
| Generalization Estimate Reliability | Lower [35] | Higher [39] | Significant gap between validation and test performance observed in small datasets [35] |
| Hyperparameter Tuning Capability | Limited [34] | Comprehensive [38] | Prevents overfitting to test set during hyperparameter optimization [34] |
| Data Efficiency | More efficient for large datasets [36] | Less efficient due to three-way split [39] | Training set size critically impacts performance estimation quality [35] |
| Variance in Results | Higher across different splits [36] | Lower through dedicated validation [39] | Single split can provide erroneous performance estimates [35] |
| Optimal Dataset Size | Large datasets (>10,000 samples) [36] | Medium to large datasets [34] | Both methods show significant performance-estimation gaps on small datasets [35] |

A critical finding from comparative studies is that dataset size significantly impacts the reliability of both methods. Research has demonstrated "a significant gap between the performance estimated from the validation set and the one from the test set for all the data splitting methods employed on small datasets" [35]. This disparity decreases as sample size grows, which the study attributes to the models better approximating the central limit theorem for the simulated datasets used in its controlled experiments.
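The instability of single-split estimates on small datasets is easy to reproduce. The sketch below, assuming synthetic data and a logistic regression placeholder model, repeats an 80:20 split with different random seeds and reports the spread (standard deviation) of test accuracy for a small and a large dataset; the function name and dataset sizes are illustrative.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split


def holdout_score_spread(n_samples: int, n_repeats: int = 30) -> float:
    """Standard deviation of hold-out accuracy across repeated random 80:20 splits."""
    X, y = make_classification(n_samples=n_samples, n_features=10, flip_y=0.1, random_state=0)
    scores = []
    for seed in range(n_repeats):
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.2, stratify=y, random_state=seed)
        model = LogisticRegression(max_iter=1000).fit(X_tr, y_tr)
        scores.append(model.score(X_te, y_te))  # accuracy on this split's test set
    return float(np.std(scores))


if __name__ == "__main__":
    for n in (60, 5000):
        print(f"n={n}: std of test accuracy across splits = {holdout_score_spread(n):.3f}")
```

With only 12 test samples, a single misclassification moves accuracy by more than 8 percentage points, so any one split can be misleading; this is the practical motivation for the cross-validation alternatives recommended below for small datasets.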

Application Scenarios and Decision Framework

Each hold-out method excels in specific research contexts, and selecting the appropriate approach depends on multiple factors including dataset characteristics, research goals, and model complexity.

Table 2: Application Guidelines for Hold-Out Methods in Research Settings

| Research Scenario | Recommended Method | Rationale | Implementation Considerations |
|---|---|---|---|
| Preliminary Model Exploration | Simple Train-Test Split | Computational efficiency and implementation simplicity [36] | Use 70-30 or 80-20 split; ensure random shuffling [36] |
| Hyperparameter Optimization | Train-Validation-Test Split | Prevents information leakage from test set [34] | Allocate sufficient data for validation based on parameter complexity [38] |
| Small Datasets (<1000 samples) | Enhanced Methods (Cross-Validation) | More reliable performance estimation [35] [39] | Consider k-fold cross-validation instead of standard hold-out [39] |
| Algorithm Comparison | Train-Validation-Test Split | Unbiased final evaluation through untouched test set [34] | Use identical test set for all algorithm comparisons [39] |
| Large-Scale Data | Simple Train-Test Split | Sufficient data for training and reliable testing [36] | Can use smaller percentage for testing while maintaining absolute sample size [36] |
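The simple train-test split recommended above for preliminary exploration can be sketched with scikit-learn's `train_test_split`. This is a minimal illustration, assuming a synthetic, mildly imbalanced dataset generated with `make_classification` as a stand-in for real experimental data and logistic regression as a placeholder model; the 80-20 ratio and the `random_state` values are arbitrary choices.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for an experimental dataset (assumption: 1,000 samples, roughly 20% positives)
X, y = make_classification(n_samples=1000, n_features=20, weights=[0.8, 0.2], random_state=0)

# 80-20 split with shuffling; stratify=y keeps class proportions similar in both subsets
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, shuffle=True, stratify=y, random_state=42
)

# Fit on the training subset only, then score once on the held-out test subset
model = LogisticRegression(max_iter=1000).fit(X_train, y_train)
test_accuracy = accuracy_score(y_test, model.predict(X_test))
print(f"Train size: {len(X_train)}, test size: {len(X_test)}, test accuracy: {test_accuracy:.3f}")
```

Fixing `random_state` makes the split reproducible, which matters when several models must be compared on exactly the same test set.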

The three-way split is particularly valuable in research contexts where model selection is required. As noted in model evaluation literature, "Sometimes the model selection process is referred to as hyperparameter tuning. During the hold-out method of selecting a model, the dataset is separated into three sets — training, validation, and test" [34]. This approach allows researchers to try different algorithms, tune their hyperparameters, and select the best performer based on validation metrics, while maintaining the integrity of the final evaluation through the untouched test set.
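A minimal sketch of this three-way procedure follows, assuming synthetic data and a hypothetical candidate set of two algorithms with two hyperparameter settings each. The 60/20/20 proportions and the candidate list are illustrative choices, not recommendations from the cited work. Refitting the selected configuration on training plus validation data before the single test evaluation is one common convention; keeping the model trained on the training set alone is also defensible.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=1000, n_features=20, random_state=0)

# First carve off a 20% test set that stays untouched until the final evaluation
X_dev, X_test, y_dev, y_test = train_test_split(X, y, test_size=0.2, stratify=y, random_state=1)
# Split the remaining 80% into training (60% of total) and validation (20% of total)
X_train, X_val, y_train, y_val = train_test_split(
    X_dev, y_dev, test_size=0.25, stratify=y_dev, random_state=1
)

# Hypothetical candidate set: two algorithms, two hyperparameter settings each
candidates = {
    "logreg_C=0.1": LogisticRegression(C=0.1, max_iter=1000),
    "logreg_C=1.0": LogisticRegression(C=1.0, max_iter=1000),
    "tree_depth=3": DecisionTreeClassifier(max_depth=3, random_state=0),
    "tree_depth=10": DecisionTreeClassifier(max_depth=10, random_state=0),
}

# Model selection uses only the validation set
val_scores = {}
for name, model in candidates.items():
    model.fit(X_train, y_train)
    val_scores[name] = accuracy_score(y_val, model.predict(X_val))
best_name = max(val_scores, key=val_scores.get)

# Refit the chosen configuration on train + validation, then evaluate once on the test set
best_model = candidates[best_name].fit(X_dev, y_dev)
test_accuracy = accuracy_score(y_test, best_model.predict(X_test))
print(best_name, round(val_scores[best_name], 3), round(test_accuracy, 3))
```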

Advanced Methodologies and Research Applications

Cross-Validation and Specialized Splitting Strategies

For research scenarios where standard hold-out methods may be suboptimal, several advanced techniques provide more robust model assessment:

K-Fold Cross-Validation: The dataset is partitioned into K equal folds, with each fold serving as a validation set once while the remaining K-1 folds form the training set [38]. This approach maximizes data usage for both training and validation, making it particularly valuable for small datasets [39].

Stratified Sampling: Maintains class distribution proportions across splits, crucial for imbalanced datasets commonly encountered in medical and pharmaceutical research [38]. This approach ensures that rare but clinically important events are represented in all data subsets.

Nested Cross-Validation: Implements two layers of cross-validation, an outer loop for performance estimation and an inner loop for model selection, providing nearly unbiased performance estimates when comprehensive hyperparameter tuning is required [39].
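Stratified folds and nested cross-validation compose naturally in scikit-learn: wrapping a `GridSearchCV` (the inner loop) inside `cross_val_score` (the outer loop) re-runs the hyperparameter search independently within each outer training fold. The sketch below assumes a synthetic imbalanced dataset, a support vector machine as the example model, and an arbitrary small parameter grid; balanced accuracy is chosen here because plain accuracy can be misleading under class imbalance.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, StratifiedKFold, cross_val_score
from sklearn.svm import SVC

# Synthetic imbalanced dataset (assumption: roughly 15% minority class)
X, y = make_classification(n_samples=200, n_features=10, weights=[0.85, 0.15], random_state=0)

# Inner loop: hyperparameter search on each outer training set, with stratified folds
inner_cv = StratifiedKFold(n_splits=3, shuffle=True, random_state=1)
# Outer loop: performance estimation on folds never seen by the inner search
outer_cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=2)

param_grid = {"C": [0.1, 1, 10], "gamma": ["scale", 0.01]}
search = GridSearchCV(SVC(), param_grid, cv=inner_cv, scoring="balanced_accuracy")

# cross_val_score clones and refits the whole search within each outer training fold
nested_scores = cross_val_score(search, X, y, cv=outer_cv, scoring="balanced_accuracy")
print(f"Nested CV balanced accuracy: {nested_scores.mean():.3f} +/- {nested_scores.std():.3f}")
```

The outer scores estimate the performance of the whole tuning procedure, not of any single hyperparameter setting.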

These advanced methods address specific limitations of standard hold-out approaches, particularly for small datasets or those with complex structure. As demonstrated in comparative studies, "Having too many or too few samples in the training set had a negative effect on the estimated model performance, suggesting that it is necessary to have a good balance between the sizes of training set and validation set to have a reliable estimation of model performance" [35].

Research Reagent Solutions for Model Validation

Implementing robust model validation requires both methodological rigor and appropriate computational tools. The following table outlines essential "research reagents" for conducting proper hold-out validation studies:

Table 3: Essential Research Reagents for Hold-Out Validation Studies

| Tool Category | Specific Solution | Research Application | Key Functionality |
|---|---|---|---|
| Data Splitting Libraries | Scikit-learn train_test_split [36] [37] | Partitioning datasets into subsets | Random, stratified, and shuffled splitting with controlled random states |
| Cross-Validation Implementations | Scikit-learn KFold, StratifiedKFold [38]; LeavePOut | Robust performance estimation | K-fold, stratified, and leave-P-out cross-validation schemes |
| Model Selection Tools | Scikit-learn GridSearchCV, RandomizedSearchCV | Hyperparameter optimization | Automated search across parameter spaces with integrated validation |
| Performance Metrics | Scikit-learn metrics module [36] | Model evaluation | Accuracy, precision, recall, F1-score, and custom metric implementation |
| Statistical Validation | Custom equivalence testing [40] | Model assessment confidence | Statistical tests for model equivalence to real-world processes |

These computational tools form the essential toolkit for implementing the hold-out methods discussed in this guide. Proper utilization of these resources helps researchers avoid common pitfalls such as data leakage, overfitting, and biased performance estimation [38] [41].
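One common leakage pitfall is fitting preprocessing steps on the full dataset before splitting. A minimal sketch of the usual remedy follows, assuming synthetic data and standardization as the example preprocessing step: placing the scaler inside a scikit-learn `Pipeline` ensures that it is refit on the training portion of every fold.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = make_classification(n_samples=300, n_features=30, random_state=0)

# The scaler is part of the pipeline, so its mean and std are learned from each training fold only.
# Calling StandardScaler().fit(X) on the full dataset before splitting would leak validation-fold information.
pipeline = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(pipeline, X, y, cv=cv, scoring="accuracy")
print(f"Leakage-safe CV accuracy: {scores.mean():.3f}")
```

The same pattern applies to imputation, feature selection, or any other step that learns parameters from the data.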

Hold-out methods provide essential methodologies for developing and evaluating predictive models across scientific domains, particularly in drug development research where model reliability directly impacts decision-making. The simple train-test split offers computational efficiency and implementation simplicity suitable for preliminary investigations and large datasets. In contrast, the train-validation-test split provides a more rigorous framework for model selection and hyperparameter optimization while maintaining an unbiased final evaluation.

Empirical research has demonstrated that dataset characteristics—particularly size and distribution—significantly influence the effectiveness of both approaches [35]. While the three-way split generally provides more reliable model assessment, especially for complex models requiring extensive tuning, researchers must ensure adequate sample sizes in each partition to obtain meaningful results. For small datasets, enhanced methods such as cross-validation may be necessary to overcome limitations of standard hold-out approaches.

The fundamental principle underlying all these methodologies remains consistent: proper separation of data used for model development from data used for model evaluation provides the most realistic estimate of how a predictive system will perform on future observations. By selecting appropriate hold-out strategies based on specific research contexts and implementing them with careful attention to potential pitfalls, researchers can develop more reliable, generalizable models that advance scientific discovery and application.

In the empirical sciences, particularly in drug development and biomarker discovery, the ability to validate predictive models against experimental data is paramount. Cross-validation is a cornerstone statistical technique for assessing how the results of a predictive model will generalize to an independent dataset, thereby providing a crucial bridge between computational predictions and experimental validation. This resampling procedure evaluates model performance by repeatedly partitioning the original sample into a training set, used to fit the model, and a test set, used to evaluate it. In research that compares model predictions with experimental data, cross-validation provides a robust framework for estimating model performance while mitigating overfitting to the peculiarities of a specific dataset [42] [43].

The fundamental principle of cross-validation involves systematically splitting the dataset, training the model on subsets of the data, and validating it on the remaining data. This process is repeated multiple times, with the results aggregated to produce a single, more reliable estimation of model performance [42]. For researchers and scientists, this methodology is indispensable for model selection, hyperparameter tuning, and providing evidence that a model's predictions are likely to hold true in subsequent experimental validation. This guide provides a comprehensive comparison of two fundamental cross-validation strategies: K-Fold Cross-Validation and Leave-One-Out Cross-Validation, with a specific focus on their implementation and interpretation within experimental research contexts.

Understanding the Core Methods

K-Fold Cross-Validation

K-Fold Cross-Validation is a resampling procedure that splits the dataset into k equal-sized, or approximately equal-sized, folds. The model is trained k times, each time using k-1 folds for training and the remaining single fold as a validation set. This process ensures that each data point gets to be in the validation set exactly once [42] [43]. The overall performance is then averaged across all k iterations, providing an estimate of the model's predictive performance.
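The k-fold procedure can be written as an explicit loop over scikit-learn's `KFold` splitter. This sketch assumes a synthetic regression dataset, ridge regression as the placeholder model, and mean squared error as the metric; it also counts how often each observation is used for validation to confirm that every point is held out exactly once.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import KFold

X, y = make_regression(n_samples=100, n_features=5, noise=10.0, random_state=0)

k = 10
kf = KFold(n_splits=k, shuffle=True, random_state=0)
fold_mse = []
times_validated = np.zeros(len(X), dtype=int)  # how often each sample lands in a validation fold

for train_idx, val_idx in kf.split(X):
    model = Ridge(alpha=1.0).fit(X[train_idx], y[train_idx])  # train on the k-1 remaining folds
    fold_mse.append(mean_squared_error(y[val_idx], model.predict(X[val_idx])))  # score the held-out fold
    times_validated[val_idx] += 1

# Average the k fold scores into a single performance estimate
print(f"Mean MSE over {k} folds: {np.mean(fold_mse):.2f}")
print("Every sample validated exactly once:", bool(np.all(times_validated == 1)))
```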

A value of k=10 is very common in applied machine learning, as this value has been found through experimentation to generally result in a model skill estimate with low bias and modest variance [43]. With smaller datasets, however, a lower k (such as 5) might be preferred so that each validation fold contains enough observations to yield a stable score; the trade-off is that each training subset then holds a smaller share of the data (80% rather than 90%). The key advantage of K-Fold Cross-Validation is that it often provides a good balance between computational efficiency and reliable performance estimation, making it suitable for a wide range of dataset sizes, particularly small to medium-sized datasets [42].

Leave-One-Out Cross-Validation (LOOCV)

Leave-One-Out Cross-Validation is an exhaustive resampling method that represents the extreme case of k-fold cross-validation where k equals the number of observations (n) in the dataset. In each iteration, a single observation is used as the validation set, and the remaining n-1 observations constitute the training set. This process is repeated n times such that each observation in the dataset is used once as the validation data [42].

LOOCV is particularly advantageous with very small datasets where maximizing the training data is crucial. Since each training set uses n-1 observations, the model is trained on almost the entire dataset each time, resulting in low bias for the performance estimate [42]. However, this method can be computationally expensive for large datasets, as it requires building n models. Furthermore, because each test set contains only one observation, the validation score can have high variance, especially if outliers are present [42] [44]. The method is most beneficial when dealing with limited data, such as in preliminary studies where sample sizes are constrained by cost or availability of experimental materials.
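A minimal LOOCV sketch using scikit-learn's `LeaveOneOut` follows, assuming a hypothetical small dataset of 25 synthetic observations and linear regression as the model. Because each validation fold holds a single observation, a per-point loss such as squared error is used; metrics such as R² are not defined on a single sample.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import LeaveOneOut, cross_val_score

# Small dataset, as in a preliminary study constrained by sample availability (assumption: n = 25)
X, y = make_regression(n_samples=25, n_features=3, noise=5.0, random_state=0)

loo = LeaveOneOut()
print("Number of model fits:", loo.get_n_splits(X))  # equals n

# Each fold scores one held-out observation; scikit-learn reports losses as negative scores
scores = cross_val_score(LinearRegression(), X, y, cv=loo, scoring="neg_mean_squared_error")
per_point_errors = -scores
loocv_mse = per_point_errors.mean()
print(f"LOOCV MSE: {loocv_mse:.2f}; per-point squared errors range "
      f"{per_point_errors.min():.2f} to {per_point_errors.max():.2f}")
```

The spread of the per-point errors gives a direct view of the variance issue noted above: a single outlying observation can dominate its own fold.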

Comparative Analysis: K-Fold vs. LOOCV

Technical Comparison

The choice between K-Fold and LOOCV involves important trade-offs between bias, variance, and computational efficiency. The following table summarizes the key technical differences between these two approaches:

Table 1: Technical comparison between K-Fold Cross-Validation and Leave-One-Out Cross-Validation

| Feature | K-Fold Cross-Validation | Leave-One-Out Cross-Validation (LOOCV) |
|---|---|---|
| Data Split | Dataset divided into k equal folds | Each single observation serves as a test set |
| Training & Testing | Model trained and tested k times | Model trained and tested n times (n = sample size) |
| Bias | Lower bias than holdout method, but higher than LOOCV | Very low bias, as training uses nearly all data |
| Variance | Moderate variance (depends on k) | High variance due to testing on single points |
| Computational Cost | Lower (requires k model trainings) | Higher (requires n model trainings) |
| Best Use Case | Small to medium datasets where accurate estimation is important | Very small datasets where maximizing training data is critical |

Performance Characteristics

The performance characteristics of these methods diverge significantly based on dataset size and structure. K-Fold Cross-Validation with k=5 or k=10 typically provides a good compromise between bias and variance. The bias decreases as k increases, but the variance may increase accordingly. With LOOCV, the estimator is approximately unbiased for the true performance, but it can have high variance because the training sets are so similar to each other [42] [43].
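A short worked calculation, assuming a hypothetical dataset of n = 100 observations that divides evenly into k folds, makes these trends concrete. Each k-fold training set contains n(k-1)/k observations, and any two distinct training sets share n(k-2)/k of them. Larger k therefore gives training sets closer to the full dataset (lower bias) but also more overlap between the models being averaged, which is the usual explanation for LOOCV's more highly correlated fold estimates.

```latex
\begin{aligned}
n_{\text{train}} &= n\,\frac{k-1}{k}, \qquad n_{\text{overlap}} = n\,\frac{k-2}{k} \\[4pt]
k = 5:&\quad n_{\text{train}} = 80,\; n_{\text{overlap}} = 60,\; 5 \text{ fits} \\
k = 10:&\quad n_{\text{train}} = 90,\; n_{\text{overlap}} = 80,\; 10 \text{ fits} \\
k = n = 100\ (\text{LOOCV}):&\quad n_{\text{train}} = 99,\; n_{\text{overlap}} = 98,\; 100 \text{ fits}
\end{aligned}
```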

For structured data, such as temporal or spatial data, standard LOOCV might not be suitable for evaluating predictive performance. In such cases, correlation between training and test observations can distort the estimated prediction error, often making it overly optimistic. Leave-group-out cross-validation (LGOCV), where groups of correlated data are left out together, has emerged as a valuable alternative for measuring predictive performance in structured models [45]. This is particularly relevant in experimental designs where multiple measurements come from the same biological replicate or where spatial correlation exists.
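Scikit-learn's group-aware splitters offer one simple way to implement the leave-group-out idea when groups are known in advance, for example biological replicates. In this sketch the replicate labels are hypothetical (12 replicates with 10 measurements each) and are attached to independently generated synthetic data, so the code illustrates the splitting mechanics rather than the effect of correlation; the cited LGOCV work may define groups differently.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GroupKFold, LeaveOneGroupOut, cross_val_score

X, y = make_regression(n_samples=120, n_features=6, noise=8.0, random_state=0)
# Hypothetical design: 12 biological replicates, each contributing 10 measurements
groups = np.repeat(np.arange(12), 10)

# Leave-one-group-out: every measurement from a replicate is held out together
logo = LeaveOneGroupOut()
logo_scores = cross_val_score(Ridge(), X, y, groups=groups, cv=logo,
                              scoring="neg_mean_squared_error")

# GroupKFold: a cheaper variant that holds out several whole replicates per fold
gkf = GroupKFold(n_splits=4)
gkf_scores = cross_val_score(Ridge(), X, y, groups=groups, cv=gkf,
                             scoring="neg_mean_squared_error")

# Sanity check: no replicate appears in both the training and validation indices of any fold
for train_idx, val_idx in gkf.split(X, y, groups):
    assert set(groups[train_idx]).isdisjoint(groups[val_idx])

print(len(logo_scores), len(gkf_scores))
```

`LeaveOneGroupOut` is the group-level analogue of LOOCV (12 fits here), while `GroupKFold` trades some training data for fewer fits, mirroring the K-Fold vs. LOOCV trade-off described above.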

Recent empirical studies on traditional experimental designs have provided evidence that LOOCV can be useful in small, structured datasets, while more general k-fold CV may also be competitive, though its performance is uneven across different scenarios [46].

Experimental Protocols and Implementation

Standardized K-Fold Cross-Validation Protocol

Implementing K-Fold Cross-Validation requires careful attention to data partitioning and model evaluation. The following protocol provides a standardized approach for experimental researchers:

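- Dataset Preparation: Begin with a complete, cleaned dataset. For drug discovery applications, this might include features such as molecular descriptors, assay results, or omics measurements, with appropriate experimental outcomes as targets. Preprocessing steps that learn from the data (such as scaling or imputation) should be fit within each training fold rather than on the full dataset, to avoid leakage.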

- Fold Generation: Randomly shuffle the dataset and split it into k folds. For stratified k-fold (recommended for classification problems with imbalanced classes), ensure each fold preserves the same class distribution as the full dataset [42].

- Iterative Training and Validation: For each fold i (where i ranges from 1 to k):

- Use fold i as the validation set

- Use the remaining k-1 folds as the training set

- Train the model on the training set