Accelerating Drug Discovery: GPT-Based Molecular Generation Enhanced by Active Learning



Abstract

This article explores the transformative integration of Generative Pre-trained Transformer (GPT) models with active learning (AL) methodologies for de novo molecular design. Aimed at researchers and drug development professionals, it provides a comprehensive analysis of how this synergy addresses critical challenges in exploring vast chemical spaces. The content covers the foundational principles of GPT architectures for processing chemical languages like SMILES and SELFIES, details innovative methodological frameworks that combine generative AI with iterative experimental feedback, and discusses strategies for optimizing model performance and overcoming data scarcity. Furthermore, the article presents a rigorous validation of these approaches through comparative benchmarking, case studies on real-world targets, and an outlook on their potential to reshape preclinical drug discovery pipelines by efficiently generating novel, potent, and synthesizable drug candidates.

The Foundations of GPT and Active Learning in Chemical Space Exploration

The application of Generative Pre-trained Transformer (GPT)-like architectures to molecular representation marks a transformative advance in chemical informatics and drug discovery. These models learn intricate molecular patterns from large-scale chemical data, enabling accurate prediction of properties, reactivity, and biological activity. By treating chemical notations as a specialized language, these architectures bridge the gap between natural language processing and molecular sciences, creating powerful tools for inverse molecular design where desired properties guide the generation of novel molecular structures.

Current GPT-based Molecular Models

The table below summarizes key GPT-like architectures developed for molecular representation and generation, highlighting their unique contributions and specialized applications.

Table 1: Overview of GPT-based Molecular Models

| Model Name | Core Architecture | Molecular Representation | Primary Application Domain | Key Innovations |
|---|---|---|---|---|
| KnowMol [1] | Multi-modal Mol-LLM | SELFIES (1D) + Hierarchical Graph (2D) | General molecular understanding & generation | Multi-level chemical knowledge; replaces SMILES with SELFIES; specialized vocabulary |
| Compound-GPT [2] | GPT-based chemical language model | Canonical SMILES | Reactivity & toxicity prediction | Predicts hydroxyl radical reaction constants & Ames mutagenicity; rapid screening (0.82 ms/prediction) |
| 3DSMILES-GPT [3] | Token-only LLM | Combined 2D & 3D linguistic expressions | 3D molecular generation in protein pockets | Encodes 3D coordinates as tokens; integrates protein pocket information |
| Generative AI with Active Learning [4] | Variational Autoencoder (VAE) + Active Learning (not GPT-based; included for its active learning framework) | SMILES | Target-specific drug design | Nested active learning cycles; integrates chemoinformatics & molecular modeling predictors |

Quantitative Performance Comparison

The performance of molecular GPT architectures varies significantly across different tasks, from property prediction to molecular generation. The following table provides a quantitative comparison of model capabilities based on published benchmarks.

Table 2: Performance Metrics of Molecular GPT Architectures

| Model / Task | Property Prediction Accuracy | Generation Quality | Generation Speed | Key Metrics |
|---|---|---|---|---|
| KnowMol [1] | Superior across 7 downstream tasks | State-of-the-art in understanding & generation | Not specified | Outperforms InstructMol, HIGHT, and UniMoT |
| Compound-GPT [2] | R²: 0.74 (RCH), Accuracy: 0.83 (Ames) | Not primary focus | 0.82 ms per sample | RMSE: 0.30 (RCH); AUC: 0.90 (Ames) |
| 3DSMILES-GPT [3] | Binding affinity assessed by Vina docking | 33% QED enhancement | ~0.45 seconds per generation | State-of-the-art SAS; outperforms in 8/10 benchmark metrics |
| GM with Active Learning [4] | Excellent docking scores | Diverse, novel scaffolds with high predicted synthetic accessibility | Not specified | 8/9 synthesized molecules showed CDK2 activity, one with nanomolar potency |

Experimental Protocols for Molecular GPT Implementation

Protocol: Pre-training a Molecular GPT Model

Purpose: To create a foundational molecular language model capable of understanding chemical structures and properties. Materials: Hardware (High-performance GPUs), Software (Python, PyTorch/TensorFlow, RDKit), Data Source (Large-scale molecular dataset e.g., OMol25 [5] [6] [7] or PubChem).

Data Preparation:

- Curate a dataset of molecular structures (e.g., 267,381 compounds for Compound-GPT [2]).

- Represent molecules using standardized notations: SMILES [2], SELFIES [1], or combined 2D/3D descriptors [3].

- Implement specialized tokenization (e.g., for SELFIES in KnowMol [1]) to avoid modality confusion with natural language.

- Split data into training, validation, and test sets (e.g., 80%/10%/10%).
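The deduplication and 80%/10%/10% split above can be sketched in plain Python. This is a minimal sketch, assuming the input strings have already been canonicalized (for example with RDKit, whose `Chem.MolToSmiles` emits canonical SMILES by default) so that exact-string deduplication removes duplicate molecules; the helper name `split_dataset` is hypothetical.

```python
import random


def split_dataset(smiles, train=0.8, valid=0.1, seed=0):
    """Deduplicate canonical SMILES and split them into train/valid/test.

    Whatever remains after the train and validation fractions becomes the
    test set, so the three subsets always cover the unique molecules exactly.
    """
    unique = sorted(set(smiles))      # drop duplicates; sort for reproducibility
    rng = random.Random(seed)         # fixed seed so the split can be reproduced
    rng.shuffle(unique)
    n_train = int(len(unique) * train)
    n_valid = int(len(unique) * valid)
    return (unique[:n_train],
            unique[n_train:n_train + n_valid],
            unique[n_train + n_valid:])


# 100 unique strings plus two duplicates ("CO" and "CCO" appear twice).
smiles = [f"C{'C' * i}O" for i in range(100)] + ["CO", "CCO"]
train_set, valid_set, test_set = split_dataset(smiles)
print(len(train_set), len(valid_set), len(test_set))  # 80 10 10
```

A random split is the simplest option; scaffold-based splits are a common, stricter alternative when the goal is to test generalization to new chemotypes.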

Model Architecture Configuration:

Pre-training Procedure:

- Objective: Next-token prediction (standard language modeling) on the molecular sequence data.

- Employ a cross-entropy loss function.

- Train for a specified number of epochs until validation loss converges.

- Monitor reconstruction accuracy (e.g., BLEU score, multi-class accuracy [2]).
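The next-token objective and its cross-entropy loss can be made concrete with a small worked example. In this dependency-free sketch, `probs[t]` stands in for the model's softmax output after reading the first t + 1 tokens (a hypothetical data layout); in PyTorch the same quantity is usually computed with `torch.nn.functional.cross_entropy` on logits shifted by one position. Perplexity, the exponential of the mean loss, is a common companion metric.

```python
import math


def next_token_cross_entropy(probs, tokens):
    """Mean cross-entropy of next-token prediction.

    probs[t] maps token -> probability, predicted after reading
    tokens[: t + 1]; the target for that step is tokens[t + 1].
    """
    losses = [-math.log(probs[t][tokens[t + 1]]) for t in range(len(tokens) - 1)]
    return sum(losses) / len(losses)


def perplexity(mean_loss):
    """Perplexity is the exponential of the mean per-token cross-entropy."""
    return math.exp(mean_loss)


# Toy example: the model assigns probability 0.5 to every correct next token.
tokens = ["<bos>", "C", "C", "O"]
probs = [{"C": 0.5, "O": 0.5}] * 3
loss = next_token_cross_entropy(probs, tokens)
print(round(loss, 4), round(perplexity(loss), 4))  # 0.6931 2.0
```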

Protocol: Fine-tuning for Property Prediction

Purpose: To adapt a pre-trained molecular GPT for specific property prediction tasks (e.g., reactivity, toxicity). Materials: Pre-trained molecular GPT model, Task-specific labeled data (e.g., RCH constants, Ames mutagenicity [2]).

Task-Specific Data Curation:

- Obtain a labeled dataset for the target property.

- Ensure the data falls within the model's applicability domain.

Model Adaptation:

- Add a task-specific prediction head (regression or classification) on top of the pre-trained model.

- Optionally, employ a Q-Former or adapter module to bridge modalities if needed [1].

Fine-tuning Process:

- Initialize with pre-trained weights.

- Use a lower learning rate compared to pre-training.

- Train the entire model or only the top layers on the labeled data.

- For Compound-GPT [2], fine-tuning achieved an R² of 0.74 for RCH prediction and 0.83 accuracy for Ames mutagenicity.
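To compare a fine-tuned model against figures like these, the metrics can be computed directly. The sketch below implements R², RMSE, thresholded accuracy, and a pairwise ROC AUC in plain Python for illustration; in practice scikit-learn's `r2_score`, `mean_squared_error`, `accuracy_score`, and `roc_auc_score` provide the same quantities.

```python
import math


def r2_and_rmse(y_true, y_pred):
    """Coefficient of determination and root-mean-square error (regression)."""
    n = len(y_true)
    mean = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot, math.sqrt(ss_res / n)


def accuracy(labels, scores, threshold=0.5):
    """Fraction of correct binary predictions after thresholding the scores."""
    preds = [int(s >= threshold) for s in scores]
    return sum(p == y for p, y in zip(preds, labels)) / len(labels)


def roc_auc(labels, scores):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


print(r2_and_rmse([1, 2, 3, 4], [1, 2, 3, 5]))  # (0.8, 0.5)
```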

Protocol: Active Learning for Molecular Generation

Purpose: To generate novel, optimal molecules for a specific target by iteratively refining a generative model using oracle feedback [4]. Materials: Pre-trained generative model (e.g., VAE), Target protein structure, Cheminformatics oracles (SA, drug-likeness), Physics-based oracles (docking scores).

Initialization:

- Fine-tune a generatively pre-trained model (e.g., VAE on SMILES) on an initial target-specific dataset [4].

Inner Active Learning Cycle (Cheminformatics Optimization):

- Generation: Sample new molecules from the model.

- Evaluation: Filter molecules using cheminformatics oracles (e.g., synthetic accessibility, drug-likeness, similarity to known actives).

- Fine-tuning: Add molecules meeting thresholds to a temporal set and fine-tune the model on this set.

- Repeat for a set number of iterations.

Outer Active Learning Cycle (Affinity Optimization):

- Evaluation: Subject molecules accumulated from inner cycles to molecular docking.

- Fine-tuning: Transfer molecules with favorable docking scores to a permanent set and fine-tune the model on this set.

- Iteration: Proceed with further nested inner cycles, now assessing similarity against the permanent set.
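The nested inner and outer cycles can be summarized as a control-flow sketch. Everything here is an assumption for illustration: the five callables (`generate`, `fingerprint`, `chem_ok`, `dock`, `fine_tune`) are hypothetical placeholders for the generator, a fingerprint function returning a set of on-bits, the cheminformatics oracles, a docking engine, and a fine-tuning routine. The similarity check is written as a novelty filter (rejecting near-duplicates of the reference set), which is one possible reading of the similarity criterion, and the thresholds are arbitrary.

```python
def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints stored as sets of on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def nested_active_learning(generate, fingerprint, chem_ok, dock, fine_tune,
                           known_actives, n_outer=2, n_inner=3,
                           max_sim=0.9, dock_cutoff=-8.0):
    """Hypothetical sketch of nested inner (cheminformatics) and outer (docking) cycles."""
    permanent = []
    for _ in range(n_outer):
        temporal = []
        # Similarity reference: known actives at first, then the permanent set.
        reference = [fingerprint(s) for s in (permanent or known_actives)]
        for _ in range(n_inner):
            for smi in generate():
                if smi in temporal or smi in permanent or not chem_ok(smi):
                    continue
                fp = fingerprint(smi)
                # Assumed novelty filter: skip near-duplicates of the reference set.
                if all(tanimoto(fp, ref) < max_sim for ref in reference):
                    temporal.append(smi)
            fine_tune(temporal)   # inner cycle: bias the model toward chem-valid hits
        # Outer cycle: dock everything accumulated during the inner cycles.
        permanent += [s for s in temporal if dock(s) <= dock_cutoff]
        fine_tune(permanent)      # outer cycle: bias the model toward good binders
    return permanent


# Stub oracles: character-set "fingerprint", length-based "docking score".
pool = ["CCO", "CCN", "CCCC", "c1ccccc1", "CO"]
calls = []
result = nested_active_learning(
    generate=lambda: pool,
    fingerprint=set,
    chem_ok=lambda s: len(s) <= 4,
    dock=lambda s: -2.5 * len(s),
    fine_tune=lambda mols: calls.append(list(mols)),
    known_actives=["CC"],
    dock_cutoff=-7.0,
)
print(result)
```

Stub functions like these are enough to exercise the control flow before real oracles are plugged in.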

Candidate Selection:

- Apply stringent filtration to the final permanent set.

- Use advanced molecular modeling (e.g., Monte Carlo simulations with PELE, absolute binding free energy calculations) for in-depth evaluation [4].

- Select top candidates for synthesis and experimental validation.

Workflow Visualization

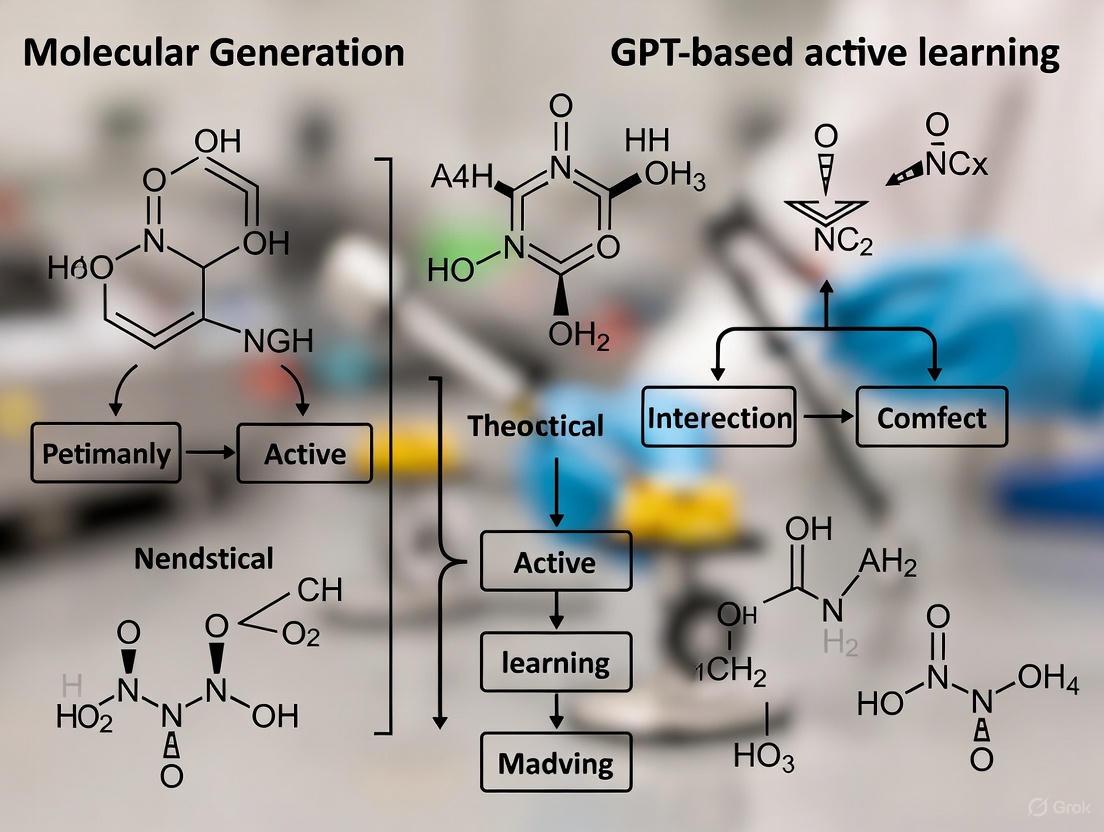

Molecular GPT Workflow with Active Learning

Molecular Representation Strategies

Molecular Representation Strategies for GPT Models

Essential Research Reagent Solutions

Table 3: Key Research Reagents and Computational Tools

| Reagent/Tool | Type | Primary Function | Example Use Case |
|---|---|---|---|
| SMILES [4] [2] [3] | Molecular Representation | Text-based encoding of molecular structure | Standard representation for training chemical language models |
| SELFIES [1] | Molecular Representation | Robust, syntactically valid molecular string representation | Replaces SMILES in KnowMol to avoid invalid structures |
| Synthetic Accessibility (SA) Score [4] [3] | Cheminformatics Oracle | Predicts ease of molecule synthesis | Filters generated molecules in active learning cycles |
| Docking Score [4] | Physics-based Oracle | Predicts ligand-protein binding affinity | Primary reward signal in outer active learning cycle |
| Quantum Mechanical Dataset (e.g., OMol25) [5] [6] [7] | Training Data | Provides high-accuracy molecular energies and properties | Pre-training neural network potentials and foundation models |
| Universal Model for Atoms (UMA) [5] [7] | Neural Network Potential | Fast, accurate energy and force predictions | Reward model for guided molecular generation with Adjoint Sampling |

In computational drug discovery, Generative Pre-trained Transformer (GPT) models enable the de novo design of novel molecular structures. Their efficacy depends fundamentally on the chosen molecular representation, which serves as the "language" through which the model reads and writes chemical structures. The Simplified Molecular Input Line Entry System (SMILES) and Self-Referencing Embedded Strings (SELFIES) have emerged as the two predominant string-based representations for this purpose. This section details application notes and experimental protocols for using these chemical languages within GPT-based molecular generation integrated with active learning. String representations let researchers frame molecular generation as autoregressive sequence generation, analogous to text generation in natural language processing. Combined with active learning, they support a self-improving cycle in which AI-generated molecules are computationally evaluated and the most informative candidates are used to refine the model, accelerating the exploration of chemical space for drug design [8] [4].

Chemical Language Representations: SMILES vs. SELFIES

SMILES (Simplified Molecular Input Line Entry System)

SMILES is a line notation that uses ASCII strings to represent the structure of chemical molecules. Atoms are represented by their atomic symbols; bonds are denoted by -, =, and # for single, double, and triple bonds respectively (single bonds are usually left implicit); branches are enclosed in parentheses; and ring closures are marked with matching digits. A significant limitation of SMILES is its lack of inherent robustness: a large proportion of randomly generated or mutated SMILES strings do not correspond to valid chemical structures because of syntactic or semantic errors. This complicates their use in generative models, often requiring complex constraints and post-hoc validation [9] [10].
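The fragility described above is easy to demonstrate. The sketch below checks only two syntactic rules of SMILES, balanced branch parentheses and paired ring-closure labels, and deliberately ignores valence and aromaticity; a production pipeline would instead rely on RDKit, whose `Chem.MolFromSmiles` returns `None` for strings it cannot parse or sanitize. The function name `smiles_syntax_ok` is hypothetical.

```python
import re


def smiles_syntax_ok(smiles):
    """Check balanced parentheses and paired ring-closure labels only.

    Valence, aromaticity, and element symbols are NOT validated.
    """
    # Collapse bracket atoms such as [NH4+] or [13C] so their digits are not
    # mistaken for ring-closure labels.
    body = re.sub(r"\[[^\]]*\]", "A", smiles)
    depth = 0
    open_rings = set()
    for token in re.findall(r"%\d{2}|\d|.", body):
        if token == "(":
            depth += 1
        elif token == ")":
            depth -= 1
            if depth < 0:  # closing a branch that was never opened
                return False
        elif token[0] == "%" or token.isdigit():
            open_rings ^= {token}  # first occurrence opens a ring, second closes it
    return depth == 0 and not open_rings


print(smiles_syntax_ok("c1ccccc1"))  # True: benzene
print(smiles_syntax_ok("c1ccccc"))   # False: one deleted character breaks the ring
```

A single-character mutation is enough to turn a valid string into an invalid one, which is why random edits to SMILES so often fail; SELFIES is designed so that such edits still decode to a valid molecule.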

SELFIES (Self-referencing Embedded String)

SELFIES was developed specifically to overcome the robustness issues of SMILES. Its key innovation is a formal grammar, derived from a Chomsky type-2 (context-free) grammar, that guarantees 100% syntactic and semantic validity: every possible SELFIES string corresponds to a molecule that obeys basic chemical valency rules. This is achieved by localizing non-local features (such as rings and branches) and by using a derivation state that acts as a memory to track and enforce valence constraints during string-to-graph compilation. This robustness makes SELFIES particularly suitable for generative AI, as it simplifies model architectures and training by eliminating invalid outputs [10] [11].

Performance Comparison in Molecular Generation

The choice of representation significantly impacts the performance and output of GPT models in molecular generation tasks. The following table summarizes key quantitative comparisons as established in recent literature.

Table 1: Performance comparison of SMILES and SELFIES in molecular generation tasks.

| Metric | SMILES | SELFIES | Context & Notes |
|---|---|---|---|
| Representational Validity | ~5-60% (model-dependent) [11] | 100% [10] [11] | Guaranteed by SELFIES formal grammar. |
| Latent Space Density (VAE) | Sparse, with disconnected valid regions [11] | Denser by two orders of magnitude [9] | Enables more efficient exploration and optimization. |
| Novelty & Diversity | Can be high but constrained by validity [9] | Enabled by robust exploration (e.g., STONED algorithm) [11] | SELFIES allows for unbiased combinatorial generation. |
| Model Dependency | Requires careful tuning to minimize invalid outputs [9] | Simplified training; robust to random mutations [11] | Enables simpler architectures like pure transformers. |
| Benchmark Performance (e.g., QED, Binding Affinity) | Competitive but can be limited by validity rate [9]; e.g., 3DSMILES-GPT, a SMILES-based model, reports a 33% QED enhancement [3] | Avoids validity-related losses by construction [10] [11] | Reported gains depend on model and task, not on representation alone. |

Experimental Protocols for GPT-Driven Molecular Generation

This section provides detailed methodologies for implementing GPT models using SMILES and SELFIES representations, integrated with an active learning framework.

Protocol 1: Building a Foundational GPT Model for Molecular Generation

Objective: To pre-train a GPT model on a large-scale dataset of drug-like molecules for general molecular understanding and generation.

Materials & Reagents:

- Training Dataset: Large-scale chemical database (e.g., ZINC, PubChem) containing tens of millions of drug-like molecules represented in both SMILES and SELFIES formats [3] [9].

- Computing Infrastructure: High-performance computing cluster with multiple GPUs (e.g., NVIDIA A100/V100) for transformer model training.

- Software: Python 3.8+, PyTorch or TensorFlow, Hugging Face Transformers library, and specialized cheminformatics libraries (e.g., RDKit, SELFIES).

Procedure:

- Data Preprocessing & Tokenization:

a. For SMILES: Standardize molecules using RDKit and generate canonical SMILES strings.

b. For SELFIES: Convert canonical SMILES to SELFIES strings using the `selfies` Python library.

c. Tokenization: Apply a suitable tokenization algorithm. Byte Pair Encoding (BPE) is common for SMILES. For SELFIES, the natural bracket-delimited tokens can be used directly, or a method such as Atom Pair Encoding (APE), which has been shown to preserve contextual relationships better than BPE in some benchmarks [9].

- Model Architecture & Training:

a. Implement a Transformer decoder architecture (e.g., GPT-2) as the core model.

b. Pre-train the model with a causal language modeling objective, i.e., predicting the next token in the sequence.

c. Train on the large-scale dataset until the loss converges. This teaches the model the fundamental "grammar" and "vocabulary" of the chemical language [3].
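Atom-level tokenization, a common alternative or precursor to BPE, can be sketched with regular expressions. The SMILES pattern below follows the widely used regex popularized by the Molecular Transformer work, and SELFIES tokens are simply the bracketed symbols (the `selfies` library also provides `split_selfies` for this). Both patterns are illustrative assumptions, not the tokenizers used in the cited studies.

```python
import re

# Atom-level SMILES pattern: bracket atoms, two-letter halogens, bonds, rings.
SMILES_REGEX = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)
# Every SELFIES symbol is enclosed in square brackets.
SELFIES_REGEX = re.compile(r"\[[^\]]*\]")


def tokenize_smiles(smiles):
    tokens = SMILES_REGEX.findall(smiles)
    if "".join(tokens) != smiles:  # some character is not covered by the pattern
        raise ValueError(f"cannot tokenize SMILES: {smiles!r}")
    return tokens


def tokenize_selfies(selfies):
    tokens = SELFIES_REGEX.findall(selfies)
    if "".join(tokens) != selfies:
        raise ValueError(f"cannot tokenize SELFIES: {selfies!r}")
    return tokens


print(tokenize_smiles("CC(=O)Cl"))  # ['C', 'C', '(', '=', 'O', ')', 'Cl']
print(tokenize_selfies("[C][=C][Ring1][Branch1]"))
```

The round-trip check (joining tokens must reproduce the input) catches characters the vocabulary does not cover instead of silently dropping them.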

Protocol 2: Target-Specific Fine-Tuning with Structural Data

Objective: To adapt the pre-trained model to generate molecules for a specific protein target by fine-tuning on protein-ligand complex data.

Materials & Reagents:

- Fine-Tuning Dataset: A curated dataset of protein-pocket and ligand structural pairs (e.g., from PDBbind). Ligands should be represented in 2D (SMILES/SELFIES) and 3D (e.g., tokenized 3D coordinates) [3].

- Protein Encoder: A detachable neural network module (e.g., Graph Neural Network) to encode the protein pocket's structural features.

Procedure:

- Data Integration:

a. Extract the 3D coordinates of atoms from the binding pocket and the corresponding ligand.

b. Tokenize the 3D coordinates, for example, by discretizing them and representing them as symbolic tokens (e.g., `x_12.34`, `y_5.67`).

c. Create a combined sequence input for the model that interleaves tokenized protein pocket information with the ligand's SELFIES (or SMILES) string [3].

- Fine-Tuning:

a. Initialize the model with weights from the pre-trained model (Protocol 1).

b. Add the protein encoder module to process pocket inputs.

c. Fine-tune the entire model on the paired protein-ligand sequences, allowing it to learn the relationship between target structure and ligand characteristics.
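The coordinate tokenization and sequence interleaving can be sketched as follows. The coordinate token format (`x_12.34`-style, two-decimal resolution) follows the example above; the special tokens `<pocket>`, `<ligand>`, `<coords>`, and `<eos>` and the layout (2D ligand string first, then one coordinate triple per ligand heavy atom in string order) are hypothetical choices, not the published 3DSMILES-GPT format.

```python
def coord_tokens(xyz, decimals=2):
    """Turn one (x, y, z) position in angstroms into three symbolic tokens."""
    return [f"{axis}_{value:.{decimals}f}" for axis, value in zip("xyz", xyz)]


def build_sequence(pocket_atoms, ligand_2d_tokens, ligand_xyz, decimals=2):
    """Interleave pocket atoms, the ligand's 2D tokens, and ligand coordinates.

    pocket_atoms: list of (element, (x, y, z)) for binding-pocket atoms.
    ligand_2d_tokens: tokenized SMILES/SELFIES string of the ligand.
    ligand_xyz: one (x, y, z) per ligand heavy atom, in string order.
    """
    seq = ["<pocket>"]
    for element, xyz in pocket_atoms:
        seq += [element, *coord_tokens(xyz, decimals)]
    seq += ["<ligand>", *ligand_2d_tokens, "<coords>"]
    for xyz in ligand_xyz:
        seq += coord_tokens(xyz, decimals)
    return seq + ["<eos>"]


print(coord_tokens((12.341, 5.667, -0.5)))  # ['x_12.34', 'y_5.67', 'z_-0.50']
print(build_sequence([("N", (1.0, 2.0, 3.0))], ["C", "O"],
                     [(0.0, 0.0, 0.0), (1.2, 0.0, 0.0)]))
```

Coarser resolution (fewer decimals) shrinks the coordinate vocabulary at the cost of geometric precision, which is the main design knob in schemes of this kind.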

Protocol 3: Active Learning-Driven Molecular Optimization

Objective: To iteratively improve the generated molecules' properties (e.g., binding affinity, drug-likeness) using a physics-based active learning framework.

Materials & Reagents:

- Oracle Functions: Computational predictors for molecular properties. These can be:

a. Cheminformatics Oracles: For calculating the Quantitative Estimate of Drug-likeness (QED) and Synthetic Accessibility Score (SAscore) [8] [4].

b. Physics-Based Oracles: Molecular docking programs (e.g., AutoDock Vina) for estimating binding affinity [4].

- Active Learning Framework: A workflow manager to orchestrate the generation-evaluation-fine-tuning cycle.

Procedure:

- Initial Generation: Use the fine-tuned model from Protocol 2 to generate an initial library of N molecules (e.g., N = 10,000).

- Inner AL Cycle (Chemical Property Optimization):

a. Evaluation: Filter generated molecules for chemical validity (automatic with SELFIES) and evaluate them using cheminformatics oracles (QED, SAscore).

b. Selection: Select the top M molecules that meet pre-defined thresholds for drug-likeness and synthetic accessibility.

c. Fine-Tuning: Use this high-quality, target-specific set to further fine-tune the GPT model. This biases future generation towards more drug-like and synthesizable structures [4].

d. Iterate steps a-c for a fixed number of cycles.

- Outer AL Cycle (Binding Affinity Optimization):

a. Evaluation: Take molecules accumulated from the inner cycles and evaluate them with the physics-based oracle (molecular docking).

b. Selection: Select the top K molecules with the best docking scores.

c. Fine-Tuning: Use this high-affinity set for a final round of model fine-tuning, directly optimizing for the primary objective of strong target binding [4].

- Candidate Selection & Validation: The final output molecules can be prioritized based on a combination of all scores and then undergo more rigorous validation, either computational (e.g., absolute binding free energy calculations) or experimental (synthesis and in vitro testing) [4].
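One simple way to prioritize by "a combination of all scores" is a weighted sum of min-max-normalized scores. This is a sketch under stated assumptions: the score names, the weights, and the convention that lower SAscore and lower (more negative) docking scores are better are illustrative choices; the helper `prioritize` is hypothetical, and Pareto ranking is a common alternative when no single weighting is defensible.

```python
def prioritize(candidates, weights):
    """Rank candidates by a weighted sum of min-max-normalized scores.

    candidates: dict name -> dict of raw scores, e.g. {"qed": .., "sa": .., "dock": ..}
    weights: dict score name -> (weight, higher_is_better)
    """
    names = list(candidates)
    combined = {n: 0.0 for n in names}
    for key, (weight, higher_is_better) in weights.items():
        values = [candidates[n][key] for n in names]
        lo, hi = min(values), max(values)
        for n in names:
            # Scale to [0, 1]; a constant column contributes a neutral 0.5.
            x = (candidates[n][key] - lo) / (hi - lo) if hi > lo else 0.5
            combined[n] += weight * (x if higher_is_better else 1.0 - x)
    return sorted(names, key=combined.get, reverse=True)


candidates = {
    "A": {"qed": 0.9, "sa": 2.0, "dock": -10.0},
    "B": {"qed": 0.5, "sa": 4.0, "dock": -7.0},
    "C": {"qed": 0.7, "sa": 3.0, "dock": -9.0},
}
weights = {"qed": (1.0, True), "sa": (1.0, False), "dock": (2.0, False)}
print(prioritize(candidates, weights))  # ['A', 'C', 'B']
```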

Workflow Visualization

The following diagram illustrates the integrated GPT and Active Learning workflow for molecular generation.

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 2: Key materials and computational tools for implementing GPT-based molecular generation with active learning.

| Item Name | Function/Application | Specifications & Notes |
|---|---|---|
| ZINC/PubChem Database | Source of millions of drug-like molecules for pre-training foundational GPT models. | Provides canonical SMILES; must be converted to SELFIES if required. [3] [9] |
| PDBbind Database | Curated database of protein-ligand complexes with 3D structural data and binding affinities. | Used for fine-tuning models on target-specific structural data. [3] |
| SELFIES Python Library | Enables conversion between SMILES and SELFIES representations. | `pip install selfies`; critical for ensuring 100% molecular validity. [11] |
| RDKit Cheminformatics Toolkit | Open-source platform for cheminformatics tasks: standardizing molecules, calculating descriptors (QED), and processing SMILES. | Essential for data preprocessing and cheminformatics oracles. [4] |
| Molecular Docking Software (e.g., AutoDock Vina) | Physics-based oracle for predicting ligand binding affinity and pose within a protein target. | Used as a key evaluator in the active learning outer cycle. [4] |
| Transformer Library (e.g., Hugging Face) | Provides pre-built, optimized implementations of transformer architectures (e.g., GPT-2). | Accelerates model development and training. [9] |
| Active Learning Framework Manager | Custom script or platform to automate the cycle of generation, evaluation, selection, and fine-tuning. | Orchestrates the entire optimization process, often built in-house. [4] |

Defining Active Learning and its Role in Efficient Molecular Screening

Active learning (AL) is an iterative, machine-guided methodology that efficiently identifies valuable data within vast chemical spaces, even when labeled data is limited [12]. In the context of molecular screening for drug discovery, this translates to a feedback-driven process where a machine learning (ML) model selectively chooses the most informative candidate molecules for expensive computational or experimental evaluation, thereby minimizing resource consumption while maximizing the discovery of promising compounds [13] [14]. This approach stands in stark contrast to traditional brute-force virtual screening, which exhaustively scores every molecule in a library—a process becoming increasingly impractical as chemical libraries now routinely exceed one billion compounds [13]. The core strength of active learning lies in its ability to navigate this immense search space by prioritizing molecules that are most likely to improve the model's predictive power or are most probable to be high-performing hits, thus offering a powerful solution to the "needle in a haystack" problem inherent to early-stage drug discovery [15] [12].

The Quantitative Edge: Performance of Active Learning in Screening

Empirical studies consistently demonstrate that active learning strategies yield substantial reductions in computational cost and experimental burden while maintaining high recall of top-performing molecules.

Table 1: Key Performance Metrics of Active Learning in Virtual Screening

| Study Focus | Virtual Library Size | Key Finding | Reported Metric | Efficiency Gain |
|---|---|---|---|---|
| Docking-Based Virtual Screening [13] | 100 million molecules | Identification of top ligands | 94.8% of top-50,000 ligands found | After testing only 2.4% of the library |
| TMPRSS2 Inhibitor Discovery [15] | DrugBank & NCATS libraries | Hit identification via target-specific score | All four known inhibitors identified | Required testing <20 compounds; ~29-fold reduction in computational cost |
| Combined MD & AL Screening [15] | Not Specified | Experimental validation of inhibitors | Potent nanomolar inhibitor (IC50 = 1.82 nM) discovered | Number of compounds requiring experimental testing reduced to less than 10 |

These performance gains are influenced by the choice of the surrogate model and the acquisition function. For instance, in smaller virtual libraries, a greedy acquisition strategy with a neural network model found 66.8% of the top-100 scores after evaluating only 6% of the library, corresponding to an enrichment factor (EF) of 11.9 compared to random screening [13]. This demonstrates that active learning can achieve an order-of-magnitude increase in efficiency, making large-scale screening projects feasible in academic and industrial settings where computational resources are often limited.
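The recall figures quoted above (for example, the fraction of the top-50,000 ligands recovered) can be computed as follows. This minimal sketch assumes docking scores where lower is better and a dict of full-library scores, which is only available in retrospective benchmarks where the whole library has been docked; the helper name `top_k_recall` is hypothetical.

```python
def top_k_recall(library_scores, evaluated, k):
    """Fraction of the library's true top-k molecules that were evaluated.

    library_scores: dict molecule ID -> docking score (lower is better).
    evaluated: iterable of molecule IDs scored during the AL campaign.
    """
    true_top_k = set(sorted(library_scores, key=library_scores.get)[:k])
    return len(true_top_k & set(evaluated)) / k


scores = {"a": -10.2, "b": -9.1, "c": -5.0, "d": -1.3}
print(top_k_recall(scores, ["a", "c"], k=2))  # 0.5
```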

Experimental Protocols for Active Learning in Molecular Screening

The following section details a generalized, yet practical, workflow for implementing an active learning cycle in a structure-based virtual screening campaign. The process is iterative, with each cycle designed to maximize the information gain from a limited number of evaluations.

The diagram below illustrates the cyclical and self-improving nature of a standard active learning protocol for molecular screening.

Detailed Protocol Steps

Step 1: Initial Sampling and Data Preparation

- Objective: To create a small, initial labeled dataset for model training.

- Procedure:

- Begin with a large virtual molecular library (e.g., ZINC, Enamine, an in-house collection).

- Randomly select a small initial batch of molecules, typically 1-5% of the total library size, to ensure broad coverage of the chemical space [13] [16].

- Prepare the molecular structures (e.g., protonation, energy minimization) and the target protein structure (e.g., using a Protein Preparation Wizard to add hydrogens, assign bond orders, and optimize hydrogen bonds) [16].
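The random initial batch in Step 1 can be drawn with the standard library. A minimal sketch: `initial_batch` is a hypothetical helper, the library is represented as a list of molecule IDs, and a fixed seed is used so that the campaign can be reproduced.

```python
import random


def initial_batch(library, fraction=0.01, seed=0):
    """Uniformly sample `fraction` of the library (at least one molecule) without replacement."""
    n = max(1, round(len(library) * fraction))
    return random.Random(seed).sample(library, n)


library = [f"mol{i}" for i in range(10_000)]
batch = initial_batch(library, fraction=0.02)
print(len(batch))  # 200
```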

Step 2: Computational Evaluation

- Objective: To generate the "ground truth" data for the selected molecules.

- Procedure:

- Perform the primary computational evaluation on the batch of molecules. This is often molecular docking (e.g., using AutoDock Vina or Glide SP) to obtain a docking score representing predicted binding affinity [13] [16].

- For higher accuracy and reduced false positives, consider using a receptor ensemble (multiple protein conformations from molecular dynamics simulations) for docking instead of a single static structure [15].

- (Optional) For a more refined score, run short molecular dynamics (MD) simulations (e.g., 100 ns per ligand) on the docked poses and calculate a dynamic score (e.g., a target-specific "h-score") based on simulation trajectories [15].

Step 3: Surrogate Model Training

- Objective: To build a machine learning model that learns the relationship between molecular structure and the computed score.

- Procedure:

- Encode the molecular structures of the evaluated batch into features. Common descriptors include molecular fingerprints (e.g., ECFP), graph-based representations, or physicochemical descriptors [13] [12].

- Train a surrogate ML model using the features as input and the computed scores (from Step 2) as the target variable.

- Model architectures can vary. Studies show that Directed-Message Passing Neural Networks (D-MPNN), feedforward neural networks, and random forests are all effective choices, with neural networks often showing superior performance [13].
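The studies cited use D-MPNNs, feedforward networks, or random forests; as a dependency-free stand-in, the sketch below swaps in a k-nearest-neighbor regressor on Tanimoto similarity. It assumes fingerprints stored as sets of on-bit indices (for instance, the output of `GetOnBits()` on an RDKit Morgan bit vector), and it uses the spread of the neighbors' scores as a crude uncertainty estimate for the acquisition step. The function names are hypothetical.

```python
def tanimoto(a, b):
    """Tanimoto similarity of two fingerprints stored as sets of on-bits."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def knn_predict(query_fp, train, k=3):
    """Predict (mean, std) of the score from the k most similar training molecules.

    train: list of (fingerprint_set, docking_score) pairs already evaluated.
    """
    nearest = sorted(train, key=lambda item: tanimoto(query_fp, item[0]), reverse=True)[:k]
    scores = [score for _, score in nearest]
    mean = sum(scores) / len(scores)
    std = (sum((s - mean) ** 2 for s in scores) / len(scores)) ** 0.5
    return mean, std


train = [({1, 2, 3}, -10.0), ({1, 2, 4}, -9.0), ({7, 8}, -2.0), ({7, 9}, -3.0)]
print(knn_predict({1, 2, 3}, train, k=2))  # (-9.5, 0.5)
```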

Step 4: Candidate Selection via Acquisition Function

- Objective: To leverage the trained model to intelligently select the next batch of molecules for evaluation.

- Procedure:

- Use the trained surrogate model to predict the scores and associated uncertainties for all remaining unevaluated molecules in the library.

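- Apply an acquisition function to rank these molecules and select the most promising batch. Common strategies include:

- Greedy: select the molecules with the best predicted scores (pure exploitation) [13].

- Upper confidence bound (UCB): rank by the predicted score plus a multiple of the predicted uncertainty, balancing exploitation and exploration.

- Uncertainty sampling: select the molecules whose predictions are least certain, to improve the model where it knows least.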

- The selected batch of molecules is then passed back to Step 2 for evaluation, closing the loop.
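Greedy and upper-confidence-bound (UCB) acquisition can be sketched on top of any surrogate that returns a predicted mean and uncertainty. Here docking scores are assumed to be lower-is-better, so the predicted mean is negated; `beta`, which sets the weight on uncertainty, is an illustrative default rather than a value from the cited studies, and the function names are hypothetical.

```python
def greedy(mean, std):
    """Pure exploitation: prefer the best (most negative) predicted score."""
    return -mean


def ucb(mean, std, beta=2.0):
    """Optimistic estimate: predicted quality plus beta times the uncertainty."""
    return -mean + beta * std


def select_batch(predictions, acquisition, batch_size):
    """predictions: dict molecule ID -> (mean, std); returns the top-ranked IDs."""
    ranked = sorted(predictions, key=lambda n: acquisition(*predictions[n]), reverse=True)
    return ranked[:batch_size]


predictions = {"a": (-9.0, 0.1), "b": (-8.0, 1.0), "c": (-5.0, 0.2)}
print(select_batch(predictions, greedy, 1))  # ['a']
print(select_batch(predictions, ucb, 1))     # ['b']
```

With UCB, molecule `b` wins despite a worse predicted mean because its larger uncertainty makes it worth testing; this is the exploration that greedy selection gives up.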

Step 5: Iteration and Stopping

- Objective: To determine when to halt the cycle.

- Procedure:

- The AL cycle (Steps 2-4) is repeated for a predefined number of iterations or until a performance plateau is reached (e.g., the fraction of newly discovered top-scoring molecules falls below a threshold) [12].

- Upon termination, all molecules evaluated throughout the process are ranked based on their final computational scores, and the top-ranked hits are recommended for experimental validation.
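The plateau criterion in Step 5 can be expressed as a small check on how much the current top-k set changed between iterations. The threshold `min_new_fraction`, the choice of k, and the helper names are assumptions to be tuned per campaign.

```python
def top_k(scores, k):
    """IDs of the k best molecules evaluated so far (lower docking score is better)."""
    return set(sorted(scores, key=scores.get)[:k])


def should_stop(previous_top, current_top, k, min_new_fraction=0.05):
    """Stop when fewer than min_new_fraction of the top-k were newly discovered."""
    newly_found = current_top - previous_top
    return len(newly_found) / k < min_new_fraction


before = {"a": -9.0, "b": -8.0, "c": -7.0}
after = {**before, "d": -10.0}
print(should_stop(top_k(before, 2), top_k(after, 2), k=2))  # False: half of the top-2 is new
```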

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 2: Key Research Reagent Solutions for Active Learning-Driven Screening

| Tool / Reagent | Category | Primary Function in Workflow | Example Software / Source |
|---|---|---|---|
| Virtual Compound Libraries | Chemical Database | Provides the vast search space of candidate molecules for screening. | ZINC [13], DrugBank [15], TargetMol Natural Compound Library [16] |
| Docking Software | Computational Tool | Scores protein-ligand interactions to generate initial training data. | AutoDock Vina [13], Glide SP [16] |
| Molecular Dynamics Engine | Computational Tool | Generates receptor ensembles and refines docking scores for higher accuracy. | GROMACS [15] [16] |
| Machine Learning Framework | Software Library | Builds, trains, and deploys surrogate models for prediction and candidate selection. | DeepAutoQSAR/AutoQSAR [16], Directed-Message Passing Neural Network (D-MPNN) [13] |
| Active Learning Platform | Integrated Software | Orchestrates the entire iterative workflow, from model updating to batch selection. | MolPAL [13], Schrödinger's Active Learning Glide [16] |

Integration with Modern AI and Future Outlook

The paradigm of active learning is highly complementary to emerging artificial intelligence techniques, including GPT-based molecular generation. Within such a pipeline, active learning serves as the experimental guidance engine at the core of an iterative, AI-driven discovery loop. While generative GPT models can propose novel molecular structures de novo, active learning supplies the feedback mechanism that prioritizes which of these generated compounds should undergo costly in silico or in vitro testing, ensuring efficient resource allocation [17] [12]. The result is a closed-loop system: the GPT model expands the explorable chemical space, and the active learning agent exploits that space to converge rapidly on optimized candidates. Future advancements will likely focus on tighter integration of these ML algorithms, more robust and transferable acquisition functions, and standardized pipelines that realize the full potential of AI-augmented drug discovery [17] [12].

The Challenge of Vast Chemical Space (10^23 to 10^60 molecules)

The exploration of chemical space, estimated to contain between 10^23 and 10^60 drug-like molecules, represents a fundamental challenge in modern drug discovery. Traditional methods for virtual screening and molecular design are computationally prohibitive at this scale. This Application Note details how the integration of GPT-based molecular generation with Active Learning (AL) protocols creates a powerful, resource-efficient solution to this problem. We provide validated experimental workflows and quantitative benchmarks that demonstrate orders-of-magnitude improvements in screening efficiency, enabling the rapid discovery of novel therapeutic candidates.

Quantitative Performance Benchmarks

The following tables summarize key performance data from recent studies, demonstrating the efficacy of machine learning and active learning in navigating vast chemical spaces.

Table 1: Efficiency Gains in Virtual Screening & Active Learning

| Method / Strategy | Key Performance Metric | Efficiency Gain / Outcome | Source / Context |
|---|---|---|---|
| ML-Guided Docking (CatBoost Classifier) | Computational cost reduction for screening 3.5B compounds | >1,000-fold reduction vs. standard docking [18] | Virtual screening of make-on-demand libraries [18] |
| Active Learning for Drug Synergy | Synergistic pair discovery rate | Found 60% of synergistic pairs by exploring only 10% of combinatorial space [19] | Sequential batch testing of drug combinations [19] |
| Active Learning for Affinity Prediction | Experimental resource savings | Required 82% fewer experiments to identify top binders [19] [20] | Benchmarking on targets like TYK2, USP7, D2R [21] |
| Deep Batch Active Learning (COVDROP) | Model performance convergence | Achieved target performance with significantly fewer experimental cycles [20] | Optimization of ADMET and affinity properties [20] |

Table 2: Impact of Experimental Protocol Parameters

| Parameter | Performance Impact | Recommended Guideline | Source |
|---|---|---|---|
| Batch Size | Smaller batches increase synergy yield and model refinement [19] [21]. | Initial batch: Larger for diverse data. Subsequent cycles: 20-30 compounds [21]. | Ligand-binding affinity prediction [21] |
| Cellular Context Features | Significantly enhances prediction accuracy for synergistic pairs [19]. | Incorporate gene expression profiles; ~10 genes can be sufficient for convergence [19]. | Drug synergy prediction [19] |
| Molecular Representation | Limited impact on synergy prediction performance [19]. | Morgan fingerprints with addition operation are a robust, high-performing choice [19]. | Benchmarking of AI algorithms for synergy [19] |

Detailed Experimental Protocols

Protocol: GPT-Based Molecular Generation with Active Learning Fine-Tuning

This protocol, adapted from the ChemSpaceAL methodology, describes how to align a generative model towards a specific protein target without the need for exhaustive docking [22].

I. Pretraining the Base Generative Model

- Objective: Create a foundational model with a broad understanding of chemical space.

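- Materials:

- Large, publicly available SMILES datasets (e.g., ChEMBL, BindingDB, MOSES) [22], combined into a single pretraining corpus.

- A GPT-style transformer model for chemical language modeling.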

- Procedure:

- Preprocess the combined dataset to remove duplicates and invalid structures.

- Train the GPT model on the curated SMILES strings to maximize the likelihood of the sequences. This model is now capable of generating a diverse array of novel molecules.

II. Active Learning Fine-Tuning Cycle

- Objective: Steer the generative model to produce molecules with high affinity for a specific target.

- Materials:

- Pretrained GPT model from Step I.

- Target protein structure (e.g., PDB ID: 1IEP for c-Abl kinase) [22].

- Molecular docking software (e.g., AutoDock Vina, Glide).

- Clustering and sampling algorithm (k-means).

- Procedure:

- Generation: Use the current model to generate a large library of molecules (e.g., 100,000 unique, valid SMILES).

- Filtering: Apply ADMET and functional group filters to ensure drug-likeness and synthesizability [22].

- Clustering & Sampling:

- Calculate molecular descriptors (e.g., ECFP4 fingerprints, molecular weight) for all generated molecules.

- Reduce the dimensionality of the descriptor vectors with principal component analysis (PCA).

- Use k-means clustering to group molecules with similar properties.

- From each cluster, randomly sample a small, representative subset (e.g., ~1%) for evaluation [22].

- Evaluation: Dock the sampled molecules to the target protein and score them using an attractive interaction-based scoring function [22].

- Training Set Construction:

- Create a new training set by sampling molecules from all clusters. Sample proportionally to the mean scores of the evaluated molecules in each cluster (higher-scoring clusters contribute more).

- Augment this set with replicas of the top-performing evaluated molecules (e.g., those meeting a predefined score threshold).

- Fine-Tuning: Continue training (fine-tuning) the GPT model on this new, target-biased training set.

- Iteration: Repeat the Generation through Fine-Tuning steps for multiple cycles (e.g., 3-5 iterations). The model's output progressively shifts towards the promising region of chemical space.
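The training-set construction step above can be sketched as follows. This is a minimal illustration under stated assumptions, not the ChemSpaceAL implementation: the function name `build_training_set`, the dictionary inputs, sampling with replacement, and clipping negative cluster means to zero are hypothetical choices, and scores are assumed to be non-negative with higher values meaning stronger predicted interaction.

```python
import random
from collections import defaultdict


def build_training_set(cluster_of, scores, total_size, threshold, n_replicas, seed=0):
    """Build a target-biased fine-tuning set (hypothetical helper).

    cluster_of: dict mapping every generated SMILES to its k-means cluster id.
    scores: dict mapping each evaluated (docked) SMILES to its score; assumed
        non-negative, higher = better (attractive-interaction style).
    """
    rng = random.Random(seed)

    # Group all generated molecules by cluster.
    members = defaultdict(list)
    for smi, cluster in cluster_of.items():
        members[cluster].append(smi)

    # Mean score of the evaluated molecules in each cluster (negatives clipped to 0).
    evaluated = defaultdict(list)
    for smi, score in scores.items():
        evaluated[cluster_of[smi]].append(score)
    means = {c: max(sum(v) / len(v), 0.0) for c, v in evaluated.items()}
    total = sum(means.values())
    if total == 0:
        raise ValueError("all cluster mean scores are zero")

    # Clusters contribute molecules in proportion to their mean score
    # (sampled with replacement; counts are rounded, so the size is approximate).
    training = []
    for cluster, mean in means.items():
        n = round(total_size * mean / total)
        training.extend(rng.choices(members[cluster], k=n))

    # Append replicas of evaluated molecules that meet the score threshold.
    for smi, score in scores.items():
        if score >= threshold:
            training.extend([smi] * n_replicas)
    return training


cluster_of = {"A1": 0, "A2": 0, "B1": 1, "B2": 1}
scores = {"A1": 3.0, "B1": 1.0}
train = build_training_set(cluster_of, scores, total_size=8, threshold=2.5, n_replicas=2)
print(len(train))  # 10: 6 from cluster 0, 2 from cluster 1, 2 replicas of A1
```

Sampling with replacement keeps small high-scoring clusters usable even when the requested count exceeds the cluster size; sampling without replacement would be an equally reasonable variant.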

Protocol: Machine Learning-Guided Docking for Ultralarge Libraries

This protocol uses a conformal prediction framework to enable virtual screens of billion-member libraries by drastically reducing the number of compounds that require explicit docking [18].

I. Training Set Preparation and Classifier Training

- Objective: Train a machine learning model to predict top-scoring docking compounds.

- Materials:

- Ultralarge chemical library (e.g., Enamine REAL, ZINC15).

- Target protein structure.

- Molecular docking software.

- Machine learning classifier (e.g., CatBoost).

- Procedure:

- Randomly sample a subset (e.g., 1 million compounds) from the full ultralarge library.

- Perform molecular docking for this sample against the target to obtain docking scores.

- Define an activity threshold (e.g., the best-scoring 1% of compounds, i.e., the most negative docking scores) to label compounds as "virtual active" (minority class) or "virtual inactive" (majority class).

- Encode the molecular structures of the training set using features like Morgan fingerprints (ECFP4) or continuous data-driven descriptors (CDDD).

- Train a classifier (CatBoost is recommended for its favorable balance of speed and accuracy) that takes the molecular features as input and predicts the active/inactive labels derived from the docking scores [18].
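As a small illustration of the labeling step, the sketch below marks the best-scoring fraction of a docked sample as virtual actives. It assumes the AutoDock Vina convention that more negative scores are better; `label_virtual_actives` is a hypothetical helper, not part of any docking package.

```python
def label_virtual_actives(docking_scores, top_fraction=0.01):
    """Label the best-scoring fraction of docked compounds as virtual actives.

    Assumes more negative docking scores are better (AutoDock Vina convention).
    Returns the score threshold and a 0/1 label per compound; ties at the
    threshold are all labeled active.
    """
    n_active = max(1, round(len(docking_scores) * top_fraction))
    threshold = sorted(docking_scores)[n_active - 1]
    labels = [1 if s <= threshold else 0 for s in docking_scores]
    return threshold, labels


scores = [-5.0, -9.0, -7.0, -6.0]
threshold, labels = label_virtual_actives(scores, top_fraction=0.25)
print(threshold, labels)  # -9.0 [0, 1, 0, 0]
```

The resulting labels, paired with fingerprint features, would then be passed to a classifier such as CatBoost's `CatBoostClassifier` through its `fit` method.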

II. Conformal Prediction and Library Screening

- Objective: Use the trained model to select a minimal subset of the full library for docking that contains the vast majority of true actives.

- Materials:

- Trained CatBoost classifier from Step I.

- Full ultralarge library (billions of compounds).

- Procedure:

- Calculate molecular features for the entire ultralarge library.

- Apply the Mondrian (class-conditional) Conformal Prediction (CP) framework. Using the trained model and a held-out calibration set, the CP framework assigns every compound in the library a p-value for each class [18].

- Set a significance level (ε) that controls the error rate. Based on the P-values, the CP framework divides the library into "virtual active" (to be docked) and "virtual inactive" (to be discarded) sets.

- Perform molecular docking only on the much smaller "virtual active" set. In the reported benchmarks, this set contained the majority (e.g., 87-88%) of true top-scoring compounds while requiring docking of only a fraction (e.g., ~10%) of the original library [18].
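The p-value computation behind this selection can be sketched for the binary active/inactive case. This is a minimal illustration of Mondrian (class-conditional) inductive conformal prediction, not the code used in [18]: it assumes 1 - P(c | x) as the nonconformity measure, and it treats a compound as "virtual active" whenever the active label appears in its prediction set, which is one possible convention.

```python
def mondrian_p_values(cal_probs, cal_labels, test_probs):
    """Class-conditional (Mondrian) inductive conformal p-values, binary case.

    cal_probs / test_probs: classifier probability that a compound is active (label 1).
    cal_labels: true 0/1 labels of a held-out calibration set.
    Nonconformity of label c is 1 - P(c | x).
    """
    def nonconformity(p_active, label):
        return 1.0 - p_active if label == 1 else p_active

    # Calibration scores are kept separately per class: the "Mondrian" part.
    cal = {0: [], 1: []}
    for p, y in zip(cal_probs, cal_labels):
        cal[y].append(nonconformity(p, y))

    p_values = []
    for p in test_probs:
        pv = {}
        for c in (0, 1):
            alpha = nonconformity(p, c)
            n_ge = sum(1 for a in cal[c] if a >= alpha)
            pv[c] = (n_ge + 1) / (len(cal[c]) + 1)
        p_values.append(pv)
    return p_values


def select_for_docking(p_values, epsilon):
    """Indices whose prediction set {c : p_c > epsilon} contains the active label."""
    return [i for i, pv in enumerate(p_values) if pv[1] > epsilon]


pv = mondrian_p_values([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0], [0.95, 0.05])
print(select_for_docking(pv, epsilon=0.5))  # [0]
```

In this sketch a compound whose prediction set is {0, 1} is also docked; a stricter variant would dock only compounds whose set is exactly {1}.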

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Computational Tools and Datasets

| Item / Resource | Type | Function / Application | Source / Reference |
|---|---|---|---|
| ChEMBL, BindingDB, MOSES | Database | Large-scale, publicly available sources of bioactive molecules and their properties for model pretraining and benchmarking [22]. | [22] |
| Morgan Fingerprints (ECFP4) | Molecular Descriptor | A substructure-based molecular representation that provides a high-performing, fixed-length vector for machine learning models [18]. | [19] [18] |
| Gene Expression Profiles (e.g., from GDSC) | Cellular Descriptor | Provides context on the cellular environment, critically enhancing predictions in areas like drug synergy [19]. | Genomics of Drug Sensitivity in Cancer (GDSC) [19] |
| CatBoost Classifier | Machine Learning Algorithm | A gradient-boosting algorithm that provides an optimal balance of speed and accuracy for classification tasks in virtual screening [18]. | [18] |
| Conformal Prediction (CP) Framework | Statistical Framework | Provides a mathematically rigorous way to quantify the uncertainty of predictions, allowing users to control error rates when selecting compounds [18]. | [18] |
| ChemSpaceAL | Software Package | An open-source Python package implementing the active learning methodology for GPT-based molecular generation [22]. | [22] |

Workflow Visualizations

GPT-Based Active Learning Cycle

ML-Guided Docking Screen

Latent Space Exploration and Uncertainty Sampling

In the field of AI-driven molecular generation, particularly within research on GPT-based models and active learning, two methodological pillars have emerged as critical for efficient and targeted discovery: latent space exploration and uncertainty sampling. These techniques enable researchers to navigate the vast and complex chemical space in a principled, data-efficient manner.

Latent space exploration refers to the process of searching within a compressed, continuous representation of molecular structures to identify regions that correspond to desirable properties. Generative models, such as Variational Autoencoders (VAEs), learn to map discrete molecular representations (like SMILES strings or molecular graphs) into a lower-dimensional latent space where similar molecules are positioned near each other [23] [24]. Optimization can then occur in this continuous space, bypassing the need for explicitly defining chemical rules and enabling the use of powerful continuous optimization algorithms [23] [25]. The efficacy of this exploration depends heavily on the quality of the latent space, particularly its continuity (small changes in latent space correspond to small structural changes) and reconstruction rate (the ability to accurately decode latent points back to valid molecules) [23].

Uncertainty sampling, a cornerstone of active learning, addresses the challenge of expensive data acquisition—a common bottleneck in molecular property prediction. It is a model-based strategy that selects data points for which a model's prediction is most uncertain, with the goal of improving the model with minimal new data [26] [27] [28]. By prioritizing these informative points, researchers can maximize the informational gain from each costly experiment or computation, accelerating the approximation of complex structure-property relationships, or black-box functions [27].

When combined, these concepts form a powerful iterative cycle for molecular discovery: a generative model creates candidates in its latent space, a predictor model evaluates their properties and associated uncertainties, and an active learning algorithm selects the most promising and uncertain candidates for further evaluation, thereby refining both the generative and predictive models [24] [25].

Quantitative Data and Performance Comparison

The performance of latent space optimization and uncertainty sampling can be evaluated across several key metrics, including the validity and novelty of generated molecules, optimization efficiency, and predictive accuracy. The following tables summarize quantitative findings from recent studies.

Table 1: Performance of Latent Space Optimization (LSO) Methods on Molecular Design Tasks

| Method | Key Architecture | Optimization Algorithm | Key Performance Metrics | Reported Results |
|---|---|---|---|---|
| MOLRL [23] | VAE / MolMIM Autoencoder | Proximal Policy Optimization (PPO) | ↑ Penalized LogP (pLogP) under similarity constraints | Comparable or superior to state-of-the-art on benchmark tasks |
| Reinforcement Learning-Inspired Generation [29] | VAE + Latent Diffusion Model | Genetic Algorithm + Active Learning | Affinity & similarity constraints; Diversity | Generated effective, diverse compounds for specific targets |
| Multi-Objective LSO [25] | JT-VAE (Junction-Tree) | Iterative Weighted Retraining (Pareto) | Multi-property optimization; Pareto efficiency | Effectively pushed the Pareto front; predicted DRD2 inhibitors superior to known drugs (in silico) |
| Bayesian Optimization [24] | VAE | Gaussian Process (GP) | Sample efficiency for expensive evaluations | Efficient exploration of chemical space in low-dimensional latent representations |

Table 2: Efficiency of Uncertainty-Based Active Learning for Molecular Property Prediction

| Study Context | Acquisition Function | Dataset(s) | Performance vs. Random Sampling | Key Findings / Conditions |
|---|---|---|---|---|
| General Materials Science [27] | Uncertainty Sampling (US), Thompson Sampling (TS) | Liquidus surfaces (low-dim), Material databases (high-dim) | Better with low-dim descriptors; inefficient with high-dim descriptors | Efficiency is strongly dependent on the dimensionality and distribution of the input features. |
| Electrolyte Design [26] | Model Ensemble, MCDO, Density-Based | Aqueous Solubility, Redox Potential | Mixed results; Density-based best for Out-of-Domain (OOD) | No single UQ method dominated; active learning led to only modest improvements in generalization. |
| Targeted Design [28] | Expected Improvement (EI), Probability of Improvement (PI) | Various computational & experimental datasets | More efficient sampling and faster convergence | Enables optimal experimental design by maximizing the value of each measurement. |

Application Notes and Experimental Protocols

Protocol 1: Latent Space Exploration for Single-Property Optimization

This protocol details the process of optimizing a set of starting molecules for a single target property (e.g., penalized LogP) while maintaining structural similarity, using reinforcement learning in the latent space [23].

- Primary Objective: To improve a specific molecular property for a given set of initial molecules under structural similarity constraints.

- Research Reagent Solutions:

- Generative Model: A pre-trained autoencoder (e.g., VAE with cyclical annealing or MolMIM) with a validated continuous latent space and high reconstruction rate [23].

- Property Predictor: A trained model that maps a latent vector $z$ to the property of interest (e.g., pLogP).

- Reinforcement Learning Agent: An implementation of the Proximal Policy Optimization (PPO) algorithm [23].

- Chemical Validation Suite: RDKit or similar software for parsing generated SMILES and assessing validity and structural similarity (e.g., via Tanimoto similarity) [23].

Step-by-Step Procedure:

- Model Preparation: Select and validate a pre-trained autoencoder. Ensure the latent space exhibits smooth continuity by testing that small perturbations of latent vectors lead to structurally similar molecules [23].

- Initialization: Encode the set of $N$ starting molecules $\{M_{\text{initial}}\}$ into their latent representations $\{z_{\text{initial}}\}$.

- RL Agent Setup: Define the RL environment.

- State: The current latent vector $z_t$.

- Action: A step $\Delta z$ in the latent space.

- Reward: A function $R(z_t)$ based on the predicted property of the molecule decoded from $z_t$, often including a penalty for low structural similarity to the original molecule. For pLogP optimization, $R(z_t) = \text{pLogP}(G(z_t)) - \lambda \lVert z_t - z_{\text{initial}} \rVert$, where $G$ is the decoder and $\lambda$ is a weighting parameter [23].

- Latent Space Exploration: For each $z_{\text{initial}}$ in the set, let the PPO agent interact with the environment over multiple episodes. The agent learns a policy $\pi(\Delta z \mid z)$ that takes steps maximizing the cumulative reward.

- Candidate Generation & Validation: After training, use the agent's policy to propose optimized latent vectors $z_{\text{optimized}}$, and decode these vectors into molecular structures $M_{\text{candidate}}$.

- Post-Processing: Filter the candidate molecules $M_{\text{candidate}}$ for chemical validity using RDKit. Calculate the Tanimoto similarity between the valid candidates and their respective initial molecules, and retain only those candidates that meet a predefined similarity threshold (e.g., >0.5) [23].
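The reward and post-processing steps can be sketched in plain Python. The sketch assumes a Euclidean norm for the latent-distance penalty, takes the predicted pLogP of the decoded molecule as an input (the decoder G and the property predictor are not implemented), and represents fingerprints as sets of on-bit indices; the function names are hypothetical and no PPO agent is included.

```python
import math


def reward(predicted_property, z, z_initial, lam=1.0):
    """R(z_t) = pLogP(G(z_t)) - lam * ||z_t - z_initial||, Euclidean norm assumed.

    predicted_property stands in for pLogP(G(z_t)); the decoder G and the
    property predictor are assumed to be supplied elsewhere.
    """
    distance = math.sqrt(sum((a - b) ** 2 for a, b in zip(z, z_initial)))
    return predicted_property - lam * distance


def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    if not fp_a and not fp_b:
        return 1.0
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)


def filter_by_similarity(candidates, reference_fp, threshold=0.5):
    """Keep candidate SMILES whose similarity to the starting molecule exceeds threshold."""
    return [smi for smi, fp in candidates if tanimoto(fp, reference_fp) > threshold]


print(reward(2.0, [3.0, 4.0], [0.0, 0.0], lam=0.5))  # -0.5
```

With RDKit, the set-based `tanimoto` helper would typically be replaced by `DataStructs.TanimotoSimilarity` applied to Morgan fingerprint bit vectors.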

Diagram Title: Latent Space Optimization with Reinforcement Learning

Protocol 2: Multi-Objective Optimization via Iterative Weighted Retraining

This protocol is designed for the more complex and common drug discovery scenario where multiple molecular properties must be optimized simultaneously, potentially with competing objectives [25].

- Primary Objective: To generate novel molecules that are Pareto-optimal with respect to multiple target properties (e.g., binding affinity, solubility, synthetic accessibility).

- Research Reagent Solutions:

- Generative Model: A VAE-based architecture, such as JT-VAE, which assembles molecules from chemically valid substructures and therefore produces valid molecules by construction [25].

- Property Predictors: A set of trained models $\{P_i\}$, one for each of the $k$ target properties.

- Optimization Algorithm: Implementation of a multi-objective Bayesian optimizer or a weighted retraining scheduler.

Step-by-Step Procedure:

- Initial Model Training: Pre-train the VAE (e.g., JT-VAE) on a large dataset of drug-like molecules (e.g., ChEMBL). This establishes the initial latent space $Z$.

- Generate Candidate Pool: Sample a large set of latent vectors $\{z_{\text{candidate}}\}$ from the prior distribution of the VAE (e.g., Gaussian) and decode them into a pool of candidate molecules $\{M_{\text{candidate}}\}$.

- Property Prediction & Pareto Ranking: For all candidate molecules, predict the $k$ target properties using the predictor models $P_i$. Rank the candidates based on Pareto efficiency.

- Calculate Weights: Assign a weight $w_j$ to each molecule $j$ in the candidate pool based on its Pareto rank. Higher-ranked (non-dominated) molecules receive greater weight [25].

- Update Training Set: Form a new training dataset by combining the original data with the top-weighted candidate molecules from the generated pool.

- Iterative Retraining: Retrain the VAE on this weighted, augmented dataset. This step "shifts" the latent space towards regions that correspond to high-performing, Pareto-optimal molecules.

- Convergence Check: Repeat the Generate Candidate Pool through Iterative Retraining steps until the Pareto front (the set of non-dominated solutions) no longer shows significant improvement or a predefined number of iterations is reached. The final model can then be sampled to obtain a set of optimized candidate molecules.
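The ranking and weighting steps can be illustrated with a non-dominated sort. This is a sketch, not the method of [25]: all objectives are assumed to be maximized, and the weight formula w_j ∝ 1 / (kN + rank_j), borrowed from rank-based weighted retraining, is one common choice assumed here, with the Pareto front index used as the rank. The function names and the toy property values are hypothetical.

```python
def dominates(a, b):
    """a dominates b: no worse on every objective and strictly better on one (maximization)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_ranks(points):
    """Non-dominated sorting: rank 0 is the Pareto front, rank 1 the next front, etc."""
    remaining = set(range(len(points)))
    ranks = [0] * len(points)
    front = 0
    while remaining:
        current = {
            i for i in remaining
            if not any(dominates(points[j], points[i]) for j in remaining if j != i)
        }
        for i in current:
            ranks[i] = front
        remaining -= current
        front += 1
    return ranks


def rank_weights(ranks, k=1e-3):
    """Weights w_j proportional to 1 / (k * N + rank_j), normalized to sum to 1."""
    n = len(ranks)
    raw = [1.0 / (k * n + r) for r in ranks]
    total = sum(raw)
    return [w / total for w in raw]


props = [(1.0, 1.0), (2.0, 0.0), (0.0, 2.0), (0.0, 0.0)]  # k = 2 predicted properties
ranks = pareto_ranks(props)
print(ranks)  # [0, 0, 0, 1]
```

A small k concentrates almost all weight on the current Pareto front; a larger k flattens the weights and keeps more of the original distribution in the retraining set.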

Diagram Title: Multi-Objective Optimization via Iterative Retraining

Protocol 3: Uncertainty Sampling for Molecular Property Prediction

This protocol uses uncertainty sampling to efficiently build a training dataset for a molecular property predictor, minimizing the number of expensive experimental or computational measurements required [26] [27] [28].

- Primary Objective: To approximate a molecular property black-box function with high accuracy using as few labeled data points as possible.

- Research Reagent Solutions:

- Surrogate Model: A Gaussian Process Regression (GPR) model is well-suited for this task as it naturally provides uncertainty estimates (standard deviation) with its predictions [27] [28].

- Acquisition Function: A function that uses the surrogate model's predictions to score the utility of labeling an unlabeled data point. Standard uncertainty sampling uses $f_{\text{US}}(x) = \sigma(x)$, the predicted standard deviation [27].

- Molecular Descriptor Set: A consistent representation for all molecules (e.g., Morgan fingerprints, Matminer descriptors).

Step-by-Step Procedure:

- Initialization: Start with a small, randomly selected initial training set $D = \{(x_i, y_i)\}$ of size $N_{\text{ini}}$, where $y_i$ is the measured property for molecule $x_i$. Define a large pool $U$ of unlabeled molecules.

- Model Training: Train the surrogate model (GPR) on the current labeled set $D$.

- Uncertainty Estimation: Use the trained GPR to predict the mean $\mu(x)$ and standard deviation $\sigma(x)$ for every molecule $x$ in the unlabeled pool $U$.

- Query Selection: Apply the acquisition function and select the molecule with the highest uncertainty, $x^* = \arg\max_{x \in U} \sigma(x)$ [27].

- Labeling: "Label" the selected molecule $x^*$ by obtaining its true property value $y^*$ through experiment or simulation. This is the most expensive step.

- Data Augmentation: Add the newly labeled pair $(x^*, y^*)$ to the training set $D$ and remove $x^*$ from the unlabeled pool $U$.

- Iteration: Repeat the Model Training through Data Augmentation steps until a predefined labeling budget is exhausted or the prediction accuracy on a held-out validation set meets the target.
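The loop above can be sketched with a small Gaussian process written in plain Python. This is a minimal illustration under stated assumptions: the RBF kernel, its fixed length scale and noise level, the zero prior mean, and the `oracle` callable standing in for the experiment or simulation are all hypothetical, and a practical GPR would also fit its kernel hyperparameters to the labels.

```python
import math


def rbf(a, b, length_scale=1.0):
    """Squared-exponential (RBF) kernel between two descriptor vectors."""
    d2 = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-0.5 * d2 / length_scale ** 2)


def cholesky(K):
    """Lower-triangular L with L L^T = K (K symmetric positive definite)."""
    n = len(K)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][j] = math.sqrt(K[i][i] - s)
            else:
                L[i][j] = (K[i][j] - s) / L[j][j]
    return L


def solve_lower(L, b):
    """Forward substitution: solve L v = b."""
    v = []
    for i in range(len(b)):
        v.append((b[i] - sum(L[i][k] * v[k] for k in range(i))) / L[i][i])
    return v


def gp_predict(X, y, X_query, noise=1e-6, length_scale=1.0):
    """Zero-mean GP posterior mean mu(x) and std sigma(x), fixed hyperparameters."""
    n = len(X)
    K = [[rbf(X[i], X[j], length_scale) + (noise if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    L = cholesky(K)
    u = solve_lower(L, y)  # L^{-1} y
    means, stds = [], []
    for x in X_query:
        v = solve_lower(L, [rbf(xi, x, length_scale) for xi in X])  # L^{-1} k_*
        means.append(sum(vi * ui for vi, ui in zip(v, u)))  # k_*^T K^{-1} y
        var = rbf(x, x, length_scale) - sum(vi * vi for vi in v)
        stds.append(math.sqrt(max(var, 0.0)))
    return means, stds


def uncertainty_sampling(X, y, pool, oracle, budget):
    """Label the pool point with the largest sigma(x), `budget` times."""
    X, y, pool = list(X), list(y), list(pool)
    for _ in range(budget):
        if not pool:
            break
        _, stds = gp_predict(X, y, pool)
        i = max(range(len(pool)), key=lambda j: stds[j])  # x* = argmax sigma(x)
        x_star = pool.pop(i)
        X.append(x_star)
        y.append(oracle(x_star))  # the expensive experiment or simulation
    return X, y


X_final, y_final = uncertainty_sampling(
    [[0.0]], [0.0], [[0.1], [3.0], [0.5]], oracle=lambda x: x[0] ** 2, budget=1
)
print(X_final, y_final)  # [[0.0], [3.0]] [0.0, 9.0]
```

With fixed hyperparameters the posterior standard deviation depends only on the inputs, not on y. In practice, libraries such as scikit-learn's `GaussianProcessRegressor` fit kernel hyperparameters to the labels during `fit` and return σ(x) via `predict(..., return_std=True)`.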

Diagram Title: Active Learning Loop with Uncertainty Sampling

The Scientist's Toolkit: Essential Reagents and Materials

Table 3: Key Research Reagent Solutions for Molecular Generation and Optimization

| Tool Category | Specific Examples & Resources | Primary Function in Research |
|---|---|---|
| Generative Models | VAE (with cyclical annealing) [23], JT-VAE [25], Generative Diffusion Models [29] | Maps discrete molecular structures to a continuous latent space for efficient exploration and generation. |
| Optimization Algorithms | Proximal Policy Optimization (PPO) [23], Genetic Algorithms [29], Bayesian Optimization (Gaussian Processes) [24] [25] | Executes the search strategy within the latent space or molecular space to find candidates with optimal properties. |
| Property Predictors | Graph Neural Networks (GNNs) [26], Fully-Connected Networks on Molecular Descriptors [26], Docking Score Simulations | Provides the reward signal for optimization by predicting molecular properties from structure. |
| Uncertainty Quantification (UQ) | Model Ensembles [26], Monte Carlo Dropout (MCDO) [26], Gaussian Process Variance [27] [28] | Estimates the model's uncertainty for its predictions, which drives the selection of samples in active learning. |
| Chemical Validation & Featurization | RDKit [23], MORDRED Descriptors, Morgan Fingerprints [27] | Handles fundamental cheminformatics tasks: validity checks, similarity calculations, and descriptor generation. |
| Datasets | ZINC [23], ChEMBL [29], QM9 [29], PubChem [26] | Provides large-scale, publicly available molecular data for pre-training generative and predictive models. |

Frameworks and Real-World Applications: Integrating GPT with Active Learning Cycles

Generative Pre-trained Transformer (GPT) models are revolutionizing molecular generation by providing a powerful framework for designing novel drug-like compounds. These models leverage their foundational ability to process sequential data to navigate the vastness of chemical space, estimated to contain over 10^60 feasible compounds, thereby identifying novel molecular structures with desired properties [30]. The integration of active learning paradigms, where AI models are trained iteratively by selecting the most informative data points for labeling, significantly enhances the efficiency of this exploration [31] [8]. This approach is particularly transformative for early-stage drug discovery, enabling researchers to accelerate the identification of hit and lead compounds against pathogenic target proteins, including those without existing inhibitors or those that have developed drug resistance [30] [17].

The architectural shift from traditional screening-based methods to generative AI, specifically GPT-based models, marks a critical evolution in computational drug design. These models move beyond searching existing libraries to creating entirely new molecular entities from scratch, conditioned on specific target protein information [30]. By framing molecular representations as linguistic sequences, these models can be pre-trained on large-scale chemical databases to learn fundamental chemical principles and then fine-tuned for target-aware generation, optimizing for critical parameters such as binding affinity, drug-likeness, and synthetic accessibility [30] [3].

Architectural Frameworks for Molecular Generation

The application of GPT architectures in molecular generation involves several sophisticated frameworks, each designed to translate the abstract concept of "language" into the domain of chemistry. The core innovation lies in treating molecular structures as sentences and their constituent atoms and bonds as tokens, enabling the model to learn the complex "grammar" and "syntax" of chemistry.

Molecular Representation as Language

The foundational step for any GPT-based molecular generator is the conversion of molecular structures into a sequential format. The Simplified Molecular Input Line Entry System (SMILES) is the most prevalent linguistic representation, where molecular graphs are linearized into strings of characters [30] [3]. For example, the benzene ring is represented as "c1ccccc1". This allows standard transformer-based decoder architectures, pre-trained on natural language corpora, to be effectively fine-tuned on millions of SMILES strings from databases like PubChem. The model learns to predict the next token in a SMILES sequence, thereby internalizing the rules of chemical validity and common structural motifs [30]. Alternative representations like SELFIES (Self-Referencing Embedded Strings) have been developed to guarantee syntactic validity in every generated string, further improving the robustness of generation [8].
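To make the "SMILES as language" idea concrete, the sketch below splits a SMILES string into chemically meaningful tokens with a regular expression of the kind widely used in chemical language models, then builds the shifted input/target pair used for next-token prediction. The regex coverage, the `<bos>`/`<eos>` markers, and the function names are assumptions for illustration; production tokenizers may differ.

```python
import re

# Bracket atoms, two-letter halogens, aromatic atoms, bonds, branches,
# and ring-closure digits (including %NN).
SMILES_REGEX = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize(smiles):
    """Split a SMILES string into tokens; fail loudly on unrecognized characters."""
    tokens = SMILES_REGEX.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens


def next_token_pairs(smiles, bos="<bos>", eos="<eos>"):
    """Teacher-forcing pair: the model reads seq[:-1] and learns to predict seq[1:]."""
    seq = [bos] + tokenize(smiles) + [eos]
    return seq[:-1], seq[1:]


print(next_token_pairs("CO"))  # (['<bos>', 'C', 'O'], ['C', 'O', '<eos>'])
```

The lossless-join check matters because `re.findall` silently skips characters the pattern does not match; without it, an unsupported atom would be dropped from the training sequence.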

Key Architectural Components

Advanced molecular generators build upon this base by incorporating additional components for conditional generation, particularly for structure-based drug design.

- Compound Decoder: This is the core GPT-like model, typically a transformer decoder. It is responsible for the autoregressive generation of the SMILES string, token-by-token [30] [3].

- Protein Encoder: To enable target-aware generation, a protein encoder processes the structural information of the target protein's binding pocket. This module, often also a transformer, encodes the 3D coordinates and sequential amino acid data into a context vector [30] [3].

- Cross-Attention Mechanism: This critical module connects the protein encoder to the compound decoder. It allows the generative process to be "conditioned" on the target protein information, ensuring that the generated molecules are geometrically and chemically complementary to the binding pocket [30].

- Contextual Encoder (for Refinement): Some architectures, like TamGen, incorporate a Variational Autoencoder (VAE) to encode a seeding compound. This allows for the refinement and optimization of existing molecules rather than generating entirely new ones from scratch, which is invaluable for lead optimization campaigns [30].
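The cross-attention step can be illustrated with single-head scaled dot-product attention, in which each SMILES token position (query) forms a weighted average of protein-pocket vectors (keys and values), with scores scaled by the square root of the vector dimension. This is a simplified sketch: the learned query/key/value projection matrices, multiple heads, and batching of a real transformer are omitted, and the toy vectors are hypothetical.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product cross-attention.

    queries: one vector per SMILES token (from the compound decoder).
    keys/values: one vector per pocket residue or atom (from the protein encoder).
    Returns, for each query, a weighted mix of the protein value vectors.
    """
    d = len(queries[0])
    outputs = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Two identical pocket keys receive equal attention, so the output averages their values.
print(cross_attention([[1.0, 0.0]], [[0.0, 1.0], [0.0, 1.0]], [[0.0], [2.0]]))  # [[1.0]]
```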

Representative Model Architectures

Recent research has produced several specialized GPT architectures for molecular generation:

- TamGen (Target-aware Molecular Generation): Employs a GPT-like chemical language model and integrates a protein encoder and a contextual VAE encoder. This enables both de novo generation and seed-based refinement of compounds, and the model has shown practical success by identifying 14 compounds with inhibitory activity against the Mycobacterium tuberculosis ClpP protease [30].

- 3DSMILES-GPT: A token-only framework that represents both 2D and 3D molecular information as linguistic expressions. It overcomes a key limitation of 2D generation by explicitly incorporating 3D atomic coordinates (e.g., Cartesian xyz tokens) into the sequence, allowing the model to learn and generate physically plausible 3D conformations directly within target protein pockets [3].

- Active Learning-Based Models: As demonstrated by researchers at the University of Chicago, a streamlined GPT model can be coupled with an active learning loop. Starting from a minimal dataset (e.g., 58 data points), the model iteratively proposes candidates, which are then validated through real-world experiments (e.g., battery cycling). The experimental results are fed back into the model, refining its predictions and enabling the exploration of a massive chemical space (1 million electrolytes) with high efficiency [31].

Performance and Benchmarking

The efficacy of GPT-based molecular generators is quantitatively assessed against a suite of metrics that evaluate the quality, practicality, and binding potential of the generated compounds. Benchmarking is typically performed on curated datasets like CrossDocked2020, which contains protein-ligand structural pairs [30].

Table 1: Key Performance Metrics for Molecular Generation Models

| Metric | Description | Interpretation |
|---|---|---|
| Docking Score | Estimated binding affinity to the target protein (e.g., via AutoDock Vina) [30]. | More negative scores indicate stronger predicted binding. |
| QED (Quantitative Estimate of Drug-likeness) | A measure of drug-likeness based on molecular properties [3] [8]. | Score between 0 and 1; higher values are more drug-like. |
| SAS (Synthetic Accessibility Score) | Estimate of the ease of synthesizing a compound [30] [3]. | Lower scores indicate easier synthesis. |
| Lipinski's Rule of Five | A set of rules to evaluate if a compound has properties suitable for an oral drug [30]. | Fewer violations are better. |
| Molecular Diversity | Derived from Tanimoto similarity between Morgan fingerprints [30]. | High diversity indicates a broad exploration of chemical space. |
| Validity | Percentage of generated SMILES strings that correspond to a valid chemical structure [3]. | High validity is a baseline requirement. |
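Two of the metrics above can be computed as follows. The sketch assumes descriptor values (molecular weight, logP, hydrogen-bond donor and acceptor counts) have already been computed, for example with RDKit, and defines diversity as one minus the mean pairwise Tanimoto similarity, which is a common but not universal convention; fingerprints are represented as sets of on-bit indices and the function names are hypothetical.

```python
from itertools import combinations


def lipinski_violations(mw, logp, h_donors, h_acceptors):
    """Count Rule-of-Five violations: MW > 500 Da, logP > 5, >5 H-bond donors, >10 acceptors."""
    return sum([mw > 500, logp > 5, h_donors > 5, h_acceptors > 10])


def tanimoto(fp_a, fp_b):
    """Tanimoto similarity of two fingerprints given as sets of on-bit indices."""
    if not fp_a and not fp_b:
        return 1.0
    shared = len(fp_a & fp_b)
    return shared / (len(fp_a) + len(fp_b) - shared)


def internal_diversity(fingerprints):
    """1 - mean pairwise Tanimoto similarity across a generated set."""
    pairs = list(combinations(fingerprints, 2))
    if not pairs:
        raise ValueError("need at least two molecules")
    return 1.0 - sum(tanimoto(a, b) for a, b in pairs) / len(pairs)


print(lipinski_violations(mw=650.0, logp=6.2, h_donors=2, h_acceptors=11))  # 3
print(internal_diversity([{1, 2}, {1, 2}, {3}]))
```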

Reported comparisons indicate that GPT-based models outperform other deep learning approaches, such as generative adversarial networks (GANs) and diffusion models, across multiple metrics. For instance, TamGen achieved top-tier performance in docking score, QED, and SAS, balancing high binding affinity with drug-likeness and synthetic feasibility [30]. 3DSMILES-GPT reported a 33% improvement in QED while maintaining state-of-the-art binding affinity, with a generation speed of approximately 0.45 seconds per molecule, roughly three times faster than previous methods [3]. One contributing factor is the tendency of GPT-based models to generate molecules with fewer fused ring systems, a structural feature that correlates with better synthetic accessibility and lower toxicity and makes the outputs more closely resemble FDA-approved drugs [30].

Experimental Protocols

Integrating GPT-based molecular generators into a practical research pipeline involves a multi-stage process that combines computational design with experimental validation.

Protocol 1: Target-Conditioned De Novo Molecular Generation

This protocol is for generating novel compounds against a specific protein target.

- Target Preparation: Obtain the 3D structure of the target protein (e.g., from PDB). Define the binding pocket coordinates, typically around a known ligand or from functional annotation.

- Model Configuration: Load a pre-trained target-aware GPT model (e.g., TamGen, 3DSMILES-GPT). Input the processed binding pocket information into the model's protein encoder.

- Conditional Generation: Generate a library of candidate molecules (e.g., 100-1000 compounds) conditioned on the target pocket. Use sampling techniques (e.g., top-k, nucleus sampling) to control the diversity of the output.

- In Silico Screening:

- Filtering: Filter generated molecules for chemical validity and remove duplicates.

- Docking: Perform molecular docking (e.g., with AutoDock Vina) to predict binding poses and scores [30].

- Property Prediction: Calculate ADMET (Absorption, Distribution, Metabolism, Excretion, Toxicity) properties, QED, and SAS to shortlist the most promising candidates [30] [8].

- Experimental Validation:

- Synthesis: Procure or synthesize the shortlisted compounds.

- Biochemical Assay: Test the compounds for biological activity against the target. A primary assay (e.g., an inhibition assay like IC50 determination) confirms potency [30].

- Secondary Assays: Confirm the mechanism of action and specificity through counter-screens and cellular assays.
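The top-k and nucleus sampling mentioned in the conditional generation step can be sketched over a single next-token distribution. This illustration assumes the model's output is available as a token-to-probability mapping; the function names and toy probabilities are hypothetical, and top-k is applied before top-p here.

```python
import random


def filter_distribution(probs, top_k=0, top_p=1.0):
    """Apply top-k, then nucleus (top-p) filtering to a next-token distribution.

    probs: dict token -> probability. Returns a renormalized dict.
    top_k=0 disables top-k; top_p=1.0 disables nucleus filtering.
    """
    items = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    if top_k > 0:
        items = items[:top_k]
    total = sum(p for _, p in items)
    kept, cumulative = [], 0.0
    for token, p in items:
        kept.append((token, p))
        cumulative += p / total
        # Nucleus: smallest set of most likely tokens whose mass reaches top_p.
        if cumulative >= top_p:
            break
    mass = sum(p for _, p in kept)
    return {token: p / mass for token, p in kept}


def sample_next_token(probs, top_k=0, top_p=1.0, rng=None):
    """Draw one token from the filtered, renormalized distribution."""
    rng = rng or random.Random()
    filtered = filter_distribution(probs, top_k, top_p)
    tokens = list(filtered)
    return rng.choices(tokens, weights=[filtered[t] for t in tokens], k=1)[0]


next_token_probs = {"C": 0.5, "c": 0.3, "N": 0.15, "O": 0.05}  # toy model output
print(filter_distribution(next_token_probs, top_p=0.75))
```

Lower `top_p` or `top_k` values concentrate sampling on the most likely tokens (fewer invalid or unusual strings, less diversity); higher values widen exploration.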

Protocol 2: Active Learning-Driven Molecular Optimization

This protocol uses iterative feedback between the model and experiments to efficiently optimize molecules, ideal for scenarios with limited initial data [31].

- Initialization: Start with a small seed dataset of molecules with experimentally measured properties (e.g., 50-100 data points).

- Model Training: Train or fine-tune the GPT model on the initial dataset.

- Active Learning Loop:

- Candidate Proposal: The model proposes a batch of new molecules (e.g., 10-20), often selected from a larger generated set based on high predicted performance and high model uncertainty.

- Experimental Evaluation: Synthesize and test the proposed molecules in a relevant assay (e.g., battery cycle life test for electrolytes, IC50 for inhibitors) to obtain ground-truth data [31].

- Data Augmentation: Add the new experimental results (both successes and failures) to the training dataset.

- Model Retraining: Update the GPT model with the augmented dataset to refine its predictive capability.

- Termination: Repeat the loop until a performance threshold is met or the computational or experimental budget is exhausted.
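One way to combine high predicted performance and high uncertainty in the candidate-proposal step is an upper-confidence-bound score, mean + κ × standard deviation, computed across an ensemble of predictors. This is an assumed acquisition rule for illustration, not necessarily the rule used in [31]; `select_batch`, the ensemble layout, and `kappa` are hypothetical.

```python
import statistics


def select_batch(candidates, ensemble_predictions, batch_size, kappa=1.0):
    """Return the batch_size candidates with the highest mean + kappa * std.

    ensemble_predictions[m][i] is model m's predicted property for candidate i;
    the spread across models serves as the uncertainty estimate.
    """
    scored = []
    for i, candidate in enumerate(candidates):
        preds = [model_preds[i] for model_preds in ensemble_predictions]
        mu = statistics.fmean(preds)
        sigma = statistics.pstdev(preds)
        scored.append((mu + kappa * sigma, candidate))
    # Highest acquisition score first; the sort is stable for ties.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [candidate for _, candidate in scored[:batch_size]]


preds = [[1.0, 0.5, 0.0],  # model 1: predictions for candidates A, B, C
         [1.0, 1.5, 0.0]]  # model 2
print(select_batch(["A", "B", "C"], preds, batch_size=2))  # ['B', 'A']
```

Setting `kappa` to 0 reduces the rule to pure exploitation of the predicted mean, while large values approach pure uncertainty sampling.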

Protocol 3: Seed-Based Compound Refinement

This protocol is used for lead optimization, starting from a known active compound.

- Seed Compound Input: Provide the SMILES string of the starting molecule to the model (e.g., using the contextual encoder in TamGen) [30].

- Conditional Generation: The model generates a focused library of structural analogs or refined molecules based on the seed.

- Evaluation: The generated analogs are evaluated in silico and experimentally, as in Protocol 1, to establish structure-activity relationships (SAR) and identify improved derivatives.

The Scientist's Toolkit: Essential Research Reagents and Solutions

Table 2: Key Research Reagents and Computational Tools for GPT-Driven Molecular Generation

| Item / Reagent | Function / Role | Example Sources/Tools |
|---|---|---|
| Target Protein Structure | Provides the 3D structural context for conditional generation. | PDB (Protein Data Bank) |
| Chemical Databases | Source of millions of SMILES for pre-training and establishing baseline chemical knowledge. | PubChem, ZINC |
| Benchmark Datasets | Standardized datasets for training and fair benchmarking of models. | CrossDocked2020 [30] |
| Docking Software | Predicts the binding pose and affinity of generated molecules to the target. | AutoDock Vina [30] |
| Property Calculation Toolkit | Computes key molecular properties like QED, SAS, LogP. | RDKit [30] |
| GPU Computing Resources | Accelerates the training and inference of large GPT models. | NVIDIA A6000 GPU [30] |
| Biochemical Assay Kits | For experimental validation of generated compounds' activity (e.g., inhibition). | IC50 assay kits [30] |

Workflow Visualization

The following diagrams illustrate the integrated computational and experimental workflow for active learning-driven molecular generation, as detailed in the protocols, and the architecture of the GPT-based generator.

Diagram 1: Active Learning-Driven Molecular Generation

Diagram 2: GPT-Based Molecular Generator Architecture

The application of generative artificial intelligence, particularly GPT-based models, to de novo molecular design holds transformative potential for accelerating drug discovery. A significant challenge, however, is the poor generalization of molecular property predictors, which often fail to evaluate molecules outside their training distribution accurately. This limitation keeps generative models from reliably proposing novel, high-performing candidates. This document details a protocol for implementing an active learning (AL) feedback loop that iteratively refines generative models using high-fidelity simulation data, thereby enabling extrapolation beyond known chemical space.

The effectiveness of the active learning methodology is demonstrated by its ability to generate molecules with properties that extrapolate beyond the training data. The following tables summarize key quantitative outcomes from published studies.

Table 1: Performance Comparison of Molecular Generation Methodologies [32]

| Methodology | Property Extrapolation (Std. Deviations) | Out-of-Distribution Classification Accuracy | Proportion of Stable Molecules |
|---|---|---|---|
| Active Learning Pipeline (Proposed) | Up to 0.44 | Improved by 79% | 3.5x higher than next-best |
| Standard Generative Model | Within training range | Baseline | Baseline |
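The "Property Extrapolation (Std. Deviations)" column is easiest to read as a normalized gap. The sketch below assumes one plausible definition: the amount by which the best generated property value exceeds the best training value, divided by the standard deviation of the training values. The exact definition used in [32] may differ, and the numbers in the usage example are toy values, not data from that study.

```python
import statistics
from typing import Sequence


def extrapolation_in_sd(train_values: Sequence[float], generated_values: Sequence[float]) -> float:
    """Assumed definition: (best generated value - best training value) / training std. dev.

    Positive values mean the generator proposed molecules beyond the best one it was trained on;
    negative values mean it stayed within the training range.
    """
    spread = statistics.stdev(train_values)  # sample standard deviation of the training property
    return (max(generated_values) - max(train_values)) / spread


if __name__ == "__main__":
    train = [1.0, 2.0, 3.0, 4.0, 5.0]  # toy property values
    generated = [4.0, 5.5]
    print(round(extrapolation_in_sd(train, generated), 3))
```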

Table 2: Application of Active Learning to Specific Protein Targets [33]

| Protein Target | Key Experimental Findings |
|---|---|
| c-Abl Kinase (with known inhibitors) | The fine-tuned model learned to generate molecules similar to FDA-approved inhibitors and reproduced two of them exactly, without prior knowledge of their existence. |
| HNH domain of Cas9 (no commercial inhibitors) | The methodology proved effective for a target without commercially available small-molecule inhibitors, demonstrating its utility in novel target exploration. |

Experimental Protocols

Protocol 1: Closed-Loop Active Learning for Molecular Generation

1. Objective: To iteratively refine a GPT-based molecular generator for a specific protein target, enabling the discovery of novel, stable, and high-affinity molecules.

2. Reagent & Computational Solutions:

- Generative Model: A pre-trained GPT-based model for molecular string generation (e.g., SMILES or SELFIES) [33].

- Initial Training Data: A publicly available chemical database (e.g., ZINC, ChEMBL).

- Property Predictor: A machine learning model (e.g., Random Forest, Neural Network) for predicting properties of interest (e.g., binding affinity, solubility).

- High-Fidelity Simulator: Quantum chemical simulation software (e.g., Gaussian, ORCA) or molecular docking software (e.g., AutoDock Vina, GOLD) for target-specific validation [32].

- Software Framework: The open-source ChemSpaceAL Python package provides a computational implementation of this methodology [33].

3. Methodology:

   1. Initial Generation: Sample a large set of candidate molecules from the pre-trained generative model.
   2. Initial Property Prediction: Use the property predictor to score and rank the generated candidates based on the desired properties.
   3. Candidate Selection & Validation: Select a diverse subset of the top-ranked candidates for high-fidelity validation using the quantum chemical or docking simulator.
   4. Active Learning Feedback: Incorporate the new high-fidelity simulation data (molecule-property pairs) into the training dataset for the property predictor and/or the generative model.
   5. Model Retraining: Retrain or fine-tune the generative model and property predictor on the updated, augmented dataset.
   6. Iteration: Repeat steps 1-5 for multiple cycles. With each iteration, the models become increasingly accurate and specialized for the target chemical space, learning to propose molecules with extrapolated properties [32].
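Step 3 calls for a diverse subset of the top-ranked candidates. One common way to do this, shown here as an assumption rather than as the exact procedure implemented in ChemSpaceAL, is to keep the highest-scoring molecules and then apply greedy MaxMin selection in a descriptor space. The descriptor vectors are hypothetical inputs (for example, physicochemical descriptors computed with RDKit).

```python
import math
from typing import List, Sequence, Tuple

# (identifier or SMILES, predicted score, descriptor vector); the descriptors are assumed inputs.
Candidate = Tuple[str, float, Sequence[float]]


def select_diverse_top(candidates: List[Candidate], n_top: int, n_pick: int) -> List[str]:
    """Rank by predicted score, then pick a diverse subset of the top n_top with greedy MaxMin."""
    top = sorted(candidates, key=lambda c: c[1], reverse=True)[:n_top]
    if not top or n_pick <= 0:
        return []
    picked = [top[0]]  # seed the subset with the best-scoring candidate
    remaining = top[1:]
    while remaining and len(picked) < n_pick:
        # MaxMin step: choose the candidate whose closest already-picked neighbour is farthest away.
        best_idx = max(
            range(len(remaining)),
            key=lambda i: min(math.dist(remaining[i][2], p[2]) for p in picked),
        )
        picked.append(remaining.pop(best_idx))
    return [c[0] for c in picked]


if __name__ == "__main__":
    toy = [
        ("a", 0.90, (0.0, 0.0)),
        ("b", 0.85, (0.1, 0.0)),  # near-duplicate of "a" in descriptor space
        ("c", 0.80, (5.0, 5.0)),
        ("d", 0.10, (10.0, 10.0)),  # diverse but poorly scored
    ]
    print(select_diverse_top(toy, n_top=3, n_pick=2))
```

Ranking first and diversifying second keeps every selected molecule promising while avoiding spending scarce docking or quantum-chemistry time on near-duplicates.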

Protocol 2: Experimental Validation of Generated Molecules

1. Objective: To experimentally validate the synthesizability, stability, and biological activity of molecules generated by the active learning pipeline.

2. Research Reagent Solutions:

- Chemical Synthesis: Appropriate starting materials, catalysts, and solvents for solid-phase or solution-phase synthesis.

- Purification & Analysis: High-Performance Liquid Chromatography (HPLC) system for purification; Liquid Chromatography-Mass Spectrometry (LC-MS) and Nuclear Magnetic Resonance (NMR) spectroscopy for structural confirmation.

- Thermodynamic Stability Assay: Differential Scanning Calorimetry (DSC) to measure thermal stability.

- Biological Activity Assay: Target-specific assay kits (e.g., kinase activity assay for c-Abl inhibition); buffer solutions and recombinant protein for in vitro binding or functional studies [33].

3. Methodology:

   1. Synthesis & Characterization:
      - Synthesize the top-ranking molecules identified in the final AL cycle.
      - Purify the compounds and confirm their structural identity and purity using LC-MS and NMR.
   2. Stability Testing:
      - Perform thermodynamic stability analysis using DSC to confirm the model's prediction of enhanced stability [32].
   3. In Vitro Biological Assay:
      - Test the synthesized compounds in a dose-response format using a target-specific biochemical assay (e.g., IC₅₀ determination for an enzyme inhibitor).
      - Compare the activity of the newly generated molecules with that of known reference compounds.
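For the dose-response step, a quick first estimate of IC₅₀ can be obtained by interpolating, on a logarithmic concentration axis, between the two tested concentrations that bracket 50% inhibition. This is a simplified sketch; reported values are usually obtained from a four-parameter logistic (Hill) fit, for example with SciPy's `curve_fit`. The toy data in the usage example are generated from an ideal curve with IC₅₀ = 1 µM.

```python
import math
from typing import Sequence


def estimate_ic50(concentrations: Sequence[float], inhibition_pct: Sequence[float]) -> float:
    """Log-linear interpolation of the concentration that gives 50% inhibition.

    Assumes inhibition rises with concentration and that 50% is bracketed by the tested doses.
    """
    points = sorted(zip(concentrations, inhibition_pct))
    for (c_lo, y_lo), (c_hi, y_hi) in zip(points, points[1:]):
        if y_lo <= 50.0 <= y_hi and y_hi > y_lo:
            frac = (50.0 - y_lo) / (y_hi - y_lo)
            log_ic50 = math.log10(c_lo) + frac * (math.log10(c_hi) - math.log10(c_lo))
            return 10 ** log_ic50
    raise ValueError("50% inhibition is not bracketed by the tested concentrations")


if __name__ == "__main__":
    conc_um = [0.1, 0.5, 2.0, 8.0]  # tested concentrations in µM (toy values)
    inhibition = [100 * c / (c + 1.0) for c in conc_um]  # ideal curve, IC50 = 1 µM, Hill slope 1
    print(round(estimate_ic50(conc_um, inhibition), 3))
```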

Workflow Visualization

The following diagrams, generated using Graphviz, illustrate the logical and experimental workflows described in the protocols.

Active Learning Feedback Loop

Experimental Validation Workflow

The Scientist's Toolkit: Key Research Reagents & Materials

Table 3: Essential Materials for Experimental Validation [33]

| Item | Function / Explanation |
|---|---|
| GPT-based Molecular Generator | The core AI model for generating novel molecular structures in string representation (e.g., SMILES). |
| Quantum Chemical Simulation Software | Provides high-fidelity, computationally derived data on molecular properties (e.g., thermodynamic stability, electronic structure) for the active learning feedback loop [32]. |
| Molecular Docking Suite | Predicts the binding pose and affinity of generated molecules against a protein target of interest; used for in silico validation and ranking. |
| c-Abl Kinase Protein & Assay Kit | Recombinant protein and a corresponding activity assay kit for experimentally validating the inhibitory activity of molecules generated against the c-Abl kinase target [33]. |
| Analytical Chemistry Instrumentation (LC-MS, NMR) | Essential for confirming the chemical structure, identity, and purity of molecules after they are generated in silico and synthesized in the lab. |

The discovery of novel battery electrolytes is traditionally a time- and resource-intensive process, often requiring the synthesis and testing of thousands of candidates to identify promising leads. However, a paradigm shift is underway through the integration of generative artificial intelligence (GenAI) and active learning frameworks. These approaches enable the efficient exploration of vast chemical spaces with minimal experimental data, dramatically accelerating the development of next-generation energy storage materials.

This application note details a methodology that identified high-performance battery electrolytes starting from only 58 initial data points. The approach combines an active learning model with experimental validation to navigate a virtual search space of one million potential electrolyte solvents [31]. The findings are contextualized within broader research on GPT-based molecular generation, demonstrating how generative AI models can be trained on limited datasets to produce novel, high-value molecular structures for specific technological applications [8].

Experimental Protocols & Methodologies

Active Learning Framework for Electrolyte Discovery

The core protocol employs an active learning framework that iteratively selects the most informative candidates for experimental testing, creating a closed-loop optimization system [31].

Procedure:

1. Initialization: Begin with a small, diverse set of 58 known electrolyte solvents as the initial training dataset. This seed data should encompass a range of chemical structures and performance characteristics relevant to the target application.

2. Model Training: Train a machine learning model (e.g., a Bayesian neural network or Gaussian process model) on the available data to predict battery performance metrics (e.g., discharge capacity, cycle life) from molecular descriptors or features.

3. Candidate Proposal & Selection: Use the trained model to screen a large virtual library (e.g., 1 million molecules). The model proposes candidates from this library based on:

   - High Predicted Performance: Molecules predicted to exceed current performance thresholds.
   - High Uncertainty: Regions of chemical space where the model's predictions are most uncertain, because evaluating these points maximizes knowledge gain.

4. Experimental Validation: Synthesize and test the top-ranked proposed electrolytes in real battery cells. The key performance indicator (KPI) is the measured cycle life of the assembled battery.

5. Iterative Model Refinement: Incorporate the new experimental results (both successful and unsuccessful) into the training dataset, retrain the model on the augmented data, and repeat steps 3-5 for several cycles (typically 7-10 campaigns).
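The selection rule in step 3 balances predicted performance against uncertainty. The sketch below illustrates it with an upper-confidence-bound (UCB) score, mean + kappa × spread, using a bootstrap ensemble of k-nearest-neighbour regressors as a lightweight, hypothetical stand-in for the Bayesian neural network or Gaussian process named above. The descriptor vectors, `kappa`, and batch size are illustrative assumptions, not settings from [31].

```python
import math
import random
import statistics
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], float]  # (descriptor vector, measured performance, e.g. cycle life)


def knn_predict(train: List[Example], x: Sequence[float], k: int) -> float:
    """Average performance of the k training points nearest to x (Euclidean distance)."""
    nearest = sorted(train, key=lambda ex: math.dist(ex[0], x))[:k]
    return statistics.fmean(y for _, y in nearest)


def ucb_select(
    train: List[Example],
    library: List[Sequence[float]],
    batch_size: int = 10,
    n_models: int = 8,
    k: int = 3,
    kappa: float = 1.0,
    seed: int = 0,
) -> List[int]:
    """Return library indices of the batch with the highest mean + kappa * ensemble spread."""
    rng = random.Random(seed)
    # Each bootstrap resample defines one ensemble member; their disagreement approximates uncertainty.
    resamples = [[rng.choice(train) for _ in train] for _ in range(n_models)]
    scored = []
    for idx, x in enumerate(library):
        preds = [knn_predict(sample, x, k) for sample in resamples]
        score = statistics.fmean(preds) + kappa * statistics.pstdev(preds)
        scored.append((score, idx))
    scored.sort(reverse=True)
    return [idx for _, idx in scored[:batch_size]]


if __name__ == "__main__":
    seed_data = [((float(i),), float(i)) for i in range(10)]  # toy 1-D descriptors
    virtual_library = [(-100.0,), (100.0,), (4.5,)]
    print(ucb_select(seed_data, virtual_library, batch_size=1))
```

Raising `kappa` pushes the batch toward uncertain regions (exploration); lowering it favours molecules already predicted to perform well (exploitation).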

Critical Step: The ultimate validation must be a real-world experiment. "The model suggested, 'Okay, go get an electrolyte in this chemical space,' then we actually built a battery with that electrolyte, and we cycled the battery to get the data. The ultimate experiment we care about is: Does this battery have long cycle life?" [31]

Generative AI for Molecular Generation

This protocol can be enhanced with generative models for de novo molecular design, moving beyond screening pre-defined libraries.

Procedure:

1. Model Selection: Employ a generative model architecture capable of operating in a low-data regime. Options include:

   - Reinforcement Learning (RL) in Latent Space: A pre-trained generative model (e.g., a Variational Autoencoder) provides a continuous latent representation of molecules. A reinforcement learning agent, trained for example with Proximal Policy Optimization (PPO), then navigates this space toward regions that correspond to molecules with the desired properties [23].
   - Physics-Informed Diffusion Models: Models such as MolEdit, a 3D molecular diffusion model that incorporates physical constraints (e.g., via a Boltzmann-Gaussian Mixture kernel), help ensure that generated structures are physically realistic and stable [34].

2. Conditional Generation: Condition the model on target properties for battery electrolytes, such as high ionic conductivity, electrochemical stability, and safety.

3. Evaluation and Filtering: Generate novel molecular structures and filter them with the active learning framework described above to select the most promising candidates for experimental testing.
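For GPT-style generators, conditioning on target properties is often done by prepending property tokens to the tokenized SMILES string, so that generation can later be prompted with the desired property tokens. The sketch below shows how such training sequences could be built. The regular expression is of the kind widely used for SMILES tokenization in chemical language models; the property names (`conductivity`, `oxidation_limit`), bin edges, and special tokens (`<sep>`, `<eos>`) are hypothetical.

```python
import bisect
import re
from typing import Dict, List, Sequence

# Regex SMILES tokenizer: bracket atoms, two-letter halogens, aromatic atoms, bonds, ring closures.
SMILES_TOKEN_PATTERN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|\/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)


def tokenize_smiles(smiles: str) -> List[str]:
    """Split a SMILES string into tokens, refusing strings with characters the pattern skips."""
    tokens = SMILES_TOKEN_PATTERN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens


def build_conditioned_sequence(
    smiles: str, properties: Dict[str, float], bin_edges: Dict[str, Sequence[float]]
) -> List[str]:
    """Prefix discretized property tokens (hypothetical format) to the SMILES tokens."""
    condition_tokens = [
        f"<{name}:{bisect.bisect_right(bin_edges[name], value)}>"  # bin index of the value
        for name, value in sorted(properties.items())
    ]
    return condition_tokens + ["<sep>"] + tokenize_smiles(smiles) + ["<eos>"]


if __name__ == "__main__":
    # Ethylene carbonate with toy property values and toy bin edges.
    print(build_conditioned_sequence(
        "C1COC(=O)O1",
        {"conductivity": 5.0, "oxidation_limit": 4.8},
        {"conductivity": [1.0, 10.0], "oxidation_limit": [4.0, 4.5, 5.0]},
    ))
```

Discretizing properties into bins keeps the vocabulary small; at generation time, the model is prompted with the desired property tokens followed by `<sep>`.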

Results & Data Analysis

The implementation of the active learning protocol led to the highly efficient discovery of new, high-performing electrolytes.

Key Performance Metrics

Table 1: Summary of Experimental Outcomes from the Active Learning Campaign [31]

| Metric | Initial State | Final Outcome | Improvement / Efficiency |
|---|---|---|---|
| Starting Data Points | 58 molecules | N/A | N/A |
| Virtual Search Space | 0 | 1,000,000 molecules explored | N/A |
| Active Learning Cycles | 0 | 7 campaigns completed | N/A |
| Electrolytes Tested | 58 (initial data) | ~10 tested per cycle | ~70 total experiments |
| Novel High-Performing Electrolytes Identified | 0 | 4 distinct new electrolytes | Hit rate of ~5.7% per tested candidate |
| Performance of New Electrolytes | Baseline (state-of-the-art) | Rival state-of-the-art electrolytes | Performance achieved with ~0.013% of the data required for exhaustive screening (~128 of 1,000,000 molecules) |
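The efficiency figures in this table follow from simple ratios. The sketch below recomputes them from the reported counts, assuming exactly 10 new electrolytes per campaign (the table gives ~10) and counting the 58 seed points as experimental data. With those assumptions it gives 70 new tests, a hit rate of about 5.7%, and about 0.013% of the one-million-molecule library measured.

```python
from typing import Dict


def campaign_summary(
    seed_points: int, campaigns: int, tested_per_campaign: int, hits: int, library_size: int
) -> Dict[str, float]:
    tested = campaigns * tested_per_campaign  # new experiments run by the AL loop (assumed exact)
    return {
        "tested": tested,
        "hit_rate": hits / tested,  # hits per newly tested candidate
        # Share of the virtual library that was ever measured (seed data + AL experiments).
        "fraction_of_library_measured": (seed_points + tested) / library_size,
    }


if __name__ == "__main__":
    s = campaign_summary(seed_points=58, campaigns=7, tested_per_campaign=10, hits=4, library_size=1_000_000)
    print(s["tested"], f"{s['hit_rate']:.1%}", f"{s['fraction_of_library_measured']:.4%}")
```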

Advantages Over Traditional Methods

The results demonstrate a fundamental advantage over traditional screening or combinatorial chemistry. By leveraging an AI-guided, hypothesis-generating approach, the method achieved several critical outcomes: