E(3)-Equivariant GNNs for Molecules: The Next Frontier in AI-Driven Drug Discovery


Abstract

This article provides a comprehensive exploration of E(3)-equivariant Graph Neural Networks (GNNs) for molecular modeling, tailored for researchers, scientists, and drug development professionals. We begin by establishing the foundational theory of E(3) equivariance and its critical importance for geometric deep learning in chemistry. We then dissect the core architectures and methodologies of leading frameworks and models, such as the e3nn library, NequIP, and SEGNN, illustrating their application to key tasks like quantum property prediction, molecular dynamics, and structure-based drug design. Practical guidance is offered for troubleshooting common training challenges, data bottlenecks, and computational constraints. Finally, we present a rigorous comparative analysis of model performance on benchmark datasets, validating their superiority over invariant models and their real-world impact in accelerating biomedical discovery.

What Are E(3)-Equivariant GNNs and Why Are They Revolutionary for Molecular Science?

The development of machine learning for molecular property prediction and generation is undergoing a fundamental paradigm shift. The field is moving from models that are invariant to rotations and translations (E(3)-invariant) to those that are equivariant to these geometric transformations (E(3)-equivariant). This shift, centered on E(3)-equivariant graph neural networks (GNNs), provides a principled geometric framework that directly incorporates the 3D structure of molecules, leading to significant improvements in accuracy and data efficiency for tasks in computational chemistry and drug discovery.

Theoretical Foundation and Comparative Performance

E(3)-equivariant networks explicitly operate on geometric tensors (scalars, vectors, higher-order tensors) and guarantee that each layer commutes with the action of the E(3) group (rotations, translations, reflections). In other words, transforming the input and then applying a layer gives the same result as applying the layer and then transforming its output. This intrinsic geometric awareness allows for a more physically faithful representation of molecular systems.

Table 1: Performance Comparison of Invariant vs. Equivariant Models on Quantum Chemical Benchmarks

| Model Archetype | Example Model | QM9 μ (Dipole Moment) MAE (D) | QM9 α (Isotropic Polarizability) MAE (a₀³) | MD17 Ethanol Energy MAE | OC20 Adsorption Energy MAE (eV) |
|---|---|---|---|---|---|
| Invariant GNN | SchNet | 0.033 | 0.235 | 0.100 | 0.68 |
| Invariant GNN | DimeNet++ | 0.029 | 0.044 | 0.015 | 0.38 |
| Equivariant GNN | NequIP | 0.012 | 0.033 | 0.006 | 0.28 |
| Equivariant GNN | SEGNN | 0.014 | 0.035 | 0.008 | 0.31 |

Note: Data aggregated from recent literature (2022-2024). MAE = Mean Absolute Error. Lower is better. QM9, MD17, and OC20 are standard benchmarks for molecular and catalyst property prediction.

Protocol: Implementing an E(3)-Equivariant GNN for Molecular Property Prediction

This protocol details the implementation of a basic E(3)-equivariant GNN using the e3nn or TorchMD-NET frameworks for predicting molecular dipole moments (a vector property).

Materials & Setup

- Software Environment: Python 3.9+, PyTorch 1.12+, CUDA 11.6 (for GPU acceleration).

- Libraries: Install `torch`, `torch_scatter`, `torch_geometric`, `e3nn`, `ase` (Atomic Simulation Environment), and `rdkit`.

- Dataset: QM9, accessible via `torch_geometric.datasets.QM9`.

Procedure

Step 1: Data Preparation and Geometric Graph Construction

- Load the QM9 dataset. Each data point contains atomic numbers (`z`), 3D coordinates (`pos`), and target properties (`y`).

- For each molecule, define a graph whose nodes are atoms, and connect every pair of atoms that lie within a cutoff radius (e.g., 5.0 Å). Edge attributes should include the displacement vector `r_ij = pos_j - pos_i` and its length.

- Convert atomic numbers into initial node features. Equivariant models typically embed them as scalar (l=0) features. Higher-order (l>0) channels start empty and are populated by the first convolution, which couples node features with spherical harmonics of the edge vectors.

- Normalize target properties (e.g., dipole moment) across the dataset, using statistics from the training split.
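The graph construction in Step 1 can be sketched in plain Python. This is a minimal illustration under stated assumptions. Coordinates are in Å, the neighbor search is a brute-force O(N²) loop, and the function name `radius_graph` is our own. In practice a vectorized routine, for example `radius_graph` from the `torch_cluster` package, would replace the double loop.

```python
import math


def radius_graph(pos, cutoff=5.0):
    """Directed radius graph over atoms (illustrative O(N^2) version).

    pos: list of (x, y, z) coordinates in Å.
    Returns (i, j, r_ij, d_ij) tuples for every ordered pair i != j with
    d_ij <= cutoff, where r_ij = pos[j] - pos[i] and d_ij = |r_ij|.
    """
    edges = []
    for i, p_i in enumerate(pos):
        for j, p_j in enumerate(pos):
            if i == j:
                continue  # no self-loops
            r_ij = tuple(b - a for a, b in zip(p_i, p_j))
            d_ij = math.sqrt(sum(c * c for c in r_ij))
            if d_ij <= cutoff:
                edges.append((i, j, r_ij, d_ij))
    return edges


# Usage: the third atom lies beyond the 5 Å cutoff from both others.
coords = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 7.0, 0.0)]
for i, j, r_ij, d_ij in radius_graph(coords):
    print(i, j, r_ij, round(d_ij, 3))
```

Both directions of each edge are kept because message passing aggregates incoming messages at each node separately.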

Step 2: Model Architecture Definition

- Embedding Layer: Map atomic numbers to a multi-channel geometric feature consisting of scalars and vectors.

- Equivariant Convolution Layers:

  a. Compute the spherical harmonic expansion `Y^l(r_ij)` of each edge vector `r_ij`.

  b. Combine node features with these spherical harmonics through tensor products to perform a convolution. Options include `e3nn.o3.FullTensorProduct`, or the weighted `e3nn.o3.FullyConnectedTensorProduct` with weights produced from edge lengths by a radial network. Clebsch-Gordan coefficients constrain this operation so that it remains equivariant.

  c. Apply a gated nonlinearity, in which scalar gates multiply the equivariant features.

  d. Optionally, apply an equivariant layer normalization.

- Stack 4-6 such convolution layers.

- Equivariant Output Head: For a scalar target (e.g., energy), read out the invariant channels (l=0 features, or invariants such as vector norms) and sum over atoms. For a vector target (e.g., dipole), output an l=1 feature per atom and sum over atoms. Sum pooling preserves equivariance. QM9 stores only the magnitude of the dipole moment, so the norm of the predicted vector is what gets compared with the target.
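The sketch below shows one possible vector head. It sums per-atom contributions in the spirit of charge-plus-atomic-dipole readouts. The function name, the split into predicted charges `q_i` and atomic dipoles `mu_i`, and the use of the geometric centre (rather than the centre of mass) are assumptions for illustration. Centring the positions keeps the output translation-invariant even when the predicted charges do not sum exactly to zero.

```python
import math


def dipole_readout(pos, charges, atomic_dipoles):
    """Hypothetical equivariant dipole head.

    pos:            per-atom positions (x, y, z)
    charges:        per-atom scalar outputs q_i (l = 0 channel)
    atomic_dipoles: per-atom vector outputs mu_i (l = 1 channel)

    Returns mu = sum_i [q_i * (r_i - c) + mu_i], with c the geometric centre,
    together with |mu|, the quantity QM9 stores as its dipole target.
    """
    n = len(pos)
    centre = [sum(p[k] for p in pos) / n for k in range(3)]
    mu = [0.0, 0.0, 0.0]
    for r, q, m in zip(pos, charges, atomic_dipoles):
        for k in range(3):
            mu[k] += q * (r[k] - centre[k]) + m[k]
    return mu, math.sqrt(sum(c * c for c in mu))


# Usage: a toy two-atom "molecule" along x, then the same molecule rotated onto y.
mu_x, norm_x = dipole_readout([(0, 0, 0), (1, 0, 0)], [-0.5, 0.5], [(0, 0, 0)] * 2)
mu_y, norm_y = dipole_readout([(0, 0, 0), (0, 1, 0)], [-0.5, 0.5], [(0, 0, 0)] * 2)
print(mu_x, mu_y, norm_x, norm_y)
```

Rotating the input positions (and any predicted atomic dipoles) rotates `mu` in the same way. Its norm, which is the QM9 target, does not change.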

Step 3: Training Loop

- Use a Mean Squared Error (MSE) loss. For vector outputs, ensure the loss function is computed on the Cartesian components.

- Employ the AdamW optimizer with a learning rate scheduler (e.g., ReduceLROnPlateau).

- The key difference from models that take raw Cartesian coordinates without built-in symmetry: data augmentation via random rotation of input structures is neither required nor beneficial, because the model's predictions transform correctly by design.

Validation

Validate model performance on a held-out test set. The equivariance can be empirically verified by rotating all input structures in the test set by a random rotation matrix R and confirming that scalar predictions remain unchanged and vector/tensor predictions are transformed by R.
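The rotation test above needs uniformly random rotations. The minimal sketch below assumes plain Python lists for matrices and uses a hypothetical `toy_model` as a stand-in for a trained network. It samples a unit quaternion from four Gaussians, converts it to a rotation matrix, and then reports how far the model deviates from invariance (scalar output) and from equivariance (vector output).

```python
import math
import random


def random_rotation(rng=random):
    """Uniformly random rotation matrix (det = +1) from a random unit quaternion.

    Four i.i.d. standard normals, once normalised, give a quaternion that is
    uniform on the unit 3-sphere, which corresponds to a uniform rotation.
    """
    q = [rng.gauss(0.0, 1.0) for _ in range(4)]
    norm = math.sqrt(sum(c * c for c in q))
    w, x, y, z = (c / norm for c in q)
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - z * w), 2 * (x * z + y * w)],
        [2 * (x * y + z * w), 1 - 2 * (x * x + z * z), 2 * (y * z - x * w)],
        [2 * (x * z - y * w), 2 * (y * z + x * w), 1 - 2 * (x * x + y * y)],
    ]


def rotate(R, v):
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]


def toy_model(pos):
    """Hypothetical stand-in for a trained model: returns (scalar, vector)."""
    n = len(pos)
    c = [sum(p[k] for p in pos) / n for k in range(3)]
    rel = [[p[k] - c[k] for k in range(3)] for p in pos]
    scalar = sum(math.dist(pos[i], pos[j]) for i in range(n) for j in range(i + 1, n))
    vector = [sum(r[k] * sum(x * x for x in r) for r in rel) for k in range(3)]
    return scalar, vector


def equivariance_gaps(model, pos, R):
    """Largest deviations from f(Rx) = f(x) (scalar) and g(Rx) = R g(x) (vector)."""
    s, v = model(pos)
    s_rot, v_rot = model([rotate(R, p) for p in pos])
    vector_gap = max(abs(a - b) for a, b in zip(rotate(R, v), v_rot))
    return abs(s - s_rot), vector_gap


R = random_rotation(random.Random(0))
molecule = [(0.0, 0.0, 0.0), (1.1, 0.0, 0.0), (-0.3, 1.0, 0.2), (0.4, -0.6, 0.9)]
print(equivariance_gaps(toy_model, molecule, R))
```

For a correctly equivariant model, both gaps should sit at floating-point round-off level.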

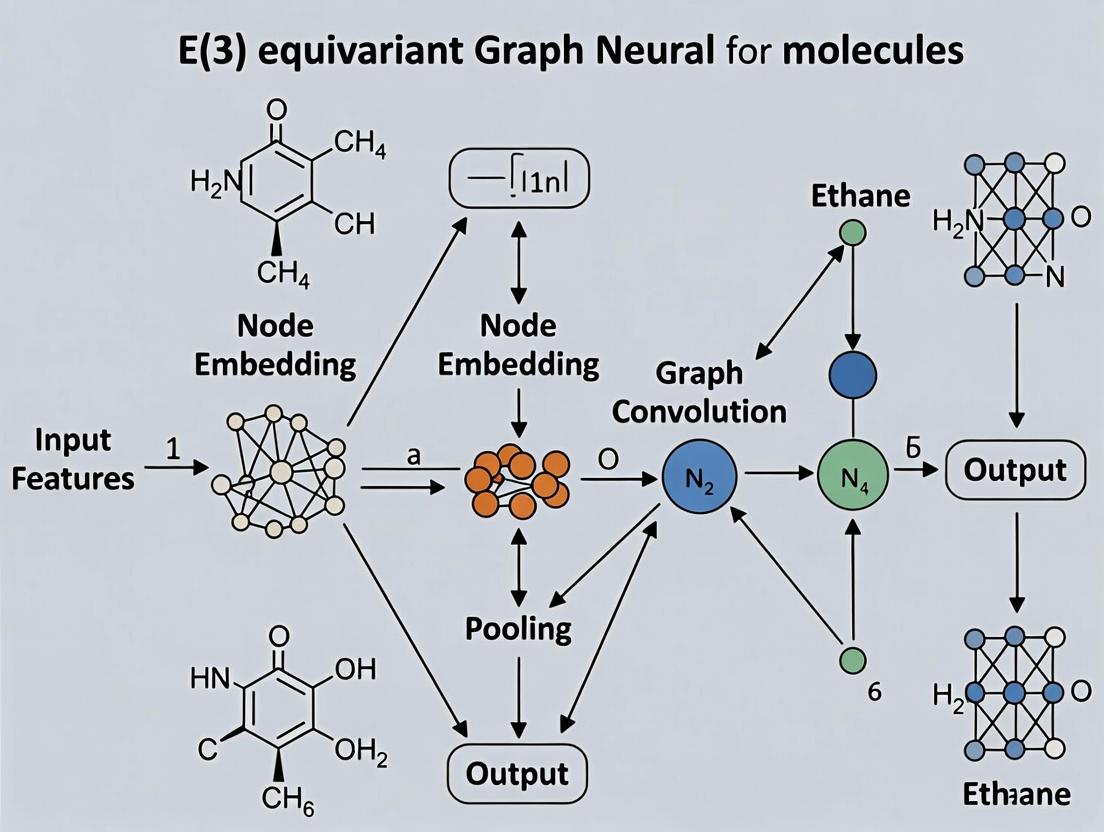

Title: E(3)-Equivariant GNN Training Workflow

The Scientist's Toolkit: Key Reagents & Software for E(3)-Equivariant Research

Table 2: Essential Research Toolkit for E(3)-Equivariant Molecular ML

| Item | Category | Function & Relevance |
|---|---|---|
| e3nn Library | Software | Core library for building E(3)-equivariant neural networks using irreducible representations and spherical harmonics. |
| TorchMD-NET | Software | A PyTorch framework implementing equivariant architectures such as the Equivariant Transformer (ET) for molecular dynamics. |
| OC20 Dataset | Data | Large-scale dataset of catalyst relaxations; a key benchmark for 3D equivariant models on complex materials. |
| QM9/MD17 Datasets | Data | Standard quantum chemistry benchmarks for small organic molecule properties and forces. |
| Spherical Harmonics | Mathematical Tool | Basis functions for representing functions on a sphere; fundamental for building steerable equivariant filters. |
| Clebsch-Gordan Coefficients | Mathematical Tool | Coupling coefficients for angular momentum; essential for performing equivariant tensor products. |
| SE(3)-Transformers | Model Architecture | Attention-based equivariant architectures that operate on point clouds, weighting equivariant messages from neighboring points with rotation-invariant attention. |

Title: Invariant vs Equivariant Model Paradigms

Application Protocol: Structure-Based Drug Design with Equivariant Diffusion

Equivariant models are revolutionizing generative chemistry through 3D-aware diffusion models.

Protocol: Generating 3D Molecular Conformations via Equivariant Diffusion

Objective: Generate novel, stable 3D molecular structures conditioned on a target binding pocket.

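Materials: Trained equivariant diffusion model with pocket conditioning (e.g., DiffSBDD or TargetDiff; EDM and GeoDiff as originally published are not conditioned on a protein pocket), protein structure (PDB format), Open Babel, molecular dynamics (MD) simulation software (e.g., GROMACS) for refinement.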

Procedure:

- Conditioning: Encode the protein binding pocket into an equivariant graph. Node features include amino acid type and secondary structure. This graph remains fixed.

- Reverse Diffusion Process:

  a. Start from a Gaussian noise cloud of atoms (`N` atoms, with `N` sampled from a prior).

  b. Use an E(3)-equivariant denoising network (e.g., EGNN) to predict the noise, or equivalently the "clean" coordinates, together with atom types at each denoising step `t`. The network is conditioned on the fixed protein graph.

  c. Iteratively remove the predicted noise to obtain progressively cleaner molecular structures.

- Sampling & Validity Filtering: Generate multiple samples, then filter the outputs using a combination of:

  a. Geometric Checks: bond length and angle sanity checks.

  b. Energetic Minimization: short MMFF94 force-field minimization using RDKit.

  c. Docking Rescoring: quick re-docking of the generated molecule into the pocket (using AutoDock Vina) to estimate binding affinity.
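The sketch below shows one way to implement the geometric sanity check in the filtering step. It flags atom pairs that sit implausibly close and atoms with no plausible bonding partner. It uses single-bond covalent radii (Cordero et al., 2008). The clash and bond tolerance factors, the function name, and the radii subset are assumptions for illustration, not values from a specific pipeline.

```python
import itertools
import math

# Single-bond covalent radii in Å (Cordero et al., 2008); extend as needed.
COVALENT_RADII = {"H": 0.31, "C": 0.76, "N": 0.71, "O": 0.66, "F": 0.57, "S": 1.05}


def geometry_issues(symbols, coords, clash_factor=0.75, bond_factor=1.25):
    """Flag pairs closer than clash_factor * (r_i + r_j) and atoms with no
    neighbour within bond_factor * (r_i + r_j). Both factors are heuristic
    assumptions chosen for illustration."""
    issues = []
    bonded = [False] * len(symbols)
    for i, j in itertools.combinations(range(len(symbols)), 2):
        ref = COVALENT_RADII[symbols[i]] + COVALENT_RADII[symbols[j]]
        d = math.dist(coords[i], coords[j])
        if d < clash_factor * ref:
            issues.append(f"clash: atoms {i}-{j} at {d:.2f} Å")
        if d <= bond_factor * ref:
            bonded[i] = bonded[j] = True
    issues.extend(f"isolated atom {k}" for k, ok in enumerate(bonded) if not ok)
    return issues


# Usage: a C-H pair at a normal distance, then two carbons squeezed to 0.5 Å.
print(geometry_issues(["C", "H"], [(0.0, 0.0, 0.0), (1.09, 0.0, 0.0)]))
print(geometry_issues(["C", "C"], [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0)]))
```

Angle checks and fragment-connectivity checks would follow the same pattern on top of the bonded pairs found here.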

Validation: The quality of generated molecules is assessed by metrics like Vina Score, QED (drug-likeness), and synthetic accessibility (SA Score). The 3D equivariance ensures generated poses are not biased by the global orientation of the input protein.

In the development of E(3)-equivariant Graph Neural Networks (GNNs) for molecular modeling, the foundational mathematical group E(3), the Euclidean group in three dimensions, is paramount. This group formally describes the set of all distance-preserving transformations (isometries) of 3D Euclidean space: rotations, translations, and reflections (improper rotations). For molecular systems, incorporating E(3) equivariance into a neural network architecture is more than an engineering convenience, because it builds in a symmetry that the target physical properties are known to obey. It ensures that predictions of molecular energy, forces, dipole moments, or other quantum chemical properties are inherently consistent regardless of the molecule's orientation or position in space. This eliminates the need for data augmentation over rotational poses and guarantees that the learned representation respects the fundamental symmetries of the physical world.

Quantitative Framework of E(3) Transformations

The E(3) group can be described as the semi-direct product of the translation group T(3) and the orthogonal group O(3): E(3) = T(3) ⋊ O(3). O(3) itself comprises the subgroup of rotations, SO(3) (Special Orthogonal Group, determinant +1), and reflections (determinant -1). The action of an element (R, t) ∈ E(3) on a point x ∈ ℝ³ is: x → Rx + t, where R ∈ O(3) is a 3x3 orthogonal matrix (RᵀR = I), and t ∈ ℝ³ is a translation vector.
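Composing two group elements shows where the semi-direct structure comes from. Applying (R₂, t₂) to a point and then (R₁, t₁) gives the following result, in which the rotation of the first element acts on the translation of the second:

```latex
(R_1, t_1)\big[(R_2, t_2)\,x\big] = R_1 (R_2 x + t_2) + t_1 = (R_1 R_2)\,x + (R_1 t_2 + t_1),
\\[4pt]
(R_1, t_1)\cdot(R_2, t_2) = (R_1 R_2,\; R_1 t_2 + t_1), \qquad
(R, t)^{-1} = (R^{\top},\; -R^{\top} t).
```

In a direct product the translation part would simply be t₁ + t₂. The R₁t₂ term is what makes E(3) a semi-direct product.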

Table 1: Core Subgroups of E(3) and Their Impact on Molecular Descriptors

| Subgroup | Notation | Determinant | Transform (on coordinate x) | Invariant Molecular Properties | Equivariant Molecular Properties |
|---|---|---|---|---|---|
| Translations | T(3) | N/A | x + t | Interatomic distances, angles, dihedrals | Position; dipole moment vector of a charged species* |
| Rotations | SO(3) | +1 | Rx | Interatomic distances, angles, signed dihedrals, scalar energy | Forces, dipole moment, velocity, angular momentum |
| Full Orthogonal | O(3) | ±1 | Rx | SO(3) invariants of even parity (distances, angles, energy); chirality-sensitive pseudoscalars (e.g., signed dihedrals, optical rotation) flip sign under reflection | Pseudovectors (e.g., magnetic moment), which acquire an extra factor det(R) under reflection |
| Euclidean Group | E(3) | N/A | Rx + t | Distances, bond angles, unsigned torsions | Forces, positions (relative to frame) |

*For a neutral molecule the dipole moment is invariant under global translations. Only for a charged species (total charge Q ≠ 0) does it shift by Qt under a translation t, so its value depends on the chosen origin.
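The footnote can be made explicit by treating the molecule as point charges q_i at positions r_i, which is a simplifying assumption. Applying a general E(3) element (R, t) gives:

```latex
\mu = \sum_i q_i\, r_i, \qquad
r_i \mapsto R\,r_i + t
\;\Rightarrow\;
\mu' = \sum_i q_i\,(R\,r_i + t) = R\,\mu + Q\,t, \qquad Q = \sum_i q_i .
```

For a neutral molecule (Q = 0), this reduces to μ' = Rμ. The dipole then rotates with the molecule and ignores translations. For an ion, the Qt term makes the dipole depend on the origin.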

Key Experimental Protocols for Evaluating E(3)-Equivariant GNNs

Protocol 3.1: Benchmarking Equivariance Error

Objective: Quantitatively verify that a model's predictions obey the theoretical equivariance constraints.

Materials: Trained E(3)-equivariant GNN, validation molecular dataset (e.g., QM9, MD17), computational environment (PyTorch, JAX).

Procedure:

- Sample Batch: Select a batch of molecular graphs with associated 3D coordinates `X` and target properties `Y` (e.g., energy `E`, forces `F`).

- Apply Random E(3) Transformation: Generate a random orthogonal matrix `R` and a random translation vector `t`. Because |det(R)| = 1, this covers both proper rotations and reflections. Apply them to the coordinates: `X' = RX + t`.

- Run Inference: Pass both `X` and `X'` through the model to obtain predictions `Ŷ` and `Ŷ'`.

- Compute Equivariance Error:

  - For scalar outputs (e.g., energy): Invariance Error = `MSE(Ŷ, Ŷ')`, which should theoretically be zero.

  - For vector outputs (e.g., forces): rotate the forces predicted for the original coordinates, `F_transformed = RŶ_F`, then compute Equivariance Error = `MSE(F_transformed, Ŷ'_F)`. The translation `t` does not enter this comparison, because forces are unchanged by a global shift.

- Report: Average the errors across the validation set. State-of-the-art models achieve errors on the order of 10⁻¹² to 10⁻⁷ in atomic units. These values reflect floating-point precision limits rather than genuine symmetry violations.
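The protocol can be run end to end on a toy model. In this sketch, which is an illustration rather than a reference implementation, `harmonic_pair_model` is a hypothetical stand-in. Its energy is a sum of harmonic pair terms and its forces are the analytic negative gradient, so both errors should vanish up to round-off. The test transformation deliberately includes a reflection (det R = -1) and a translation.

```python
import math


def harmonic_pair_model(pos, k=1.0, d0=1.5):
    """Hypothetical stand-in model with a known answer.

    Energy U = sum_{i<j} 0.5 * k * (d_ij - d0)^2 (invariant by construction);
    forces F_i = -dU/dr_i, computed analytically (equivariant by construction).
    """
    n = len(pos)
    energy = 0.0
    forces = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            diff = [pos[i][a] - pos[j][a] for a in range(3)]
            d = math.sqrt(sum(c * c for c in diff))
            energy += 0.5 * k * (d - d0) ** 2
            coef = -k * (d - d0) / d            # F_i = coef * (r_i - r_j)
            for a in range(3):
                forces[i][a] += coef * diff[a]
                forces[j][a] -= coef * diff[a]  # equal and opposite
    return energy, forces


def matvec(R, v):
    return [sum(R[r][c] * v[c] for c in range(3)) for r in range(3)]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def equivariance_errors(model, pos, R, t):
    """Energy invariance error and force equivariance error under X' = RX + t."""
    energy, forces = model(pos)
    pos_t = [[x + s for x, s in zip(matvec(R, p), t)] for p in pos]
    energy_t, forces_t = model(pos_t)
    invariance_error = (energy - energy_t) ** 2
    rotated = [c for f in forces for c in matvec(R, f)]   # R * F(X)
    predicted = [c for f in forces_t for c in f]           # F(X')
    return invariance_error, mse(rotated, predicted)


theta = 0.7
R = [[math.cos(theta), -math.sin(theta), 0.0],
     [math.sin(theta), math.cos(theta), 0.0],
     [0.0, 0.0, -1.0]]  # rotation about z combined with the reflection z -> -z
t = [1.5, -2.0, 0.3]
pos = [[0.0, 0.0, 0.0], [1.2, 0.1, 0.0], [0.3, 1.4, 0.2], [-0.5, 0.4, 1.1]]
print(equivariance_errors(harmonic_pair_model, pos, R, t))
```

With a real network, `harmonic_pair_model` would be replaced by the trained model's forward pass, and the errors would be averaged over the validation batches.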

Protocol 3.2: Training an E(3)-Equivariant GNN on Molecular Property Prediction (e.g., QM9)

Objective: Train a model to predict quantum chemical properties from 3D molecular structure.

Materials:

- Dataset: QM9 (~130k small organic molecules). Target: isotropic polarizability `α` (rotation-invariant) or dipole moment `μ` (a rotation-equivariant vector, although QM9 reports only its magnitude).

- Software Framework: `e3nn`, `NequIP`, `SE(3)-Transformers`, `PyTorch Geometric`.

- Hardware: GPU (NVIDIA V100/A100) with ≥16 GB VRAM.

Procedure:

- Data Preparation: Load QM9. Partition the data into train/val/test sets (80%/10%/10%) and standardize the targets. QM9 provides only the dipole magnitude |μ|. For this target, predict a vector through an l=1 readout and train on its norm.

- Model Configuration: Implement an architecture like NequIP or a Tensor Field Network.

  - Embedding: Use radial basis functions (e.g., Bessel functions) for interatomic distances.

  - Layer Structure: Design layers that convolve over the graph using spherical harmonic filters (`Y^l_m`) to build equivariant features of type `(l, p)` (degree `l`, parity `p`).

  - Readout: For an invariant target (`α`), contract the equivariant features to scalars (l=0). For an equivariant target (`μ`, l=1), output a learned linear combination of l=1 features.

- Training Loop: Use Mean Squared Error loss. Optimize with Adam (lr = 10⁻³, with learning-rate decay). Employ training tricks such as learning-rate warm-up and normalization.

- Evaluation: Report test-set MAE against chemical-accuracy benchmarks (e.g., ~1 kcal/mol for energies, ~0.1 D for dipole moments).
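The radial embedding described under Embedding takes only a few lines. This sketch assumes the sine-based Bessel form used in DimeNet, multiplied by a cosine cutoff for simplicity. NequIP, for example, pairs a Bessel basis with a polynomial envelope instead. The cutoff and the number of basis functions are illustrative defaults.

```python
import math


def bessel_basis(d, cutoff=5.0, num_basis=8):
    """Radial features e_n(d) = sqrt(2/c) * sin(n*pi*d/c) / d for n = 1..num_basis,
    each multiplied by a cosine cutoff 0.5 * (cos(pi*d/c) + 1), so the features
    and their first derivative go smoothly to zero at d = c.
    """
    if d <= 0.0:
        raise ValueError("distance must be positive")
    if d >= cutoff:
        return [0.0] * num_basis
    envelope = 0.5 * (math.cos(math.pi * d / cutoff) + 1.0)
    prefactor = math.sqrt(2.0 / cutoff)
    return [
        prefactor * math.sin(n * math.pi * d / cutoff) / d * envelope
        for n in range(1, num_basis + 1)
    ]


# A typical C-H bond length (~1.09 Å) and a distance just inside the 5 Å cutoff.
print([round(v, 4) for v in bessel_basis(1.09)])
print(max(abs(v) for v in bessel_basis(4.999)))  # features fade out near the cutoff
```

The smooth decay matters because atoms crossing the cutoff would otherwise cause discontinuous jumps in energies and forces.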

Visualizing E(3)-Equivariant GNN Architecture and Data Flow

Diagram 1: E(3)-Equivariant GNN Architecture for Molecules

Diagram 2: Principle of E(3) Equivariance in Model Prediction

The Scientist's Toolkit: Key Reagents & Solutions for E(3)-GNN Research

Table 2: Essential Research Toolkit for E(3)-Equivariant Molecular Modeling

| Item | Category | Function & Purpose | Example/Note |
|---|---|---|---|
| QM9 / MD17 Datasets | Data | Benchmark datasets for 3D molecular property prediction. Provides ground-truth quantum chemical calculations. | QM9: 13 properties for 134k stable molecules. MD17: Molecular dynamics trajectories of small molecules. |
| e3nn Library | Software | A core PyTorch framework for building and training E(3)-equivariant neural networks. Implements spherical harmonics and irreducible representations. | Essential for custom architecture development. |
| NequIP / Allegro | Software | State-of-the-art, high-performance E(3)-equivariant interatomic potential models. Ready for training on energies and forces. | Known for exceptional data efficiency and accuracy. |
| PyTorch Geometric | Software | Library for deep learning on graphs. Often used in conjunction with e3nn for molecular graph handling. | Simplifies graph data structures and batching. |
| JAX / Haiku | Software | Flexible alternative framework for developing equivariant models, enabling advanced autodiff and just-in-time compilation. | For example, e3nn-jax provides a JAX version of the e3nn library. |
| Spherical Harmonics (Y^l_m) | Mathematical Tool | Basis functions for representing transformations under rotation. The building blocks of equivariant filters and features. | Degree l and order m define transformation behavior. |
| Tensor Product | Mathematical Operation | The equivariant combination of two feature tensors, yielding a new tensor with defined transformation properties. | Core operation within message-passing layers of E(3)-GNNs. |
| Irreducible Representation (irrep) | Mathematical Concept | The "data type" for equivariant features, labeled by degree l and parity p. Networks process lists of irreps. | Ensures features transform predictably under group actions. |
| Radial Basis Functions | Preprocessing | Encode interatomic distances into a continuous, differentiable representation for the network (e.g., Bessel functions). | Critical for incorporating distance information in a smooth way. |

Within the broader thesis on E(3)-equivariant graph neural networks for molecular modeling, the critical limitation of standard Graph Neural Networks (GNNs) is their inherent inability to encode and process 3D geometric information. Standard GNNs operate solely on the graph's combinatorial structure—nodes (atoms) and edges (bonds)—and treat molecules as topological entities. This ignores the physical reality that molecular properties are dictated by 3D conformations, bond angles, torsion angles, and non-bonded spatial interactions. This omission has historically constrained predictive accuracy in key drug discovery tasks.

Quantitative Comparison: Standard vs. Geometric-Aware Models

Table 1: Performance on Molecular Property Prediction Benchmarks (QM9, MD17)

| Model Class | Representation | QM9 μ (Dipole) MAE (mean ± std) | MD17 Energy MAE | Param. Count | E(3)-Equivariant? |
|---|---|---|---|---|---|
| Standard GNN (GCN) | 2D Graph | 0.488 ± 0.024 Debye | 83.2 kcal/mol | ~500k | No |
| Standard GNN (GIN) | 2D Graph | 0.362 ± 0.018 Debye | 67.1 kcal/mol | ~800k | No |
| GNN with Distances (SchNet) | 3D Coordinates | 0.033 ± 0.001 Debye | 0.97 kcal/mol | ~3.1M | Yes (Translation/Rotation Invariant) |
| E(3)-Equivariant (EGNN) | 3D Coordinates | 0.029 ± 0.001 Debye | 0.43 kcal/mol | ~1.7M | Yes (Full Equivariance) |
| E(3)-Equivariant (SE(3)-Transformer) | 3D Coordinates | 0.031 ± 0.002 Debye | 0.35 kcal/mol | ~4.5M | Yes (Full Equivariance) |

Data synthesized from recent literature (2023-2024). QM9 target μ (dipole moment) shown. MD17 Energy Mean Absolute Error (MAE) for Aspirin molecule.

Table 2: Impact on Drug Discovery-Relevant Tasks

| Task | Metric | Standard GNN (2D) | 3D/Equivariant GNN | Performance Gap |
|---|---|---|---|---|
| Protein-Ligand Affinity (PDBBind) | RMSE (pK units) | 2.15 | 1.48 | 31% improvement |
| Docking Pose Prediction | Success Rate (RMSD < 2 Å) | 41% | 73% | 32 percentage points |
| Conformational Energy Ranking | AUC-ROC | 0.76 | 0.92 | 0.16 AUC increase |

Experimental Protocols

Protocol 1: Training a Standard 2D GNN for Molecular Property Prediction

Objective: To benchmark a standard GNN on a quantum property prediction task, highlighting its geometric ignorance.

- Data Preparation: Use the QM9 dataset. For a "2D-only" setup, strip all 3D coordinate information. Represent each molecule as a graph G = (V, E). The nodes (atoms) carry one-hot atom-type features, and the edges (bonds) carry bond-type features (single, double, etc.).

- Model Architecture: Implement a 5-layer Graph Isomorphism Network (GIN) with hidden dimension 128. Use global mean pooling for graph-level readout.

- Training: Train for 300 epochs using Adam optimizer (lr=1e-3), Mean Absolute Error (MAE) loss on target property (e.g., dipole moment). Use a standard 80/10/10 random split.

- Evaluation: Report MAE on the test set and compare it to models that had access to 3D data (Table 1). Analyze failure cases. Stereoisomers or conformers that share the same 2D graph necessarily receive identical predictions, even when their true dipole moments differ.
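The sketch below makes the "geometric ignorance" of Protocol 1 concrete by implementing one GIN update and a mean-pooling readout in plain Python. An element-wise ReLU stands in for GIN's MLP, which is a simplifying assumption. The functions never receive coordinates, so any two conformers or stereoisomers with the same bond graph produce identical outputs by construction.

```python
def gin_layer(h, adj, eps=0.0):
    """One GIN update: h_v' = MLP((1 + eps) * h_v + sum_{u in N(v)} h_u).

    h:   node feature vectors (e.g., one-hot atom types)
    adj: adjacency lists from the 2D bond graph; no coordinates enter anywhere.
    The MLP is replaced by an element-wise ReLU (simplifying assumption).
    """
    out = []
    for v, h_v in enumerate(h):
        agg = [(1.0 + eps) * x for x in h_v]
        for u in adj[v]:
            agg = [a + b for a, b in zip(agg, h[u])]
        out.append([max(0.0, a) for a in agg])
    return out


def mean_pool(h):
    """Graph-level readout: average of the node features."""
    return [sum(col) / len(h) for col in zip(*h)]


# Ethanol heavy atoms C-C-O with one-hot features [is_C, is_O].
h = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
adj = [[1], [0, 2], [1]]
print(mean_pool(gin_layer(h, adj)))
print(mean_pool(gin_layer(gin_layer(h, adj), adj)))
```

Sum aggregation with mean pooling makes the output invariant to atom ordering. Invariance to atom ordering is the only symmetry this 2D model respects.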

Protocol 2: Demonstrating the Need for 3D Geometry via a Controlled Experiment

Objective: To empirically prove that 3D spatial information is irreplaceable for specific tasks.

- Dataset Creation: Generate two mini-datasets from PubChem:

- Set A: Pairs of enantiomers (mirror-image molecules). They have identical 2D connectivity graphs.

- Set B: The same molecule (e.g., butane) in different conformations (staggered vs. eclipsed).

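- Task: Train a standard 2D GNN and a 3D-aware model to predict a chiral property (optical rotation) for Set A and conformational energy for Set B. For Set A, the 3D model must be sensitive to reflections. E(3)-invariant scalar outputs built only from distances and angles, including those of EGNN (which is equivariant to reflections), are identical for both enantiomers. Use an SE(3)-equivariant model, or an O(3)-equivariant model with a parity-odd (pseudoscalar) output.

- Expected Result: The 2D GNN will fail on Set A (it cannot distinguish enantiomers) and on Set B (it cannot distinguish conformers). A distance-based 3D model such as EGNN will succeed on Set B but not on Set A. A reflection-sensitive 3D model can succeed on both, providing direct evidence of the standard GNN's fundamental limitation.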
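The controlled experiment can be previewed with plain geometry. The sketch below uses toy coordinates and hypothetical helper names. A mirror image leaves every interatomic distance unchanged while flipping the sign of a signed volume. Two conformers with identical bonding have different terminal-atom distances. A model whose only inputs are distances can therefore separate Set B's conformers but not Set A's enantiomers.

```python
import itertools
import math


def pairwise_distances(coords):
    """Sorted interatomic distances: all that a distance-only model can see."""
    return sorted(math.dist(p, q) for p, q in itertools.combinations(coords, 2))


def signed_volume(center, a, b, c):
    """Triple product (a - center) . ((b - center) x (c - center)).

    Invariant under rotations and translations, but flips sign under a
    reflection, so it can tell a stereocentre from its mirror image.
    """
    u, v, w = ([p[k] - center[k] for k in range(3)] for p in (a, b, c))
    cross = (v[1] * w[2] - v[2] * w[1], v[2] * w[0] - v[0] * w[2], v[0] * w[1] - v[1] * w[0])
    return sum(u[k] * cross[k] for k in range(3))


def mirror(coords):
    """Reflect through the xy-plane (z -> -z), giving the enantiomer."""
    return [(x, y, -z) for x, y, z in coords]


def chain(phi):
    """Toy 4-atom chain (not real butane geometry): dihedral phi about the x-axis."""
    return [(-0.5, 1.0, 0.0), (0.0, 0.0, 0.0), (1.54, 0.0, 0.0),
            (2.04, math.cos(phi), math.sin(phi))]


# Set A analogue: a centre (atom 0) with four distinct substituents, and its mirror image.
left = [(0.0, 0.0, 0.0), (1.0, 1.0, 1.0), (1.1, -1.0, -1.0), (-1.0, 1.2, -1.0), (-1.2, -1.1, 1.3)]
right = mirror(left)
print(pairwise_distances(left) == pairwise_distances(right))  # identical distances
print(signed_volume(*left[:4]), signed_volume(*right[:4]))    # opposite signs

# Set B analogue: same bonded topology, syn (phi = 0) vs anti (phi = pi).
syn, anti = chain(0.0), chain(math.pi)
print(math.dist(syn[0], syn[3]), math.dist(anti[0], anti[3]))
```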

Visualizations

Title: Workflow Comparison: Standard vs Equivariant GNNs

Title: E(3)-Equivariance in Molecular Representations

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Tools for E(3)-Equivariant Molecular Research

| Tool/Reagent | Provider / Library | Function in Research |
|---|---|---|
| PyTorch Geometric (PyG) | PyG Team | Foundational library for graph neural networks, includes 3D-aware and equivariant layers. |
| e3nn | e3nn Team | Specialized library for building E(3)-equivariant neural networks using irreducible representations. |
| TorchMD-NET | Thölke & De Fabritiis | Framework for equivariant transformers and neural networks for molecular dynamics. |
| QM9 Dataset | MoleculeNet | Standard benchmark containing 130k small organic molecules with 12+ quantum mechanical properties. |
| PDBBind Dataset | PDBBind-CN | Curated dataset of protein-ligand complexes with binding affinity data for binding pose/prediction tasks. |
| RDKit | Open Source | Cheminformatics toolkit for molecule manipulation, conformer generation, and feature calculation. |
| OpenMM | Stanford/Vijay Pande | High-performance toolkit for molecular simulation, used for generating classical MD conformer datasets (MD17 itself was generated with ab initio MD). |
| EquiBind (Model) | Stärk et al. | Pre-trained E(3)-equivariant model for fast blind molecular docking. |

| EquiBind (Model) | Stark et al. | Pre-trained E(3)-equivariant model for fast blind molecular docking. |

Within the thesis on E(3)-equivariant graph neural networks (GNNs) for molecular research, the mathematical principles of groups, representations, and tensor fields form the foundational framework. The goal is to develop models that inherently respect the symmetries of 3D Euclidean space—translations, rotations, and reflections (the group E(3)). This ensures that predictions for molecular properties (e.g., energy, forces) are invariant or equivariant to the orientation and position of the input molecule, leading to data-efficient, physically meaningful, and generalizable models.

Core Principles & Their Application to E(3)-Equivariant GNNs

Groups and Symmetry

A group is a set equipped with a binary operation satisfying closure, associativity, identity, and invertibility. In molecular systems, the relevant group is the Euclidean group E(3), which consists of all translations, rotations, and reflections in 3D space.

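- Invariance: A function `f` is invariant if `f(T·X) = f(X)` for all transformations `T` in E(3). Invariance is crucial for scalar properties like internal energy.

- Equivariance: A function `Φ` is equivariant if `Φ(T·X) = T'·Φ(X)`, where `T'` is the action of the same group element on the output space. For example, `T'` is the rotation part `R` for force vectors and the identity for invariant scalars. Equivariance is crucial for vector/tensor properties like forces, which rotate with the molecule.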
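An EGNN-style layer (Satorras et al., 2021) is one of the simplest maps that satisfies the equivariance definition above. In this sketch the learned MLPs are replaced by fixed toy functions. This assumption does not affect the symmetry argument: messages depend only on invariant squared distances, and coordinates move along relative position vectors. As a result, applying an orthogonal transform plus a translation to the input applies the same transform to the output coordinates and leaves the features unchanged.

```python
import math


def egnn_layer(h, x, edges):
    """One E(n)-equivariant update in the style of EGNN (Satorras et al., 2021).

    h:     one scalar feature per node (kept 1-D for brevity)
    x:     node coordinates, list of [x, y, z]
    edges: directed (i, j) pairs
    The learned MLPs phi_e, phi_x, phi_h are replaced by fixed toy functions;
    equivariance holds for any choice because they only see invariants.
    """
    n = len(h)
    m_agg = [0.0] * n
    shift = [[0.0, 0.0, 0.0] for _ in range(n)]
    for i, j in edges:
        diff = [x[i][k] - x[j][k] for k in range(3)]
        d2 = sum(c * c for c in diff)                    # invariant ||x_i - x_j||^2
        m_ij = math.tanh(h[i] + 0.5 * h[j] - 0.1 * d2)   # toy phi_e
        w_ij = 0.1 * m_ij                                # toy phi_x (scalar weight)
        m_agg[i] += m_ij
        for k in range(3):
            shift[i][k] += w_ij * diff[k]                # sum_j (x_i - x_j) * phi_x(m_ij)
    h_new = [h[i] + math.tanh(m_agg[i]) for i in range(n)]  # toy phi_h
    x_new = [[x[i][k] + shift[i][k] for k in range(3)] for i in range(n)]
    return h_new, x_new


def transform(R, t, coords):
    """Apply x -> R x + t to every coordinate (R any 3x3 orthogonal matrix)."""
    return [[sum(R[r][col] * p[col] for col in range(3)) + t[r] for r in range(3)] for p in coords]


# Usage: an improper rotation (det = -1) plus a translation.
c0, s0 = math.cos(0.4), math.sin(0.4)
R = [[c0, -s0, 0.0], [s0, c0, 0.0], [0.0, 0.0, -1.0]]
t = [0.3, -1.0, 2.0]
h = [0.1, -0.2, 0.5]
x = [[0.0, 0.0, 0.0], [1.0, 0.2, 0.0], [0.3, 1.1, 0.7]]
edges = [(i, j) for i in range(3) for j in range(3) if i != j]
h1, x1 = egnn_layer(h, x, edges)
h2, x2 = egnn_layer(h, transform(R, t, x), edges)
print(max(abs(a - b) for p, q in zip(transform(R, t, x1), x2) for a, b in zip(p, q)))
```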

Representations

A representation D of a group G is a map from group elements to invertible matrices that respects the group structure: D(g1 ∘ g2) = D(g1)D(g2). It describes how geometric objects (scalars, vectors, spherical harmonics) transform under group actions.

- Irreducible Representations (Irreps): The building blocks of representations. For the rotation group SO(3), irreps are labeled by an integer degree `l ≥ 0`, have dimension `2l+1`, and transform via the Wigner-D matrices: `l=0` (scalar), `l=1` (3D vector), `l=2` (traceless symmetric rank-2 tensor), etc.

- Application: Features in an E(3)-equivariant GNN are not single numbers but collections of data organized into irreducible representations of SO(3) or O(3). Network layers are built from operations that strictly respect the transformation laws of these irreps: equivariant linear maps, tensor products, and gated nonlinearities.
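The claim that l=2 features behave like rank-2 tensors can be checked numerically. The sketch below is an illustration, not the Wigner-D construction that libraries such as e3nn use. It builds the traceless symmetric tensor from a vector and verifies that building it from a rotated vector gives the same result as rotating the tensor with R T Rᵀ.

```python
import math


def l2_tensor(r):
    """Traceless symmetric tensor T = r r^T - (|r|^2 / 3) I built from a vector r.

    Symmetric 3x3 matrices have 6 free entries; removing the trace leaves
    2l + 1 = 5, the dimension of the l = 2 irrep. T transforms as R T R^T.
    """
    n2 = sum(c * c for c in r)
    return [[r[a] * r[b] - (n2 / 3.0 if a == b else 0.0) for b in range(3)] for a in range(3)]


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def transpose(A):
    return [list(row) for row in zip(*A)]


def rot_x(a):
    return [[1.0, 0.0, 0.0], [0.0, math.cos(a), -math.sin(a)], [0.0, math.sin(a), math.cos(a)]]


def rot_z(a):
    return [[math.cos(a), -math.sin(a), 0.0], [math.sin(a), math.cos(a), 0.0], [0.0, 0.0, 1.0]]


R = matmul(rot_z(1.1), rot_x(0.3))
r = [0.4, -1.2, 0.7]
Rr = [sum(R[i][k] * r[k] for k in range(3)) for i in range(3)]
lhs = l2_tensor(Rr)                                   # tensor built from the rotated vector
rhs = matmul(matmul(R, l2_tensor(r)), transpose(R))   # l = 2 action applied to the tensor
print(max(abs(lhs[i][j] - rhs[i][j]) for i in range(3) for j in range(3)))
```

The printed deviation should be at round-off level. Because T is built from two copies of r, it is also unchanged by the reflection r → -r, which matches the even parity (-1)^l of l=2.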

Tensor Fields

A tensor field assigns a tensor (a geometric object that transforms in a specific way under coordinate changes) to each point in space. In molecular graphs, node features (e.g., atomic type) are invariant scalar fields, while edge features (e.g., direction vectors) are equivariant tensor fields.

- Mathematical Link: The transformation rule for a tensor field under a group action is dictated by its representation type. E(3)-equivariant networks learn and update equivariant tensor fields over the graph.

Table 1: Correspondence Between Mathematical Objects and GNN Components

| Mathematical Concept | Role in E(3)-Equivariant GNN | Molecular Example |
|---|---|---|
| Group E(3) | Defines the fundamental symmetry to be preserved. | Rotation/translation of the entire molecular geometry. |
| Irreps of SO(3) | Data typology for features. | l=0: Atomic charge, scalar energy. l=1: Dipole moment, force vector. l=2: Quadrupole moment. |
| Group Action | Defines how input transformations affect outputs. | Rotating input coordinates rotates predicted force vectors equivariantly. |
| Tensor Field | Features on the graph. | Node features: invariant scalars (atomic number). Edge features: equivariant vectors (relative position). |

Application Notes & Protocols

Protocol: Implementing a Basic E(3)-Equivariant Graph Convolution Layer

This protocol outlines the steps to construct a core equivariant layer, such as a Tensor Field Network (TFN) or SE(3)-Transformer layer.

1. Input Representation:

- Represent each node `i` with a feature vector consisting of concatenated irreducible representations: `h_i = ⊕_l h_i^l`, where `h_i^l ∈ R^(2l+1)`.

- Represent each edge `ij` with the direction vector `r_ij = r_j - r_i` (transforms as `l=1`).

2. Compute Equivariant Interactions:

- Embed the edge distance `||r_ij||` (invariant) using a radial MLP to get a scalar filter `R(||r_ij||)`.

- For each output irrep `l_out` and input irrep `l_in`, compute the Clebsch-Gordan (CG) tensor product between `h_j^(l_in)` and the spherical harmonic projection `Y^(l_f)(r̂_ij)`.

  - The CG product, `⊗_CG`, is a bilinear operation that couples two irreps to produce features in a new irrep, respecting SO(3) symmetry. `l_f` is the "filter" irrep. A path `l_in ⊗ l_f → l_out` is allowed only when `|l_in - l_f| ≤ l_out ≤ l_in + l_f`.

- The operation is weighted by the radial filter and a learned channel-mixing weight.

3. Aggregation and Update:

- Aggregate messages from neighbors `j ∈ N(i)` via summation.

- Add the result to the original node features, potentially after a linear pass (self-interaction), to update the node features.

- Apply an equivariant nonlinearity (e.g., a gated nonlinearity based on invariant scalar features) to the updated equivariant features.

4. Output:

- The layer outputs updated equivariant node features `h_i'` for all `i`, which transform according to their specified irreps under E(3) actions on the input coordinates.
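The steps above can be sketched for the smallest non-trivial case, features with l=0 and l=1 only. Here the Clebsch-Gordan paths reduce (up to normalization) to familiar Cartesian operations: scalar multiplication, the dot product, and the cross product. All path weights and the radial filter are fixed toy values standing in for learned parameters. The gate also reuses each node's own scalar instead of dedicated gate channels. Both choices are assumptions made for brevity.

```python
import math


def tfn_layer(scalars, vectors, pos, edges, cutoff=5.0):
    """Minimal TFN-style message passing with l = 0 and l = 1 features.

    Clebsch-Gordan paths in Cartesian form (normalisation constants dropped):
      0 x 0 -> 0 : s_j               1 x 0 -> 1 : v_j
      0 x 1 -> 1 : s_j * u_ij        1 x 1 -> 0 : v_j . u_ij
      1 x 1 -> 1 : v_j x u_ij        (u_ij = r_ij / |r_ij| plays the role of Y^1)
    Path weights and the radial filter are fixed toy values (assumptions);
    a trained model learns them with a radial MLP.
    """
    new_s = list(scalars)                  # self-interaction kept as identity
    new_v = [list(v) for v in vectors]
    for i, j in edges:
        r = [pos[j][k] - pos[i][k] for k in range(3)]   # r_ij = r_j - r_i
        d = math.sqrt(sum(c * c for c in r))
        if d == 0.0 or d > cutoff:
            continue
        u = [c / d for c in r]
        radial = math.exp(-d * d)          # toy radial filter R(|r_ij|)
        s_j, v_j = scalars[j], vectors[j]
        dot = sum(v_j[k] * u[k] for k in range(3))
        cross = [v_j[1] * u[2] - v_j[2] * u[1],
                 v_j[2] * u[0] - v_j[0] * u[2],
                 v_j[0] * u[1] - v_j[1] * u[0]]
        new_s[i] += radial * (0.5 * s_j + 0.8 * dot)   # summed over neighbours
        for k in range(3):
            new_v[i][k] += radial * (0.3 * v_j[k] + 0.7 * s_j * u[k] + 0.2 * cross[k])
    # Gated nonlinearity: tanh on scalars; each vector is rescaled by a sigmoid
    # of its node's invariant scalar, so its direction is untouched.
    gates = [1.0 / (1.0 + math.exp(-s)) for s in new_s]
    out_s = [math.tanh(s) for s in new_s]
    out_v = [[g * c for c in v] for g, v in zip(gates, new_v)]
    return out_s, out_v


pos = [[0.0, 0.0, 0.0], [1.0, 0.3, -0.2], [0.2, 1.1, 0.5]]
scal = [0.2, -0.4, 0.9]
vecs = [[0.1, 0.0, 0.3], [-0.2, 0.5, 0.1], [0.4, -0.1, 0.2]]
edges = [(i, j) for i in range(3) for j in range(3) if i != j]
print(tfn_layer(scal, vecs, pos, edges))
```

The cross-product path maps two vectors to a pseudovector, so this sketch is equivariant under proper rotations only. Libraries that track parity, such as e3nn with its `1o`/`1e` irreps, handle reflections by labeling such outputs separately.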

Diagram 1: E(3)-Equivariant Layer Workflow

Protocol: Training an E(3)-Equivariant GNN for Molecular Property Prediction

This protocol details the experimental setup for training a model like NequIP or SEGNN on a quantum chemistry dataset (e.g., QM9, MD17).

1. Data Preparation:

- Dataset: Use a standard benchmark (e.g., QM9 ~133k molecules, MD17 for molecular dynamics).

- Splitting: Perform a random 80%/10%/10% train/validation/test split at the molecular level. For MD17, use predefined train/test trajectories.

- Targets: Define invariant (e.g., energy U) and/or equivariant (e.g., forces F = -∇U) targets.

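- Standardization: Standardize targets using training-set statistics (subtract the mean, divide by the standard deviation). For forces, apply only a scale factor, because subtracting a mean force vector would break rotational equivariance.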

2. Model Configuration:

- Architecture: Implement a network with multiple E(3)-equivariant layers (e.g., 4-6).

- Irrep Channels: Define the sequence of feature irreps per layer. For example, use `[(l=0, ch=32), (l=1, ch=8)]` in hidden layers (written `32x0e + 8x1o` in e3nn notation) and output `(l=0, ch=1)` for energy.

- Radial Cutoff: Set a spatial cutoff (e.g., 5.0 Å) for graph construction.

- Radial Network: Use a multi-layer perceptron (e.g., 2 layers, SiLU activation) for the radial function `R(||r_ij||)`.

3. Training Loop:

- Loss Function: Use a composite loss. For energy and forces: `L = λ_U * MSE(U_pred, U_true) + λ_F * MSE(F_pred, F_true)`, with `λ_F >> λ_U` (e.g., 100:1).

- Optimizer: Use the AdamW optimizer with an initial learning rate of `1e-3` and a cosine annealing scheduler.

- Batch Training: Use small batch sizes (e.g., 4-32) due to memory constraints from 3D data.

- Validation: Monitor loss on the validation set. Employ early stopping based on validation loss plateau.

4. Evaluation:

- Metrics: Report Mean Absolute Error (MAE) on the test set in physical units, after undoing the standardization: meV or kcal/mol for energies, and meV/Å or kcal/mol/Å for forces.

- Equivariance Error: Quantitatively test equivariance by rotating input coordinates and checking transformation of outputs.
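The composite loss from the Training Loop reduces to a few lines of arithmetic. This minimal sketch in plain Python assumes the targets are already standardized. The function name is illustrative, and the default 1:100 weighting follows the example ratio given for `λ_U : λ_F`.

```python
def energy_force_loss(u_pred, u_true, f_pred, f_true, lambda_u=1.0, lambda_f=100.0):
    """Composite loss L = lambda_U * MSE(U) + lambda_F * MSE(F).

    u_pred, u_true: per-structure energies (floats)
    f_pred, f_true: per-atom force vectors (fx, fy, fz)
    The force MSE runs over every Cartesian component. Rotations preserve
    Euclidean norms, so it is unchanged if both force sets are rotated.
    """
    mse_u = sum((p - t) ** 2 for p, t in zip(u_pred, u_true)) / len(u_true)
    comps = [p - t for fp, ft in zip(f_pred, f_true) for p, t in zip(fp, ft)]
    mse_f = sum(c * c for c in comps) / len(comps)
    return lambda_u * mse_u + lambda_f * mse_f


# Toy batch: two energies and two atoms' forces (standardized units).
loss = energy_force_loss(
    u_pred=[1.0, 2.0], u_true=[1.5, 2.0],
    f_pred=[(0.1, 0.0, 0.0), (0.0, -0.2, 0.0)],
    f_true=[(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)],
)
print(loss)
```

Even with small force residuals, the large `lambda_f` lets the force term dominate here. This weighting is the intended effect, since forces carry 3N labels per structure and drive MD stability.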

Diagram 2: Molecular GNN Training & Evaluation Pipeline

The Scientist's Toolkit: Key Research Reagents & Materials

Table 2: Essential Toolkit for E(3)-Equivariant Molecular GNN Research

| Item | Function & Explanation |
|---|---|
| Quantum Chemistry Datasets (QM9, ANI, OC20, MD17) | High-quality labeled data for training and benchmarking. Provide 3D geometries with target energies and forces computed via Density Functional Theory (DFT) or ab initio methods. |
| Deep Learning Framework (PyTorch, JAX) | Provides automatic differentiation, GPU acceleration, and flexible neural network modules. Essential for implementing custom CG product operations. |
| Equivariant NN Library (e3nn, e3nn-jax, SE(3)-Transformers) | Pre-built, optimized implementations of irreducible representations, spherical harmonics, Clebsch-Gordan coefficients, and equivariant layers. Drastically reduces development time. |
| Molecular Dynamics Engine (ASE, LAMMPS) | Allows for running simulations using trained models as force fields (potential energy surfaces). Validates model utility in dynamic settings. |
| High-Performance Computing (HPC) Cluster with GPUs | Training on 3D graph data is computationally intensive. Multiple GPUs (NVIDIA A100/V100) enable feasible training times (hours to days) on large datasets. |
| Visualization Tools (ASE, VMD, Mayavi) | For visualizing molecular geometries, learned equivariant features (as vector fields on atoms), and simulation trajectories. |
| Metrics & Analysis Scripts | Custom code to compute equivariance error, direction-averaged force errors, and other symmetry-property specific analyses beyond standard MAE. |

Current state-of-the-art E(3)-equivariant models demonstrate superior data efficiency and accuracy compared to non-equivariant or invariant models, especially on tasks involving directional quantities like forces.

Table 3: Performance Comparison on MD17 (Ethanol)

| Model Type | Principle | Energy MAE [meV] | Force MAE [meV/Å] | Training Size (Confs) |
|---|---|---|---|---|
| SchNet (Invariant) | Distance-only | ~14.0 | ~40.0 | 1000 |
| DimeNet (Invariant) | Angles + Distances | ~9.0 | ~20.0 | 1000 |
| NequIP (E(3)-Equiv.) | Irreps & CG Products | ~2.5 | ~4.5 | 1000 |
| SE(3)-Transformer | Attn on Irreps | ~3.0 | ~6.0 | 1000 |

Data synthesized from recent literature (2022-2024). Values are approximate for illustration. The table highlights the order-of-magnitude improvement in force prediction, a critical metric for molecular dynamics, enabled by strict adherence to equivariance principles.

The prediction of molecular properties is a fundamental challenge in chemistry and drug discovery. Traditional machine learning approaches often treat molecules as static graphs, neglecting the essential physical principle that a molecule's energy and properties are invariant to rotations, translations, and reflections (Euclidean symmetries), while its directional quantities, like dipole moments, transform predictably (equivariantly). E(3)-equivariant Graph Neural Networks (GNNs) address this by embedding these geometric symmetries directly into the model architecture as an inductive bias. This bias constrains the hypothesis space, ensuring that model predictions respect the laws of physics, leading to improved data efficiency, generalization, and physical realism.

Quantitative Performance Comparison of Equivariant Models

Recent benchmarks demonstrate the superior performance of E(3)-equivariant models over non-equivariant baselines on key quantum chemical tasks. The data below, compiled from recent literature, highlights this advantage.

Table 1: Performance of E(3)-Equivariant vs. Non-Equivariant Models on QM9 Benchmark

| Model (Architecture) | Equivariance | MAE on μ (D) ↓ | MAE on α (a₀³) ↓ | MAE on ε_HOMO (meV) ↓ | Params (M) | Training Size (Molecules) |
|---|---|---|---|---|---|---|
| SchNet | Invariant | 0.033 | 0.235 | 41 | 4.1 | ~110,000 |
| DimeNet++ | Invariant | 0.029 | 0.044 | 24.6 | 1.8 | ~110,000 |
| SE(3)-Transformer | SE(3)-Equiv. | 0.012 | 0.035 | 19.3 | 1.5 | ~110,000 |
| NequIP | E(3)-Equiv. | 0.010 | 0.032 | 17.5 | 0.9 | ~110,000 |
| GemNet | E(3)-Equiv. | 0.008 | 0.030 | 16.2 | 9.2 | ~110,000 |

Key: μ = Dipole moment (vector), α = Isotropic polarizability (scalar), ε_HOMO = HOMO energy (scalar). Lower MAE is better. Data sourced from Batzner et al. (2022) and Gasteiger et al. (2021).

Table 2: Molecular Dynamics Stability Comparison (ACE Dataset)

| Model | Equivariance | Stable Trajectories (%) ↑ | Force MAE (meV/Å) ↓ | Energy MAE (meV/atom) ↓ |
|---|---|---|---|---|
| ANI-2x (NN potential) | Invariant | 12.1 | 38.2 | 6.8 |
| GNN (CGCF) | Invariant | 45.3 | 22.7 | 4.1 |
| Equivariant GNN (NequIP) | E(3)-Equiv. | 98.7 | 9.4 | 1.9 |

Stable trajectory defined as no bond breaking/formation over a 1 ns simulation. Data adapted from Batzner et al. (2022).

Core Experimental Protocols

Protocol 3.1: Training an E(3)-Equivariant GNN for Molecular Property Prediction

Objective: Train a model like NequIP or SE(3)-Transformer to predict quantum chemical properties from the QM9 dataset.

Materials & Data:

- QM9 Dataset: ~133k organic molecules with up to 9 heavy atoms (C, O, N, F). Contains 19 geometric, energetic, electronic, and thermodynamic properties calculated at DFT/B3LYP level.

- Framework: PyTorch or JAX.

- Libraries: e3nn, DGL or PyTorch Geometric, ASE (Atomic Simulation Environment).

Procedure:

- Data Partitioning: Split QM9 into standard training (110,000 molecules), validation (10,000), and test (remaining) sets. To assess out-of-distribution generalization, additionally evaluate on a scaffold split.

- Featurization: Represent each molecule as a graph with atoms as nodes. Initial node features: atomic number (one-hot), possibly atomic charge. Edge features: interatomic distance (expanded via Bessel functions with a polynomial envelope). For equivariant models, also encode the edge direction with spherical harmonics, which supply the higher-order (l ≥ 1) geometric features.

- Model Configuration:

- Choose an architecture (e.g., NequIP). Key hyperparameters: number of interaction blocks (4-6), irreducible representations (e3nn `o3` irreps), feature multiplicities (16-128), radial cutoff (4-5 Å).

- Use equivariant nonlinearities (gated or norm-based).

- Loss Function: For scalar outputs (energy, HOMO): Mean Squared Error (MSE). For vector/tensor outputs (dipole): MSE on the invariant norm or a direct equivariant loss.

- Training: Use AdamW optimizer with initial learning rate of 1e-3 and cosine decay. Batch size: 32-64. Train for 500-1000 epochs, monitoring validation loss for early stopping.

- Evaluation: Report Mean Absolute Error (MAE) on the held-out test set for target properties. Compare directional predictions by analyzing the error distribution on vector components.
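The Bessel-plus-envelope edge featurization from the Featurization step can be sketched as follows. This NumPy illustration assumes the zeroth-order spherical Bessel form sin(nπd/r_cut)/d with a √(2/r_cut) prefactor (DimeNet's normalization) and a polynomial envelope with p = 6; library implementations differ in the prefactor and may make the frequencies trainable.

```python
import numpy as np

def bessel_rbf(d, r_cut=5.0, n_basis=8):
    """sqrt(2/r_cut) * sin(n*pi*d/r_cut) / d for n = 1..n_basis; d must be > 0."""
    n = np.arange(1, n_basis + 1)
    d = np.asarray(d, dtype=float)[..., None]
    return np.sqrt(2.0 / r_cut) * np.sin(n * np.pi * d / r_cut) / d

def polynomial_envelope(d, r_cut=5.0, p=6):
    """Smooth cutoff u(x), x = d / r_cut, with u(0) = 1 and u = u' = u'' = 0 at x = 1."""
    x = np.asarray(d, dtype=float) / r_cut
    u = (1.0
         - (p + 1) * (p + 2) / 2 * x ** p
         + p * (p + 2) * x ** (p + 1)
         - p * (p + 1) / 2 * x ** (p + 2))
    return np.where(x < 1.0, u, 0.0)   # exactly zero at and beyond the cutoff

def edge_radial_features(d, r_cut=5.0, n_basis=8, p=6):
    """Per-edge radial features: Bessel basis damped by the envelope, shape (n_edges, n_basis)."""
    return bessel_rbf(d, r_cut, n_basis) * polynomial_envelope(d, r_cut, p)[..., None]

distances = np.array([0.5, 1.5, 4.999, 5.0])   # Å, illustrative edge lengths
feats = edge_radial_features(distances)
print(feats.shape)   # (4, 8)
```

Because the envelope and its first two derivatives vanish at r_cut, features (and therefore energies and forces) change smoothly as atoms enter or leave the cutoff sphere.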

Protocol 3.2: Running Stable Molecular Dynamics with a Learned Equivariant Potential

Objective: Use a trained equivariant GNN as a force field to run stable, energy-conserving MD simulations.

Materials:

- Trained Model: An E(3)-equivariant GNN trained on energies and forces (e.g., from ANI-MD or OC20 datasets).

- Simulation Engine: ASE's MD module or OpenMM with a custom force field plugin.

- Initial Structure: A 3D molecular conformation (e.g., from PDB or DFT optimization).

Procedure:

- Model Integration: Wrap the trained model to interface with the MD engine. The model must take atomic numbers and positions (Z, R) as input and output total energy (scalar) and atomic forces (vector, as negative gradient of energy w.r.t. positions).

- System Setup: Place the molecule in a simulation box with periodic boundary conditions if needed. Set the temperature (e.g., 300 K), and add a barostat if running NPT.

- Integrator Selection: Use a velocity Verlet integrator. A timestep of 0.5-1.0 fs is typical because it must resolve the fastest (X-H) vibrations; smooth, accurate equivariant force fields generally remain stable at these timesteps.

- Running Simulation:

- Initialize atomic velocities from a Maxwell-Boltzmann distribution at the target temperature.

- Run an equilibration phase (1-10 ps) with a Langevin thermostat.

- Switch to an NVE (microcanonical) ensemble for the production run to test energy conservation. Alternatively, continue NVT for property sampling.

- Run production simulation for >100 ps, saving trajectories at 10-100 fs intervals.

- Analysis:

- Energy Conservation (NVE): Calculate the drift in total energy (ΔE) over time. A good potential shows negligible drift.

- Stability: Monitor root-mean-square deviation (RMSD) and check for unphysical bond stretching or atom clashes.

- Property Calculation: Compute dynamical properties from the trajectory (e.g., radial distribution functions, vibrational spectra).
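The production-run and energy-conservation analysis above reduce to a velocity Verlet loop plus a drift fit. This NumPy sketch assumes reduced units and uses a hypothetical harmonic bond (`harmonic_bond`) in place of the trained equivariant model; any function returning `(energy, forces)` with forces = −∂E/∂R can be swapped in. It runs pure NVE without the thermostat equilibration phase to stay short.

```python
import numpy as np

def harmonic_bond(pos, k=30.0, d0=1.0):
    """Hypothetical stand-in for a trained model: diatomic harmonic bond.
    Returns (energy, forces) with forces = -dE/dR, shape (2, 3)."""
    rij = pos[1] - pos[0]
    d = np.linalg.norm(rij)
    energy = 0.5 * k * (d - d0) ** 2
    f_on_1 = -k * (d - d0) * rij / d
    return energy, np.stack([-f_on_1, f_on_1])

def run_nve(pos, vel, masses, energy_and_forces, dt=0.01, n_steps=2000):
    """Velocity Verlet in the NVE ensemble; returns the total energy after every step."""
    m = masses[:, None]
    _, forces = energy_and_forces(pos)
    totals = np.empty(n_steps)
    for step in range(n_steps):
        vel = vel + 0.5 * dt * forces / m        # half-step velocity update
        pos = pos + dt * vel                     # full-step position update
        e_pot, forces = energy_and_forces(pos)   # forces at the new positions
        vel = vel + 0.5 * dt * forces / m        # second half-step velocity update
        totals[step] = e_pot + 0.5 * np.sum(m * vel ** 2)
    return totals

def energy_drift(totals, dt):
    """Slope of a least-squares line through total energy vs. time."""
    time = dt * np.arange(len(totals))
    return np.polyfit(time, totals, 1)[0]

pos0 = np.array([[0.0, 0.0, 0.0], [1.2, 0.0, 0.0]])   # stretched bond, atoms at rest
totals = run_nve(pos0, np.zeros_like(pos0), np.ones(2), harmonic_bond)
print(f"drift = {energy_drift(totals, 0.01):.2e} energy units per time unit")
```

A symplectic integrator like velocity Verlet keeps the total energy bounded for a smooth potential, so a steady drift in the fitted slope points to non-conservative or non-smooth forces rather than to the integrator.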

Visualizations

Title: Equivariant GNN Workflow & Symmetry Constraint

Title: Equivariant vs. Non-Equivariant Model Behavior

The Scientist's Toolkit: Essential Research Reagents & Materials

Table 3: Key Computational Reagents for E(3)-Equivariant Molecular Modeling

| Item | Function / Description | Example / Specification |
|---|---|---|
| Quantum Chemistry Datasets | Provides ground-truth labels (energy, forces, properties) for training and evaluation. | QM9, ANI-1/2x, OC20, MD17, SPICE. Format: XYZ, HDF5. |
| Equivariant NN Libraries | Provides pre-built layers and operations for constructing E(3)-equivariant models. | e3nn (general), NequIP, SE(3)-Transformer, TorchMD-NET. |
| Graph Neural Network Frameworks | Backbone for efficient graph data structures and message passing. | PyTorch Geometric, Deep Graph Library (DGL), JAX + jraph. |
| Molecular Dynamics Engines | Software to perform simulations using learned neural network potentials. | ASE (flexible), LAMMPS (plugin), OpenMM (custom force). |
| High-Performance Computing (HPC) | GPU clusters for training large models and running long-timescale MD. | NVIDIA A100/V100 GPUs, multi-node training with DDP. |
| Ab-Initio Calculation Software | To generate new training data or validate predictions. | ORCA, Gaussian, Psi4, VASP (for materials). |
| Visualization & Analysis Tools | For inspecting molecular geometries, trajectories, and model attention. | VMD, PyMOL, MDAnalysis, matplotlib, plotly. |

Architectures and Implementations: Building and Applying E(3)-Equivariant Models

Within the broader thesis on E(3)-equivariant graph neural networks for molecular research, this document provides detailed application notes and protocols for four cornerstone architectures. The primary thesis posits that enforcing strict E(3)-equivariance (outputs that transform consistently with rotations, translations, and reflections of the input) in deep learning models for molecular systems leads to superior data efficiency, improved generalization, and more physically meaningful predictions in tasks such as molecular dynamics, property prediction, and drug discovery.

Architecture Specifications and Quantitative Comparison

Table 1: Core Architectural Specifications

| Feature / Architecture | e3nn | NequIP | SEGNN | EquiFormer |
|---|---|---|---|---|
| Core Equivariance Mechanism | Irreducible Representations (Irreps) & Tensor Product Networks | Equivariant Convolutions via Tensor-Product + MLP | Steerable E(3)-Equivariant Node & Edge Updates | Attention on Scalars & Vectors via Equivariant Kernel Integration |
| Primary Input | Atomic numbers, positions (vectors) | Atomic numbers, positions, edges | Node features (scalar/vector), edge attributes | Atomic numbers, positions, optional edge types |
| Key Mathematical Foundation | Spherical Harmonics, Clebsch-Gordan coefficients | Higher-order equivariant features (l=0,1,2,...), Bessel radial functions | Steerable feature vectors, equivariant non-linearities | Geometric attention, invariant scalar keys/queries, vector values |
| Message Passing Paradigm | Customizable tensor product blocks | Iterative high-order interaction blocks | Steerable node-to-edge & edge-to-node updates | Equivariant graph self-attention layers |
| Notable Non-Linearity | Gated non-linearities (scalar gates) | Gated non-linearity (activations on scalars, scalar gates on l > 0 features) | Gated equivariant non-linearities | SiLU on scalars, vector scaling by invariant features |
| Typical Output | Scalars (energy), Vectors (dipole), Tensors (polarizability) | Scalars (potential energy), Vectors (forces) | Scalars & Vectors for node/edge tasks | Scalars & Vectors for node-level predictions |

Table 2: Approximate Benchmark Performance

| Architecture | MD17 (Aspirin) Force MAE [meV/Å] | OC20 IS2RE Adsorption Energy MAE [eV] | QM9 Δε (HOMO-LUMO gap) MAE [meV] | Param. Efficiency (Relative) | Citation |
|---|---|---|---|---|---|
| e3nn (baseline) | ~13-15 | ~0.65-0.75 | ~40-50 | 1.0x (reference) | Geiger & Smidt, 2022 |
| NequIP | ~6 | ~0.55-0.65 | ~20-30 | ~1.5-2.0x | Batzner et al., 2022 |
| SEGNN | ~8-10 | ~0.60-0.70 | ~30-40 | ~1.2-1.5x | Brandstetter et al., 2022 |
| EquiFormer | ~9-11 | ~0.50-0.60 | ~25-35 | ~1.0-1.3x | Liao & Smidt, 2022 |

Note: Values are approximate summaries from literature; exact numbers depend on hyperparameters, dataset splits, and specific targets.
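The gated and norm-based non-linearities in Table 1 exist because an ordinary pointwise activation applied to vector components breaks equivariance. A minimal single-node NumPy sketch, assuming SiLU for scalars and sigmoid gates (the exact activations vary between libraries); `random_rotation` is included only so the check is self-contained:

```python
import numpy as np

def random_rotation(rng):
    """Sample a 3x3 rotation matrix (det = +1) via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def silu(x):
    return x / (1.0 + np.exp(-x))

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gate(scalars, gates, vectors):
    """Gated non-linearity for one node.
    scalars: (n_s,) l=0 features -> SiLU elementwise (safe: they are invariant).
    gates:   (n_v,) extra l=0 features -> sigmoid, one gate per vector channel.
    vectors: (n_v, 3) l=1 features -> scaled by their invariant gate, direction preserved."""
    return silu(scalars), sigmoid(gates)[:, None] * vectors

def norm_activation(vectors, bias):
    """Norm-based non-linearity: rescale each vector by a function of its invariant length."""
    norms = np.linalg.norm(vectors, axis=-1, keepdims=True)
    return sigmoid(norms + bias[:, None]) * vectors

rng = np.random.default_rng(1)
s, g, v = rng.normal(size=4), rng.normal(size=2), rng.normal(size=(2, 3))
R = random_rotation(rng)
_, v_out = gate(s, g, v)
_, v_rot = gate(s, g, v @ R.T)
print(np.allclose(v_rot, v_out @ R.T))            # gate commutes with rotation
print(np.allclose(silu(v @ R.T), silu(v) @ R.T))  # elementwise SiLU on vectors does not
```

Both variants only ever multiply a vector by an invariant scalar, which is the property that keeps the output a proper l = 1 feature.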

Detailed Experimental Protocols

Protocol 1: Training an Equivariant Model for Molecular Property Prediction (QM9)

Objective: Train a model to predict quantum chemical properties from molecular geometry. Materials: QM9 dataset, PyTorch, PyTorch Geometric, architecture-specific library (e3nn, nequip, etc.), GPU.

Data Preparation:

- Download and partition the QM9 dataset (train/val/test, e.g., 110k/10k/10k molecules).

- For each molecule, extract:

- Node features: Atomic number (Z ∈ {1,6,7,8,9}) encoded as one-hot or embedding.

- Graph structure: Build edges between atoms within a cutoff radius (e.g., 5.0 Å) or via molecular bonds.

- Edge attributes: Relative displacement vector r_ij and its norm (used for radial basis functions).

- Normalize target properties (e.g., HOMO, LUMO, energy) using training set statistics.

Model Initialization:

- Select architecture (e.g., NequIP with l_max = 2 for capturing angular information).

- Define radial network (typically a multi-layer perceptron on Bessel basis functions).

- Specify output head: a single invariant scalar for energy-like properties, or an equivariant head for vector/tensor properties.

Training Loop:

- Loss Function: Mean Squared Error (MSE) on the target property.

- Optimizer: AdamW optimizer with learning rate = 3e-4, weight decay = 1e-12.

- Batch Size: 32-128 molecules per batch (using gradient accumulation if necessary).

- Scheduler: ReduceLROnPlateau on validation loss.

- Equivariance Verification: Periodically validate equivariance via random rotation/translation of input coordinates and checking output transformation.

Evaluation:

- Report MAE on the held-out test set.

- Compare against baseline models (SchNet, DimeNet++) and theoretical limits.
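The Equivariance Verification step in the training loop can be written as a small, model-agnostic harness. In this NumPy sketch, `toy_vector_model` is a hypothetical equivariant per-atom vector model used only to exercise the check; a float32 model in practice needs a looser tolerance than float64 round-off. For scalar outputs, compare `model(R r + t)` directly with `model(r)` instead of rotating the reference.

```python
import numpy as np

def random_rotation(rng):
    """Sample a 3x3 rotation matrix (det = +1) via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def toy_vector_model(pos, r_cut=3.0):
    """Hypothetical equivariant model: v_i = sum_j w(d_ij) (r_j - r_i), w invariant."""
    rij = pos[None, :, :] - pos[:, None, :]          # rij[i, j] = r_j - r_i
    d = np.linalg.norm(rij, axis=-1)
    w = np.where((d > 0) & (d < r_cut), np.exp(-d), 0.0)
    return np.einsum("ij,ijk->ik", w, rij)

def equivariance_error(model, pos, rng, n_trials=10):
    """Max |model(R r + t) - R model(r)| over random rotations R and translations t."""
    ref = model(pos)
    worst = 0.0
    for _ in range(n_trials):
        R, t = random_rotation(rng), rng.normal(size=3)
        out = model(pos @ R.T + t)
        worst = max(worst, float(np.max(np.abs(out - ref @ R.T))))
    return worst

rng = np.random.default_rng(0)
pos = rng.normal(scale=1.5, size=(6, 3))
print(equivariance_error(toy_vector_model, pos, rng))                   # round-off level
print(equivariance_error(lambda p: toy_vector_model(p) + p, pos, rng))  # large: not equivariant
```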

Protocol 2: Running Molecular Dynamics with Equivariant Force Fields

Objective: Use a trained equivariant model to simulate molecular motion via forces. Materials: Trained model (e.g., on ANI or MD17), ASE (Atomic Simulation Environment) or OpenMM, initial molecular geometry.

Model Preparation:

- Load the trained model checkpoint. Ensure it outputs both energy (scalar) and negative gradients w.r.t. atomic positions (forces, vectors). Most libraries (NequIP, Allegro) provide this natively.
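Whether forces come from autograd (in PyTorch, `torch.autograd.grad` of the energy with respect to positions) or from an analytic expression, they must equal −∂E/∂R. A central finite-difference check catches sign and unit mistakes before any simulation is launched. This NumPy sketch uses a hypothetical Morse-like pair potential as a stand-in for the trained model.

```python
import numpy as np

def morse_energy(pos, d0=1.0):
    """Hypothetical smooth pair potential: E = sum_{i<j} (1 - exp(-(d_ij - d0)))^2."""
    d = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    i, j = np.triu_indices(len(pos), k=1)
    x = np.exp(-(d[i, j] - d0))
    return float(np.sum((1.0 - x) ** 2))

def morse_forces(pos, d0=1.0):
    """Analytic forces F_i = -sum_j phi'(d_ij) (r_i - r_j) / d_ij."""
    diff = pos[:, None, :] - pos[None, :, :]        # diff[i, j] = r_i - r_j
    d = np.linalg.norm(diff, axis=-1)
    np.fill_diagonal(d, 1.0)                        # avoid 0/0 for i == j (diff is zero there)
    x = np.exp(-(d - d0))
    dphi = 2.0 * (1.0 - x) * x                      # phi'(d)
    np.fill_diagonal(dphi, 0.0)
    return -np.einsum("ij,ijk->ik", dphi / d, diff)

def force_check(energy_fn, forces_fn, pos, h=1e-5):
    """Max |F_model - F_fd|, with F_fd = -(E(r + h) - E(r - h)) / 2h per coordinate."""
    fd = np.zeros_like(pos)
    for a in range(pos.shape[0]):
        for k in range(3):
            plus, minus = pos.copy(), pos.copy()
            plus[a, k] += h
            minus[a, k] -= h
            fd[a, k] = -(energy_fn(plus) - energy_fn(minus)) / (2.0 * h)
    return float(np.max(np.abs(fd - forces_fn(pos))))

rng = np.random.default_rng(0)
pos = rng.uniform(0.0, 3.0, size=(5, 3))
print(force_check(morse_energy, morse_forces, pos))
```

For a translation-invariant energy the forces should also sum to zero, which is a second cheap sanity check.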

Integration with MD Engine:

- Wrap the model as a `Calculator` in ASE or a `Force` in OpenMM.

- This wrapper must take atomic numbers and positions as input, call the model, and return the energy and forces.

Simulation Setup:

- Define the initial system (e.g., a single aspirin molecule in a vacuum).

- Set up the dynamics integrator (e.g., Langevin at 300K with a 0.5 fs timestep).

- Attach the model-based calculator as the sole source of interatomic forces.

Production Run & Analysis:

- Run equilibration for a short period (e.g., 5 ps).

- Run production dynamics (e.g., 50 ps), saving trajectories (positions, velocities, energies).

- Analyze trajectories: compute radial distribution functions, mean squared displacement, vibrational spectra, and compare to reference DFT MD if available.

Visualization of Architectures and Workflows

Title: Generic E(3)-Equivariant Graph Network Workflow

Title: Architectural Paths from Input to Prediction

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software Tools & Libraries

| Item | Function | Example/URL |
|---|---|---|
| e3nn Library | Core framework for building E(3)-equivariant networks using irreducible representations. | pip install e3nn |
| NequIP / Allegro | High-performance implementations for training interatomic potentials. | GitHub: mir-group/nequip |
| PyTorch Geometric | General graph neural network library with 3D point cloud support. | torch-geometric.readthedocs.io |
| ASE (Atomic Simulation Environment) | Python suite for setting up, running, and analyzing MD simulations with custom calculators. | wiki.fysik.dtu.dk/ase |
| OpenMM | High-performance MD toolkit for GPU-accelerated simulations; can integrate custom forces. | openmm.org |
| JAX + Equinox | Enables efficient equivariant model development with automatic differentiation and just-in-time compilation. | GitHub: patrick-kidger/equinox |
| RDKit | Cheminformatics toolkit for molecule manipulation, conformer generation, and featurization. | rdkit.org |

Table 4: Key Datasets & Benchmarks

| Item | Function | Content & Size |
|---|---|---|
| QM9 | Benchmark for quantum chemical property prediction. | ~134k small organic molecules with 12+ DFT-calculated properties. |
| MD17 / rMD17 | Benchmark for molecular dynamics force prediction. | 10 molecules, ab initio trajectories (forces/energies). |
| ANI (e.g., ANI-1x, ANI-2x) | Large-scale dataset for developing transferable potentials. | Millions of DFT conformations for HCNO-containing molecules. |
| Open Catalyst OC20 | Benchmark for catalyst discovery (adsorption energy, relaxation). | >1M relaxations of catalyst-adsorbate systems. |
| Protein Data Bank (PDB) | Source for 3D structures of proteins, ligands, and complexes for drug discovery tasks. | >200k experimental structures. |

Within the broader thesis on E(3)-equivariant graph neural networks (GNNs) for molecular research, data preparation is the critical, foundational step. E(3)-equivariant models are designed to be invariant or equivariant to translations, rotations, and reflections (the Euclidean group E(3)) of 3D molecular structures. This property guarantees that model predictions depend only on the intrinsic geometry of the molecule, not its arbitrary orientation in space. The transformation of raw XYZ atomic coordinates into a structured geometric graph representation is what enables these models to learn physical and quantum mechanical laws directly from data, with applications in drug discovery, protein folding, and materials science.

Core Data Components & Quantitative Benchmarks

The quality of the geometric graph directly impacts model performance on downstream tasks. Key quantitative aspects of common molecular datasets are summarized below.

Table 1: Key Molecular Datasets for E(3)-Equivariant GNNs

| Dataset | Typical Size | Node Features | Edge/Geometric Features | Primary Task | Reported Performance (MAE) with Equivariant Models |
|---|---|---|---|---|---|
| QM9 | ~134k small organic molecules | Atom type, partial charge, hybridization | Distance, vector (r_ij), possibly bond type | Quantum property regression (e.g., μ, α, ε_HOMO) | α: ~0.046 (PaiNN), U0: ~8 meV (SphereNet) |
| MD17 (and variants) | ~100k conformations per molecule | Atom type (C, H, N, O) | Distance, direction vectors | Energy & force prediction | Energy: < 1 meV/atom, Forces: ~1-4 meV/Å (NequIP, Allegro) |
| OC20 | ~1.3M catalyst adsorbate systems | Atom type (~70 elements) | Distance, vectors, angles | Adsorption energy & force prediction | S2EF: ~0.65 eV/Å (Force MAE), IS2RE: ~0.73 eV (Energy MAE) |
| PDBBind | ~20k protein-ligand complexes | Atom/residue type, chirality, formal charge | Inter-atomic distances, protein-ligand interface vectors | Binding affinity prediction (pKd/pKi) | ~1.0-1.2 RMSE (log scale) for core set |

Table 2: Common Geometric Feature Definitions & Impact

| Feature Type | Mathematical Form | E(3) Transformation Property | Common Use in Models |
|---|---|---|---|
| Scalars (l=0) | Distance: ‖r_ij‖ | Invariant | Used for edge weighting, radial basis functions. |
| Vectors (l=1) | Direction: r_ij / ‖r_ij‖ | Equivariant (rotate with system) | Direct input for tensor products, message passing. |
| Spherical Harmonics (l>1) | Y_l^m(θ, φ) | Equivariant (irreducible rep) | Used in higher-order messages (e.g., Cormorant, MACE). |
| Tensor Products | Coupling of features of order l1 & l2 | Outputs are Clebsch-Gordan summed | Core operation for feature interaction in SE(3)-equivariant nets. |

Detailed Experimental Protocols

Protocol 3.1: Constructing a Geometric Graph from XYZ Coordinates

Objective: Convert a set of atomic coordinates and elements into a graph suitable for an E(3)-equivariant GNN. Materials: XYZ file, periodic table information, computational environment (Python, PyTorch, DGL/PyG).

Procedure:

- Node Feature Initialization:

- Parse the input file (e.g., `.xyz`, `.pdb`, `.pos`) to obtain atomic numbers Z_i and Cartesian coordinates r_i ∈ ℝ³.

- Encode atomic numbers into a one-hot or learned embedding vector h_i^0. Additional invariant node features may include atomic mass, formal charge, hybridization state (if known), and ring membership.

Edge Connectivity & Geometric Feature Calculation:

- Define Neighbors: For a cutoff radius r_cut (e.g., 5.0 Å), compute the pairwise distance matrix d_ij = ‖r_i − r_j‖.

- Create an edge between nodes i and j if 0 < d_ij ≤ r_cut.

- For each edge (i, j), compute:

- Invariant scalar: the distance d_ij.

- Radial basis expansion: project d_ij onto a set of Gaussian or Bessel basis functions to obtain a smooth feature vector RBF(d_ij).

- Equivariant vector: the normalized displacement vector r_ij = (r_j − r_i) / d_ij. For higher-order models, compute the spherical harmonic projections Y_l^m(r_ij).

Optional Edge Attribute Initialization:

- If explicit bond information is available (e.g., from SMILES or a force field), encode bond type (single, double, triple, aromatic) as a one-hot vector e_ij.

- Alternatively, a continuous bond feature can be derived from the distance using a Gaussian smearing of known bond lengths.

Graph Assembly:

- Assemble the node feature matrix H, the edge index list E, and an edge attribute tensor containing [RBF(d_ij), r_ij].

- Store the coordinates r_i as a separate, mandatory attribute of the graph. These are the geometric attributes that transform under E(3) actions.

Validation:

- Apply a random 3D rotation R and translation t to the coordinates: r_i' = R r_i + t.

- Recompute the edge vectors r_ij' from r_i'.

- Confirm that d_ij is unchanged (to floating-point precision) and that r_ij' = R r_ij, which verifies that the graph representation correctly separates invariant and equivariant components.
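Protocol 3.1 can be condensed into a short NumPy sketch. `build_radius_graph` and `one_hot` are illustrative helpers (a brute-force O(N²) neighbor search and the QM9 element set H/C/N/O/F), not the PyG or ASE functions serving similar purposes; the last lines carry out the Validation step.

```python
import numpy as np

def random_rotation(rng):
    """Sample a 3x3 rotation matrix (det = +1) via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def one_hot(z, elements=(1, 6, 7, 8, 9)):
    """One-hot node features h_i^0 for the QM9 elements H, C, N, O, F."""
    index = {e: k for k, e in enumerate(elements)}
    h = np.zeros((len(z), len(elements)))
    h[np.arange(len(z)), [index[int(a)] for a in z]] = 1.0
    return h

def build_radius_graph(pos, r_cut=5.0):
    """Edges (i, j) with 0 < d_ij <= r_cut, distances d_ij, unit vectors r_ij = (r_j - r_i) / d_ij."""
    diff = pos[None, :, :] - pos[:, None, :]       # diff[i, j] = r_j - r_i
    d = np.linalg.norm(diff, axis=-1)
    src, dst = np.nonzero((d > 0) & (d <= r_cut))
    dist = d[src, dst]
    unit = diff[src, dst] / dist[:, None]
    return np.stack([src, dst]), dist, unit

# Validation: rotate and translate, rebuild the graph, compare.
rng = np.random.default_rng(4)
z = np.array([8, 1, 1, 6, 1])
pos = rng.uniform(0.0, 4.0, size=(5, 3))
R, t = random_rotation(rng), rng.normal(size=3)

edges, dist, unit = build_radius_graph(pos)
edges2, dist2, unit2 = build_radius_graph(pos @ R.T + t)
print(np.array_equal(edges, edges2), np.allclose(dist, dist2), np.allclose(unit2, unit @ R.T))
```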

Protocol 3.2: Preparing a Dataset for Equivariant Training (QM9 Example)

Objective: Create a processed, cached dataset of geometric graphs for efficient model training.

Materials: QM9 dataset (via torch_geometric.datasets.QM9), data processing script.

Procedure:

- Download and Split: Download the QM9 dataset. Use a standard stratified split (e.g., 110k train, 10k val, ~11k test) based on molecular size or scaffold to avoid data leakage.

- Graph Conversion: Apply Protocol 3.1 to each molecule with a consistent r_cut (e.g., 5.0 Å). Include atomic number and possibly formal charge as node features.

- Target Normalization: For the regression target (e.g., HOMO energy), compute the mean (μ) and standard deviation (σ) across the training set only. Normalize targets as y' = (y − μ) / σ, and store μ and σ for the inverse transformation during inference.

- Caching: Save the list of processed `Data` objects (PyG) or graph tensors (DGL) to disk to avoid costly recomputation on each epoch.

- Dataloader Configuration: Use a batched data loader. A batch is a disjoint union of molecular graphs: node indices are offset per molecule, no edges connect different molecules, and each molecule's coordinates stay in its own frame, so the model remains equivariant for each molecule independently. PyG's `DataLoader` builds such batches automatically and provides a `batch` vector mapping each node to its molecule; other frameworks may need a custom collate function that does the same.
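For readers not using PyG's `DataLoader`, the batching and target-normalization steps can be sketched in plain NumPy. The dictionary keys (`z`, `pos`, `edge_index`, `y`, `batch`) mirror PyG's attribute names, but `collate`, `fit_normalizer`, `normalize`, and `denormalize` are hypothetical helpers written for this illustration.

```python
import numpy as np

def collate(graphs):
    """Merge molecular graphs into one disjoint-union graph.
    Each graph: dict with 'z' (N,), 'pos' (N, 3), 'edge_index' (2, E) and a scalar target 'y'."""
    z, pos, edges, batch, y = [], [], [], [], []
    offset = 0
    for k, g in enumerate(graphs):
        n = len(g["z"])
        z.append(g["z"])
        pos.append(g["pos"])                      # coordinates stay in each molecule's own frame
        edges.append(g["edge_index"] + offset)    # shift indices; no edges between molecules
        batch.append(np.full(n, k))               # node -> molecule index
        y.append(g["y"])
        offset += n
    return {"z": np.concatenate(z), "pos": np.concatenate(pos),
            "edge_index": np.concatenate(edges, axis=1),
            "batch": np.concatenate(batch), "y": np.array(y, dtype=float)}

def fit_normalizer(train_y):
    """Mean and standard deviation computed from the TRAINING targets only."""
    return float(np.mean(train_y)), float(np.std(train_y))

def normalize(y, mu, sigma):
    return (y - mu) / sigma

def denormalize(y_norm, mu, sigma):
    return y_norm * sigma + mu

g1 = {"z": np.array([6, 1, 1]), "pos": np.zeros((3, 3)), "edge_index": np.array([[0, 1], [1, 0]]), "y": 1.0}
g2 = {"z": np.array([8, 1]), "pos": np.ones((2, 3)), "edge_index": np.array([[0, 1], [1, 0]]), "y": 3.0}
b = collate([g1, g2])
mu, sigma = fit_normalizer(b["y"])   # illustrative: here the two molecules play the training set
print(b["batch"], b["edge_index"], normalize(b["y"], mu, sigma))
```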

Mandatory Visualizations

Data Preparation for Equivariant GNNs

E(3)-Equivariance Validation Test

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Software & Libraries for Geometric Graph Preparation

| Tool/Library | Function & Purpose | Key Feature for Equivariance |
|---|---|---|
| PyTorch Geometric (PyG) | Graph deep learning framework. Handles graph data structures, batching, and provides standard molecular datasets. | Data object can store pos (coordinates) and edge_vectors; essential for customizing message passing. |
| Deep Graph Library (DGL) | Alternative graph neural network library with efficient message passing primitives. | Compatible with libraries like DGL-LifeSci; good for large-scale distributed training. |
| e3nn / MACE / NequIP | Specialized libraries for E(3)-equivariant networks. | Provide core operations (tensor products, spherical harmonics, Irreps) and often include data tools. |
| ASE (Atomic Simulation Environment) | Python toolkit for working with atoms. | Parses many file formats (.xyz, .pdb), calculates distances/neighbors, applies rotations. |
| RDKit | Cheminformatics toolkit. | Generates 3D conformers from SMILES, computes molecular descriptors (node features), identifies bond orders. |
| Pymatgen | Materials analysis library. | Essential for periodic systems (crystals), computes neighbor lists with periodic boundary conditions. |
| JAX (with JAX-MD) | Autograd and accelerated linear algebra. | Enables data preparation and model training on GPU/TPU with end-to-end differentiability. |

Application Notes & Protocols

Thesis Context

This protocol details the implementation of an E(3)-Equivariant Graph Neural Network (GNN) for predicting quantum chemical properties, specifically Density Functional Theory (DFT)-level molecular energy, within a broader research thesis. The core thesis investigates how incorporating the fundamental symmetries of 3D Euclidean space—translation, rotation, and reflection (the E(3) group)—into deep learning architectures drastically improves data efficiency, generalization, and physical fidelity in molecular property prediction compared to invariant models.

Key Architectural Principles

E(3)-equivariant networks operate on geometric tensors (scalars, vectors, tensors) that transform predictably under 3D rotations and translations. Layers are constructed using tensor products and Clebsch-Gordan coefficients to guarantee that the transformation rules of the output features are strictly controlled by the input features, ensuring the network's predictions transform identically to the true physical property under coordinate system changes.

Core Protocol: Model Implementation

Protocol 1: Data Preparation & Representation

Objective: Convert molecular structures into a graph representation suitable for an E(3)-equivariant model.

- Input Data: Use a dataset of 3D molecular structures (e.g., QM9, ANI-1, MD17) with associated DFT-computed total energies.

- Graph Construction:

- Nodes: Each atom is a node.

- Node Features (h_i^0): Embed atom type (Z) into a one-hot or learned vector and use it to initialize the scalar (l=0) features. Initialize vector (l=1) features to zero: a function of atomic number alone carries no direction, so any nonzero vector would break equivariance. The vectors become nonzero after the first interaction block, which injects direction through the edge spherical harmonics.

- Edges: Connect atoms within a defined cutoff radius (e.g., 5 Å).

- Edge Attributes (a_ij): Compute the relative displacement vector r_ij = r_j − r_i. Encode its spherical harmonic representation Y^l(r_ij) for degrees l = 0, 1, ... (typically l = 0, 1, 2), together with the interatomic distance passed through a radial basis function (RBF).

Protocol 2: Building an E(3)-Equivariant Interaction Block

Objective: Implement a single message-passing layer that updates node features equivariantly.

Edge Message Formation: For each edge (i, j):

- Compute a learnable weight W_ij from the concatenated scalar features of nodes i and j and the l=0 component of the edge embedding.

- For each feature type (irrep) l (e.g., scalars l=0, vectors l=1):

- Perform a tensor product ⊗ between the l_f feature of the sending node j and the l_e edge attribute Y(r_ij). The output contains irreps of degree |l_f − l_e|, ..., l_f + l_e.

- Use Clebsch-Gordan coefficients to project the product onto the target irrep type l; paths from different (l_f, l_e) pairs that yield type l are combined with learnable weights.

- Weight the result by W_ij and by the output of a learned radial network applied to RBF(‖r_ij‖).

Node Feature Update: For each node i:

- Aggregate messages from all neighboring nodes j ∈ N(i) for each irrep l.

- Combine the aggregated type-l messages with the node's original type-l features (a residual update) and apply a gated nonlinearity: pointwise activations act only on the scalar (l=0) channels, while l > 0 features are multiplied by learned scalar gates.

Implementation Note: Use established libraries such as e3nn or Tensor Field Networks to handle tensor products and Clebsch-Gordan expansions correctly.
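For two l = 1 inputs, the decomposition described in the tensor-product step has a familiar Cartesian form: the 3 × 3 outer product splits into an l = 0 part (the dot product), an l = 1 part (the cross product, carried by the antisymmetric part) and an l = 2 part (the symmetric traceless matrix), giving 1 + 3 + 5 = 9 components. Libraries such as e3nn perform the same split in the spherical basis with Clebsch-Gordan coefficients; this NumPy sketch only demonstrates how each piece transforms.

```python
import numpy as np

def random_rotation(rng):
    """Sample a 3x3 rotation matrix (det = +1) via QR decomposition."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    q = q * np.sign(np.diag(r))
    if np.linalg.det(q) < 0:
        q[:, 0] = -q[:, 0]
    return q

def tensor_product_1x1(a, b):
    """Split a (x) b for two l=1 vectors into l=0, l=1 and l=2 parts (Cartesian form)."""
    outer = np.outer(a, b)
    l0 = np.trace(outer)                   # a . b: invariant scalar
    l1 = np.cross(a, b)                    # rotates like a vector; parity-even (e3nn label "1e")
    sym = 0.5 * (outer + outer.T)
    l2 = sym - (l0 / 3.0) * np.eye(3)      # symmetric, traceless: 5 independent components
    return l0, l1, l2

rng = np.random.default_rng(5)
a, b = rng.normal(size=3), rng.normal(size=3)
R = random_rotation(rng)
l0, l1, l2 = tensor_product_1x1(a, b)
r0, r1, r2 = tensor_product_1x1(R @ a, R @ b)
print(np.isclose(r0, l0), np.allclose(r1, R @ l1), np.allclose(r2, R @ l2 @ R.T))
```

Each output block transforms with its own rule (unchanged, R v, R M Rᵀ), which is exactly why equivariant layers keep features grouped by irrep type.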

Protocol 3: Network Architecture & Training

Objective: Assemble interaction blocks into a full model and define the training procedure.

Architecture:

- Embedding Layer: Project atomic number into initial scalar and vector features.

- Interaction Blocks: Stack 4-6 E(3)-equivariant interaction blocks (as per Protocol 2).

- Invariant Readout: To predict a scalar energy, pass only the final l=0 (scalar) node features through a multilayer perceptron (MLP) and sum over all nodes (global pooling). This ensures invariance to rotation and translation.

- Output: A single scalar value representing the predicted total energy.

Training:

- Loss Function: Mean Absolute Error (MAE) or Mean Squared Error (MSE) between predicted and DFT-computed energies.

- Optimizer: AdamW optimizer with a learning rate of 1e-3 and a decay schedule.

- Regularization: Weight decay and dropout on the invariant MLP layers.
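The invariant readout amounts to a per-atom MLP on the scalar channels followed by a per-molecule sum. This NumPy sketch assumes a two-layer SiLU MLP with random, untrained weights and a `batch` vector of the kind produced when graphs are batched; `atomwise_mlp` and `readout` are illustrative names.

```python
import numpy as np

def silu(x):
    return x / (1.0 + np.exp(-x))

def atomwise_mlp(scalars, w1, b1, w2, b2):
    """Two-layer MLP applied to each atom's l=0 features; returns one energy per atom."""
    return (silu(scalars @ w1 + b1) @ w2 + b2)[:, 0]

def readout(scalars, batch, n_graphs, params):
    """Sum per-atom energies into one total energy per molecule (global sum pooling)."""
    atom_energy = atomwise_mlp(scalars, *params)
    energies = np.zeros(n_graphs)
    np.add.at(energies, batch, atom_energy)   # unbuffered scatter-add by molecule index
    return energies

rng = np.random.default_rng(0)
params = (rng.normal(size=(4, 8)), rng.normal(size=8), rng.normal(size=(8, 1)), rng.normal(size=1))
scalars = rng.normal(size=(5, 4))             # 5 atoms, 4 scalar channels (illustrative)
batch = np.array([0, 0, 0, 1, 1])             # atoms 0-2 -> molecule 0, atoms 3-4 -> molecule 1
print(readout(scalars, batch, 2, params))
```

Summation makes the prediction independent of atom ordering and size-extensive (two non-interacting copies give twice the energy), while rotation invariance follows from using only l = 0 inputs.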

Table 1: Comparative Performance of GNN Models on QM9 DFT Energy Prediction (MAE in meV)

| Model Architecture | Principle | Test MAE (meV) | Standing | Key Advantage |
|---|---|---|---|---|
| SchNet (2017) | Invariant | ~14 | Baseline | Introduced continuous-filter convolutions. |
| DimeNet++ (2020) | Invariant | ~6.3 | State-of-the-art (Invariant) | Uses directional message passing. |
| SE(3)-Transformer (2020) | Equivariant | ~8.5 | Competitive | Attentional equivariant model. |
| NequIP (2021) | Equivariant | ~4.7 | State-of-the-Art | High body-order, exceptional data efficiency. |
| MACE (2022) | Equivariant | ~4.5 | State-of-the-Art | Higher body-order via atomic basis. |

Table 2: Required Research Reagent Solutions (Software & Data)

| Item | Function | Example/Format |
|---|---|---|
| Quantum Chemistry Dataset | Provides ground-truth labels (energy, forces) for training and evaluation. | QM9 (130k small organic molecules), ANI-1 (20M conformations), OC20 (1.2M surfaces) |
| Molecular Graph Builder | Converts XYZ coordinates and atomic numbers into graph representations. | ase, pymatgen, custom Python script |
| E(3)-Equivariant Framework | Provides core operations (tensor products, spherical harmonics). | e3nn, TensorField Networks, NequIP, MACE |
| Deep Learning Framework | Provides automatic differentiation, optimization, and GPU acceleration. | PyTorch, JAX |
| High-Performance Compute (HPC) | Accelerates training (days→hours) and quantum chemistry calculations. | GPU Cluster (NVIDIA A100/V100) |

Experimental Validation Protocol

Objective: Benchmark the implemented model against standard baselines.

- Dataset Splitting: Use a standard scaffold or random split for QM9 (e.g., 110k train, 10k val, 10k test).

- Baseline Models: Train and evaluate invariant models (SchNet, DimeNet++) under identical conditions.

- Metrics: Report MAE on the test set for total energy U0. For models predicting forces, report force MAE (eV/Å).

- Data Efficiency Test: Train all models on subsets (1k, 10k, 50k samples) to demonstrate the superior sample efficiency of equivariant models.

- Ablation Study: Test model performance with and without vector (l=1) features, or with a reduced number of interaction blocks.

Visualized Workflows

Title: E(3)-Equivariant GNN Training Workflow

Title: Single Equivariant Interaction Block

Within the paradigm shift towards machine learning-driven molecular modeling, E(3)-equivariant graph neural networks (GNNs) represent a foundational breakthrough. These architectures respect the fundamental symmetries of Euclidean space—translation, rotation, and inversion—ensuring that predictions are inherently consistent with the laws of physics. This Application Note details Allegro, a state-of-the-art E(3)-equivariant GNN, and its application in performing high-fidelity, large-scale molecular dynamics (MD) simulations. Allegro enables ab initio-accurate simulations at significantly reduced computational cost, directly advancing the thesis that E(3)-equivariant models are critical for the next generation of molecular research and drug discovery.

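Allegro is a deep learning interatomic potential model. Its core innovation lies in its strictly local, many-body, equivariant architecture: unlike message-passing networks such as NequIP, it does not propagate information beyond the cutoff radius, so each atom's energy depends only on its local environment.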

Key Technical Features:

- E(3)-Equivariance: Achieved through the use of irreducible representations (irreps) and tensor products, guaranteeing that the potential energy surface transforms correctly under rotation of the system.

- Strict Locality: Interactions are modeled only within a defined cutoff radius, enabling linear scaling with system size and efficient parallelization.

- High Body-Order: Models many-body interactions beyond simple pair potentials, capturing complex quantum chemical effects essential for accuracy.

- End-to-End Differentiability: Allows for direct computation of forces (negative gradients of energy) and stress tensors.
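Strict locality is what makes linear scaling possible: with a fixed cutoff, each atom only needs to be compared with atoms in neighbouring spatial cells. This NumPy/standard-library sketch builds a neighbor list with a cell list for a non-periodic system. It illustrates the idea only; in production runs the MD engine (e.g., LAMMPS) typically supplies the neighbor lists to the potential.

```python
import numpy as np
from collections import defaultdict
from itertools import product

def cell_list_neighbors(pos, r_cut):
    """Ordered neighbor pairs (i, j), i != j, with |r_j - r_i| <= r_cut (no periodic boundaries).
    Atoms are binned into cubic cells of edge r_cut, so neighbors can only lie in the
    27 surrounding cells; at roughly uniform density the work grows linearly with N."""
    cells = np.floor((pos - pos.min(axis=0)) / r_cut).astype(int)
    bins = defaultdict(list)                           # cell index -> atom indices
    for i, c in enumerate(map(tuple, cells)):
        bins[c].append(i)
    pairs = []
    for c, members in bins.items():
        for shift in product((-1, 0, 1), repeat=3):    # the cell itself and its 26 neighbours
            other = bins.get((c[0] + shift[0], c[1] + shift[1], c[2] + shift[2]))
            if not other:
                continue
            for i in members:
                for j in other:
                    if i != j and np.linalg.norm(pos[j] - pos[i]) <= r_cut:
                        pairs.append((i, j))
    return sorted(pairs)

rng = np.random.default_rng(7)
pos = rng.uniform(0.0, 10.0, size=(60, 3))   # 60 atoms in a 10 Å box, illustrative
pairs = cell_list_neighbors(pos, 2.5)
print(len(pairs), "directed neighbor pairs")
```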

Quantitative Performance Data

Table 1: Benchmark Performance of Allegro on MD17 and 3BPA Datasets

| Model | Test Force MAE (meV/Å) (Aspirin) | Simulation Stability (ps) (Ethanol) | Relative Speed vs. DFT (DFT = 1) | Body-Order |
|---|---|---|---|---|
| Allegro | ~13 | > 1000 | 10⁴–10⁵ | High-order |
| NequIP | ~15 | ~500 | 10⁴–10⁵ | High-order |
| SchNet | ~40 | < 50 | 10⁵ | Low-order |
| DFT (Reference) | 0 | N/A | 1 | Exact |

Table 2: Application-Specific Results from Recent Studies

| System Simulated | Key Metric | Allegro Result | Classical Force Field Result |
|---|---|---|---|
| Li₃PS₄ Solid Electrolyte | Li⁺ Diffusion Coeff. (cm²/s) | 1.2 × 10⁻⁸ | 3.8 × 10⁻⁹ (Underestimated) |
| (Ala)₈ Protein Folding | RMSD to Native (Å) | 2.1 | 4.7 |
| Water/Catalyst Interface | O-H Bond Dissoc. Barrier (eV) | 4.31 | 3.95 (Inaccurate) |
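The table above reports a Li⁺ diffusion coefficient. A common way to extract such a value from an MD trajectory is the Einstein relation, MSD(t) ≈ 6Dt in three dimensions, although the cited studies may have used a different estimator. The sketch below assumes unwrapped coordinates and a single time origin. The function names are hypothetical.

```python
import numpy as np


def mean_squared_displacement(traj):
    """MSD(t) averaged over atoms, with frame 0 as the single time origin.

    traj: unwrapped positions with shape (n_frames, n_atoms, 3).
    """
    disp = traj - traj[0]
    return np.mean(np.sum(disp ** 2, axis=-1), axis=1)


def diffusion_coefficient(traj, dt, fit_start=0.2):
    """Einstein relation in 3D: MSD(t) ~ 6 D t, so D = slope / 6."""
    msd = mean_squared_displacement(traj)
    times = np.arange(len(msd)) * dt
    start = int(fit_start * len(msd))       # skip the short-time, non-diffusive regime
    slope, _intercept = np.polyfit(times[start:], msd[start:], 1)
    return float(slope / 6.0)
```

With positions in Å and `dt` in ps, the result is in Å²/ps. Multiply by 10⁻⁴ to convert to cm²/s, the unit used in the table.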

Experimental Protocols

Protocol 4.1: Training an Allegro Potential for a Novel Organic Molecule

Objective: To develop a robust machine learning interatomic potential (MLIP) for stable, nanosecond-scale MD simulations of a drug-like molecule (e.g., a small-molecule inhibitor).

Materials & Software:

- Reference Data: Quantum mechanics (QM) calculations (DFT, CCSD(T)) for target molecule configurations.

- Software: LAMMPS or ASE for MD; Allegro codebase (PyTorch); JAX MD for integration.

- Hardware: GPU cluster (NVIDIA A100/V100 recommended).

Procedure:

- Dataset Generation:
  - Perform ab initio MD (AIMD) or use enhanced sampling to collect a diverse set of molecular conformations.
  - For each snapshot, compute and store the total energy and atomic forces (plus stress tensors for periodic cells) using QM.
  - Split the data: 80% training, 10% validation, 10% test.
- Model Training:
  - Configure `config.yaml` with hyperparameters (cutoff radius = 5.0 Å, hidden irreps, batch size).
  - Initialize the Allegro model. The loss function is a weighted sum of energy and force errors.
  - Train using the Adam optimizer, monitoring the validation loss for early stopping.
- Validation and Deployment:
  - Evaluate the force mean absolute error (MAE) on the test set. Target < 20 meV/Å for reliable dynamics.
  - Convert the trained PyTorch model to an optimized format (e.g., TorchScript) for MD engines.
  - Integrate with LAMMPS via the ML-IAP (`mliap`) package.
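Three numerical pieces of this protocol can be sketched framework-agnostically in NumPy: the 80/10/10 split, the weighted energy/force loss, and the force MAE check. The per-atom normalization of the energy term and the default weights are assumptions for illustration, not Allegro defaults. The function names are hypothetical.

```python
import numpy as np


def split_indices(n_frames, frac_train=0.8, frac_val=0.1, seed=0):
    """Shuffle frame indices and split them into train/validation/test sets."""
    perm = np.random.default_rng(seed).permutation(n_frames)
    n_train = int(frac_train * n_frames)
    n_val = int(frac_val * n_frames)
    return perm[:n_train], perm[n_train:n_train + n_val], perm[n_train + n_val:]


def energy_force_loss(e_pred, e_ref, f_pred, f_ref, n_atoms, w_energy=1.0, w_force=1.0):
    """Weighted sum of per-atom energy MSE and per-component force MSE.

    e_*: (n_frames,) total energies; f_*: (n_frames, n_atoms, 3) forces;
    n_atoms: (n_frames,) atom counts used to normalize the energy error.
    """
    e_term = np.mean(((e_pred - e_ref) / n_atoms) ** 2)
    f_term = np.mean((f_pred - f_ref) ** 2)
    return w_energy * e_term + w_force * f_term


def force_mae(f_pred, f_ref):
    """Mean absolute error over all force components, in the units of the inputs."""
    return float(np.mean(np.abs(f_pred - f_ref)))
```

With forces in eV/Å, `force_mae(...) * 1000` gives meV/Å for comparison with the < 20 meV/Å target.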

Protocol 4.2: Running an Equivariant MD Simulation for Protein-Ligand Binding

Objective: To simulate the binding dynamics of a ligand to a protein active site with quantum-level accuracy.

Procedure:

- System Preparation:
  - Obtain the initial protein structure (from the PDB) and ligand pose (from docking).
  - Solvate the complex in explicit water (e.g., using a TIP3P water box for the initial geometry) and add ions to neutralize the charge.
  - Crucial: Ensure that all elements and chemical environments in the system (protein, ligand, water, ions) are represented in the training data of the Allegro potential. Machine-learned potentials extrapolate poorly outside their training distribution.
- Simulation Setup (in LAMMPS):
  - Use `pair_style mliap` and `pair_coeff * * allegro_model.ptg` to invoke the Allegro potential.
  - Apply periodic boundary conditions and use a 1 fs timestep.
  - Minimize the energy, then run NVT equilibration followed by an NPT production run.
- Analysis:
  - Calculate the root-mean-square deviation (RMSD) of the ligand in the binding pocket.
  - Compute the protein-ligand interaction energy as a time series.
  - Use Markov state models to estimate binding kinetics (k_on/k_off).
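In the Simulation Setup step, the MD engine repeatedly asks the potential for forces and advances the atoms with a time integrator. A minimal NVE velocity-Verlet sketch makes that coupling explicit. Here `force_fn` is a hypothetical stand-in for the deployed Allegro model, a harmonic toy force replaces it in the demo, and the thermostat and barostat needed for the NVT and NPT stages are omitted.

```python
import numpy as np


def velocity_verlet(pos, vel, masses, force_fn, dt, n_steps):
    """NVE velocity-Verlet integration.

    force_fn(pos) -> (potential_energy, forces) stands in for a trained potential.
    All quantities must use one consistent unit system.
    """
    inv_m = 1.0 / masses[:, None]
    _, forces = force_fn(pos)
    traj, total_energy = [pos.copy()], []
    for _ in range(n_steps):
        vel = vel + 0.5 * dt * forces * inv_m    # half-step velocity update
        pos = pos + dt * vel                     # full-step position update
        e_pot, forces = force_fn(pos)            # forces at the new positions
        vel = vel + 0.5 * dt * forces * inv_m    # second half-step
        e_kin = 0.5 * np.sum(masses[:, None] * vel ** 2)
        traj.append(pos.copy())
        total_energy.append(e_pot + e_kin)
    return np.array(traj), vel, np.array(total_energy)


def harmonic(pos, k=1.0):
    """Toy force field: each atom tethered to the origin by a spring."""
    return 0.5 * k * np.sum(pos ** 2), -k * pos


traj, vel, e_tot = velocity_verlet(
    np.array([[1.0, 0.0, 0.0]]), np.zeros((1, 3)), np.array([1.0]),
    harmonic, dt=0.01, n_steps=628)
print(e_tot.max() - e_tot.min())   # total-energy fluctuation over the run
```

Monitoring total-energy drift in a short NVE run like this is a simple check on a new potential and timestep before the thermostat is switched on.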

Visualization: Workflow and Architecture

[Figure: End-to-End Workflow for Allegro MD Simulations]

[Figure: Allegro's E(3)-Equivariant Neural Network Architecture]

The Scientist's Toolkit: Research Reagent Solutions

| Item/Category | Function in Allegro/MD Simulation |
|---|---|
| Allegro Codebase | The core PyTorch implementation of the E(3)-equivariant GNN architecture, used to train new potentials. |
| Quantum Chemistry Software (e.g., VASP, Gaussian, PySCF) | Generates the high-fidelity reference data (energies, forces) required to train the Allegro model. |
| LAMMPS with ML-IAP Plugin | The main MD engine used to run large-scale production simulations with Allegro potentials. |
| ASE (Atomic Simulation Environment) | A Python toolkit for setting up systems, interfacing between codes, and basic analysis. |
| Interatomic Potential File (.ptg) | The exported, optimized Allegro model, which serves as the "force field" for the MD simulation. |
| Enhanced Sampling Suites (e.g., PLUMED) | Coupled with Allegro-driven MD to probe rare events such as protein folding or chemical reactions. |
| GPU Computing Cluster | Essential hardware for both training (multiple GPUs) and large-scale simulations (single or multi-GPU). |

Within the broader thesis on E(3)-equivariant graph neural networks (E3-GNNs) for molecular research, this application spotlight addresses a central challenge in computational biophysics and drug discovery: accurately predicting protein-ligand binding affinity and enabling rational, structure-based drug design. The fundamental symmetries of 3D space are rotation, translation, and reflection, which together form the E(3) group. Standard convolutional networks are not designed to respect these symmetries. Invariant GNNs do respect them, but only by reducing geometry to distances or handcrafted angular features. E3-GNNs build the symmetries into the architecture, so their predictions are invariant or equivariant to these transformations by construction. This yields more robust, data-efficient, and physically meaningful models for scoring protein-ligand poses, virtual screening, and lead optimization.

Key E3-GNN Architectures and Performance

Table 1: Performance of E3-GNN Models on Key Protein-Ligand Benchmark Datasets

| Model (Year) | Core Geometric Mechanism | PDBBind v2020 Core Set RMSE (pK units) ↓ | CASF-2016 Screening Power (Top 1% Success Rate) ↑ | Key Advantage for Drug Design |
|---|---|---|---|---|
| DiffDock (2022) | SE(3)-Equivariant Diffusion | N/A (Docking) | 52.9% | State-of-the-art blind docking pose prediction. |
| EquiBind (2022) | E(3)-Equivariant Graph Matching | N/A (Docking) | 34.8% | Ultra-fast binding pose prediction. |
| SphereNet (2021) | Spherical message passing (invariant distance, angle, and torsion features) | 1.15 | 38.2% | Captures fine-grained atomic environment geometry. |
| EGNN (2021) | E(n)-Equivariant Message Passing | 1.23 | N/A | Simplicity and efficiency on coordinate graphs. |
| RoseTTAFold All-Atom (2023) | SE(3)-Invariant Attention | N/A (Complex Design) | N/A | Enables de novo protein and binder design. |

Detailed Experimental Protocols

Protocol 3.1: Training an E3-GNN for Affinity Prediction (PDBBind)

Objective: Train a model to predict experimental binding affinity (pKd/pKi) from a 3D protein-ligand complex structure.

- Data Curation: Download the refined set from PDBBind v2020. Split the data by release date (time-based) or by sequence/structure clusters, so that near-duplicate complexes do not appear in both the training and test sets and inflate the measured performance.
- Graph Construction:
  - For each complex, represent atoms as nodes and connect atoms within a cutoff distance (e.g., 5 Å) by edges.
  - Node features: atomic number, hybridization, valence, partial charge.
  - Edge features: distance (scalar), optionally direction (vector).
  - Coordinates: use the 3D Cartesian coordinates of each atom as the geometric attribute.
- Model Architecture: Implement an EGNN or SphereNet variant.
  - In an EGNN, each layer updates both the node embeddings (invariant) and the coordinates (equivariant). SphereNet instead updates only invariant embeddings, computed from distance, angle, and torsion features.
  - The final layer pools the node embeddings into a global complex representation, which a regression head maps to a pK prediction.
- Training: Use a mean squared error (MSE) loss between predicted and experimental pK, and optimize with AdamW. Because the pooled output is E(3)-invariant by construction, random rotation/translation augmentation of the input complex does not change the predictions and is unnecessary. Applying such transformations remains useful as a test that the implementation is correct.
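The EGNN option in the Model Architecture step can be sketched in NumPy. This is a forward pass only, with random untrained weights. It follows the update equations of Satorras et al. (2021): messages are computed from invariant squared distances, and coordinates are updated along relative position vectors. Several choices here are assumptions: the neighbor-averaged coordinate update, the fixed radius graph, the layer sizes, the SiLU activation, and the sum-pooled linear readout. All names are hypothetical. The last lines compare the pooled prediction before and after a random rotation or reflection plus translation.

```python
import numpy as np


def radius_graph(pos, cutoff):
    """Directed edges (i, j), i != j, between atoms closer than `cutoff`."""
    dist = np.linalg.norm(pos[:, None, :] - pos[None, :, :], axis=-1)
    src, dst = np.nonzero((dist < cutoff) & ~np.eye(len(pos), dtype=bool))
    return src, dst


def mlp(x, w1, w2):
    """Two-layer perceptron with a SiLU nonlinearity."""
    z = x @ w1
    return (z / (1.0 + np.exp(-z))) @ w2


class EGNNLayer:
    """One untrained E(n)-equivariant layer in the style of Satorras et al. (2021)."""

    def __init__(self, dim, hidden, rng, scale=0.1):
        def w(*shape):
            return rng.normal(scale=scale, size=shape)
        self.phi_e = (w(2 * dim + 1, hidden), w(hidden, hidden))   # message MLP
        self.phi_x = (w(hidden, hidden), w(hidden, 1))             # coordinate MLP
        self.phi_h = (w(dim + hidden, hidden), w(hidden, dim))     # node MLP

    def __call__(self, h, x, src, dst):
        rel = x[src] - x[dst]                              # x_i - x_j (equivariant)
        d2 = np.sum(rel ** 2, axis=-1, keepdims=True)      # |x_i - x_j|^2 (invariant)
        m = mlp(np.concatenate([h[src], h[dst], d2], axis=-1), *self.phi_e)
        # x_i <- x_i + mean_j (x_i - x_j) * phi_x(m_ij)
        dx = np.zeros_like(x)
        np.add.at(dx, src, rel * mlp(m, *self.phi_x))
        deg = np.maximum(np.bincount(src, minlength=len(x)), 1)[:, None]
        # h_i <- h_i + phi_h(h_i, sum_j m_ij)
        m_sum = np.zeros((len(h), m.shape[1]))
        np.add.at(m_sum, src, m)
        h_new = h + mlp(np.concatenate([h, m_sum], axis=-1), *self.phi_h)
        return h_new, x + dx / deg


def predict_affinity(h, x, layers, w_out, cutoff=5.0):
    """Sum-pool invariant node embeddings and apply a linear head (predicted pK)."""
    src, dst = radius_graph(x, cutoff)    # graph kept fixed across layers
    for layer in layers:
        h, x = layer(h, x, src, dst)
    return float(h.sum(axis=0) @ w_out)


def random_orthogonal(rng):
    """Random 3x3 orthogonal matrix via QR."""
    q, r = np.linalg.qr(rng.normal(size=(3, 3)))
    return q * np.sign(np.diag(r))


rng = np.random.default_rng(0)
h0, x0 = rng.normal(size=(12, 8)), rng.normal(scale=2.0, size=(12, 3))
layers, w_out = [EGNNLayer(8, 16, rng) for _ in range(2)], rng.normal(size=8)
q, t = random_orthogonal(rng), np.array([1.0, -2.0, 0.5])
print(predict_affinity(h0, x0, layers, w_out),
      predict_affinity(h0, x0 @ q.T + t, layers, w_out))   # equal up to round-off
```

In practice the node features would be the atomic descriptors listed above, and the weights would be trained with the MSE loss.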

Protocol 3.2: Structure-Based Virtual Screening with a Pre-Trained E3-GNN

Objective: Rank a library of compounds for binding potency against a fixed protein target.

- Target Preparation: Obtain the 3D structure of the target binding site (experimental or homology model). Generate a protonated, energy-minimized structure.

- Ligand Library Preparation: Prepare a database of 3D small molecule conformers in a suitable format (e.g., SDF).

- Pose Generation: For each ligand, generate multiple putative docking poses within the binding site using a fast method (e.g., SMINA, QuickVina 2.1). This step is necessary to create initial 3D complexes for the GNN.

- Affinity Scoring: Process each generated protein-ligand pose through the trained E3-GNN from Protocol 3.1.

- Ranking & Analysis: Rank ligands by their predicted binding affinity. The top-ranking compounds are selected for in vitro validation.
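The ranking step needs a rule for collapsing several scored poses per ligand into one value, which the protocol does not specify. The sketch below assumes that the best-scoring pose (maximum predicted pK) represents each ligand; the mean over poses is one alternative. The function names and the 1% selection fraction are illustrative.

```python
from collections import defaultdict


def rank_ligands(pose_scores, aggregate=max):
    """Rank ligands by an aggregate of their per-pose predicted pK values.

    pose_scores: iterable of (ligand_id, predicted_pK) pairs, one per docked pose.
    aggregate: collapses several poses into one score (best pose by default).
    """
    per_ligand = defaultdict(list)
    for ligand_id, score in pose_scores:
        per_ligand[ligand_id].append(score)
    ranked = [(lig, aggregate(scores)) for lig, scores in per_ligand.items()]
    ranked.sort(key=lambda item: item[1], reverse=True)   # higher pK = tighter binding
    return ranked


def select_top(ranked, fraction=0.01):
    """Keep the top fraction of the ranked list (always at least one compound)."""
    n_keep = max(1, int(round(fraction * len(ranked))))
    return [lig for lig, _ in ranked[:n_keep]]
```

For example, `rank_ligands([("A", 6.1), ("A", 7.0), ("B", 8.2)])` ranks ligand B first, because its best pose scores higher than any pose of A.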

Protocol 3.3: Binding Pose Validation using Molecular Dynamics (MD)

Objective: Validate the stability of a binding pose predicted by an E3-GNN model (e.g., DiffDock).

- System Setup: Place the predicted complex in a solvation box (e.g., TIP3P water). Add ions to neutralize charge.

- Energy Minimization: Perform steepest descent minimization to remove steric clashes.

- Equilibration: Run a short (100-250 ps) MD simulation in the NVT ensemble (constant number of particles, volume, and temperature), followed by the NPT ensemble (constant number of particles, pressure, and temperature), to stabilize the temperature (310 K) and pressure (1 bar).

- Production Run: Execute an extended unbiased MD simulation (e.g., 50-100 ns) with a 2 fs timestep. A 2 fs timestep requires constraining bonds to hydrogen (e.g., with SHAKE or LINCS).

- Analysis: After superimposing each frame on the protein binding site, calculate the root-mean-square deviation (RMSD) of the ligand's heavy atoms relative to the predicted pose over the trajectory. A stable, low RMSD (< 2 Å) suggests a realistic binding pose.
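The ligand RMSD depends on how frames are superimposed. Fitting on the binding site and then measuring the ligand without refitting it captures drift of the pose within the pocket. The sketch below does this with the Kabsch algorithm in NumPy. The choice of binding-site atoms (e.g., pocket Cα atoms) and the function names are assumptions.

```python
import numpy as np


def kabsch(mobile, reference):
    """Proper rotation R and translation t minimizing |mobile @ R.T + t - reference|."""
    mu_m, mu_r = mobile.mean(axis=0), reference.mean(axis=0)
    h = (mobile - mu_m).T @ (reference - mu_r)       # 3x3 covariance matrix
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))           # avoid returning a reflection
    rot = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return rot, mu_r - mu_m @ rot.T


def ligand_rmsd(frame_site, frame_ligand, ref_site, ref_ligand):
    """Ligand heavy-atom RMSD after superimposing the frame's binding site on the reference.

    The ligand itself is not refit, so the value measures drift of the pose in the pocket.
    """
    rot, t = kabsch(frame_site, ref_site)
    moved = frame_ligand @ rot.T + t
    return float(np.sqrt(np.mean(np.sum((moved - ref_ligand) ** 2, axis=1))))
```

Applied frame by frame, `ligand_rmsd(...) < 2.0` gives the stability criterion used above.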

The Scientist's Toolkit: Research Reagent Solutions

Table 2: Essential Tools for E3-GNN-Based Drug Design

| Item | Category | Function & Relevance |
|---|---|---|
| PDBBind Database | Dataset | Curated experimental protein-ligand complexes with binding affinities; the primary benchmark for affinity prediction models. |
| CASF Benchmark | Evaluation Suite | Standardized toolkit (scoring, docking, screening, ranking) for rigorous, apples-to-apples comparison of methods. |
| AlphaFold2 DB / RoseTTAFold | Protein Structure | Provides high-accuracy predicted structures for targets without experimental data, expanding the applicability of structure-based design. |
| DiffDock / EquiBind | Docking Software | E3-equivariant models that directly predict ligand pose, offering superior speed and/or accuracy vs. traditional sampling/scoring. |
| OpenMM or GROMACS | MD Simulation | Validates the stability of predicted poses and refines affinity estimates via free energy perturbation (FEP). |
| ChEMBL / ZINC20 | Compound Library | Large-scale, annotated chemical databases for virtual screening and training data augmentation. |
| RDKit | Cheminformatics | Fundamental toolkit for parsing SDF/PDB files, generating conformers, and calculating molecular descriptors. |
| PyTorch Geometric (PyG) / DGL | GNN Framework | Libraries with built-in support for implementing E(3)-equivariant graph neural network layers. |

Integrating E(3)-equivariant GNNs into the drug design pipeline marks a significant paradigm shift. By inherently modeling 3D geometry and physical symmetries, these models achieve more accurate and generalizable predictions of binding affinity and pose. The future of this field lies in the integration of de novo molecule generation conditioned on E(3)-equivariant features, the prediction of binding kinetics (on/off rates), and the application to more challenging targets like protein-protein interactions and membrane proteins. This direction, as outlined in the overarching thesis, promises to accelerate the discovery of novel therapeutics.

Overcoming Practical Hurdles: Training, Data, and Performance Optimization

Common Training Instabilities and Convergence Issues in Equivariant Networks

Within the broader thesis on advancing molecular property prediction using E(3)-equivariant graph neural networks (GNNs), understanding and mitigating training instabilities is paramount. These models, while powerful for capturing geometric and quantum mechanical properties of molecules, exhibit unique failure modes distinct from standard deep neural networks. This document outlines prevalent issues, provides quantitative comparisons, and details experimental protocols for stabilization.

Identified Instabilities & Quantitative Analysis

Table 1: Common Instabilities in E(3)-Equivariant GNNs

| Issue Category | Specific Manifestation | Typical Impact on Loss | Common in Architectures |
|---|---|---|---|
| Activation/Gradient Explosion | Unbounded growth of spherical harmonic features | NaN loss after a few epochs | NequIP, SE(3)-Transformers, TorchMD-NET |
| Normalization Collapse | Vanishing signals in invariant pathways | Loss plateau, near-zero gradients | Models with Tensor Field Network (TFN) layers |
| Irreps Weight Initialization | Poor scaling of higher-order feature maps | High initial loss, slow or no convergence | Any model with Clebsch-Gordan decomposed layers |
| Optimizer Instability | Oscillatory loss with AdamW, especially with weight decay | Loss spikes >50% above baseline | Models with many channel parameters (e.g., MACE) |
| Coordinate Scaling Sensitivity | Drastic performance change between Ångström and Bohr units | RMSE variation >0.1 eV on QM9 | All E(3)-equivariant models |
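Two rows of the table above concern numerical scale. The sketch below shows two remedies. First, it converts QM reference data from atomic units so that positions, energies, and forces change units together; the cutoff radius and any radial-basis parameters must then also be given in Å. Second, it applies global-norm gradient clipping, a generic mitigation for exploding gradients that is not specific to any architecture listed. The function names are hypothetical, and the constants are CODATA 2018 values.

```python
import numpy as np

BOHR_TO_ANGSTROM = 0.529177210903    # CODATA 2018 Bohr radius in Å
HARTREE_TO_EV = 27.211386245988      # CODATA 2018 Hartree energy in eV


def atomic_units_to_ev_angstrom(pos_bohr, energy_ha, forces_ha_per_bohr):
    """Convert positions, energies and forces together so they stay consistent."""
    pos = pos_bohr * BOHR_TO_ANGSTROM
    energy = energy_ha * HARTREE_TO_EV
    forces = forces_ha_per_bohr * (HARTREE_TO_EV / BOHR_TO_ANGSTROM)   # ~51.42 eV/Å per Ha/Bohr
    return pos, energy, forces


def clip_by_global_norm(grads, max_norm):
    """Rescale gradient arrays so their joint L2 norm is at most max_norm.

    Raises on a non-finite norm so that a NaN step can be skipped rather than applied.
    """
    total = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    if not np.isfinite(total):
        raise FloatingPointError("non-finite gradient norm")
    scale = min(1.0, max_norm / (total + 1e-12))
    return [g * scale for g in grads], total
```

Deep learning frameworks ship equivalents of the clipping step. The point here is that clipping bounds the update size without changing the gradient direction.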

Table 2: Stabilization Techniques & Efficacy (QM9 Benchmark, ε_HOMO Target)

| Technique | Average MAE (eV) Before | Average MAE (eV) After | Relative Improvement |
|---|---|---|---|