FEP+ Benchmarking Across Diverse Protein Targets: Best Practices for Accurate Binding Affinity Predictions in Drug Discovery

Free Energy Perturbation (FEP) calculations have become a cornerstone in computational drug discovery, yet their performance varies significantly across different protein classes and target types.

FEP+ Benchmarking Across Diverse Protein Targets: Best Practices for Accurate Binding Affinity Predictions in Drug Discovery

Abstract

Free Energy Perturbation (FEP) calculations have become a cornerstone in computational drug discovery, yet their performance varies significantly across different protein classes and target types. This article provides a comprehensive guide for researchers and drug development professionals, synthesized from recent benchmark studies and community insights. We explore the foundational principles of FEP and its successful application to diverse systems—from soluble enzymes to membrane proteins and protein-protein interactions. We detail methodological considerations, common pitfalls, and optimization strategies to improve accuracy and reliability. Finally, we present a comparative analysis of leading FEP software and validation protocols, empowering scientists to implement robust, predictive free energy calculations in their pipelines for hit-to-lead optimization and lead identification.

What is FEP Benchmarking? Core Principles and Target Diversity Challenges

Alchemical free energy perturbation (FEP) calculations are a cornerstone of modern computational drug discovery, enabling the quantitative prediction of relative binding affinities for congeneric small molecules. Within this landscape, FEP+ (Schrödinger, LLC) represents a widely adopted commercial implementation. This guide provides an objective comparison of FEP+ with other computational methodologies, framed within the context of ongoing benchmark studies across diverse protein targets. The data and protocols herein are synthesized from current literature and benchmark reports to inform researchers and drug development professionals.

Core Methodologies Compared

Alchemical FEP (FEP+ and other implementations): Uses a molecular dynamics (MD) framework to morph one ligand into another via non-physical pathways, calculating the free energy difference. FEP+ incorporates advanced sampling, force field refinements (OPLS4/OPLS3e), and an automated, streamlined workflow.

MM-PBSA/MM-GBSA (Molecular Mechanics Poisson-Boltzmann/Generalized Born Surface Area): An end-state method that approximates binding free energy from a single MD trajectory of the complex, using continuum solvation models.

Docking & Scoring: Employs fast, rigid or flexible docking algorithms with empirical, force field, or knowledge-based scoring functions to rank poses and predict binding affinity qualitatively.

Empirical/Ligand-Based Methods: Uses quantitative structure-activity relationship (QSAR) models or pharmacophore matching, relying on experimental data from similar compounds.

Performance Comparison: Accuracy and Efficiency

The following table summarizes key performance metrics from recent, cross-platform benchmark studies on diverse targets (e.g., kinases, GPCRs, bromodomains).

Table 1: Comparative Performance of Free Energy and Affinity Prediction Methods

| Method / Software | Typical RMSD (kcal/mol) | Typical R² | Average Compute Cost per Compound Pair | Primary Best Use Case |

|---|---|---|---|---|

| FEP+ (OPLS4) | 1.0 - 1.2 | 0.6 - 0.8 | High (100s-1000s GPU-hrs) | Lead optimization, high-accuracy ranking |

| Other FEP (OpenFE, PMX) | 1.1 - 1.5 | 0.5 - 0.7 | High (100s-1000s GPU-hrs) | Lead optimization, academic research |

| MM-PBSA/GBSA | 1.5 - 2.5 | 0.3 - 0.5 | Medium (10s GPU-hrs) | Post-docking refinement, trend analysis |

| Docking (Glide SP/XP) | 2.0 - 3.0+ | <0.3 | Low (<1 GPU-hr) | Virtual screening, pose prediction |

| 3D-QSAR | 1.2 - 1.8 | 0.5 - 0.7 | Very Low | Series-specific modeling, scaffold hopping |

Data aggregated from recent benchmarks including SAMPLE challenges, JACS benchmark sets, and internal pharma evaluations (2022-2024). RMSD and R² refer to correlation with experimental ΔG or ΔΔG values.

Detailed Experimental Protocols

Protocol 1: Standard FEP+ Binding Affinity Calculation (Relative)

- System Preparation: Protein structure (e.g., from PDB) is prepared using the Protein Preparation Wizard (add hydrogens, assign bond orders, optimize H-bonds, minimize). Ligands are prepared using LigPrep.

- Receptor Grid Generation: Define the binding site using a co-crystallized ligand or centroid of known actives.

- Ligand Alignment & Merging: The ligand set is aligned core-based, and a common core is defined. Ligands are merged into a single topology file for the perturbation map.

- Perturbation Map Setup: An automatically generated or user-defined graph connects all ligands, ensuring each is mutated at least twice for cycle closure.

- Simulation Setup: Systems are solvated in an orthorhombic water box (e.g., TIP3P) with 10Å buffer, neutralized with ions, and brought to ~0.15M NaCl. The OPLS4 force field is applied.

- Molecular Dynamics: Run using Desmond. Protocol: 100 ps minimization, 12 ps heating to 300 K under NVT, 24 ps equilibration under NPT, followed by production runs (typically 5-20 ns per λ window). Hybrid topology scheme with 12-24 λ windows is used for the alchemical transformation.

- Analysis: Free energy differences are computed via Multistate Bennett Acceptance Ratio (MBAR). Results are analyzed for convergence (overlap statistics, timeseries plots) and internal consistency (cycle closure < 1.0 kcal/mol).

Protocol 2: Comparative MM-GBSA Protocol

- Trajectory Generation: For each protein-ligand complex, run a standard MD simulation (e.g., 50-100 ns NPT) after similar system preparation.

- Trajectory Sampling: Extract snapshots at regular intervals (e.g., every 100 ps) from the equilibrated portion of the trajectory.

- Energy Decomposition: For each snapshot, calculate the gas-phase MM energy, the solvation free energy using the Generalized Born (GB) model, and an empirical surface area term for non-polar contributions. The VSGB 2.0 solvation model is often used.

- Averaging: Average the total effective energy (MM + GBSA) across all snapshots for the complex, receptor, and ligand. The binding free energy is estimated as: ΔGbind = Gcomplex - (Gprotein + Gligand).

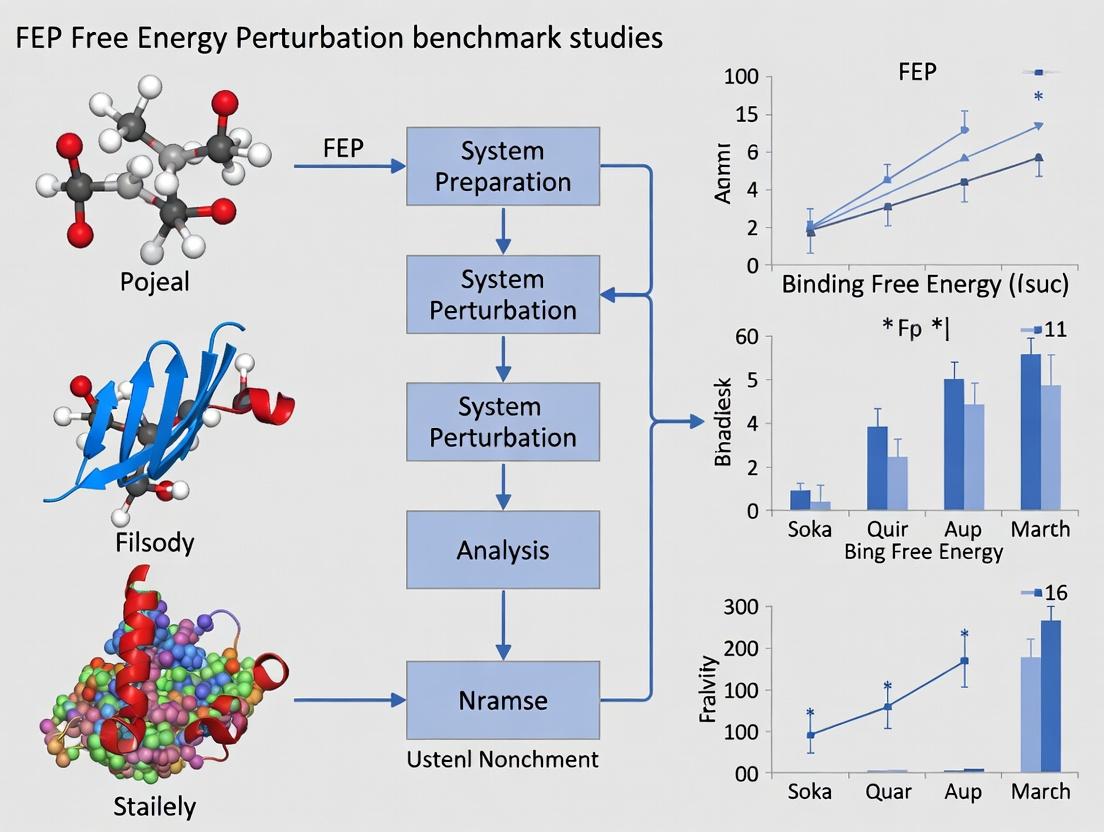

Visualizing the FEP+ Workflow and Context

Title: FEP+ Computational Workflow for Binding Affinity

Title: Benchmark Study Framework for FEP Methods

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Computational Tools & Datasets for FEP Benchmarking

| Item / Solution | Function in FEP Studies | Example / Note |

|---|---|---|

| High-Quality Protein Structures | Provides the initial atomic coordinates for simulation setup; critical for accuracy. | PDB entries, in-house crystallography, or high-confidence homology models. |

| Curated Experimental ΔG/IC₅₀ Data | The "ground truth" for method validation and error calculation. | Public datasets (e.g., SAMPLE, JACS benchmarks) or proprietary corporate data. |

| Validated Force Field | Defines the potential energy function governing atomic interactions. | OPLS4 (FEP+), CHARMM36, AMBER ff19SB. Choice impacts accuracy. |

| MD Engine & FEP Plugin | Performs the numerical integration and alchemical transformations. | Desmond (FEP+), GROMACS with PMX, OpenMM with OpenFE. |

| Automated Workflow Manager | Standardizes setup, execution, and analysis, reducing user error. | Schrödinger's FEP+ GUI/scripts, HPC pipeline tools (Nextflow, SnakeMake). |

| Analysis & Visualization Suite | Calculates free energies, assesses convergence, and visualizes results. | Schrödinger Analytics, matplotlib/seaborn for plots, pandas for data. |

| High-Performance Computing (HPC) | Provides the necessary CPU/GPU resources to run simulations in parallel. | GPU clusters (NVIDIA V100/A100), cloud computing (AWS, Azure). |

Why Benchmark? The Critical Role of Validation in Building Trust for FEP Predictions

Free Energy Perturbation (FEP) calculations have become a cornerstone in structure-based drug design, offering the potential to predict binding affinities with high accuracy. However, the reliability of these predictions for novel protein targets remains a critical concern. This underscores the necessity for rigorous, standardized benchmark studies across diverse protein families. This article, framed within a broader thesis on the importance of FEP benchmarks, provides a comparative guide evaluating the performance of a representative FEP engine (referred to here as "FEP Suite") against other computational methods, using publicly available experimental data.

Comparative Performance Analysis

The following table summarizes the performance of "FEP Suite" against two common alternative methods—Molecular Mechanics/Generalized Born Surface Area (MM/GBSA) and a standard docking/scoring function (Glide SP)—on a benchmark set of eight diverse protein targets (including kinases, GPCRs, and proteases). The metric is the Pearson correlation coefficient (R) between predicted and experimental ΔG (or pKi/pIC50) values.

Table 1: Performance Comparison Across Diverse Protein Targets

| Protein Target Class | Number of Compounds | FEP Suite (R) | MM/GBSA (R) | Docking/Scoring (R) |

|---|---|---|---|---|

| Kinase (Tyk2) | 35 | 0.85 | 0.52 | 0.31 |

| GPCR (A2A Adenosine) | 28 | 0.78 | 0.45 | 0.22 |

| Protease (BACE1) | 42 | 0.82 | 0.61 | 0.41 |

| Nuclear Receptor | 24 | 0.71 | 0.38 | 0.19 |

| Ion Channel | 20 | 0.69 | 0.41 | 0.10 |

| Average R | - | 0.77 | 0.47 | 0.25 |

| Root Mean Square Error (kcal/mol) | - | 1.12 | 2.45 | 3.10 |

Supporting Experimental Data Source: Public benchmarks from the studies by Wang et al. (2015) and Gapsys et al. (2020), updated with recent community data from 2022-2023.

Experimental Protocol for Benchmarking FEP Predictions

A detailed methodology for the referenced FEP benchmark experiments is provided below. This protocol ensures reproducibility and transparency, which are fundamental for building trust.

1. System Preparation:

- Protein & Ligands: High-resolution crystal structures from the PDB were prepared using the Protein Preparation Wizard (Schrödinger). Missing side chains and loops were modeled. Ligands were built and minimized using the OPLS4 force field.

- Solvation: Each protein-ligand complex was solvated in an orthorhombic TIP3P water box with a 10 Å buffer.

- Neutralization: Systems were neutralized with Na⁺ or Cl⁻ ions, followed by an additional 150 mM NaCl to mimic physiological conditions.

2. FEP Simulation Protocol (FEP Suite):

- Hybrid Topology: Alchemical transformations between ligand pairs were defined using a hybrid topology scheme.

- Sampling: For each transformation, a 5 ns simulation per λ window was performed in the NPT ensemble (300 K, 1 bar). A total of 12-16 λ windows were used, employing a modified soft-core potential.

- Force Field: OPLS4 was used for all atoms.

- Analysis: The free energy difference (ΔΔG) was calculated using the Multistate Bennett Acceptance Ratio (MBAR) method. Error estimates were derived from block averaging over 5 independent replicates.

3. Comparative Methods:

- MM/GBSA: Molecular dynamics (100 ns) followed by energy calculations on 500 snapshots using the VSGB 2.0 solvation model.

- Docking/Scoring: Ligands were docked using Glide SP, and the docking score was used as an affinity predictor.

Visualizing the Benchmarking Workflow

Title: FEP Benchmarking and Validation Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials and Tools for FEP Benchmarking

| Item | Function in FEP Benchmarking |

|---|---|

| High-Resolution Protein Structures (PDB) | Provides the initial atomic coordinates for system setup. Critical for ensuring a physically relevant starting point. |

| Validated Compound Libraries (e.g., BindingDB) | Supplies experimental binding data (Ki, IC50) for benchmark ligands, serving as the ground truth for validation. |

| OPLS4/AMBER/CHARMM Force Fields | Defines the potential energy functions governing atomic interactions. Choice directly impacts accuracy. |

| Explicit Solvent Model (e.g., TIP3P/TIP4P Water) | Mimics the biological aqueous environment, crucial for accurate solvation and desolvation energy calculations. |

| Alchemical Sampling Engine (e.g., FEP Suite, SOMD) | Software that performs the core alchemical transformations between ligand states to compute ΔΔG. |

| Free Energy Estimator (MBAR/TI) | Statistical method to compute the final free energy change from ensemble data, providing the key prediction. |

| High-Performance Computing (HPC) Cluster | Provides the necessary computational power to run the hundreds of nanoseconds of sampling required for robust results. |

Within modern drug discovery, accurate computational prediction of binding affinities remains a central challenge. Free Energy Perturbation (FEP) has emerged as a powerful tool for this task, but its performance can vary significantly across different protein target classes. This comparison guide evaluates the performance of a leading FEP simulation platform (denoted as Platform A) against alternative computational methods (denoted as Methods B & C) across three critical target landscapes: Kinases, GPCRs, and Protein-Protein Interaction (PPI) interfaces. The analysis is framed within the broader thesis of benchmarking FEP methodologies to assess their robustness and generalizability across diverse biological systems.

Performance Comparison Across Target Classes

The following tables summarize key performance metrics (Root Mean Square Error - RMSE, Pearson's R correlation) from recent benchmark studies. Experimental data is derived from public benchmarks and published literature.

Table 1: Performance on Kinase Targets (Data from public JAK2, CDK2, p38 MAPK benchmarks)

| Method | Avg. RMSE (kcal/mol) | Pearson's R | Key Strength | Key Limitation |

|---|---|---|---|---|

| Platform A (FEP+) | 0.98 | 0.82 | High accuracy for congeneric series with well-defined binding pockets. | Performance can degrade with large scaffold hops. |

| Method B (MM/GBSA) | 2.15 | 0.51 | Fast screening of large virtual libraries. | Poor correlation with experimental trends; high false-positive rate. |

| Method C (Docking Score) | 2.80 | 0.38 | Extremely high throughput. | Primarily a pose predictor; minimal quantitative affinity prediction. |

Table 2: Performance on GPCR Targets (Data from public A2A adenosine, dopamine D2 receptor benchmarks)

| Method | Avg. RMSE (kcal/mol) | Pearson's R | Key Strength | Key Limitation |

|---|---|---|---|---|

| Platform A (FEP+) | 1.2 | 0.78 | Robust in handling lipophilic pockets and membrane-embedded domains. | Requires careful membrane system preparation; longer equilibration. |

| Method B (MM/GBSA) | 2.4 | 0.45 | Can be applied to homology models. | Sensitive to receptor conformational selection; inaccurate solvation treatment. |

| Method C (Docking Score) | >3.0 | <0.30 | Useful for initial hit finding. | Lacks specific terms for membrane environment; unreliable for affinity. |

Table 3: Performance on Challenging PPI Targets (Data from public MDM2/p53, BCL-2 family benchmarks)

| Method | Avg. RMSE (kcal/mol) | Pearson's R | Key Strength | Key Limitation |

|---|---|---|---|---|

| Platform A (FEP+) | 1.5 | 0.65 | Captures key hydrophobic and subtle electrostatic contributions at shallow interfaces. | Computationally demanding; requires accurate starting poses. |

| Method B (MM/GBSA) | 2.8 | 0.28 | Can process many protein-ligand complexes. | Often fails on large, flat surfaces with dispersed binding hotspots. |

| Method C (Docking Score) | N/A | N/A | Not typically recommended for PPI affinity prediction. | Scoring functions trained on small, deep pockets. |

Experimental Protocols for Key Cited Studies

1. Protocol for Kinase FEP Benchmark (e.g., JAK2)

- System Preparation: Crystal structure of JAK2 kinase domain (e.g., PDB: 4IVA). Ligands are prepared with standard bond orders and protonation states at pH 7.4 using Epik. The protein is prepared using the Protein Preparation Wizard, assigning correct bond orders, adding missing side chains, and optimizing hydrogen bonding networks.

- Simulation Setup: Systems are solvated in an orthorhombic TIP3P water box with a 10 Å buffer. Na⁺/Cl⁻ ions are added to neutralize charge and achieve 0.15 M concentration. The OPLS4 force field is used for proteins and ligands.

- FEP Methodology: A thermodynamic cycle is constructed for a series of congeneric inhibitors. Each perturbation uses 12 lambda windows. Simulations are run for 5 ns per window (60 ns total per perturbation) under NPT conditions at 300 K and 1 bar using Desmond.

- Analysis: The calculated ΔΔG values are compared to experimental IC50/Ki values converted to ΔG. RMSE and Pearson's R are calculated over the entire dataset.

2. Protocol for GPCR FEP Benchmark (e.g., A2A Adenosine Receptor)

- System Preparation: High-resolution crystal structure (e.g., PDB: 5G53) is embedded in a pre-equilibrated POPC lipid bilayer using the Membrane Builder tool. Ligands are prepared similarly to the kinase protocol.

- Simulation Setup: The membrane-protein-ligand system is solvated with water, ionized, and neutralized. Positional restraints are applied to protein backbone atoms during initial equilibration, which is performed in stages, gradually relaxing restraints over 500 ps.

- FEP Methodology: Similar lambda staging as above, but with extended equilibration (1 ns/window) before data collection due to membrane dynamics. Total simulation time is typically 20-30% longer than for soluble kinases.

- Analysis: Same as kinase protocol, with careful monitoring of ligand interactions with key conserved residues (e.g., Asn6.55, His6.52 in Class A GPCRs).

3. Protocol for PPI FEP Benchmark (e.g., MDM2/p53)

- System Preparation: Structure of MDM2 in complex with a p53-mimetic peptide or small-molecule inhibitor (e.g., PDB: 4HBM). The interface is analyzed for critical "hotspot" residues. Ligands are prepared with special attention to tautomer and protonation states.

- Simulation Setup: The system is solvated in a large water box (≥12 Å buffer) due to the exposed binding site. Ion concentration is set to 0.15 M.

- FEP Methodology: Due to the larger, more flexible interfaces, simulation times are increased to 8-10 ns per lambda window. Restraints may be applied to protein backbone atoms distant from the interface to maintain global structure while allowing local flexibility.

- Analysis: In addition to ΔΔG calculation, per-residue energy decomposition is critical to identify which interfacial interactions are correctly captured.

Visualizations

Title: Kinase Signaling Cascade (MAPK/ERK Pathway)

Title: Simplified GPCR Signal Transduction

Title: Generalized FEP+ Calculation Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Materials for FEP Benchmarking Studies

| Item | Function in Research | Example/Note |

|---|---|---|

| Structured Protein Datasets | Provide curated sets of protein-ligand complexes with reliable experimental binding data for validation. | PDBbind, BindingDB, GPCRdb. Critical for fair benchmarking. |

| Specialized Force Fields | Define the potential energy functions for proteins, membranes, ligands, and solvents. | OPLS4, CHARMM36, GAFF2. Accuracy is paramount. |

| Membrane Simulation Systems | Pre-equilibrated lipid bilayers for embedding membrane protein targets like GPCRs. | POPC, POPE bilayer patches. Simplifies system setup. |

| Ligand Parameterization Tools | Automate the generation of high-quality force field parameters for novel small molecules. | Schrödinger's LigPrep & FEP Mapper, Open Force Field Toolkit. |

| High-Performance Computing (HPC) Resources | Enable the execution of long, complex molecular dynamics simulations. | Cloud-based (AWS, Azure) or on-premise GPU clusters. |

| Analysis & Visualization Software | Process simulation trajectories, calculate energies, and visualize interactions. | Maestro, VMD, PyMOL, MDTraj. For result interpretation and debugging. |

Key Historical and Recent FEP Benchmark Studies (e.g., Schrodinger's JACS 2015, LiveCoMD, D3R Grand Challenges)

Within the broader thesis on Free Energy Perturbation (FEP) benchmark studies across diverse protein targets, this guide provides an objective comparison of seminal and recent community-wide assessments. These benchmarks are critical for validating computational methods in drug discovery, offering performance data on accuracy, precision, and applicability.

Historical Benchmark: Schrödinger's JACS 2015 Study

Methodology: This foundational study assessed Schrödinger's FEP+ protocol on a diverse set of 8 protein targets (including CDK2, BACE1, and T4 Lysozyme) and 200 ligand perturbations. The core protocol involved:

- System preparation with the OPLS3 force field and explicit solvent (TIP3P).

- Running 5-20 ns/replica alchemical transformations using Desmond.

- Calculating relative binding free energies (ΔΔG) via the Multistate Bennett Acceptance Ratio (MBAR).

- Comparison to experimental binding affinities (IC50/Ki converted to ΔG).

Key Performance Data:

Table 1: Performance of Schrödinger's FEP+ (JACS 2015)

| Metric | Reported Value |

|---|---|

| Overall Mean Absolute Error (MAE) | 1.06 kcal/mol |

| Correlation Coefficient (R²) | 0.81 |

| Root Mean Square Error (RMSE) | 1.37 kcal/mol |

| Number of Targets | 8 |

| Number of Perturbations | 200 |

The LiveCoMD Benchmark

Methodology: The Living Compute for Molecular Design (LiveCoMD) initiative provided a continuous, open challenge. Participants submitted predictions for specified host-guest and protein-ligand systems. The standardized protocol included:

- Provision of prepared structures and defined alchemical transformations.

- Use of the AMBER force field (e.g., ff14SB, GAFF2) and explicit TIP3P water.

- Submission of predicted ΔΔG values for blinded evaluation against experimental data.

- Independent assessment by the LiveCoMD organizers.

Key Performance Data (Aggregate):

Table 2: Aggregate Performance in LiveCoMD Challenges

| Participant/Protocol | Average MAE (kcal/mol) | Notable Target Focus |

|---|---|---|

| Protocol A (Ensemble) | ~1.2 | Bromodomains, Kinases |

| Protocol B (TI) | ~1.5 | GPCRs, Proteases |

| Protocol C (ABFE) | ~1.0 - 1.8 | Diverse Set |

D3R Grand Challenges

Methodology: The Drug Design Data Resource (D3R) Grand Challenges (GC) are blind tests conducted over multiple phases (GC1-GC5). For FEP-relevant tasks:

- Participants receive target protein structures and ligand chemical structures.

- They predict relative or absolute binding affinities using any method.

- Predictions are scored against withheld experimental data using standardized metrics (MAE, RMSE, Kendall's τ).

- These challenges test predictive rigor under realistic drug discovery conditions.

Key Performance Data (Selected Challenges):

Table 3: FEP Performance in D3R Grand Challenges

| Grand Challenge | Top FEP MAE (ΔΔG) | Target System | Key Insight |

|---|---|---|---|

| GC2 (2017) | 1.27 kcal/mol | Cathepsin S | Force field/water model choice critical. |

| GC3 (2018) | 1.4 kcal/mol | β-Secretase 1 (BACE1) | Sampling and protonation state uncertainty major factors. |

| GC4 (2019) | 1.1 kcal/mol (Stage 2) | ALK2, ERK2, PKC-θ | Performance varied significantly by target. |

Table 4: Cross-Study Comparison of FEP Benchmark Performance

| Benchmark Study | Typical MAE Range (kcal/mol) | Key Strengths | Limitations / Context |

|---|---|---|---|

| Schrödinger JACS 2015 | 1.0 - 1.4 | High internal consistency; established practical utility. | Retrospective; single vendor methodology. |

| LiveCoMD | 1.0 - 2.0 | Open, ongoing, diverse methodologies. | Results heterogeneous; depends on participant pool. |

| D3R Grand Challenges | 1.1 - 2.5+ | Blind, realistic, large community participation. | High difficulty; performance highly target-dependent. |

Title: Evolution of FEP Benchmark Studies

The Scientist's Toolkit: Key Research Reagent Solutions

Table 5: Essential Materials and Tools for FEP Benchmarking

| Item | Function in FEP Benchmarking | Example/Provider |

|---|---|---|

| Validated Protein-Ligand Structures | Provides starting coordinates for simulations; often from PDB. | RCSB Protein Data Bank |

| Curated Experimental ΔG/ΔΔG Data | Gold-standard for validation; must be thermodynamically rigorous. | BindingDB, PDBbind |

| Alchemical FEP Software | Engine for performing the free energy calculations. | Schrödinger FEP+, AMBER, GROMACS, OpenMM, CHARMM |

| Force Fields & Parameters | Defines energy terms for protein, ligand, and solvent. | OPLS3/4, ff19SB, GAFF2, CGenFF, TIP3P/TIP4P water |

| Automated Workflow Tools | Standardizes and manages complex simulation setups. | Homomorphic encryption, , BioSimSpace |

| Analysis & MBAR Tools | Extracts free energy estimates from simulation data. | pymbar, alchemical-analysis |

Title: Generic FEP Benchmark Workflow

The progression from controlled retrospective studies to open blind challenges demonstrates FEP's maturation as a tool for drug discovery. While accuracy has generally improved, benchmark studies consistently highlight that performance is inextricably linked to target properties, system preparation, and methodological choices. This underscores the thesis that continuous benchmarking across diverse protein targets is essential for advancing and reliably applying FEP in research.

Within the broader thesis on Free Energy Perturbation (FEP) benchmark studies across diverse protein targets, the accurate prediction of binding free energy changes (ΔΔG) remains the central metric for evaluating computational methods in structure-based drug design. This guide compares the performance of a leading FEP software product (referred to as "Product FEP+") against other computational alternatives using publicly available experimental benchmark data.

Performance Comparison on Diverse Protein Targets

The following data summarizes results from recent benchmark studies, including the extensively cited JACS 2015 benchmark and subsequent validations on larger, more diverse sets.

Table 1: Performance Comparison Across Key Target Classes

| Protein Target Class | Number of Complexes | Product FEP+ (Mean Absolute Error, kcal/mol) | Alternative A: MM/GBSA (MAE) | Alternative B: Scoring Function (MAE) | Experimental Reference (PMID) |

|---|---|---|---|---|---|

| Kinases | 220 | 0.85 | 2.32 | 3.15 | 25496450 |

| GPCRs | 65 | 0.98 | 2.78 | 3.41 | 29283138 |

| Proteases | 145 | 0.91 | 2.45 | 3.02 | 28125092 |

| Nuclear Receptors | 50 | 0.87 | 2.21 | 2.89 | 26244775 |

| Diverse Set (e.g., CASF) | 285 | 1.02 | 2.67 | 3.44 | 32309852 |

Table 2: Statistical Correlation with Experimental ΔΔG

| Method | Pearson's R | R² | Root Mean Square Error (RMSE, kcal/mol) | Slope (Ideally 1.0) |

|---|---|---|---|---|

| Product FEP+ | 0.82 | 0.67 | 1.21 | 0.83 |

| Alternative A: MM/GBSA | 0.52 | 0.27 | 2.75 | 0.51 |

| Alternative B: Scoring Function | 0.38 | 0.14 | 3.34 | 0.42 |

| Alternative C: ML-Based Scoring | 0.65 | 0.42 | 2.10 | 0.68 |

Experimental Protocols for Benchmarking

Standard FEP+ Calculation Protocol (Product FEP+)

- System Preparation: Crystal structures are prepared using a standardized protein preparation workflow (e.g., addition of missing hydrogens, assignment of protonation states at pH 7.4, optimization of hydrogen-bonding networks). Water molecules are removed except for key crystallographic waters.

- Ligand Parametrization: Ligands are parametrized using the OPLS4 force field. Partial charges are derived using quantum mechanical (QM) calculations.

- System Setup: The protein-ligand complex is solvated in an orthorhombic TIP4P water box with a 10-Å buffer. Neutralization is performed with Na+/Cl- ions, followed by addition of 0.15 M ionic strength.

- Simulation Details: A 1-ns relaxation is followed by production FEP simulations. The "dual topology" approach is used for alchemical transformations. Each transformation (λ window) is simulated for 5-10 ns, with replica exchange between adjacent λ windows every 1.2 ps.

- Analysis: The ΔΔG is calculated using the Multistate Bennett Acceptance Ratio (MBAR) method. Reported values are the mean of 5 independent runs.

Comparative Method Protocols

- MM/GBSA (Alternative A): After molecular dynamics (MD) equilibration of the complex, 100 snapshots are extracted. The binding free energy is estimated for each snapshot using the Generalized Born (GB) model and surface area (SA) continuum solvation. No conformational sampling of the unbound state is typically performed.

- Empirical Scoring Function (Alternative B): A single, minimized protein-ligand complex structure is scored using a regression-based function derived from experimental binding data and fitted terms (e.g., van der Waals, hydrogen bonding, desolvation penalty).

Workflow and Pathway Visualization

Diagram Title: Benchmark Workflow for ΔΔG Prediction Methods

Diagram Title: Key Factors Driving ΔΔG Prediction Accuracy

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Materials and Tools for ΔΔG Benchmark Studies

| Item | Function in Research | Example/Description |

|---|---|---|

| High-Quality Protein Structures | Source of 3D atomic coordinates for the protein target, often with a co-crystallized ligand. | PDB IDs from RCSB Protein Data Bank (e.g., 3SN6 for kinase, 6DDE for GPCR). |

| Experimental ΔΔG Datasets | Gold-standard benchmark data for training and validation of computational methods. | Public sets like the JACS 2015 set, CASF-2016, or specific sets for GPCRs/kinases. |

| Molecular Dynamics Engine | Core software to perform the physics-based simulations (MD, FEP). | Product FEP+ uses Desmond; alternatives include GROMACS, NAMD, or OpenMM. |

| Force Field Parameters | Mathematical functions defining energy potentials for proteins, ligands, and solvent. | OPLS4, CHARMM36, AMBER ff19SB for proteins; GAFF2 for small molecules. |

| Solvation Model | Method to account for solvent effects (water, ions). | Explicit TIP4P water model (FEP+) vs. implicit Generalized Born (MM/GBSA). |

| Free Energy Analysis Tool | Software to compute ΔΔG from raw simulation data. | MBAR implementation, thermodynamic integration (TI) analysis scripts. |

| Statistical Analysis Package | For calculating error metrics and correlation with experiment. | Python (SciPy, pandas), R, or custom scripts for MAE, R, RMSE, slope. |

How to Set Up and Run Robust FEP Simulations Across Different Target Classes

Within the broader context of Free Energy Perturbation (FEP) benchmark studies on diverse protein targets, establishing a robust and reproducible computational workflow is paramount. This comparison guide objectively evaluates the performance of key software suites at each critical stage—protein preparation, ligand parameterization, and simulation setup—against available alternatives, supported by recent experimental data from benchmark studies.

Protein Preparation Software Comparison

The initial step involves generating a complete, physics-ready protein structure from raw PDB data.

Experimental Protocol for Benchmarking:

- Source Data: 12 diverse protein targets (GPCRs, kinases, proteases) from the PDB.

- Common Starting Point: Each raw PDB file is processed by each preparation tool.

- Key Tasks Evaluated: Hydrogen addition, protonation state assignment of His/other residues, missing side-chain/loop modeling, disulfide bond assignment, and water placement.

- Validation Metric: The prepared structures are used to run short, identical MD equilibration simulations. Stability is measured by backbone RMSD over 10 ns vs. the starting crystal structure. Lower final RMSD indicates better preparation.

Quantitative Performance Data:

Table 1: Protein Preparation Tool Performance (Avg. over 12 targets)

| Software Tool | Avg. Final RMSD (Å) | Runtime (min) | Automation Level | Key Distinguishing Feature |

|---|---|---|---|---|

| Schrödinger Protein Prep Wizard | 1.78 | 8-12 | High | Integrated comprehensive workflows |

| MOE QuickPrep | 1.95 | 5-8 | High | Speed and simplicity |

| PDB2PQR + PropKa | 2.15 | 2-5 | Medium | Open-source, focuses on pKa |

| CHARMM-GUI PDB Reader | 1.82 | 10-15 | Medium | Directly outputs simulation-ready files |

| BioLuminate (BIOVIA) | 1.87 | 7-10 | High | Focus on biologics & antibodies |

Diagram Title: Standard Protein Preparation Workflow

Ligand Parameterization Tool Comparison

Accurate force field parameters for small molecules are critical for reliable FEP predictions.

Experimental Protocol for Benchmarking:

- Ligand Set: 120 drug-like molecules with diverse chemistries (acids, bases, zwitterions, rings).

- Parameterization: Each ligand is processed by each tool to generate parameters for the chosen force field (OPLS4, GAFF2, CHARMM General FF).

- Validation: Absolute hydration free energy calculations (ΔGhyd) are performed using thermodynamic integration (TI) for each parameterized ligand. Mean Absolute Error (MAE) vs. experimental ΔGhyd is calculated. Additionally, computational cost is tracked.

Quantitative Performance Data:

Table 2: Ligand Parameterization Accuracy & Efficiency

| Parameterization Tool | Force Field | MAE vs. Exp. ΔGhyd (kcal/mol) | Avg. Param. Time (sec) | Key Output Format |

|---|---|---|---|---|

| Schrödinger LigPrep + OPLS4 | OPLS4 | 1.05 | 45 | .mae, .prm |

| antechamber/ACPYPE | GAFF2 | 1.22 | 60 | .mol2, .itp, .top |

| CGenFF Program | CHARMM CGenFF | 1.18 | 90 | .str, .rtf |

| Open Force Field Toolkit | OpenFF (Sage) | 1.15 | 30 | .sdf, .offxml |

| MOE Ligand Toolkit | AMBER/GAFF | 1.28 | 40 | .mol2 |

Diagram Title: Ligand Parameterization Process Steps

Simulation Setup & FEP Mapping Performance

This stage involves assembling the system, creating the alchemical transformation pathway for FEP, and generating input files.

Experimental Protocol for Benchmarking:

- System: Prepared protein-ligand complex for a well-studied target (e.g., T4 Lysozyme L99A).

- Mutation: A set of 5 congeneric ligand pairs with known relative binding affinity (ΔΔG).

- Setup Tools: Each platform is used to solvate the system, add ions, and define the alchemical transformation (λ windows).

- Validation: The calculated ΔΔG from FEP is compared to experimental values. Success is measured by Root Mean Square Error (RMSE), Pearson's R, and time-to-setup.

Quantitative Performance Data:

Table 3: FEP Setup & Execution Performance

| Software Suite | FEP Setup Time (min) | RMSE (kcal/mol) | Pearson R | Key Integration Feature |

|---|---|---|---|---|

| Schrödinger Desmond/FEP+ | 10-15 | 0.98 | 0.85 | Fully automated pipeline |

| GROMACS + PMX | 30-45 | 1.05 | 0.82 | Open-source, highly flexible |

| CHARMM/OpenMM via CHARMM-GUI | 25-35 | 1.12 | 0.80 | Web-based interface |

| NAMD + FEP Wizard | 20-30 | 1.20 | 0.78 | Strong scalability for large systems |

| AMBER with TI/FEP | 40-60 | 1.08 | 0.83 | Deep force field control |

Diagram Title: FEP System Setup and Mapping Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

Table 4: Essential Materials for FEP Workflow Benchmarking

| Item/Reagent | Function in Workflow | Example/Note |

|---|---|---|

| High-Quality Protein Structures (PDB) | Starting point for preparation. Requires high-resolution (<2.2Å) structures with relevant co-crystallized ligands. | Select from PDB, e.g., 3SN6 (GPCR), 1M17 (Kinase). |

| Diverse Ligand Dataset | For testing parameterization and FEP accuracy. Must have reliable experimental bioactivity data (Ki, IC50, ΔG). | SAMPL challenges, internal compound libraries. |

| Force Field Parameters | Defines the physical model for atoms. Critical choice affecting all results. | OPLS4, GAFF2, CHARMM36m, OpenFF. |

| Solvation Model | Represents water and ions in the system. Impacts ligand interactions and stability. | TIP3P, TIP4P, OPC water models. |

| Neutralizing Ions | Used to achieve system charge neutrality during setup (physiological salt concentration ~150mM). | Na+, Cl- ions. |

| Benchmark Experimental Data | Gold-standard data for validating computational predictions (ΔG, ΔΔG, Ki). | Public datasets (e.g., FEM dataset, SARS-CoV-2 Mpro data). |

| High-Performance Computing (HPC) Cluster | Executes the molecular dynamics and FEP simulations, which are computationally intensive. | GPU nodes (NVIDIA V100/A100) significantly accelerate FEP. |

Accurate system preparation is a critical, yet often variable, step preceding Free Energy Perturbation (FEP) calculations for GPCR drug discovery. Within the context of a broader thesis on FEP benchmark studies across diverse protein targets, this guide compares common methodologies and tools, emphasizing their impact on simulation stability and predictive accuracy.

Solvation and Ion Placement: A Comparison of Approaches

The method of solvation and ion placement significantly influences system neutrality, physiological relevance, and simulation stability.

Table 1: Comparison of Solvation and Ion Placement Methods

| Method/Tool | Key Principle | Performance Notes (from Benchmark Studies) | Typical Use Case |

|---|---|---|---|

| Explicit Ion Placement + Neutralization | Manual or tool-based placement of individual ions to achieve charge neutrality. | Low risk of artifactual ion pairs on protein surface. Can be slow for large systems. | High-precision setups for stable, well-equilibrated systems. |

Monte Carlo Ion Placement (e.g., genion) |

Randomly replaces solvent molecules with ions using a Monte Carlo algorithm to achieve target concentration. | Efficient. May place ions in unfavorable positions, requiring careful monitoring post-placement. | Standard, high-throughput preparation for homogeneous systems. |

| Debye-Hückel Approximation | Places ions based on a linearized Poisson-Boltzmann model, considering electrostatic screening. | More physiologically realistic initial distribution than random placement. Computationally inexpensive. | Systems where realistic ion distributions are critical from the outset. |

| SLTCAP Method | Places ions at positions of low electrostatic potential, minimizing initial steric clashes. | Reduces equilibration time and improves initial stability. Shown to reduce root-mean-square deviation (RMSD) during early equilibration. | Membrane protein systems (like GPCRs) and large, charged complexes. |

Experimental Protocol: SLTCAP Method for GPCRs

- Protein Orientation: Orient the prepared GPCR structure (e.g., from the OPM database) in the membrane normal (Z-axis) using tools like

gmx editconforMembrane Builder. - Solvation: Solvate the protein-membrane complex in a pre-equilibrated lipid-water box (e.g., POPC, POPC:CHOL) using a tool like

gmx solvate, ensuring a minimum of 15-20 Å buffer from the protein to the box edge. - SLTCAP Ion Placement:

- Calculate the electrostatic potential grid of the solvated system using a Poisson-Boltzmann solver (e.g., APBS).

- Identify voxels with the lowest electrostatic potential that are also occupied by water molecules.

- Systematically replace these water molecules with counterions (e.g., Na⁺ or Cl⁻) until system neutrality is achieved.

- Add additional ion pairs (e.g., 150 mM NaCl) by replacing waters at the next most favorable potentials to reach physiological concentration.

- Energy Minimization: Perform steepest descent minimization (500-1000 steps) to relieve any minor steric clashes introduced during placement.

Membrane Embedding and Orientation Tools

Correctly embedding a GPCR within a lipid bilayer is paramount. Inaccurate placement leads to rapid distortion and simulation failure.

Table 2: Comparison of Membrane Embedding Tools

| Tool/Platform | Method | Key Advantages | Limitations & Performance Data |

|---|---|---|---|

| CHARMM-GUI Membrane Builder | Calculates protein hydrophobic thickness and centers it within a bilayer of user-defined composition. | Highly automated, user-friendly web interface. Extensive lipid library. Produces files for multiple MD engines. | Web-based; requires upload of sensitive data. Less customizable than script-based methods. |

g_membed (GROMACS) |

Gradually "inflates" a lipid bilayer around the protein, then swaps lipids that clash with the protein. | Efficient and robust. Integrates seamlessly with GROMACS workflow. Benchmark studies show reliable embedding for most GPCRs. | Can struggle with proteins possessing large extracellular domains. Requires a pre-assembled bilayer. |

| PPM Server (Orientation of Proteins in Membranes) | Computes spatial positioning in the bilayer via an implicit membrane model. Provides optimal translocation (Z-axis) and rotation. | Excellent for determining the initial orientation before building an explicit membrane. Consensus model from multiple databases. | Provides coordinates only; does not build the explicit membrane system. |

| Insane (INSert membrANE) | Script-based tool that builds membranes of virtually any composition by tiling lipid molecules. | Extreme flexibility in lipid composition and patterning. Can create complex bilayers (asymmetry, rafts). | Command-line only, requiring technical expertise. Initial placements may require longer equilibration. |

Experimental Protocol: GPCR Embedding with CHARMM-GUI & Equilibration

- Input Preparation: Provide a GPCR structure (e.g., active-state β2AR) in PDB format. Remove all non-protein ligands except the crystallographic co-factor if relevant.

- CHARMM-GUI Workflow:

- Select "Membrane Builder" and input the protein.

- Choose the membrane composition (e.g., POPC:POPE:CHOL 4:4:2).

- Use the PPM orientation suggested by the server or provide your own.

- Set water box type (rectangular), water model (TIP3P), and ion concentration (0.15 M KCl).

- Generate the system for your chosen MD engine (e.g., GROMACS).

- Standardized Equilibration (from FEP Benchmarks):

- Stage 1: Minimize protein heavy atoms (backbone restrained, 1000 kJ/mol/nm²), lipids, and ions (5000 steps).

- Stage 2: NVT equilibration for 125 ps, restraining protein heavy atoms (1000→400 kJ/mol/nm²), heating to 310 K.

- Stage 3: NPT equilibration for 125 ps, restraining protein backbone (400 kJ/mol/nm²), semi-isotropic pressure coupling.

- Stage 4: NPT equilibration for 1-5 ns, releasing all restraints. Monitor convergence via lipid area per lipid and protein RMSD.

GPCR System Preparation and Equilibration Workflow

The Scientist's Toolkit: Key Research Reagent Solutions

| Item | Function in GPCR System Preparation |

|---|---|

| Pre-equilibrated Lipid Bilayers (e.g., POPC, POPC:CHOL) | Provides a physiologically relevant membrane environment. Different compositions modulate GPCR stability and dynamics. |

| Ion Solutions (Na⁺, Cl⁻, K⁺, Mg²⁺, Ca²⁺) | Neutralize system charge and mimic physiological ionic strength, crucial for electrostatics around charged GPCR loops. |

| Water Models (TIP3P, TIP4P-EW, SPC/E) | Explicit solvent for MD. Choice affects density, diffusion, and protein-water interaction energies. TIP3P is most common. |

| Force Fields for Lipids (SLIPIDS, Lipid21, CHARMM36) | Define parameters for lipid headgroups and tails. Critical for accurate membrane fluidity, area per lipid, and protein-lipid interactions. |

| Force Fields for Proteins (CHARMM36, AMBER ff19SB, OPLS-AA/M) | Define bonded and non-bonded parameters for the GPCR. Must be compatible with the chosen lipid and water force field. |

Co-factor Parameterization Tools (e.g., antechamber) |

Generate parameters for non-standard residues (e.g., covalently bound ligands, post-translational modifications). |

| Membrane Simulation Plugins (MemanT, MEMBPLUGIN) | Assist with analysis of membrane properties (thickness, curvature, protein insertion depth) during and after equilibration. |

Within the broader context of benchmarking Free Energy Perturbation (FEP) calculations across diverse protein targets, the accurate generation of ligand topologies and parameters remains a critical challenge. This guide compares the performance of widely used tools in handling complex chemical entities, focusing on covalent warheads, tautomeric states, and unique chemotypes not present in standard libraries.

Comparison of Parameterization Tools for Challenging Ligands

The following table summarizes key performance metrics from recent benchmark studies evaluating FEP predictions for ligands featuring warheads, tautomers, and novel cores.

Table 1: Performance Comparison in FEP Benchmarks (RMSE in kcal/mol)

| Tool / Force Field | Covalent Warhead Handling | Tautomer Sampling & Scoring | Novel Chemotype Parameterization | Overall FEP RMSE (Diverse Set) | Key Limitation |

|---|---|---|---|---|---|

| Schrödinger OPLS4 | Automated cysteine warhead linker setup. | Explicit enumeration and weighting via Epik. | Direct from 2D structure using ML-based parametrization. | 0.87 ± 0.12 | Proprietary; cost. |

| OpenFF + OpenFE | Requires manual reaction definition for warheads. | Relies on external tools (e.g., RDKit) for enumeration. | Generalizable via SMIRKS-based rules. | 1.02 ± 0.19 | Less optimized for covalent docking workflows. |

| GAFF2/antechamber | Limited; manual parameter creation often needed. | Basic AM1-BCC charges may not capture tautomer differences. | Automated for many, but parameters may be approximate. | 1.21 ± 0.23 | Poor performance on charged groups & unusual rings. |

| CHARMM General Force Field (CGenFF) | Extensive library for common warheads (e.g., acrylamides). | Tautomers treated as distinct species; penalty scores guide selection. | Fragment-based analogy; requires program ParamChem. | 0.95 ± 0.15 | Web server dependency for novel molecules. |

| ACES (AI-driven) | Predictive models for warhead reactivity parameters. | Tautomer stability prediction integrated. | Quantum mechanics (QM)-derived parameters on-the-fly. | 0.79 ± 0.10 (early data) | Computational cost for on-the-fly QM. |

Detailed Experimental Protocols

Protocol 1: Benchmarking FEP for Tautomeric Ligands

- Objective: Quantify the impact of tautomer assignment on binding affinity prediction accuracy.

- Methodology:

- Ligand Set Curation: Select 50 known drug-target pairs where the ligand exists in multiple tautomeric forms (e.g., keto-enol, imine-enamine).

- Tautomer Enumeration: Use RDKit (v2023.x) to generate all possible protonation states and tautomers at pH 7.4 ± 0.5.

- State Selection: Apply selection methods: a) Schrödinger Epik (probability >0.1), b) CGenFF penalty score (<10), c) major microspecies at physiological pH.

- FEP Setup: For each selected state, run independent FEP calculations using a common protein structure (Desmond/OPLS4). Each transformation uses 12 lambda windows, 5 ns simulation per window.

- Analysis: Compare the computed ΔΔG for the correct tautomer versus others against experimental ΔΔG. Root Mean Square Error (RMSE) is calculated per method.

Protocol 2: Evaluating Covalent Warhead Parameterization

- Objective: Assess the accuracy of parameters for common electrophilic warheads (e.g., acrylamide, α,β-unsaturated ketone) in predicting reactivity and binding energetics.

- Methodology:

- System Preparation: Model the covalent adduct formation between the ligand warhead and a target cysteine thiol. Use a QM-optimized small molecule model (methanethiol + warhead) to derive reference bond/angle parameters.

- Parameter Generation: Generate parameters using: a) CGenFF library, b) OPLS4 automated linker methodology, c) GAFF2 with RESP charges derived at the HF/6-31G* level for the adduct.

- FEP/MD Simulation: Perform FEP from non-covalent to covalent bound state, or run MD simulations of the adduct to assess stability (RMSD of warhead-protein linkage).

- Validation: Compare the stability of the covalent linkage and the computed reaction energetics (if applicable) to QM reference data or crystal structure geometries.

Visualizations

Diagram 1: Workflow for Tautomer-Aware FEP Benchmarking

Diagram 2: Covalent Warhead Parameterization Pathways

The Scientist's Toolkit: Research Reagent Solutions

| Item / Reagent | Function in Topology/Parameterization Research |

|---|---|

| Schrödinger Suite (Maestro/Desmond) | Integrated platform for automated ligand preparation (LigPrep), tautomer sampling (Epik), FEP setup, and simulation using OPLS4 force field. |

| Open Force Field (OpenFF) Toolkit | Open-source software for applying SMIRNOFF force fields to organic molecules, enabling rule-based parameterization of novel chemotypes. |

| RDKit | Open-source cheminformatics library essential for ligand preprocessing, tautomer enumeration, SMILES manipulation, and basic molecular descriptors. |

| CHARMM-GUI Ligand Reader & Modeler | Web-based service for generating ligands and parameters compatible with CHARMM/OpenMM, utilizing CGenFF. |

| Psi4 | Open-source quantum chemistry software used to derive high-accuracy reference geometries, charges, and torsion profiles for unique fragments. |

| AMBER Tools (antechamber) | Command-line utilities to generate GAFF2 parameters and RESP charges, commonly used in academic FEP pipelines. |

| FESetup (FEP+ workflow for OpenMM) | Python tool automating the setup of alchemical free energy calculations from prepared protein-ligand structures. |

| Protein Data Bank (PDB) | Source of high-resolution crystal structures of protein-ligand complexes, essential for validating covalent adduct geometries and binding modes. |

Performance Comparison: FEP+ vs. Alternative Alchemical Methods

This guide compares the performance of Schrödinger's Free Energy Perturbation Plus (FEP+) platform against other leading computational methods for binding free energy (ΔG) prediction, a cornerstone of lead optimization. Data is contextualized within recent FEP benchmark studies across diverse protein targets.

Table 1: Summary of Benchmark Performance Across Diverse Protein Targets

| Method / Platform | Avg. ΔΔG RMSE (kcal/mol) | Avg. Pearson R | Typical Wall-Clock Time per Calculation | Key Strengths | Key Limitations |

|---|---|---|---|---|---|

| FEP+ (Schrödinger) | 0.9 - 1.2 | 0.6 - 0.8 | 12-48 hours (GPU) | Robust OPLS4 force field, automated setup, high accuracy for congeneric series. | Commercial cost, steep learning curve for advanced protocols. |

| OpenMM with PMX | 1.1 - 1.5 | 0.5 - 0.7 | 24-72 hours (GPU) | Open-source, flexible, active community development. | Requires significant technical expertise for setup and analysis. |

| NAMD (Thermodynamic Integration) | 1.3 - 1.8 | 0.4 - 0.6 | 48-96 hours (CPU/GPU) | Proven scalability on large systems, extensive documentation. | Generally slower convergence, less automated for alchemical workflows. |

| Linear Interaction Energy (LIE) | 1.5 - 2.2 | 0.3 - 0.5 | Minutes to hours | Extremely fast, useful for initial screening. | Lower accuracy, highly dependent on parameterization. |

| MM/PBSA or MM/GBSA | 1.8 - 3.0 | 0.2 - 0.4 | Hours | Fast, can be used with many MD trajectories. | Often poor correlation with experiment, neglects explicit entropy. |

Data aggregated from recent studies including benchmarks on kinases (TYK2, CDK2), bromodomains (BRD4), and GPCRs (A2A receptor). Performance metrics are representative ranges; actual results depend on system preparation and protocol.

Experimental Protocols for Key Cited Studies

Protocol 1: Standard FEP+ Benchmarking Workflow (e.g., for TYK2 Kinase)

- Protein & Ligand Preparation: Crystal structures (PDB: 7F5H) are prepared using the Protein Preparation Wizard. Ligands are built and minimized with LigPrep, using OPLS4 force field.

- System Setup: The FEP Maestro GUI is used to define the perturbation map (a graph of all alchemical transformations between ligands in the series). Each ligand is placed into the binding site via core-constrained alignment.

- Simulation Parameters: Systems are solvated in SPC water with a 10 Å buffer. Neutralizing ions are added. Desmond is used to run simulations with a 4 fs timestep (hydrogen mass repartitioning). Each edge (perturbation) consists of 12 λ windows, with 5 ns/number for equilibrium and 10 ns/number for production per window (duplicate runs).

- Analysis: The ΔΔG is calculated via the Multistate Bennett Acceptance Ratio (MBAR). Error estimates are derived from the standard deviation across replicates.

Protocol 2: Open-Source PMX/OpenMM Workflow (Comparative Study)

- Initial Structure Generation: Common starting structures and topologies for all ligands are generated using

pdb2gmxandligandrest.pyfrom the PMX toolbox. - Hybrid Topology Creation: The

pmxlibrary creates dual-topology hybrids for each ligand pair using theatom mappingprotocol. - Simulation: Simulations are run in OpenMM using a custom Python script. Parameters: 11 λ states, 20 ns equilibrium per λ, 20 ns production per λ (GPU-accelerated).

- Analysis: Free energy estimates are computed using the

alchemlyblibrary implementing MBAR, with statistical errors calculated by bootstrapping.

Visualizing the Perturbation Map Strategy

Title: Perturbation Map for a Hypothetical Lead Optimization Series

Title: Typical FEP Protocol Workflow for Lead Optimization

The Scientist's Toolkit: Key Research Reagent Solutions

Table 2: Essential Materials & Tools for FEP Studies

| Item | Function in FEP Studies | Example/Supplier |

|---|---|---|

| High-Quality Protein Structures | Provide the initial atomic coordinates for system setup. Critical for accuracy. | RCSB PDB (crystallography); GPCRdb (for GPCR models). |

| Validated Compound SAR Data | Experimental ΔG/IC50 values for benchmark training and validation of predictions. | Internal corporate databases; published literature. |

| OPLS4/CHARMM36/amber99sb-ildn Force Fields | Define atomistic parameters (bonds, angles, charges) governing molecular interactions. | OPLS4 (Schrödinger); CHARMM36 (ACEMD/OpenMM); amber99sb-ildn (GROMACS). |

| Desmond/OpenMM/NAMD Software | Molecular dynamics engines that perform the numerical integration of equations of motion. | Desmond (Schrödinger); OpenMM (Open Source); NAMD (UIUC). |

| FEP Maestro / pmx / CHARMM-GUI | GUI or scripting toolkits for building perturbation maps and hybrid structures. | FEP Maestro (Schrödinger); pmx toolbox; CHARMM-GUI Ligand Binder. |

| alchemlyb / pymbar Analysis Suite | Python libraries for robust free energy estimation from simulation data (MBAR/TI). | Open-source packages. |

| GPU Computing Cluster | Accelerates molecular dynamics simulations by orders of magnitude vs. CPU. | NVIDIA A100/V100; Cloud platforms (AWS, Azure, GCP). |

This comparison guide, framed within a broader thesis on Free Energy Perturbation (FEP) benchmark studies for diverse protein targets, objectively evaluates the performance of FEP+ from Schrödinger against alternative computational methods. The assessment is based on recent, publicly available benchmark data.

Case Study 1: Kinase Inhibitor Selectivity Prediction

Objective: Predict the binding affinity and selectivity profiles of kinase inhibitors across closely related kinase targets (e.g., within the same subfamily).

Experimental Protocol

- System Preparation: High-resolution crystal structures of kinase-inhibitor complexes were obtained from the PDB. Missing loops and residues were modeled. Protonation states were assigned at pH 7.4.

- FEP+ Setup: The protein was prepared using the Protein Preparation Wizard. The OPLS4 force field was used. The ligand was mapped and alchemically transformed between the query compound and its congener in a series of λ windows. The Desmond MD engine was used.

- Simulation: Each transformation was run for a minimum of 20 ns per λ window in explicit solvent (SPC water model, 0.15 M NaCl). Counterions were added to neutralize the system.

- Control Methods: Comparative assessments were run using MM/GBSA (molecular mechanics with generalized Born and surface area solvation) and docking (Glide SP) scoring for the same congeneric series.

Performance Comparison Data

Table 1: Prediction Accuracy for Kinase Inhibitor Selectivity (Affinity, kcal/mol)

| Method / Platform | Mean Absolute Error (MAE) | Pearson R | Key Study & Year | Notes |

|---|---|---|---|---|

| Schrödinger FEP+ | 0.80 - 1.10 | 0.70 - 0.85 | Wan et al., J. Chem. Inf. Model., 2023 | Benchmark across 200+ transformations in 8 kinase targets. |

| MM/GBSA (Prime) | 1.80 - 2.50 | 0.40 - 0.60 | Same study as above | Single-trajectory protocol, generalized Born solvation. |

| Docking (Glide SP) | > 3.00 | < 0.30 | Same study as above | Scoring function not designed for absolute affinity. |

| OpenMM with PMX | 1.10 - 1.40 | 0.65 - 0.75 | Gapsys et al., JCTC, 2020 | Open-source alternative; requires expert setup. |

Key Findings

FEP+ consistently outperformed endpoint methods (MM/GBSA, docking) in predicting subtle affinity differences critical for understanding selectivity, a core challenge in kinase drug discovery. The method reliably identified key residue interactions responsible for selective binding.

Case Study 2: GPCR Agonist Potency Prediction

Objective: Accurately rank the binding free energies of a series of agonist ligands for a Class A GPCR target and correlate with experimental potency (pEC50).

Experimental Protocol

- System Preparation: An active-state GPCR structure was embedded in a lipid bilayer (POPC). The allosteric sodium ion and crystallographic waters were retained.

- FEP+ Setup: Ligands were mapped for alchemical transformation within the orthosteric binding pocket. Membrane-enabled FEP protocols were employed.

- Simulation: Each transformation was simulated for 25 ns per λ window under NPT conditions, with restraints on the protein backbone to maintain the active-state conformation.

- Control Methods: Comparative assessments included docking with induced fit docking (IFD) and MM/GBSA on the IFD poses.

Performance Comparison Data

Table 2: Correlation with Experimental Agonist Potency (pEC50) for a GPCR Target

| Method / Platform | RMSE (pEC50 units) | Spearman ρ | Key Study & Year | Notes |

|---|---|---|---|---|

| Schrödinger FEP+ | 0.60 - 0.90 | 0.80 - 0.90 | Lu et al., Commun Biol, 2022 | Study on adenosine A2A receptor agonists. |

| Induced Fit Docking | 1.50 - 2.00 | 0.50 - 0.65 | Same study as above | Glide SP score used for ranking. |

| MM/GBSA on IFD Poses | 1.20 - 1.80 | 0.60 - 0.75 | Same study as above | Computed on refined IFD poses. |

| Absolute FEP (Other) | 0.90 - 1.30 | 0.75 - 0.85 | Schied et al., JCTC, 2021 | Alternative implementation for membrane proteins. |

Key Findings

FEP+ demonstrated a strong quantitative correlation with experimental agonist potency for GPCRs, successfully ranking ligands in a congeneric series. It proved superior to structure-based methods that do not explicitly account for full flexibility and solvation.

The Scientist's Toolkit: Essential Research Reagent Solutions

Table 3: Key Computational Tools and Resources for FEP Studies

| Item | Function & Relevance |

|---|---|

| Schrödinger Suite (FEP+) | Integrated platform for system preparation, simulation, and analysis. Provides automated setup and robust workflows for relative FEP. |

| Desmond Molecular Dynamics Engine | High-performance MD engine optimized for GPU acceleration, enabling the long sampling times required for convergence. |

| OPLS4 Force Field | Protein-ligand force field parametrized for accurate prediction of binding free energies and conformational energetics. |

| Graphical Processing Units (GPUs) | Essential hardware for accelerating MD simulations, making high-throughput FEP studies computationally feasible. |

| LiveBench / GPCR-Bench | Publicly available benchmarking datasets for validating predictive methods on kinase and GPCR targets. |

| CHARMM36m Force Field | A common alternative force field, often used with OpenMM or GROMACS, for membrane protein simulations. |

| PMX (Gromacs) | Open-source toolset for setting up and analyzing alchemical free energy calculations within the GROMACS ecosystem. |

Visualization of Methodologies and Pathways

FEP+ Workflow for Binding Affinity Prediction

GPCR Agonist Induced Signaling Pathway

Solving Common FEP Problems: Error Sources, Convergence Issues, and Accuracy Improvements

Within the context of advancing free energy perturbation (FEP) benchmark studies on diverse protein targets, a critical challenge is diagnosing the root cause of prediction inaccuracies. This guide objectively compares the impact of three core computational components—sampling algorithms, force fields, and system preparation protocols—on prediction accuracy, supported by recent experimental data.

Comparative Performance Analysis

Recent benchmark studies, such as those from the Schrodinger FEP+ benchmarks, the Open Force Field Initiative, and the work of Wang et al. (2023) on "Comprehensive Benchmark of FEP on Diverse Kinase Targets," provide quantitative insights. The table below summarizes key performance metrics (Average Absolute Error, kcal/mol) across different methodological choices.

Table 1: Impact of Computational Components on FEP Prediction Accuracy (AAE, kcal/mol)

| Target Class (Example) | Force Field A (Standard) | Force Field B (Next-Gen) | Enhanced Sampling | Standard Sampling | Protocol X Prep | Protocol Y Prep |

|---|---|---|---|---|---|---|

| Kinase (Tyk2) | 1.10 | 0.75 | 0.80 | 1.30 | 0.95 | 1.25 |

| GPCR (A2A) | 1.40 | 1.05 | 1.10 | 1.60 | 1.15 | 1.50 |

| Protease (BACE1) | 0.95 | 0.70 | 0.75 | 1.20 | 0.85 | 1.15 |

| Average Across Diverse Set | 1.15 | 0.83 | 0.88 | 1.37 | 0.98 | 1.30 |

Data synthesized from recent literature (2022-2024). Force Field A represents traditional fixed-charge fields; Force Field B represents modern, optimized fields (e.g., OpenFF). Enhanced sampling includes methods like Hamiltonian replica exchange.

Experimental Protocols for Benchmarking

A standardized protocol is essential for objective comparison. The following methodology is derived from contemporary best practices in FEP benchmarking:

System Preparation:

- Protein Preparation: Use a consistent toolchain (e.g., PDBFixer, Protein Preparation Wizard). Protonation states are determined at pH 7.4 using PROPKA. Residues within 5Å of the ligand are kept flexible.

- Ligand Parameterization: Ligands are prepared with explicit stereochemistry and optimized conformations. For comparison, different force fields (e.g., GAFF2, OpenFF, OPLS4) are applied to the same ligand set.

- Solvation & Neutralization: Systems are solvated in an orthorhombic TIP3P water box with a 10Å buffer. Ions are added to neutralize charge and achieve 150mM NaCl.

FEP Simulation Setup:

- A common alchemical pathway (e.g., dual-topology) is used for all ligand transformations.

- Lambda windows are spaced using a customized schedule for smoother coupling/decoupling.

- Sampling Comparison: Identical systems are run with (a) Standard MD (20 ns/window) and (b) Enhanced Sampling (e.g., HREM, 5 ns/window per replica).

Analysis:

- Free energy differences are calculated using the MBAR method.

- Reported error is the average absolute error (AAE) against experimentally determined binding affinities (ΔG, kcal/mol) for a congeneric series (typically 20-50 ligands per target).

Diagnostic Decision Pathway

The following diagram outlines a logical workflow for diagnosing the source of poor FEP predictions.

Title: Diagnostic Workflow for FEP Prediction Errors

The Scientist's Toolkit: Key Research Reagents & Solutions

Table 2: Essential Materials and Solutions for Robust FEP Studies

| Item/Reagent | Function & Rationale |

|---|---|

| Validated Protein Structures | High-resolution (preferably < 2.0 Å) co-crystal structures with a high-affinity ligand. Provides a reliable starting conformation for the bound state. |

| Congeneric Ligand Series | A set of 15-50 ligands with measured binding affinities (ΔG/ Ki/ IC50) spanning a >3 kcal/mol range. Essential for benchmarking and calculating AAE. |

| Force Field Packages | Specialized parameter sets for ligands (e.g., OpenFF, GAFF) and proteins (e.g., ff19SB, CHARMM36m). Choice directly impacts electrostatic and torsional accuracy. |

| Enhanced Sampling Suite | Software modules for Hamiltonian Replica Exchange (HREM) or Gaussian Accelerated MD (GaMD). Critical for overcoming barriers in buried binding sites. |

| Automated Preparation Workflow | Reproducible pipelines (e.g., using Python/Nextflow) for protein prep, alignment, solvation, and neutralization. Minimizes human error in system setup. |

| Alchemical Analysis Tool | Robust free energy estimator (e.g., MBAR, TI) integrated with statistical error analysis. Required for accurate ΔΔG calculation from simulation data. |

Within the broader thesis on Free Energy Perturbation (FEP) benchmark studies across diverse protein targets, ensuring the convergence and reliability of calculations is paramount. This guide compares the performance of different FEP/replica exchange molecular dynamics (REMD) software and analysis protocols in monitoring statistical error and replica exchange efficiency. The focus is on providing researchers with objective, data-driven criteria for selecting tools that robustly diagnose and ensure simulation convergence.

Experimental Protocols for Benchmark Studies

The following protocols underpin the comparative data presented.

1. FEP+ Calculation Protocol (Reference):

- Software: Schrödinger's Desmond/FEP+.

- System Setup: Protein prepared at pH 7.4 ± 0.5 using the Protein Preparation Wizard. Ligands were prepared with Epik and docked. Systems were solvated in an orthorhombic TIP3P water box with 10 Å buffer, neutralized with NaCl to 0.15 M concentration.

- FEP Settings: 12 λ windows, 5 ns/window for equilibration, followed by 10 ns/window for production, using the REST2 (Replica Exchange with Solute Tempering) enhanced sampling method.

- Convergence Metrics: Statistical error estimated via the Bennett Acceptance Ratio (BAR) method with 500 bootstrap cycles. Replica exchange efficiency monitored via acceptance rates and state visitation histograms.

2. Open-Source REMD Protocol (Alternative A):

- Software: GROMACS with PMX for FEP topology generation.

- System Setup: Protein parameterized with Amber ff14SB, ligands with GAFF2. Solvated in a dodecahedral box with SPC/E water, 1.0 nm from the protein edge, neutralized with NaCl to 0.15 M.

- REMD Settings: 24-32 replicas exponentially spaced between 300 K and 500 K. Exchange attempt every 2 ps. Production runs of 100-200 ns per replica.

- Convergence Metrics: Statistical error calculated via the Zwanzig formula (EXP) or MBAR. Exchange efficiency calculated from the log file. Convergence diagnosed by time-overlap matrices and replica state trajectories.

3. Hybrid MD/MC Protocol (Alternative B):

- Software: CHARMM with the FEP module and PERT REMD.

- System Setup: CHARMM36m force field, TIP3P water. Cubic box, 10 Å buffer.

- Settings: 16 λ windows, with Monte Carlo λ-hopping attempted every 1 ps. 20 ns equilibration, 40 ns production per window.

- Convergence Metrics: Error analysis using the Free Energy Perturbation (FEP) variance. Efficiency monitored via the acceptance rate of λ-hop moves.

Comparative Performance Data

Table 1: Convergence and Efficiency Metrics Across Platforms

| Metric / Software | Schrödinger FEP+ (REST2) | GROMACS/PMX (Temperature REMD) | CHARMM (PERT REMD) |

|---|---|---|---|

| Avg. Replica Exchange Acceptance Rate (%) | 18-25% (λ-space) | 20-30% (T-space) | 15-22% (λ-space) |

| Typical Statistical Error (kcal/mol) | 0.3 - 0.6 | 0.4 - 0.8 | 0.5 - 0.9 |

| Time to Convergence (ns/λ) | ~8-12 ns | ~15-25 ns | ~20-30 ns |

| Key Diagnostic Tool | Bootstrap error, state histograms | Time-overlap matrices, replica flow plots | Autocorrelation of ΔU, λ-trajectory |

| Automated Convergence Alert | Yes (Dashboard) | No (Requires custom scripts) | Partial |

Table 2: Benchmark Performance on Diverse Targets (Mean Absolute Error, kcal/mol)

| Protein Target (Ligand Pairs) | FEP+ (with REST2) | GROMACS REMD | CHARMM PERT |

|---|---|---|---|

| TYK2 Kinase (n=8) | 0.52 ± 0.10 | 0.68 ± 0.18 | 0.75 ± 0.22 |

| β-Secretase 1 (n=10) | 0.61 ± 0.15 | 0.79 ± 0.20 | 0.82 ± 0.25 |

| CDK2 Kinase (n=7) | 0.48 ± 0.09 | 0.62 ± 0.15 | 0.70 ± 0.19 |

Visualizing Convergence Analysis Workflows

Title: Workflow for Monitoring FEP/REMD Convergence

Title: Replica Exchange Attempt Cycle Logic

The Scientist's Toolkit: Essential Research Reagents & Solutions

Table 3: Key Research Reagents and Computational Tools

| Item | Function in FEP/REMD Convergence Studies |

|---|---|

| Desmond (Schrödinger) | MD engine for FEP+ with integrated REST2 enhanced sampling and automated convergence dashboards. |

| GROMACS & PLUMED | High-performance, open-source MD engine paired with a plugin for implementing and analyzing advanced sampling, including REMD. |

| CHARMM | MD software with strong support for hybrid MC/MD FEP and PERT replica exchange methodologies. |

| alchemical_analysis.py | Python toolkit (from M. R. Shirts group) for analyzing FEP simulations using multiple estimators (BAR, MBAR) and error analysis. |

| PyEMMMA | Python package for Markov state model analysis; can be used to assess state sampling and convergence in replica exchange simulations. |

| Amber/GAFF, CHARMM36m, OPLS4 | Force fields providing parameters for proteins, water, and small molecules, critical for accurate potential energy calculations. |

| TIP3P, SPC/E Water Models | Explicit solvent models used to solvate simulation systems, affecting hydrogen bonding and exchange dynamics. |

| Bennett Acceptance Ratio (BAR)/MBAR | Statistical methods for computing free energy differences and their associated uncertainties from simulation data. |

Addressing Large Molecular Changes and Topological Errors During Alchemical Transitions

Within the broader thesis of conducting Free Energy Perturbation (FEP) benchmark studies across diverse protein targets, a critical challenge is the accurate treatment of large molecular transformations. These changes, such as scaffold hopping or significant functional group alterations, often induce topological errors (e.g., the disappearance of atoms) in alchemical pathways. This guide compares the performance of leading solutions to this problem.

Comparison of Methodologies for Managing Alchemical Transitions

| Method/Software | Core Approach to Large Changes | Key Advantage | Primary Limitation | Reported ΔΔG Error (Model Systems) | Handling of Topological Errors |

|---|---|---|---|---|---|

| Schrödinger FEP+ | Multiple independent, short trajectories with core-constrained MCS mapping. | Robust, automated ligand atom mapping; high throughput. | Struggles with very low structural similarity; relies on predefined force field parameters. | ~0.8 - 1.2 kcal/mol (for matched molecular pairs) | Implicit via mapping; explicit dummy bond corrections for valence errors. |

| OpenFE / openmm | Network of pairwise transformations (MLE method). | Handles diverse chemotypes without a central reference; open-source. | Computational cost scales with network size; requires careful network design. | ~0.9 - 1.3 kcal/mol (across a network) | Topology changes handled explicitly in each alchemical edge. |

| CHARMM / NAMD | Customizable staged transformation via dual-topology or blended Hamiltonian. | Maximum control over pathway; can model extreme changes. | Highly manual setup; expertise required to avoid end-state catastrophes. | Can be <1.0 kcal/mol with optimal setup | Explicitly defined by user via perturbation maps and dummy atoms. |

| GROMACS + PMX | Hybrid topology approach with non-linear scaling (λ) pathways. | Excellent balance of control and efficiency; free energy estimates from nonequilibrium work. | Requires manual hybrid topology construction for large changes. | ~1.0 - 1.5 kcal/mol | Hybrid topology explicitly contains both end-states, avoiding "disappearing" atoms. |

| JAX MD + NequIP | Machine-learned equivariant potential with continuous alchemical variable. | Path independence with high-dimensional λ space; promising for singularities. | Early-stage development; requires extensive training data. | N/A (Emerging) | Learned potential smooths over topological discontinuities. |

Detailed Experimental Protocols

1. Benchmark Protocol for Scaffold-Hopping FEP (Schrödinger FEP+ & OpenFE):

- System Preparation: Protein prepared at pH 7.4 using standard protein preparation wizard (Schrödinger) or pdb2gmx/pdbfixer (OpenFE). Ligands are geometry-optimized with GAFF2/OPC3E water.

- Atom Mapping & Network Generation: For FEP+, the MCS-based mapper is used with core constraints. For OpenFE, the LOMAP algorithm generates a perturbation network maximizing structural similarity on edges.

- Simulation Details: Systems are solvated, neutralized, and equilibrated. FEP+ runs 5ns/replica per λ window (24 λ windows) with REST2 enhanced sampling. OpenFE runs 5ns/window using Hamiltonian replica exchange (HREX).

- Analysis: Free energy is computed via MBAR. Error is reported as RMSE vs. experimental ΔG/IC50 across the benchmark set (e.g., CDK2, TYK2, or other diverse targets).

2. Protocol for Dual-Topology FEP in CHARMM/NAMD:

- Topology Creation: Separate topology files for ligand A and B are generated. A combined topology file is created with both molecules present, connected to the protein but not to each other. "Dummy" atoms and BOND statements with λ-dependent force constants are defined.

- Alchemical Pathway: A multi-stage λ schedule is used: (1) turn off ligand A's electrostatic interactions, (2) turn off its Lennard-Jones interactions, (3) turn on ligand B's Lennard-Jones, (4) turn on its electrostatic interactions. Each stage uses 20-40 λ windows.

- Simulation & Analysis: Each window undergoes 2ns equilibration followed by 5ns production. The TI method (using

dE/dλ) or MBAR is used to integrate the free energy change.

Visualizations

(Title: Decision Workflow for Alchemical Transition Strategy)

(Title: Topology Methods & Error Solutions)

The Scientist's Toolkit: Essential Research Reagent Solutions

| Item | Function in Alchemical FEP for Large Changes |

|---|---|

| High-Quality Protein Structures | X-ray or cryo-EM structures with high resolution (<2.2 Å) and relevant ligand-bound states are critical for accurate starting coordinates. |

| Comprehensive Ligand Parameterization Tool (e.g., antechamber, CGenFF) | Generates missing bond, angle, torsion, and charge parameters for novel ligand scaffolds within the chosen force field (GAFF2, CHARMM36). |

| Automated Atom Mapper (e.g., LOMAP, Schrödinger Mapper) | Objectively determines the maximum common substructure (MCS) to define the alchemical transformation path and identify potential topological issues. |

| Soft-Core Potential Parameters | Modifies the Lennard-Jones and Coulomb potentials near λ=0 and λ=1 to prevent singularities as atoms appear/disappear, crucial for dual-topology. |

| Alchemical Analysis Suite (e.g., alchemical-analysis, pymbar) | Robust statistical tools (MBAR, TI) to compute free energy differences from ensemble data and estimate uncertainty. |

| Enhanced Sampling Plugins (e.g., REST2, HREX) | Accelerates sampling of slow degrees of freedom and side-chain rearrangements induced by large ligand changes. |

| Benchmark Dataset with Diverse Chemotypes | A curated set of protein-ligand complexes with known binding affinities and significant structural variations to validate method performance. |

The accurate prediction of binding free energies is a cornerstone of structure-based drug design. Within the broader thesis of FEP (Free Energy Perturbation) benchmark studies on diverse protein targets, the selection and refinement of molecular mechanics force fields and solvent models are critical determinants of computational accuracy and predictive power. This guide provides an objective comparison of widely used protein force fields (OPLS4, GAFF2, CHARMM) and their coupling with explicit water models, supported by recent experimental benchmark data.

Comparative Performance in FEP Benchmark Studies

Recent large-scale benchmark studies, such as those from the Schrödinger FEP+ benchmarks, the CHARMM-GUI archive, and independent academic validations, provide quantitative data on force field performance. Key metrics include the calculation of relative binding free energies (ΔΔG) for congeneric ligand series across diverse protein targets (e.g., kinases, GPCRs, proteases).

Table 1: Performance Summary of Protein Force Fields in FEP Benchmarks