From Schrödinger to Solutions: How Quantum Mechanics Laid the Foundation for Modern Computational Chemistry

Abstract

This article traces the historical emergence and evolution of computational chemistry from its roots in early quantum mechanics. It explores how foundational theories from the 1920s, such as the Schrödinger equation, were transformed into practical computational methodologies that now underpin modern drug discovery and materials science. The scope encompasses the key algorithmic breakthroughs of the mid-20th century, the pivotal role of increasing computational power, and the current state of structure-based and ligand-based drug design. Aimed at researchers and drug development professionals, this review also addresses the persistent challenges of accuracy and scalability, the validation of computational methods against experimental data, and the promising future directions integrating artificial intelligence and quantum computing.

The Quantum Leap: From Theoretical Physics to Chemical Realities

Quantum chemistry, the field that uses quantum mechanics to model molecular systems, fundamentally relies on the Schrödinger equation as its theoretical foundation [1]. This partial differential equation, formulated by Erwin Schrödinger in 1926, is the quantum counterpart of Newton's second law in classical mechanics and provides a mathematical framework for predicting how quantum systems evolve over time [2]. Its discovery was a landmark in the development of quantum mechanics. It earned Schrödinger the Nobel Prize in Physics in 1933 and established the principles that would eventually enable the computational modeling of chemical systems [2] [3]. Classical mechanics fails at the molecular scale. Quantum mechanics, by contrast, incorporates essential phenomena such as wave-particle duality, quantized energy states, and probabilistic outcomes, which are crucial for understanding electron delocalization and chemical bonding [4].

The Schrödinger equation's significance extends beyond theoretical physics into practical applications across chemistry and drug discovery. By describing the form of probability waves that govern the motion of small particles, the equation provides the basis for understanding atomic and molecular behavior with remarkable accuracy [3]. This article explores the mathematical foundations of the Schrödinger equation, its role in spawning computational chemistry methodologies, and its practical applications in modern drug discovery, while also examining recent advances and future directions that continue to expand its impact on scientific research.

Mathematical Foundation of the Schrödinger Equation

Fundamental Formulations

The Schrödinger equation exists in two primary forms: time-dependent and time-independent. The time-dependent Schrödinger equation provides a complete description of a quantum system's evolution and is expressed as:

[i\hbar\frac{\partial}{\partial t}|\Psi(t)\rangle = \hat{H}|\Psi(t)\rangle]

where (i) is the imaginary unit, (\hbar) is the reduced Planck constant, (t) is time, (|\Psi(t)\rangle) is the quantum state vector of the system, and (\hat{H}) is the Hamiltonian operator [2] [5]. This form dictates how the wave function changes over time, analogous to how Maxwell's equations describe the evolution of electromagnetic fields [6].

For many practical applications in quantum chemistry, the time-independent Schrödinger equation suffices:

[\hat{H}|\Psi\rangle = E|\Psi\rangle]

where (E) represents the energy eigenvalues corresponding to the allowable energy states of the system [2] [4]. Solutions to this equation represent stationary states of the quantum system, with their corresponding eigenvalues representing the quantized energy levels that the system can occupy [5].

The Hamiltonian Operator

The Hamiltonian operator (\hat{H}) encapsulates the total energy of the quantum system and is the central component of the Schrödinger equation. For a single particle, it is the sum of the kinetic energy operator (\hat{T}) and the potential energy operator (\hat{V}):

[\hat{H} = \hat{T} + \hat{V} = -\frac{\hbar^2}{2m}\nabla^2 + V(\mathbf{r},t)]

where (m) is the particle mass, (\nabla^2) is the Laplacian operator (the sum of second partial derivatives with respect to the spatial coordinates), and (V(\mathbf{r},t)) is the potential energy function [5] [4]. The Hamiltonian becomes considerably more complex for multi-electron systems. There it must also include electron-electron repulsion and electron-nuclear attraction terms, and, for molecules, nuclear-nuclear repulsion as well.
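The sketch below makes the eigenvalue problem (\hat{H}|\Psi\rangle = E|\Psi\rangle) concrete. It discretizes the one-dimensional Hamiltonian on a uniform grid with a three-point finite-difference Laplacian and then diagonalizes the resulting matrix. It assumes units with ħ = m = 1 and a harmonic potential V(x) = x²/2. That potential was chosen only because its exact levels, E_n = n + 1/2, are known. The grid size and box length are illustrative choices and are not taken from the sources cited here.

```python
import numpy as np

def finite_difference_hamiltonian(x, V):
    """Matrix for H = -1/2 d^2/dx^2 + V(x) with hbar = m = 1.

    Uses the three-point second-derivative stencil; psi is implicitly zero
    just outside both ends of the grid (particle confined to a box)."""
    dx = x[1] - x[0]
    n = len(x)
    laplacian = (np.diag(np.full(n - 1, 1.0), 1)
                 + np.diag(np.full(n - 1, 1.0), -1)
                 - 2.0 * np.eye(n)) / dx**2
    return -0.5 * laplacian + np.diag(V)

# Illustrative assumption: harmonic potential on the box [-8, 8]
x = np.linspace(-8.0, 8.0, 801)
H = finite_difference_hamiltonian(x, 0.5 * x**2)
energies, states = np.linalg.eigh(H)   # eigenvalues in ascending order
print(np.round(energies[:3], 3))       # close to 0.5, 1.5, 2.5
```

Adding grid points reduces the discretization error, which for this stencil scales as the square of the grid spacing.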

Wave Functions and Physical Interpretation

The wave function (\Psi) contains all information about a quantum system. (\Psi) itself is not directly observable. Its square modulus (|\Psi(x,t)|^2), however, gives the probability density of finding a particle at position (x) and time (t) [2] [6]. This probabilistic interpretation fundamentally distinguishes quantum mechanics from classical physics. Two properties are central. The first is superposition: any linear combination of allowed states is itself an allowed state. The second is normalization, which requires the total probability (\int|\Psi(x,t)|^2\,dx) to equal unity [2] [5].
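The following sketch is a short numerical check of the Born rule and normalization. It assumes a one-dimensional harmonic oscillator with ħ = m = ω = 1 and builds, at t = 0, an equal-weight superposition of the two lowest oscillator eigenfunctions. It then confirms that the total probability is one and integrates |Ψ|² over x > 0. The trapezoidal helper is written out explicitly as an illustrative choice, so the result does not depend on a particular library routine.

```python
import numpy as np

def trapezoid(y, x):
    """Composite trapezoidal rule (written out to avoid NumPy version differences)."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

# Assumption: harmonic-oscillator eigenfunctions with hbar = m = omega = 1
def phi0(x):
    return np.pi ** -0.25 * np.exp(-x**2 / 2)

def phi1(x):
    return np.pi ** -0.25 * np.sqrt(2.0) * x * np.exp(-x**2 / 2)

def psi(x):
    # Equal-weight superposition; the 1/sqrt(2) factor keeps it normalized
    return (phi0(x) + phi1(x)) / np.sqrt(2.0)

x_all = np.linspace(-10.0, 10.0, 4001)
norm = trapezoid(np.abs(psi(x_all)) ** 2, x_all)

x_right = np.linspace(0.0, 10.0, 2001)
p_right = trapezoid(np.abs(psi(x_right)) ** 2, x_right)   # P(x > 0)
print(f"total probability = {norm:.6f}, P(x > 0) = {p_right:.4f}")
```

For this state the exact value is P(x > 0) = 1/2 + 1/√(2π) ≈ 0.899. Each component on its own gives 1/2. It is the cross term between the two components that shifts probability toward positive x.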

Table 1: Key Components of the Schrödinger Equation

| Component | Symbol | Mathematical Expression | Physical Significance |
|---|---|---|---|
| Wave Function | (\Psi) | (\Psi(x,t)) | Contains all information about the quantum state; its square modulus gives the probability density |
| Hamiltonian Operator | (\hat{H}) | (-\frac{\hbar^2}{2m}\nabla^2 + V) | Total energy operator; sum of kinetic and potential energy |
| Laplacian | (\nabla^2) | (\frac{\partial^2}{\partial x^2} + \frac{\partial^2}{\partial y^2} + \frac{\partial^2}{\partial z^2}) | Spatial curvature of the wave function; related to kinetic energy |
| Reduced Planck Constant | (\hbar) | (h/2\pi) | Fundamental quantum of action; sets the scale of quantum effects |
| Probability Density | (P(x,t)) | (\lvert\Psi(x,t)\rvert^2) | Probability per unit volume of finding the particle at position x and time t |

From Equation to Computation: Computational Quantum Chemistry

The Born-Oppenheimer Approximation

A critical breakthrough enabling practical application of the Schrödinger equation to chemical systems was the Born-Oppenheimer approximation, which separates electronic and nuclear motions based on the significant mass difference between electrons and nuclei [4]. This approximation allows chemists to solve the electronic Schrödinger equation for fixed nuclear positions:

[\hat{H}_e\psi_e(\mathbf{r};\mathbf{R}) = E_e(\mathbf{R})\psi_e(\mathbf{r};\mathbf{R})]

where (\hat{H}_e) is the electronic Hamiltonian, (\psi_e) is the electronic wave function, (\mathbf{r}) and (\mathbf{R}) represent electron and nuclear coordinates respectively, and (E_e(\mathbf{R})) is the electronic energy as a function of nuclear positions [4]. This separation makes computational quantum chemistry feasible by focusing first on electron behavior for given atomic arrangements.
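The Born-Oppenheimer workflow is: fix the nuclei, solve for the electrons, and repeat at each nuclear geometry. It can be sketched on a deliberately simplified model. The code below assumes a one-dimensional H₂⁺-like system in atomic units. The electron is attracted to each nucleus through a softened Coulomb potential -1/√((x ∓ R/2)² + a), with softening parameter a = 1 (an assumption of this sketch), and the nuclei repel each other through a bare 1/R term. For each fixed internuclear distance R, the code computes the lowest electronic eigenvalue E_e(R) by finite differences and adds the nuclear repulsion. It then locates the minimum of the resulting potential energy curve. This is a toy illustration, not a realistic H₂⁺ calculation.

```python
import numpy as np

def electronic_energy(R, x, a=1.0):
    """Lowest eigenvalue of a 1D soft-Coulomb H2+ model at fixed R (atomic units).

    Toy-model assumption: the electron feels -1/sqrt((x -/+ R/2)^2 + a) from each nucleus."""
    dx = x[1] - x[0]
    n = len(x)
    V = -1.0 / np.sqrt((x - R / 2) ** 2 + a) - 1.0 / np.sqrt((x + R / 2) ** 2 + a)
    # Kinetic energy -1/2 d^2/dx^2 via the three-point stencil, plus the potential
    H = (np.diag(np.full(n, 1.0 / dx**2) + V)
         - np.diag(np.full(n - 1, 0.5 / dx**2), 1)
         - np.diag(np.full(n - 1, 0.5 / dx**2), -1))
    return np.linalg.eigvalsh(H)[0]

x = np.linspace(-20.0, 20.0, 401)        # electronic grid (bohr)
R_values = np.linspace(0.8, 6.0, 27)     # fixed nuclear separations (bohr)

# Potential energy curve: electronic energy at each fixed R plus nuclear repulsion 1/R
E_total = np.array([electronic_energy(R, x) + 1.0 / R for R in R_values])
i_min = int(np.argmin(E_total))
print(f"approximate equilibrium R = {R_values[i_min]:.2f} bohr, "
      f"E = {E_total[i_min]:.4f} hartree")
```

Because this 1D model differs from the real three-dimensional molecule, the equilibrium distance and energy it returns should not be compared with experimental H₂⁺ values.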

Key Computational Methodologies

Several computational approaches have been developed to solve the Schrödinger equation approximately for molecular systems, each with different trade-offs between accuracy and computational cost:

Hartree-Fock (HF) Method: This foundational wave function-based approach approximates the many-electron wave function as a single Slater determinant, ensuring antisymmetry to satisfy the Pauli exclusion principle [4]. The HF method assumes each electron moves in the average field of all other electrons, simplifying the many-body problem through the self-consistent field (SCF) method. However, it neglects electron correlation, leading to inaccuracies in calculating binding energies and dispersion-dominated interactions crucial in drug discovery [4].
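The antisymmetry that a Slater determinant enforces can be checked numerically. This sketch assumes three spinless one-dimensional orbitals, the lowest harmonic-oscillator functions, chosen purely for convenience. In a real calculation they would be spin orbitals produced by the SCF procedure. The code evaluates Ψ(x₁, x₂, x₃) = det[φ_j(x_i)]/√(3!) and verifies that exchanging two electron coordinates flips the sign. It also shows that placing two electrons at the same point makes Ψ vanish, which is how the Pauli principle appears for same-spin electrons.

```python
import math
import numpy as np

# Assumption: three 1D harmonic-oscillator orbitals stand in for real (spin) orbitals
ORBITALS = [
    lambda x: np.pi ** -0.25 * np.exp(-x**2 / 2),
    lambda x: np.pi ** -0.25 * np.sqrt(2.0) * x * np.exp(-x**2 / 2),
    lambda x: np.pi ** -0.25 * (2 * x**2 - 1) / np.sqrt(2.0) * np.exp(-x**2 / 2),
]

def slater_determinant(orbitals, positions):
    """Normalized Slater determinant: rows are electrons, columns are orbitals."""
    n = len(orbitals)
    matrix = np.array([[phi(r) for phi in orbitals] for r in positions])
    return np.linalg.det(matrix) / math.sqrt(math.factorial(n))

r = [0.3, -0.7, 1.1]
psi = slater_determinant(ORBITALS, r)
psi_swapped = slater_determinant(ORBITALS, [r[1], r[0], r[2]])    # exchange electrons 1 and 2
psi_coincident = slater_determinant(ORBITALS, [0.3, 0.3, 1.1])    # two electrons at one point
print(psi, psi_swapped, psi_coincident)
```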

Density Functional Theory (DFT): DFT revolutionized quantum simulations by focusing on electron density (\rho(\mathbf{r})) rather than wave functions [7] [4]. Grounded in the Hohenberg-Kohn theorems, which state that electron density uniquely determines ground-state properties, DFT calculates total energy as:

[E[\rho] = T[\rho] + V_{ext}[\rho] + V_{ee}[\rho] + E_{xc}[\rho]]

where (T[\rho]) is the kinetic energy, (V_{ext}[\rho]) is the external potential energy (in molecules, chiefly electron-nuclear attraction), (V_{ee}[\rho]) is the classical electron-electron repulsion, and (E_{xc}[\rho]) is the exchange-correlation energy [4]. The exact form of (E_{xc}[\rho]) is unknown and must be approximated. Common choices include the local density approximation (LDA), generalized gradient approximations (GGA), and hybrid functionals. The Kohn-Sham formulation made DFT practically applicable to molecules and materials [7].
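The sketch below shows what an approximate exchange-correlation functional looks like in practice. It evaluates only the exchange part of the LDA, E_x ≈ -C_x ∫ρ(r)^{4/3} d³r with C_x = (3/4)(3/π)^{1/3}, which is the spin-unpolarized Dirac/Slater form. The density used is the exact hydrogen-atom density ρ(r) = e^{-2r}/π in atomic units. Two simplifying assumptions apply: correlation is omitted, and the spin-unpolarized form is applied to a one-electron atom.

```python
import numpy as np

C_X = 0.75 * (3.0 / np.pi) ** (1.0 / 3.0)   # Dirac/Slater exchange constant (spin-unpolarized)

def trapezoid(y, x):
    """Composite trapezoidal rule."""
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

def lda_exchange(rho, r):
    """LDA exchange energy (hartree) for a spherically symmetric density on a radial grid."""
    integrand = rho ** (4.0 / 3.0) * 4.0 * np.pi * r**2   # d^3r = 4 pi r^2 dr
    return -C_X * trapezoid(integrand, r)

r = np.linspace(0.0, 30.0, 30001)             # radial grid (bohr)
rho = np.exp(-2.0 * r) / np.pi                # hydrogen 1s density
n_electrons = trapezoid(rho * 4.0 * np.pi * r**2, r)
E_x = lda_exchange(rho, r)
print(f"electrons = {n_electrons:.4f}, LDA exchange = {E_x:.4f} hartree")
```

The result is about -0.213 hartree, or roughly -0.268 hartree with the spin-polarized form. Both are noticeably smaller in magnitude than the exact exchange energy of the hydrogen atom, -5/16 = -0.3125 hartree, which exactly cancels the electron's spurious self-repulsion. This small example shows why the choice and quality of the functional matter.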

Coupled-Cluster Theory (CCSD(T)): Considered the "gold standard" of quantum chemistry, CCSD(T) provides highly accurate results at tremendous computational cost. Its cost grows roughly as the seventh power of system size. Doubling the number of electrons therefore makes a calculation about 100 times more expensive (2^7 = 128), which has traditionally limited the method to small molecules (~10 atoms) [8].

Table 2: Computational Quantum Chemistry Methods

| Method | Theoretical Basis | Computational Scaling | Strengths | Limitations |
|---|---|---|---|---|
| Hartree-Fock (HF) | Wave function (Single determinant) | O(N⁴) | Foundation for correlated methods; physically intuitive | Neglects electron correlation; poor for dispersion forces |
| Density Functional Theory (DFT) | Electron density | O(N³) | Good accuracy/cost balance; widely applicable | Accuracy depends on exchange-correlation functional |
| Coupled-Cluster (CCSD(T)) | Wave function (Correlated) | O(N⁷) | Gold standard accuracy; reliable for diverse systems | Prohibitively expensive for large systems |
| Multiconfiguration Pair-DFT (MC-PDFT) | Hybrid: Wave function + Density | Varies | Handles strongly correlated systems; improved accuracy | Relatively new; fewer validated functionals |
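The arithmetic behind the "100-fold" figure follows directly from the scaling exponents in Table 2. If cost is proportional to N^p, then multiplying the system size by a factor k multiplies the cost by k^p. This sketch assumes the formal exponents hold exactly. Real implementations only approximate them, because prefactors, sparsity, and integral screening all matter.

```python
# Formal scaling exponents as listed in Table 2 (assumed exact for this illustration)
SCALING_EXPONENTS = {"HF": 4, "DFT": 3, "CCSD(T)": 7}

def cost_ratio(exponent, size_factor=2.0):
    """Relative cost after scaling the system size by size_factor, for cost ~ N**exponent."""
    return size_factor ** exponent

for method, p in SCALING_EXPONENTS.items():
    print(f"{method:8s} doubling N multiplies cost by {cost_ratio(p):5.0f}; "
          f"10x N multiplies it by {cost_ratio(p, 10.0):.0e}")
```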

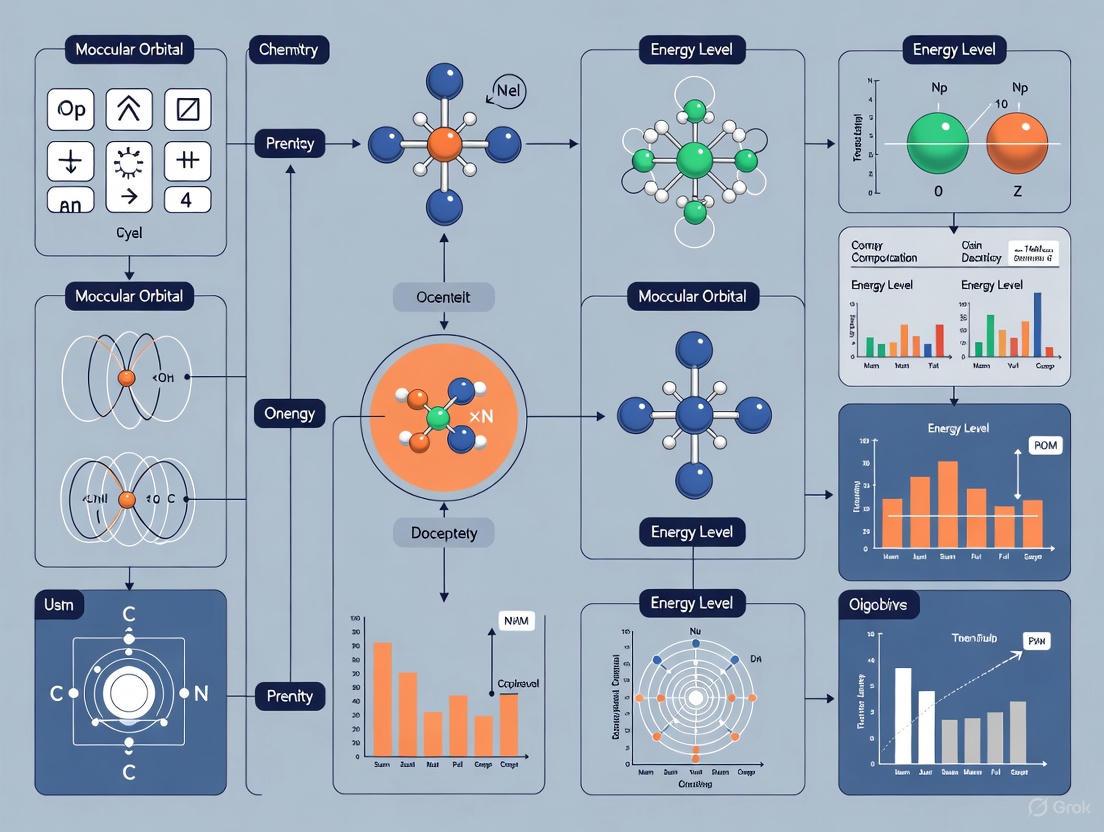

Computational Quantum Chemistry Evolution: From fundamental equation to practical applications through key methodological developments.

Applications in Drug Discovery and Materials Design

Quantum Mechanics in Modern Drug Development

Quantum mechanics has revolutionized drug discovery by providing precise molecular insights unattainable with classical methods [9] [4]. QM approaches model electronic structures, binding affinities, and reaction mechanisms, significantly enhancing structure-based and fragment-based drug design [4]. Specific applications include:

- Small-molecule kinase inhibitors: QM calculations provide accurate molecular orbitals and binding energy predictions for optimizing drug candidates [4].

- Metalloenzyme inhibitors: QM/MM methods model the complex electronic structures of metal-containing active sites in enzymes [10].

- Covalent inhibitors: QM predicts reaction mechanisms and energy barriers for covalent bond formation between drugs and targets [4].

- Fragment-based leads: QM evaluates fragment binding and helps optimize weak binders into potent drugs [4].

The expansion of chemical space to libraries containing billions of synthesizable molecules presents both opportunities and challenges for quantum mechanical methods. These methods deliver chemically accurate properties but have traditionally been limited to small systems [10].

Advanced Materials Design

Quantum chemistry simulations enable researchers to understand and predict material behavior at the molecular level, crucial for designing better materials, creating new medicines, and solving environmental challenges [7]. Recent advances allow modeling of transition metal complexes, catalytic processes, quantum phenomena, and light-matter interactions with unprecedented accuracy [7]. These capabilities are particularly valuable for developing battery materials, semiconductor devices, and novel polymers [8].

Recent Advances and Future Directions

Machine Learning Acceleration

A groundbreaking development in computational quantum chemistry comes from MIT researchers who have created a neural network architecture that dramatically accelerates quantum chemical calculations [8]. Their "Multi-task Electronic Hamiltonian network" (MEHnet) utilizes an E(3)-equivariant graph neural network where nodes represent atoms and edges represent bonds, incorporating physics principles directly into the model [8]. This approach can extract extensive information about a molecule from a single model—including dipole and quadrupole moments, electronic polarizability, and optical excitation gaps—while achieving CCSD(T)-level accuracy at computational speeds feasible for molecules with thousands of atoms, far beyond traditional CCSD(T) limits [8].

Innovative Theoretical Methods

Professor Laura Gagliardi and colleagues have developed multiconfiguration pair-density functional theory (MC-PDFT), which combines wave function theory and density functional theory to handle both weakly and strongly correlated systems [7]. Their latest functional, MC23, incorporates kinetic energy density for more accurate electron correlation description, enabling high-accuracy studies of complex systems like transition metal complexes, bond-breaking processes, and excited states at lower computational cost than traditional wave-function methods [7].

Quantum Computing Integration

The emerging field of quantum computing holds promise to exponentially accelerate quantum mechanical calculations, potentially solving classically intractable quantum chemistry problems [9] [4]. Research is actively exploring how quantum algorithms can simulate molecular systems more efficiently, with projections suggesting transformative impacts on drug discovery and materials science by 2030-2035, particularly for personalized medicine and previously "undruggable" targets [9] [4].

Table 3: Emerging Techniques in Quantum Chemistry

| Technique | Key Innovation | Potential Impact | Current Status |
|---|---|---|---|
| ML-accelerated CCSD(T) | Graph neural networks trained on quantum data | CCSD(T) accuracy for thousands of atoms vs. tens | Demonstrated for hydrocarbons [8] |
| MC-PDFT (MC23) | Combines multiconfigurational wave function with DFT | Accurate treatment of strongly correlated systems | Validated for transition metal complexes [7] |
| Quantum Computing | Quantum algorithms for electronic structure | Exponential speedup for exact solutions | Early development; hardware limitations [9] |
| Multi-task Learning | Single model predicts multiple molecular properties | Unified framework for molecular design | MEHnet demonstrates feasibility [8] |

Experimental Protocols: QM/MM for Drug Discovery

QM/MM Methodology for Protein-Ligand Binding

The QM/MM (Quantum Mechanics/Molecular Mechanics) approach has become a standard protocol for studying biochemical systems, combining quantum mechanical accuracy for the reactive region with molecular mechanics efficiency for the biomolecular environment [4]. The detailed methodology includes:

System Preparation:

- Obtain the protein-ligand complex structure from crystallography, NMR, or homology modeling.

- Partition the system into QM and MM regions, typically with the ligand and key active site residues (e.g., catalytic amino acids, metal cofactors) in the QM region.

- Apply appropriate protonation states for all residues based on physiological pH and local environment.

- Solvate the system in a water box and add counterions to neutralize charge.
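The QM region is often chosen by distance: residues with any atom within a chosen cutoff of the ligand go into the QM region. The sketch below is a hypothetical illustration. The residue labels, the coordinates (in ångström), and the 4.0 Å cutoff are all invented for the example. Real workflows read coordinates from a structure file and also apply chemical judgment, for instance about where to cut covalent bonds.

```python
import numpy as np

def select_qm_residues(ligand_xyz, residues, cutoff=4.0):
    """Return labels of residues with any atom within `cutoff` (angstrom) of any ligand atom."""
    selected = []
    for label, xyz in residues.items():
        # Pairwise distances, shape (n_residue_atoms, n_ligand_atoms)
        d = np.linalg.norm(xyz[:, None, :] - ligand_xyz[None, :, :], axis=-1)
        if d.min() <= cutoff:
            selected.append(label)
    return selected

# Hypothetical toy coordinates (angstrom); labels are placeholders, not a real protein
ligand = np.array([[0.0, 0.0, 0.0], [1.5, 0.0, 0.0]])
residues = {
    "HIS_1": np.array([[3.0, 1.0, 0.0], [4.0, 2.0, 0.5]]),
    "SER_2": np.array([[0.0, 3.5, 0.0]]),
    "ASP_3": np.array([[-4.5, 0.0, 0.0]]),
    "GLY_4": np.array([[8.0, 8.0, 8.0]]),
}
qm_region = select_qm_residues(ligand, residues)
print(qm_region)
```

Re-running the selection with several cutoffs is one simple way to carry out the sensitivity analysis on QM region choice that appears under Validation.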

Computational Setup:

- Select QM method (typically DFT with hybrid functional like B3LYP for organic molecules, or specialized functionals for metal complexes) and basis set (6-31G* for initial optimization, larger for final calculations).

- Choose MM force field (AMBER, CHARMM, or OPLS-AA compatible with QM region).

- Define QM/MM boundary using link atoms or pseudopotentials to handle covalent bonds crossing regions.

- Implement electrostatic embedding to include MM partial charges in the QM Hamiltonian.

Calculation Workflow:

- Perform geometry optimization of the QM region with fixed MM coordinates.

- Conduct conformational sampling using molecular dynamics in the MM region.

- Calculate the binding free energy through free energy perturbation or thermodynamic integration.

- Analyze electronic properties (charge transfer, orbital interactions) from the QM wavefunction.

Validation:

- Compare results with experimental binding affinities (IC₅₀, Kᵢ values).

- Validate computational models against spectroscopic data when available.

- Perform sensitivity analysis on QM region selection and method choices.

QM/MM Protocol for Drug Binding: Stepwise computational approach combining quantum and classical mechanics.
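Computed binding free energies must be put on the same scale as measured affinities before they can be compared. The sketch below shows the usual conversion, ΔG = RT ln K. It treats K as a dissociation constant in molar units relative to a 1 M standard state. It also applies the Cheng-Prusoff relation Ki = IC₅₀/(1 + [S]/Km), which holds for a competitive inhibitor. The assay values are hypothetical and were chosen only to make the arithmetic easy to follow.

```python
import math

R_KCAL = 1.987204e-3  # gas constant in kcal/(mol*K)

def cheng_prusoff_ki(ic50, substrate_conc, km):
    """Ki from IC50 for a competitive inhibitor: Ki = IC50 / (1 + [S]/Km). All in molar."""
    return ic50 / (1.0 + substrate_conc / km)

def binding_free_energy(k_molar, temperature=298.15):
    """Delta G = RT ln K in kcal/mol, with K a dissociation constant vs a 1 M standard state."""
    return R_KCAL * temperature * math.log(k_molar)

# Hypothetical assay values: IC50 = 2 nM measured at [S] = Km = 10 uM
ki = cheng_prusoff_ki(ic50=2e-9, substrate_conc=10e-6, km=10e-6)
dg = binding_free_energy(ki)
print(f"Ki = {ki:.1e} M, Delta G = {dg:.2f} kcal/mol")
```

A 1 nM inhibitor therefore corresponds to roughly -12.3 kcal/mol. At room temperature, each tenfold change in K shifts ΔG by about 1.36 kcal/mol, a useful yardstick for judging whether a computed binding free energy agrees with experiment.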

Table 4: Essential Computational Tools in Quantum Chemistry

| Tool/Resource | Type | Primary Function | Application in Research |
|---|---|---|---|
| Gaussian | Software Suite | Electronic structure calculations | DFT, HF, post-HF methods for molecular properties [9] |
| Qiskit | Programming Library | Quantum algorithm development | Implementing quantum computing solutions for chemistry [9] |
| MEHnet | Neural Network | Multi-task molecular property prediction | Rapid calculation of multiple electronic properties [8] |
| MC-PDFT (MC23) | Theoretical Method | Strongly correlated electron systems | Transition metal complexes, bond breaking [7] |
| CCSD(T) | Computational Method | High-accuracy quantum chemistry | Gold standard reference calculations [8] |
| Matlantis | Atomistic Simulator | High-speed molecular simulation | Training machine learning models [8] |

Methodological Approaches

The modern quantum chemist's toolkit extends beyond software to specialized methodological approaches tailored to specific research challenges. The Fragment Molecular Orbital (FMO) method decomposes large biomolecules into fragments, making QM treatment of entire proteins feasible [4]. Linear scaling methods reduce computational complexity for large systems, while embedding techniques like QM/MM balance accuracy and efficiency for complex biological environments [10] [4]. Machine learning potentials trained on QM data promise to preserve quantum accuracy while running at molecular dynamics speeds. Recent neural network architectures that extract maximal information from expensive quantum calculations demonstrate this approach [8].

The Schrödinger equation remains the indispensable foundation of quantum chemistry, nearly a century after its formulation. From its origins in fundamental quantum mechanics research, it has spawned an entire discipline of computational chemistry. That discipline continues to evolve through methodological innovations such as density functional theory, quantum mechanics/molecular mechanics hybrids, and machine learning acceleration. As computational power grows and algorithms improve, quantum chemical principles are applied ever more widely to drug discovery and materials design, enabling researchers to probe molecular interactions with unprecedented accuracy. The ongoing integration of new computational paradigms, including quantum computing and machine learning, suggests that the Schrödinger equation will continue to drive scientific discovery. It may help address challenges, from personalized medicine to renewable energy, that were unimaginable when it was first formulated.

The field of computational chemistry has its origins in the late 1920s, when theoretical physicists began the first serious attempts to solve the Schrödinger equation for chemical systems using mechanical computation. Following the establishment of quantum mechanics, these pioneers faced the formidable challenge of solving the many-body Schrödinger equation without the aid of electronic computers. Their work, focused on validating quantum mechanics against experimental observations for simple atomic and molecular systems, established the foundational methodologies that would evolve into modern computational chemistry. These early efforts, constrained to systems with just one or two atoms, demonstrated that numerical solutions to the Schrödinger equation could quantitatively reproduce experimentally observed features, providing crucial verification of quantum theory and setting the stage for future computational advances [11].

The emergence of this field represented a fundamental shift from purely theoretical analysis to numerical computation. While the Schrödinger equation provided a complete theoretical description, analytical solutions were impossible for all but the simplest systems. This forced researchers to develop approximate numerical methods that could be executed with the limited computational tools available—primarily hand-cranked calculating machines and human computers. The success in reproducing the properties of helium atoms and hydrogen molecules established computational chemistry as a legitimate scientific discipline, one that would eventually transform how chemists understand molecular structure, spectra, and reactivity [11].

Historical Context and Motivation

The Computational Landscape of Early Quantum Mechanics

In the period following the 1926 publication of the Schrödinger equation, the theoretical framework for quantum mechanics was complete, but its practical application to chemical systems remained limited. The immediate challenge was mathematical—the Schrödinger equation for any system beyond hydrogen-like atoms presented insurmountable analytical difficulties. This mathematical barrier motivated the development of numerical approaches, despite the enormous computational effort required [11].

The first electronic computers would not be invented until the Second World War, and would not become available for general scientific use until the post-war decade. Consequently, researchers in the late 1920s and 1930s relied on hand-cranked mechanical calculators and human-intensive computation methods. Each calculation required tremendous manual effort, with teams of human "computers" (often women mathematicians) working in shifts to perform the tedious numerical work. This labor-intensive process necessarily limited the scope of problems that could be tackled, focusing attention on the simplest possible systems that could still provide meaningful verification of quantum theory [11].

Table: Key Historical Developments in Early Computational Chemistry

| Year | Development | Significance |
|---|---|---|
| 1926 | Schrödinger equation published | Provided theoretical foundation for quantum chemistry |
| 1928 | First attempts to solve the Schrödinger equation using hand-cranked calculators | Marked the birth of computational chemistry as an empirical practice |
| 1933 | James and Coolidge explicit r₁₂ calculations for H₂ | Improved accuracy for hydrogen molecule calculations |
| 1940s | First electronic computers built during and after the Second World War | Enabled more complex calculations but not yet widely available |
| 1960 | Kolos and Roothaan improved H₂ calculations | Set the stage for high-accuracy computational chemistry |

Theoretical Foundations

The entire enterprise of early computational chemistry rested on the Schrödinger wave equation, which describes the time evolution of a quantum mechanical system. For a single particle with mass m and position r moving under the influence of a potential V(r), the time-dependent Schrödinger equation reads [11]:

[ i\hbar\frac{\partial}{\partial t}\psi(r,t) = H\psi(r,t) ]

where H represents the linear Hermitian Hamiltonian operator:

[ H = -\frac{\hbar^2}{2m}\nabla^2 + V(r) ]

Here, ħ is Planck's constant divided by 2π. The wavefunction ψ is generally complex, and its amplitude squared |ψ|² provides the probability distribution for the position of the particle at time t [11].

For chemical systems, the challenge was adapting this framework to many mutually interacting particles, particularly electrons experiencing Coulombic interactions. In the strictly nonrelativistic regime, electron spins could be formally eliminated from the mathematical problem, provided the spatial wavefunction satisfied appropriate symmetry conditions. For two-electron systems such as the helium atom or the hydrogen molecule, the spatial wavefunction had to be symmetric under interchange of the electron positions when the spins were paired (singlet). When the spins were parallel (triplet), it had to be antisymmetric [11].

Pioneering Computational Experiments

The Hydrogen Molecule: A Test Case for Quantum Chemistry

The hydrogen molecule (H₂) served as the critical test case for early computational chemistry. In 1927, Walter Heitler and Fritz London published what is often recognized as the first milestone in quantum chemistry, applying quantum mechanics to the dihydrogen molecule and thus to the phenomenon of the chemical bond [12]. Their work demonstrated that quantum mechanics could quantitatively explain covalent bonding, a fundamental chemical phenomenon that lacked satisfactory explanation within classical physics.

The Heitler-London approach was subsequently extended by Slater and Pauling to become the valence-bond (VB) method, which focused on pairwise interactions between atoms and correlated closely with classical chemical bonding concepts. This method incorporated two key concepts: orbital hybridization and resonance, providing a theoretical framework that aligned well with chemists' intuitive understanding of bonds [12]. An alternative approach developed in 1929 by Friedrich Hund and Robert S. Mulliken—the molecular orbital (MO) method—described electrons using mathematical functions delocalized over entire molecules. Though less intuitive to chemists, the MO method ultimately proved more capable of predicting spectroscopic properties [12].

Methodology: Computational Approaches for Simple Systems

Early researchers employed several computational strategies to overcome the limitations of their calculating machines:

The Matching Method

The matching method was particularly useful for asymmetric potential systems. The approach involved generating two separate wavefunctions—one from the left boundary and one from the right boundary of the potential—then adjusting the energy guess until these solutions matched smoothly at an interior point [13].

The process began with an initial energy guess, then computed wavefunctions using the finite difference approximation of the Schrödinger equation:

[ \psi_{i+1} \approx \left(2-\frac{2m(E-V_i)(\Delta x)^2}{\hbar^2}\right)\psi_i - \psi_{i-1} ]

Separate initial conditions were applied for even- and odd-parity solutions. The algorithm compared the slopes of the two solutions at the matching point and adjusted the energy until they joined smoothly [13]. This method allowed researchers to find eigenstates and home in on eigenenergies without excessive computational overhead.
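The matching procedure can be sketched with the finite-difference recurrence given above. This version assumes ħ = m = 1, a harmonic potential V = x²/2 (exact ground-state energy 0.5), and ψ = 0 at both ends of the grid. It does not compare slopes directly. Instead it evaluates the Wronskian psi_L' psi_R - psi_R' psi_L at the matching point, an equivalent statement of the smooth-joining condition that stays finite when ψ passes through zero. It then bisects on the energy until the Wronskian changes sign. The grid, matching point, and energy bracket are illustrative assumptions.

```python
import numpy as np

def shoot(E, V, dx, from_right=False):
    """Integrate psi[i+1] = (2 - 2(E - V[i]) dx^2) psi[i] - psi[i-1]  (hbar = m = 1).

    Starts from psi = 0 at one end of the grid, so the boundary condition on
    that side is satisfied by construction."""
    v = V[::-1] if from_right else V
    psi = np.zeros(len(v))
    psi[1] = 1e-5                      # arbitrary small starting amplitude
    for i in range(1, len(v) - 1):
        psi[i + 1] = (2.0 - 2.0 * (E - v[i]) * dx**2) * psi[i] - psi[i - 1]
    return psi[::-1] if from_right else psi

def wronskian_mismatch(E, V, dx, m):
    """psi_L' psi_R - psi_R' psi_L at index m; zero when the two solutions join smoothly."""
    left, right = shoot(E, V, dx), shoot(E, V, dx, from_right=True)
    d_left = (left[m + 1] - left[m - 1]) / (2.0 * dx)
    d_right = (right[m + 1] - right[m - 1]) / (2.0 * dx)
    return d_left * right[m] - d_right * left[m]

def find_eigenvalue(V, dx, m, e_lo, e_hi, tol=1e-9):
    """Bisect on E; [e_lo, e_hi] must bracket exactly one eigenvalue."""
    f_lo = wronskian_mismatch(e_lo, V, dx, m)
    if np.sign(f_lo) == np.sign(wronskian_mismatch(e_hi, V, dx, m)):
        raise ValueError("energy bracket does not contain a sign change")
    while e_hi - e_lo > tol:
        e_mid = 0.5 * (e_lo + e_hi)
        f_mid = wronskian_mismatch(e_mid, V, dx, m)
        if np.sign(f_mid) == np.sign(f_lo):
            e_lo, f_lo = e_mid, f_mid
        else:
            e_hi = e_mid
    return 0.5 * (e_lo + e_hi)

# Illustrative assumptions: harmonic potential, box [-6, 6], matching point near x = 0.3
x = np.linspace(-6.0, 6.0, 2001)
dx = x[1] - x[0]
V = 0.5 * x**2
m = int(np.argmin(np.abs(x - 0.3)))
E0 = find_eigenvalue(V, dx, m, 0.3, 0.7)
print(f"ground-state energy = {E0:.5f}")
```

The energy bracket must enclose exactly one eigenvalue. Excited states can be found by choosing other brackets, for example one around 1.5 for the first excited state.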

Variational Methods

The Rayleigh-Ritz variational principle provided another crucial approach, stating that the expectation value of the Hamiltonian for any trial wavefunction ψ must be greater than or equal to the true ground state energy:

[ E[\psi] = \frac{\langle\psi|H|\psi\rangle}{\langle\psi|\psi\rangle} \geq E_0 ]

This allowed researchers to propose parameterized trial wavefunctions and systematically improve them by minimizing the energy expectation value. The variational approach was particularly valuable because it provided an upper bound on the ground state energy, giving a clear indicator of progress toward better solutions [14].
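A classic hand-calculation example of the variational principle is the helium atom. Both electrons are placed in hydrogen-like 1s orbitals that share an adjustable effective nuclear charge ζ. Using the standard integrals for this trial function, the energy in hartree is E(ζ) = ζ² - 2Zζ + (5/8)ζ. The sketch below minimizes this expression numerically with a golden-section search, an illustrative choice of optimizer rather than a historical one. It then compares the result with the accurately known nonrelativistic ground-state energy.

```python
import math

def helium_energy(zeta, Z=2.0):
    """<H> in hartree for a 1s^2 trial wavefunction with effective exponent zeta."""
    kinetic = zeta**2                 # two electrons, zeta^2 / 2 each
    nuclear = -2.0 * Z * zeta         # electron-nucleus attraction
    repulsion = 5.0 * zeta / 8.0      # electron-electron repulsion <1/r12>
    return kinetic + nuclear + repulsion

def golden_section_minimize(f, a, b, tol=1e-8):
    """Minimize a unimodal function f on [a, b] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    while b - a > tol:
        if f(c) < f(d):
            b = d
        else:
            a = c
        c, d = b - inv_phi * (b - a), a + inv_phi * (b - a)
    return 0.5 * (a + b)

zeta_opt = golden_section_minimize(helium_energy, 1.0, 2.5)
E_opt = helium_energy(zeta_opt)
E_exact = -2.903724   # nonrelativistic He ground-state energy (hartree)
print(f"zeta = {zeta_opt:.4f}, E_var = {E_opt:.4f}, exact = {E_exact:.4f}")
```

The optimum ζ = 27/16 ≈ 1.69 is smaller than Z = 2 because each electron partially screens the nucleus from the other. The variational energy of about -2.848 hartree lies above the true value of about -2.904 hartree, as the principle guarantees.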

Key Results and Experimental Verification

The painstaking computational work on simple systems produced remarkable agreement with experimental observations, providing crucial validation of quantum mechanics. A classic example comes from the work of W. Kolos and L. Wolniewicz in the 1960s. They performed a sequence of increasingly accurate calculations on the hydrogen molecule, using explicit r₁₂ terms that had been introduced by James and Coolidge in 1933 [11].

Their most refined calculations revealed a discrepancy with the experimentally derived dissociation energy of H₂. When all known corrections were included, their best estimate showed a difference of 3.8 cm⁻¹ from the accepted experimental value. This theoretical prediction prompted experimentalists to reexamine the system, leading to a new spectrum with better resolution and a revised assignment of vibrational quantum numbers in the upper electronic state published in 1970. The new experimental results fell within experimental uncertainty of the theoretical calculations, demonstrating the growing power of computational chemistry to not just explain but predict and correct experimental findings [11].

Table: Evolution of Hydrogen Molecule Calculations

| Researchers | Year | Method | System Size | Key Achievement |
|---|---|---|---|---|
| Heitler & London | 1927 | Valence Bond | H₂ molecule | First quantum mechanical explanation of covalent bond |
| James & Coolidge | 1933 | Explicit r₁₂ | H₂ molecule | Improved accuracy for hydrogen molecule |
| Kolos & Roothaan | 1960 | Improved basis sets | H₂ molecule | Higher precision calculations |
| Kolos & Wolniewicz | 1968 | High-accuracy | H₂ molecule | Identified discrepancy in dissociation energy |

The computational chemists of the early quantum era worked with a minimal but carefully designed set of mathematical tools and physical concepts. Their "toolkit" reflected both the theoretical necessities of quantum mechanics and the practical constraints of pre-electronic computation.

Theoretical and Computational Tools

Schrödinger Equation: The fundamental governing equation for all non-relativistic quantum systems, providing the mathematical framework for calculating system properties and dynamics [11].

Hand-Cranked Calculating Machines: Mechanical devices capable of performing basic arithmetic operations (addition, subtraction, multiplication, division) through manual cranking. These were the primary computational hardware available before electronic computers [11].

Variational Principle: A mathematical method for approximating ground states by minimizing the energy functional, valuable because it provided upper bounds to true energies and thus a clear metric for improvement [14].

Perturbation Theory: A systematic approach for approximating solutions to complex quantum problems by starting from exactly solvable simpler systems and adding corrections [15].

Slater Determinants: Antisymmetrized products of one-electron wavefunctions used to represent multiparticle systems in a way that satisfied the Pauli exclusion principle [15].

Born-Oppenheimer Approximation: The separation of electronic and nuclear motion based on mass disparity, crucial for making molecular calculations tractable by focusing initially on electronic structure with fixed nuclei [12].
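Perturbation theory, one of the tools listed above, can be illustrated with a case where every quantity is easy to check. Take a one-dimensional harmonic oscillator (ħ = m = ω = 1) perturbed by λx⁴. The first-order energy is E₀ + ⟨ψ₀|λx⁴|ψ₀⟩ = 1/2 + 3λ/4. The sketch computes that correction by numerical quadrature and compares it with a direct finite-difference diagonalization of the full Hamiltonian. The value λ = 0.01 and the grid are illustrative assumptions.

```python
import numpy as np

lam = 0.01                               # assumed perturbation strength
x = np.linspace(-8.0, 8.0, 801)
dx = x[1] - x[0]

# Exactly solvable reference: H0 = p^2/2 + x^2/2, ground state psi0 with E0 = 1/2
psi0 = np.pi ** -0.25 * np.exp(-x**2 / 2)
E0 = 0.5

# First-order correction E1 = <psi0| lam x^4 |psi0> by simple quadrature
E1 = float(np.sum(psi0**2 * lam * x**4) * dx)
E_first_order = E0 + E1

# Reference: diagonalize H = H0 + lam x^4 on the same grid (three-point kinetic stencil)
n = len(x)
T = (np.diag(np.full(n, 1.0))
     - np.diag(np.full(n - 1, 0.5), 1)
     - np.diag(np.full(n - 1, 0.5), -1)) / dx**2
H = T + np.diag(0.5 * x**2 + lam * x**4)
E_exact = np.linalg.eigvalsh(H)[0]
print(f"E1 = {E1:.6f}, first order = {E_first_order:.6f}, diagonalized = {E_exact:.6f}")
```

The first-order estimate lies slightly above the diagonalized value. The gap is close to the known second-order term, -(21/8)λ², which is about -2.6 × 10⁻⁴ for this λ.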

Methodological Framework: Experimental Protocols

The computational experiments performed during this pioneering era followed systematic methodologies designed to extract maximum information from limited computational resources.

Protocol for Molecular Structure Calculation

The general workflow for calculating molecular structure and energies followed a well-defined sequence:

System Selection and Simplification: Researchers identified simple systems (1-2 atoms) that captured essential physics while remaining computationally tractable. The hydrogen molecule and helium atom were ideal test cases [11].

Hamiltonian Formulation: The appropriate molecular Hamiltonian was constructed, including all relevant kinetic energy terms and potential energy contributions (electron-electron repulsion, electron-nuclear attraction, nuclear-nuclear repulsion) [11] [12].

Basis Set Selection: For wavefunction-based methods, appropriate mathematical basis functions were selected. Early calculations often used Slater-type orbitals or similar functions, which reproduce both the cusp of the electron wavefunction at a nucleus and its exponential decay far from the nuclei [11].

Wavefunction Ansatz: An appropriate form for the trial wavefunction was chosen, incorporating fundamental physical principles like the Pauli exclusion principle through antisymmetrization requirements [15].

Energy Computation: Using the variational principle, the energy expectation value was computed as:

\[ E = \frac{\langle \psi | H | \psi \rangle}{\langle \psi | \psi \rangle} \]

This involved computing numerous multidimensional integrals using numerical methods amenable to hand calculation [13].

Parameter Optimization: Parameters in the trial wavefunction were systematically varied to minimize the energy expectation value, yielding the best approximation to the true wavefunction within the chosen ansatz [14].

Property Calculation: Once an optimized wavefunction was obtained, other properties (bond lengths, dissociation energies, spectral transitions) could be computed and compared with experimental data [11].
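The Energy Computation and Parameter Optimization steps can be illustrated with the textbook variational treatment of the helium atom, sketched below in Python. It assumes the standard trial function exp(−ζ(r₁ + r₂)) with a single effective nuclear charge ζ, whose energy has the closed form E(ζ) = ζ² − 2Zζ + (5/8)ζ hartree; a golden-section search stands in for the systematic variation of parameters that was once done by hand. Setting ζ = Z reproduces the first-order perturbation-theory energy, so the sketch also shows the variational optimum improving on it while staying above the accurate reference value.

```python
import math

Z = 2  # nuclear charge of helium

def trial_energy(zeta, Z=Z):
    """Energy (hartree) of the trial function exp(-zeta*(r1 + r2)) for a two-electron atom.

    Standard closed form: kinetic zeta**2, nuclear attraction -2*Z*zeta,
    electron-electron repulsion (5/8)*zeta.
    """
    return zeta**2 - 2 * Z * zeta + 5 * zeta / 8

def golden_section_min(f, lo, hi, tol=1e-10):
    """Minimise a unimodal function on [lo, hi] by golden-section search."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    while b - a > tol:
        if f(c) < f(d):          # minimum lies in [a, d]
            b, d = d, c
            c = b - g * (b - a)
        else:                    # minimum lies in [c, b]
            a, c = c, d
            d = a + g * (b - a)
    return (a + b) / 2

zeta_opt = golden_section_min(trial_energy, 0.5, 3.0)
e_var = trial_energy(zeta_opt)
e_pert = trial_energy(Z)        # zeta = Z gives the first-order perturbation result
E_EXACT = -2.903724             # accurate nonrelativistic He ground-state energy

print(f"zeta = {zeta_opt:.6f} (analytic Z - 5/16 = {Z - 5/16})")
print(f"first-order perturbation: {e_pert:.4f} Eh")
print(f"variational:              {e_var:.4f} Eh")
print(f"reference:                {E_EXACT:.4f} Eh")
```

Minimizing analytically gives ζ = Z − 5/16 = 1.6875 and E = −(27/16)² ≈ −2.8477 hartree, which lies above the reference energy, as the variational principle guarantees.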

Mathematical and Computational Techniques

The heart of early computational chemistry lay in the mathematical approximations that made solutions tractable:

Basis Set Expansion

The expansion of molecular orbitals as linear combinations of basis functions:

\[ \phi_i(1) = \sum_{\mu=1}^{K} c_{\mu i}\, \chi_\mu(1) \]

This approach transformed the problem of determining continuous functions into the more tractable problem of determining expansion coefficients [15].
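With a basis of only K = 2 functions, the expansion turns the search for an orbital into a 2 × 2 generalized eigenvalue (secular) problem, HC = SCE, where H is the Hamiltonian matrix over the basis functions and S their overlap matrix. The Python sketch below solves it in closed form for a homonuclear diatomic in a minimal basis. The values of α (diagonal element), β (off-diagonal element), and the overlap are hypothetical numbers in hartree, chosen only to show the bonding/antibonding pattern E = (α ± β)/(1 ± S).

```python
import math

def solve_2x2_secular(H, S):
    """Solve H c = E S c for a symmetric 2x2 H and overlap matrix S.

    Returns [(energy, (c1, c2)), ...] sorted by energy, with coefficients
    normalised so that c^T S c = 1. Assumes the first row of H - E S is not
    identically zero (true unless the two roots are degenerate).
    """
    (h11, h12), (_, h22) = H
    (s11, s12), (_, s22) = S
    # det(H - E S) = 0 expands to the quadratic a E^2 + b E + c = 0
    a = s11 * s22 - s12**2
    b = -(h11 * s22 + h22 * s11) + 2 * h12 * s12
    c = h11 * h22 - h12**2
    disc = math.sqrt(max(0.0, b * b - 4 * a * c))
    roots = sorted([(-b - disc) / (2 * a), (-b + disc) / (2 * a)])
    solutions = []
    for e in roots:
        # First row of (H - E S) c = 0 fixes the ratio c1 : c2
        c1, c2 = h12 - e * s12, -(h11 - e * s11)
        norm = math.sqrt(s11 * c1**2 + 2 * s12 * c1 * c2 + s22 * c2**2)
        solutions.append((e, (c1 / norm, c2 / norm)))
    return solutions

alpha, beta, overlap = -0.5, -0.4, 0.45   # hypothetical matrix elements (hartree)
H = [[alpha, beta], [beta, alpha]]
S = [[1.0, overlap], [overlap, 1.0]]
for energy, coeffs in solve_2x2_secular(H, S):
    print(f"E = {energy:.4f}  c = ({coeffs[0]:.4f}, {coeffs[1]:.4f})")
```

The lower root has equal coefficients (bonding), the upper root has coefficients of opposite sign (antibonding). For realistic basis sets the same problem is usually handed to a generalized symmetric eigensolver, for example `scipy.linalg.eigh(H, S)`.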

The Self-Consistent Field Method

For many-electron systems, the Hartree-Fock method implemented through a self-consistent field procedure provided the first realistic approach to molecular electronic structure:

- An initial guess was made for the molecular orbitals

- The Fock operator was constructed using these orbitals

- The Hartree-Fock equations were solved for new orbitals

- The process was repeated until convergence, when the input and output orbitals became self-consistent [15]

This iterative approach was computationally demanding: early atomic SCF calculations were carried out by hand, and molecular applications became practical only once electronic computers were available.
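The loop in the bullets above can be sketched on a deliberately tiny model. The Python below is a toy under stated assumptions, not an ab initio molecular calculation: it applies restricted Hartree-Fock to a hypothetical two-site Hubbard model with two electrons (site energies `eps`, hopping `t`, on-site repulsion `U`), for which the Fock matrix depends only on the site densities, F_ii = eps_i + U·n_i/2 and F_12 = t. The damping (mixing old and new densities) is a common convergence aid added here, not part of the description above.

```python
import math

def lowest_eigenpair_2x2(a, b, d):
    """Lowest eigenvalue and normalised eigenvector of [[a, b], [b, d]] (requires b != 0)."""
    lam = (a + d) / 2 - math.sqrt(((a - d) / 2) ** 2 + b * b)
    v1, v2 = b, lam - a
    norm = math.hypot(v1, v2)
    return lam, (v1 / norm, v2 / norm)

def scf_hubbard_dimer(eps=(0.0, 1.0), t=-1.0, U=2.0, tol=1e-10, max_iter=200, mix=0.5):
    """Restricted HF SCF for a hypothetical two-site Hubbard model with two electrons."""
    n = [1.0, 1.0]                                      # initial guess: one electron per site
    for iteration in range(1, max_iter + 1):
        f11 = eps[0] + U * n[0] / 2                     # build the Fock matrix from current density
        f22 = eps[1] + U * n[1] / 2
        energy, (c1, c2) = lowest_eigenpair_2x2(f11, t, f22)  # solve for the new orbital
        n_new = [2 * c1 * c1, 2 * c2 * c2]              # doubly occupy the lowest orbital
        change = max(abs(x - y) for x, y in zip(n_new, n))
        n = [mix * x + (1 - mix) * y for x, y in zip(n_new, n)]  # damped update
        if change < tol:                                # input and output densities agree
            return n, energy, iteration
    raise RuntimeError("SCF did not converge")

density, orbital_energy, iterations = scf_hubbard_dimer()
print(density, orbital_energy, iterations)
```

Convergence is declared when the density stops changing between iterations. A real implementation would build F from one- and two-electron integrals over the basis functions and would usually monitor the total energy as well.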

Evolution and Legacy

The pioneering work with hand-cranked machines established both the conceptual framework and practical methodologies that would define computational chemistry as a discipline. The successful application to simple systems in the 1920s-1940s demonstrated the feasibility of computational approaches to chemical problems [11].

The trajectory of development moved from 1-2 atom systems in 1928, to 2-5 atom systems by 1970, to the present capability of studying molecules with 10-20 atoms using highly accurate methods [11]. Each step built upon the foundational work of the early pioneers who developed the mathematical formalism and computational strategies under severe technological constraints.

The legacy of these early efforts extends far beyond their specific computational results. They established:

- The validity of quantum mechanics for predicting chemical phenomena

- The methodology of computational science applied to chemical problems

- The collaborative model of theoretical and experimental verification

- The foundation for modern electronic structure theory

This pioneering work created the intellectual and methodological foundation upon which all subsequent computational chemistry has been built, ultimately enabling the sophisticated drug design and materials discovery applications that characterize the field today [16] [17].

The period following World War II marked a critical transformation in theoretical chemistry, culminating in the emergence of computational chemistry as a distinct scientific discipline. This transition was characterized by the convergence of theoretical breakthroughs in quantum mechanics, the increasing accessibility of digital computers, and the formation of an interdisciplinary community of scientists. Where pre-war developments consisted largely of individual contributions from researchers working within their native disciplines of physics or chemistry, the post-war era witnessed a conscious effort to build a cohesive community with shared tools, methods, and institutional structures [18]. The discipline's identity solidified as theoretical concepts became practically applicable through computational tools that could solve previously intractable chemical problems, ultimately transforming chemical research and education [18]. This shift enabled the transition from qualitative molecular descriptions to quantitative predictions of molecular structures, properties, and reactivities, laying the groundwork for computational chemistry's modern applications in drug design, materials science, and catalysis [19].

Historical Backdrop: Pre-War Theoretical Foundations

The conceptual foundations for computational chemistry were established in the pre-war period through groundbreaking work in quantum mechanics. The 1927 work of Walter Heitler and Fritz London, who applied valence bond theory to the hydrogen molecule, represented the first theoretical calculation of a chemical bond [19]. During the 1930s and 1940s, key textbooks such as Linus Pauling and E. Bright Wilson's "Introduction to Quantum Mechanics – with Applications to Chemistry" (1935) and Heitler's "Elementary Wave Mechanics – with Applications to Quantum Chemistry" (1945) provided the mathematical frameworks that would guide future computational approaches [19].

These early developments faced significant theoretical and practical challenges. The mathematical complexity of solving the Schrödinger equation for systems with more than one electron limited applications to the simplest molecules [19]. Computations were performed manually or with mechanical desk calculators, constraining the ambition and scope of theoretical investigations. More fundamentally, the researchers working on these problems remained largely within their disciplinary silos—physicists developing mathematical formalisms and chemists seeking to interpret experimental observations—with little momentum toward building a unified quantum chemistry community [18].

The Post-War Catalysts: Institutional, Technological, and Conceptual Shifts

The Advent of Digital Computing Technology

The development of electronic digital computers in the post-war period provided the essential technological catalyst for computational chemistry's emergence as a distinct discipline. Early machines such as the EDSAC at Cambridge, used for the first configuration interaction calculations with Gaussian orbitals by Boys and coworkers in the 1950s, demonstrated the potential for automated quantum chemical computations [19]. These computers enabled scientists to move beyond the simple systems that could be treated analytically and tackle increasingly complex molecules.

The impact of computing technology extended beyond mere calculation speed; it fostered new collaborative relationships and institutional arrangements. Theoretical chemists became extensive users of early digital computers, necessitating partnerships with computer scientists and access to institutional computing facilities [19] [18]. This shift from individual calculations to programmatic computational research represented a fundamental change in how theoretical chemistry was practiced, creating infrastructure dependencies and specialized knowledge requirements that helped define the new discipline's unique identity.

Algorithmic and Theoretical Breakthroughs

The increasing availability of computational resources drove parallel advances in theoretical methods and algorithms. In 1951, Clemens C. J. Roothaan's paper on the Linear Combination of Atomic Orbitals Molecular Orbitals (LCAO MO) approach provided a systematic mathematical framework for molecular orbital calculations that would influence the field for decades [19]. By 1956, the first ab initio Hartree-Fock calculations on diatomic molecules were performed at MIT using Slater-type orbitals [19].

The 1960s witnessed further methodological diversification with the development of semi-empirical methods such as CNDO, which simplified computations by parameterizing certain integrals based on experimental data [19]. These approaches balanced computational feasibility with chemical accuracy, making quantum chemical insights more accessible to practicing chemists. The emergence of these distinct computational methodologies—ranging from semi-empirical to ab initio approaches—created the methodological diversity that characterized computational chemistry as a discipline with multiple traditions and specialized subfields [18].

Table 1: Key Methodological Developments in Early Computational Chemistry

| Time Period | Computational Method | Key Innovators | Significance |
|---|---|---|---|
| 1927 | Valence Bond Theory | Heitler & London | First quantum mechanical treatment of chemical bond |
| 1951 | LCAO MO Approach | Roothaan | Systematic framework for molecular orbital calculations |
| 1950s | Configuration Interaction | Boys & coworkers | First post-Hartree-Fock method for electron correlation |
| 1956 | Ab Initio Hartree-Fock | MIT researchers | First non-empirical calculations on diatomic molecules |
| 1960s | Hückel Method | Various groups | Simple LCAO method for π electrons in conjugated hydrocarbons |
| 1960s | Semi-empirical Methods (CNDO) | Pople & others | Parameterized methods balancing accuracy and cost |

Community Formation and Institutionalization

The post-war period witnessed deliberate efforts to forge a cohesive identity for computational chemistry through community-building activities and institutional support. The formation of research groups dedicated specifically to quantum chemistry, the establishment of annual meetings, and the creation of specialized journals provided the social and institutional infrastructure necessary for disciplinary consolidation [18]. Unlike the pre-war era where researchers operated in disciplinary isolation, the post-war period saw active networking among research groups and individuals who identified specifically as quantum or computational chemists.

A critical development in this process was the emergence of "chemical translators"—researchers who could explain quantum chemical concepts in language accessible to experimental chemists [18]. These individuals played a crucial role in facilitating the influence of computational chemistry across chemical education and research, helping to disseminate computational insights to broader chemical audiences. Their work ensured that computational chemistry would not remain an isolated specialty but would instead transform how chemistry was taught and practiced more broadly.

Experimental and Computational Methodologies

Early Computational Workflows and Protocols

The transition to computational approaches required developing standardized protocols for setting up, performing, and analyzing quantum chemical calculations. Early practitioners established workflows that began with molecular system specification, followed by method selection, computation execution, and finally results interpretation—a sequence that remains fundamental to computational chemistry today [20].

Table 2: Early Computational Chemistry "Research Reagent Solutions"

| Computational Tool | Function | Theoretical Basis |
|---|---|---|
| Slater-type Orbitals | Basis functions for molecular orbitals | Exponential functions with the correct nuclear cusp and long-range decay |
| Gaussian-type Orbitals | More computationally efficient basis sets | Gaussian functions allowing integral simplification |
| Hartree-Fock Method | Approximate solution to the Schrödinger equation | Self-consistent field approach neglecting electron correlation |
| LCAO-MO Ansatz | Construction of molecular orbitals | Linear combination of atomic orbitals |
| Semi-empirical Parameters | Approximation of complex integrals | Empirical parameterization based on experimental data |
| Configuration Interaction | Treatment of electron correlation | Multi-determinant wavefunction expansion |

For ab initio calculations, the fundamental workflow involved selecting both a theoretical method (such as Hartree-Fock) and a basis set of mathematical functions centered on atomic nuclei to describe molecular orbitals [19]. The Hartree-Fock method itself represented a compromise—it provided a numerically tractable approach through its self-consistent field procedure but neglected electron correlation effects, requiring subsequent methodological refinements [19]. As the field matured, standardized computational protocols emerged, balancing accuracy requirements with the severe computational constraints of early computing systems.

Diagram 1: Early Computational Workflow

Key Software and Implementation

The late 1960s and early 1970s witnessed the emergence of specialized quantum chemistry software packages that standardized computational methods and made them more accessible to non-specialists. Programs such as ATMOL, Gaussian, IBMOL, and POLYATOM implemented efficient ab initio algorithms that significantly accelerated molecular orbital calculations [19]. Of these early programs, Gaussian has demonstrated remarkable longevity, evolving through continuous development into a widely used computational tool that remains relevant today.

The first mention of the term "computational chemistry" appeared in the 1970 book "Computers and Their Role in the Physical Sciences" by Fernbach and Taub, who observed that "'computational chemistry' can finally be more and more of a reality" [19]. This terminological recognition reflected the growing coherence of the field, as widely different methods began to be viewed as part of an emerging discipline. The 1970s also saw Norman Allinger's development of molecular mechanics methods such as the MM2 force field, which provided alternative approaches to quantum mechanics for predicting molecular structures and conformations [19]. The establishment of the Journal of Computational Chemistry in 1980 provided an official publication venue and further institutional identity for the discipline.

Impact and Legacy: Transforming Chemical Research

The emergence of computational chemistry fundamentally transformed chemical research practice and education. Computational approaches enabled the prediction of molecular structures and properties before synthesis, the exploration of reaction mechanisms not readily accessible to experimental observation, and the interpretation of spectroscopic data [19]. By providing a "third workhorse" alongside traditional synthesis and spectroscopy, computational chemistry expanded the chemist's toolkit, allowing for more rational design of molecules and materials [20].

The discipline's influence was recognized through numerous Nobel Prizes, most notably the 1998 award to Walter Kohn for density functional theory and John Pople for computational methods in quantum chemistry, and the 2013 award to Martin Karplus, Michael Levitt, and Arieh Warshel for multiscale models of complex chemical systems [19]. These honors acknowledged computational chemistry's central role in modern chemical research and its successful transition from specialized subfield to essential chemical methodology.

The post-war birth of computational chemistry established a foundation for subsequent developments that continue to evolve today. The integration of machine learning approaches with quantum chemical methods, the development of multi-scale simulation techniques, and the application of computational chemistry to drug design and materials science all build upon the disciplinary infrastructure established during this formative period [8] [21] [7]. From its origins in quantum mechanics research, computational chemistry has grown to become an indispensable component of modern chemical science, demonstrating the enduring legacy of the post-war disciplinary shift.

The genesis of modern computational chemistry is inextricably linked to the development of quantum mechanics in the early 20th century and its subsequent application to molecular systems. The fundamental challenge—to predict and explain how atoms combine to form molecules with specific structures and properties—required moving beyond classical physics and into the quantum realm. This transition produced two foundational, complementary, and at times competing theoretical frameworks: Valence Bond (VB) Theory and Molecular Orbital (MO) Theory [22] [23]. Both theories emerged from efforts to apply the new quantum mechanics to chemistry, representing different conceptual approaches to the same fundamental problem. Their development, refinement, and eventual implementation in computational methods form a critical chapter in the history of science, marking the origins of computational chemistry as a discipline that uses numerical simulations to solve chemical problems [18]. This whitepaper provides an in-depth technical examination of these two frameworks, detailing their theoretical bases, historical contexts, and their indispensable roles in modern computational protocols for drug development and materials science.

Historical Development and Theoretical Origins

The evolution of these theories was not linear but rather a complex interplay of ideas, personalities, and technological capabilities. Table 1 chronicles the key milestones in their development.

Table 1: Historical Milestones in VB and MO Theory Development

| Year | Key Figure(s) | Theoretical Advancement | Significance |
|---|---|---|---|
| 1916 | G.N. Lewis [23] | Electron-pair bond model; Lewis structures | Provided a qualitative, pre-quantum mechanical model of covalent bonding based on electron pairs. |
| 1927 | Heitler & London [22] [23] | Quantum mechanical treatment of H₂ | First successful application of quantum mechanics (wave functions) to a molecule, forming the basis of modern VB theory. |
| 1927-1928 | Friedrich Hund [24] | Concept of molecular orbitals | Laid the groundwork for MO theory by proposing delocalized orbitals for diatomic molecules. |
| 1928 | Linus Pauling [22] [23] | Resonance & Hybridization | Extended VB theory, making it applicable to polyatomic molecules and explaining molecular geometries. |
| 1928-1932 | Robert S. Mulliken [24] | Formalized MO theory | Developed the conceptual and mathematical framework of MO theory, emphasizing the molecular unit. |
| 1931 | Erich Hückel [24] | Hückel MO (HMO) theory | Created a semi-empirical method for π-electron systems, making MO theory applicable to organic molecules like benzene. |
| 1950s-1960s | John Pople & Others [24] | Ab initio methods & computational implementation | Developed systematic ab initio computational frameworks and software (Gaussian), transforming MO theory into a practical tool. |
| 1980s-Present | Shaik, Hiberty & Others [22] [23] | Modern VB theory revival | Addressed computational challenges of VB theory, leading to a resurgence and allowing it to compete with MO and DFT. |

The historical trajectory reveals a struggle for dominance between the two paradigms. Initially, VB theory, championed by Linus Pauling, was more popular among chemists because it used a language that was intuitive and aligned with classical chemical concepts like localized bonds and tetrahedral carbon [23]. Its ability to explain molecular geometry via hybridization and to treat reactivity through resonance structures made it immensely successful. However, by the 1950s and 1960s, MO theory, advocated by Robert Mulliken and others, began to gain the upper hand. This shift was driven by MO theory's more natural explanation of properties like paramagnetism in oxygen molecules and its greater suitability for implementation in the digital computer programs that were becoming available [25] [22] [24]. The subsequent development of sophisticated ab initio methods and Density Functional Theory (DFT) within the MO framework cemented its position as the dominant language for computational chemistry, though modern valence bond theory has seen a significant renaissance due to improved computational methods [22] [23].

Fundamental Principles and Comparative Analysis

Valence Bond Theory: A Localized Picture

Valence Bond theory describes a chemical bond as the result of the overlap between two half-filled atomic orbitals from adjacent atoms [26] [22]. Each overlapping orbital contains one unpaired electron, and these electrons pair with opposite spins to form a localized bond between the two atoms. The theory focuses on the concept of electron pairing between specific atoms.

A central tenet of VB theory is the condition of maximum overlap, which states that the stronger the overlap between the orbitals, the stronger the bond [22]. To account for the observed geometries of molecules, VB theory introduces hybridization. This model proposes that atomic orbitals (s, p, d) can mix to form new, degenerate hybrid orbitals that provide the optimal directional character for bonding [22]. For example:

- sp³ hybridization: As in methane (CH₄), forming four equivalent orbitals directed toward the corners of a tetrahedron.

- sp² hybridization: As in ethylene (C₂H₄), forming three trigonal planar orbitals and one unhybridized p orbital for π-bonding.

- sp hybridization: As in acetylene (C₂H₂), forming two linear orbitals and two unhybridized p orbitals for two π-bonds.
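The directional character of these hybrids follows from a short calculation. Writing an spⁿ hybrid as (s + √n·p)/√(1 + n) and requiring two equivalent hybrids to be orthogonal gives 1 + n·cos θ = 0, that is, cos θ = −1/n. A small Python check of the resulting interorbital angles:

```python
import math

def hybrid_angle_deg(n):
    """Angle (degrees) between two equivalent sp^n hybrids, from cos(theta) = -1/n."""
    return math.degrees(math.acos(-1.0 / n))

for label, n in [("sp", 1), ("sp2", 2), ("sp3", 3)]:
    print(f"{label}: {hybrid_angle_deg(n):.2f} degrees")
```

This recovers the linear (180°), trigonal planar (120°), and tetrahedral (about 109.47°) geometries of acetylene, ethylene, and methane.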

When a single Lewis structure is insufficient to describe the molecule, VB theory uses resonance, where the true molecule is represented as a hybrid of multiple valence bond structures [22].

Molecular Orbital Theory: A Delocalized Picture

In contrast, Molecular Orbital theory constructs a picture where electrons are delocalized over the entire molecule [25] [24]. Atomic orbitals (AOs) from all atoms in the molecule combine linearly (Linear Combination of Atomic Orbitals - LCAO) to form molecular orbitals (MOs). These MOs are one-electron wavefunctions that belong to the molecule as a whole.

Key principles of MO theory include:

- Bonding and Antibonding Orbitals: The constructive interference of AOs produces a bonding MO (e.g., σ, π) with electron density concentrated between nuclei, lower in energy than the original AOs. Destructive interference produces an antibonding MO (e.g., σ\*, π\*) with a nodal plane between nuclei and higher energy [25] [24].

- Aufbau Principle: Electrons fill the available MOs starting from the lowest energy level.

- Bond Order: Calculated as (Number of electrons in bonding MOs - Number of electrons in antibonding MOs) / 2, providing a quantitative measure of bond strength and stability [24].
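The aufbau principle and the bond-order formula can be combined in a few lines of Python. The sketch assumes the MO ordering appropriate for O₂ and F₂; for B₂, C₂, and N₂ the σ2p level lies above π2p, which changes the unpaired-electron count for B₂ and C₂ but not the bond orders. Degenerate π levels are filled according to Hund's rule, which is how the MO picture accounts for the paramagnetism of O₂.

```python
# MO levels of a homonuclear diatomic in the O2/F2 ordering:
# (label, number of degenerate spatial orbitals, bonding?)
LEVELS = [
    ("sigma 1s", 1, True), ("sigma* 1s", 1, False),
    ("sigma 2s", 1, True), ("sigma* 2s", 1, False),
    ("sigma 2p", 1, True), ("pi 2p", 2, True),
    ("pi* 2p", 2, False), ("sigma* 2p", 1, False),
]

def fill_diatomic(n_electrons, levels=LEVELS):
    """Aufbau filling with Hund's rule; returns (bond order, number of unpaired electrons)."""
    bonding = antibonding = unpaired = 0
    remaining = n_electrons
    for _label, degeneracy, is_bonding in levels:
        if remaining == 0:
            break
        n = min(remaining, 2 * degeneracy)      # electrons placed in this (possibly degenerate) level
        remaining -= n
        if is_bonding:
            bonding += n
        else:
            antibonding += n
        unpaired += min(n, 2 * degeneracy - n)  # Hund's rule: singly occupy degenerate orbitals first
    if remaining:
        raise ValueError("not enough levels for this many electrons")
    return (bonding - antibonding) / 2, unpaired

for name, electrons in [("H2", 2), ("He2", 4), ("N2", 14), ("O2", 16), ("F2", 18)]:
    order, unpaired = fill_diatomic(electrons)
    print(f"{name}: bond order {order}, unpaired electrons {unpaired}")
```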

Theoretical Comparison

The following diagram illustrates the fundamental logical relationship and comparative features of the two theories.

Computational Implementation and Methodologies

The transition of these theories from conceptual frameworks to practical tools is the cornerstone of computational chemistry. The following workflow outlines a generalized modern computational approach, which often integrates concepts from both VB and MO theories.

Detailed Computational Protocols

Protocol 1: Full Configuration Interaction (FCI) with Natural Orbital Analysis

This protocol, as used in a 2025 study to derive a global bonding descriptor (Fbond), represents a high-accuracy ab initio approach [27].

- System Preparation: Define the molecular geometry (Cartesian coordinates or internal coordinates) and select an appropriate atomic orbital basis set (e.g., STO-3G, 6-31G, cc-pVDZ).

- Hartree-Fock Calculation: Perform a restricted Hartree-Fock (RHF) calculation to obtain a reference wavefunction and a set of canonical molecular orbitals. This step provides the initial mean-field approximation of the electron distribution.

- Frozen-Core FCI Calculation: Execute a Full Configuration Interaction calculation within a frozen-core approximation. This involves:

  - Correlating all valence electrons while keeping the core orbitals doubly occupied in every determinant, so that core electrons enter only through their mean-field interaction and are excluded from the correlation treatment.

  - Generating all possible electron configurations (determinants) by exciting electrons from occupied to virtual orbitals at every excitation level.

  - Diagonalizing the full electronic Hamiltonian matrix in this determinant basis to obtain the exact solution of the Schrödinger equation within the chosen basis set and frozen-core space.

- Natural Orbital Analysis: Diagonalize the first-order reduced density matrix obtained from the FCI wavefunction. The eigenvectors are the "natural orbitals," and the eigenvalues are their occupation numbers, which range from 0 to 2.

- Quantum Information Analysis: Calculate the von Neumann entropy from the natural orbital occupation number distribution. This quantifies the total electron correlation and entanglement in the system [27].

- Descriptor Calculation: Compute the global bonding descriptor Fbond using the formula: Fbond = 0.5 × (HOMO-LUMO Gap) × (Maximum Entanglement Entropy) [27]. This descriptor synthesizes energetic and correlation information.
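The natural-orbital, entropy, and descriptor steps can be sketched for the smallest nontrivial case, two electrons in two orbitals, where the spin-summed first-order reduced density matrix is 2 × 2 and can be diagonalized in closed form. The density-matrix values, the HOMO-LUMO gap, and the entropy convention (the von Neumann entropy of the trace-normalized density matrix, S = −Σ pᵢ ln pᵢ with pᵢ = nᵢ/N) are all assumptions for illustration; the cited study may define the maximum entanglement entropy differently, for example per orbital.

```python
import math

def natural_occupations_2x2(rdm):
    """Eigenvalues (natural occupation numbers) of a symmetric 2x2 spin-summed 1-RDM, largest first."""
    (a, b), (_, d) = rdm
    mean, half_gap = (a + d) / 2, math.sqrt(((a - d) / 2) ** 2 + b * b)
    return [mean + half_gap, mean - half_gap]

def occupation_entropy(occupations):
    """von Neumann entropy of the trace-normalised 1-RDM: -sum p ln p with p_i = n_i / N.

    One common convention, assumed here; not necessarily the definition used in [27].
    """
    total = sum(occupations)
    return -sum((n / total) * math.log(n / total) for n in occupations if n > 0)

def f_bond(homo_lumo_gap, max_entropy):
    """Descriptor as stated in the protocol: 0.5 x (HOMO-LUMO gap) x (maximum entanglement entropy)."""
    return 0.5 * homo_lumo_gap * max_entropy

# Hypothetical 1-RDM for two electrons in two orbitals (not data from the study)
rdm = [[1.0, 0.9], [0.9, 1.0]]
occ = natural_occupations_2x2(rdm)   # approx. [1.9, 0.1]
s = occupation_entropy(occ)
print(occ, s, f_bond(homo_lumo_gap=0.5, max_entropy=s))
```

In this convention an uncorrelated closed-shell wavefunction has occupations (2, 0) and zero entropy, so the descriptor vanishes; growing correlation moves the occupations away from 0 and 2 and raises both S and Fbond.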

Protocol 2: Variational Quantum Eigensolver (VQE) with UCCSD Ansatz

This protocol is designed for implementation on quantum computers or simulators, demonstrating the framework's method-agnostic nature [27].

- Qubit Mapping: Map the molecular Hamiltonian (from an initial HF calculation) to a qubit Hamiltonian using a transformation such as the Jordan-Wigner or Bravyi-Kitaev encoding.

- Ansatz Selection: Prepare a parameterized wavefunction ansatz. The Unitary Coupled-Cluster Singles and Doubles (UCCSD) ansatz is a common choice, as it is capable of capturing significant electron correlation effects.

- Classical Optimizer Setup: Choose a classical optimization algorithm (e.g., COBYLA, SPSA) to minimize the expectation value of the energy.

- VQE Iteration Loop:

  - The quantum processor (or simulator) prepares the ansatz state with a given set of parameters.

  - The expectation value of the Hamiltonian is estimated from repeated measurements.

  - The classical optimizer uses this energy value to update the parameters for the next iteration.

  - The loop continues until the energy converges.

- Wavefunction Analysis: Once optimized, the VQE wavefunction is analyzed to extract properties in the same way as in the natural orbital, quantum information, and descriptor steps of the FCI protocol, including the calculation of entanglement measures and the Fbond descriptor.
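The structure of the VQE loop can be seen in a one-qubit toy problem, simulated exactly in Python. Everything here is an illustrative stand-in: the Hamiltonian H = a·Z + b·X has hypothetical coefficients rather than coming from a qubit-mapped molecule, the ansatz is a single Ry(θ) rotation instead of UCCSD, expectation values are computed exactly instead of estimated from measurements, and plain gradient descent with the parameter-shift rule replaces COBYLA or SPSA.

```python
import math

# Hypothetical one-qubit Hamiltonian H = A*Z + B*X (illustrative coefficients, not a molecule)
A, B = 0.5, 0.3

def energy(theta, a=A, b=B):
    """<psi(theta)|H|psi(theta)> for psi(theta) = Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>.

    <Z> = cos(theta) and <X> = sin(theta); computed exactly here, standing in for
    repeated measurements on a quantum device.
    """
    return a * math.cos(theta) + b * math.sin(theta)

def parameter_shift_gradient(theta):
    """Exact derivative of the energy for a single Ry rotation (parameter-shift rule)."""
    return (energy(theta + math.pi / 2) - energy(theta - math.pi / 2)) / 2

def vqe(theta=0.1, learning_rate=0.5, tol=1e-12, max_iter=1000):
    e_old = energy(theta)
    for _ in range(max_iter):
        theta -= learning_rate * parameter_shift_gradient(theta)  # classical parameter update
        e_new = energy(theta)                                      # "quantum" energy evaluation
        if abs(e_new - e_old) < tol:                               # energy convergence
            return theta, e_new
        e_old = e_new
    raise RuntimeError("VQE did not converge")

theta_opt, e_opt = vqe()
print(f"VQE energy {e_opt:.8f}, exact ground state {-math.hypot(A, B):.8f}")
```

Because this ansatz can represent the exact ground state of the toy Hamiltonian, the loop converges to −√(a² + b²); for a real molecule with UCCSD, the converged energy is only as good as the ansatz and the measurement statistics allow.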

Table 2: Key Computational "Reagents" and Resources

| Resource Category | Specific Examples | Function & Application |
|---|---|---|
| Basis Sets [27] [24] | STO-3G, 6-31G, cc-pVDZ, cc-pVTZ | Sets of mathematical functions (Gaussian-type orbitals) that represent atomic orbitals. The size and quality of the basis set determine the accuracy and computational cost of the calculation. |
| Electronic Structure Methods | HF, MP2, CCSD(T), CASSCF, DFT Functionals (e.g., B3LYP) | The computational recipe that determines how electron correlation is treated: HF provides the uncorrelated mean-field reference, while MP2, CCSD(T), CASSCF, and DFT functionals recover correlation energy in different ways. This treatment is vital for accurate predictions of energies and properties. |
| Wavefunction Analysis Tools | Natural Bond Orbital (NBO), Quantum Theory of Atoms in Molecules (QTAIM), Density Matrix Analysis | Software tools for interpreting the computed wavefunction to extract chemical concepts like bond orders, atomic charges, and orbital interactions. |
| Software Packages [27] [24] | PySCF, Qiskit Nature, Gaussian, GAMESS | Integrated software suites that implement the algorithms for quantum chemical calculations, from geometry optimization to property prediction. |
| Quantum Computing Libraries [27] | Qiskit (IBM), Cirq (Google) | Software libraries that provide tools for building and running quantum circuits, including implementations of VQE and UCCSD for chemistry problems. |

Quantitative Applications and Data in Molecular Systems

The power of these frameworks is demonstrated by their ability to generate quantitative predictions and classifications of molecular behavior. A 2025 study applied the unified Fbond descriptor across a range of molecules, revealing distinct bonding regimes based on quantum correlation [27].

Table 3: Calculated Bonding Descriptor (Fbond) for Representative Molecules [27]

| Molecule | Basis Set | Fbond Value | Bonding Type / Correlation Regime |
|---|---|---|---|
| H₂ | 6-31G | 0.0314 | σ-bond / Weak Correlation |
| NH₃ | STO-3G | 0.0321 | σ-bonds / Weak Correlation |
| H₂O | STO-3G | 0.0352 | σ-bonds / Weak Correlation |
| CH₄ | STO-3G | 0.0396 | σ-bonds / Weak Correlation |
| C₂H₄ | STO-3G | 0.0653 | σ + π-bonds / Strong Correlation |
| N₂ | STO-3G | 0.0665 | σ + 2π-bonds / Strong Correlation |
| C₂H₂ | STO-3G | 0.0720 | σ + 2π-bonds / Strong Correlation |

The data in Table 3 highlights a critical finding from the modern unified framework: the quantum correlational structure, as measured by Fbond, is determined primarily by bond type (σ vs. π) rather than traditional factors like bond polarity or atomic electronegativity differences [27]. The σ-only bonding systems (H₂, NH₃, H₂O, CH₄) cluster in a narrow range of Fbond values (0.031–0.040), while π-containing systems (C₂H₄, N₂, C₂H₂) exhibit significantly higher Fbond values (0.065–0.072), indicating a regime of stronger electron correlation.
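The ranges quoted above can be checked directly against Table 3. The Python snippet below only transcribes the table's Fbond values, groups them by bond type, and confirms that the two groups do not overlap. Note that H₂ was computed with 6-31G while the other molecules used STO-3G, so the comparison mixes basis sets.

```python
from statistics import mean

# Fbond values transcribed from Table 3, grouped by bonding type
sigma_only = {"H2": 0.0314, "NH3": 0.0321, "H2O": 0.0352, "CH4": 0.0396}
with_pi = {"C2H4": 0.0653, "N2": 0.0665, "C2H2": 0.0720}

for label, group in [("sigma-only", sigma_only), ("pi-containing", with_pi)]:
    values = list(group.values())
    print(f"{label}: range {min(values):.4f} to {max(values):.4f}, mean {mean(values):.4f}")

# The groups do not overlap: the largest sigma-only value is below the smallest pi value
print(max(sigma_only.values()) < min(with_pi.values()))
```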

For researchers in drug development, these theoretical frameworks are not mere academic exercises but are fundamental to computer-aided drug design (CADD). Molecular Orbital theory, often implemented via Density Functional Theory (DFT), is crucial for:

- Reactivity Prediction: Calculating frontier molecular orbital (HOMO and LUMO) energies to predict a molecule's susceptibility to nucleophilic or electrophilic attack.

- Non-Covalent Interactions: Modeling the weak interactions (e.g., π-π stacking, hydrogen bonding) that are critical for drug-receptor binding, where accurate electron correlation treatment is essential.

- Spectroscopic Properties: Predicting UV-Vis, IR, and NMR spectra to aid in the identification and characterization of novel pharmaceutical compounds.

Valence Bond theory provides complementary, intuitive insights into:

- Reaction Mechanism Elucidation: Using concepts like resonance and hybridization to map out reaction pathways, such as the formation of transition states in enzyme-catalyzed reactions.

- Rationalizing Tautomerism and Tautomeric Stability: Modeling the electronic reorganization in tautomers, which can profoundly affect a drug's bioavailability and binding affinity.

In conclusion, the journey from the foundational quantum mechanical research of Heitler, London, Pauling, Mulliken, and Hund to the sophisticated computational algorithms of today represents the very origin and maturation of computational chemistry. While Molecular Orbital theory currently forms the backbone of most computational workflows in pharmaceutical research, the resurgence of Valence Bond theory offers a deeper, more chemically intuitive understanding of electron correlation and bond formation. The most powerful modern approaches, as exemplified by the unified Fbond framework, increasingly seek to integrate the strengths of both pictures to provide a more complete understanding of molecular behavior, thereby accelerating the discovery and optimization of new therapeutic agents.

From Theory to Practice: Algorithmic Breakthroughs and Pharmaceutical Applications

The field of computational chemistry, as recognized today, was fundamentally shaped by three pivotal methodological advances during the 1960s. This period witnessed the transformation of quantum chemistry from a discipline focused on qualitative explanations to one capable of producing quantitatively accurate predictions for molecular systems. The emergence of this capability stemmed from concurrent developments in computationally feasible basis sets, practical approaches to electron correlation, and the derivation of analytic energy derivatives [11]. These three elements—often termed the "1960s Trinity"—provided the foundational toolkit that enabled the first widespread applications of quantum chemistry to chemical problems, forming the origin point for modern computational approaches in chemical research and drug design.

Historical Background and Pre-1960s Landscape

The theoretical foundation for computational chemistry was established with the formulation of the Schrödinger equation in 1926. Early pioneers, beginning in 1928, made attempts to solve this equation for simple systems like the helium atom and the hydrogen molecule using hand-cranked calculating machines [11]. These calculations verified that quantum mechanics could quantitatively reproduce experimental observations, but the computational difficulty limited applications to systems of only 1-2 atoms.

The post-World War II period saw the invention of electronic computers, which became available for scientific use in the 1950s [11]. This technological advancement coincided with a shift in physics toward nuclear structure, creating an opportunity for chemists to develop their own computational methodologies. The stage was set for the breakthrough developments that would occur in the following decade, when the convergence of several theoretical advances would finally make quantitative computational chemistry a reality.

The First Pillar: Development of Computationally Feasible Basis Sets

Theoretical Foundation and Evolution

Basis sets form the mathematical foundation for representing molecular orbitals in computational quantum chemistry. A basis set is a collection of functions, typically centered on atomic nuclei, used to expand the molecular orbitals of a system. The development of computationally feasible basis sets in the 1960s was crucial for moving beyond the conceptual limitations of earlier approaches.

Prior to the 1960s, quantum chemical calculations were hampered by the lack of standardized, efficient basis sets that could be applied across a range of molecular systems. The breakthrough came with basis sets that balanced mathematical completeness against practical computational demands. These basis sets typically employed Gaussian-type orbitals (GTOs). Individually, GTOs represent electron distributions less accurately than Slater-type orbitals, because they lack the cusp at the nucleus and decay too quickly at long range, but they offer a decisive computational advantage through the Gaussian product theorem, which allows multi-center integrals to be calculated efficiently [28].
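The Gaussian product theorem states that the product of two s-type Gaussians, exp(−a|r−A|²) and exp(−b|r−B|²), equals a single Gaussian with exponent p = a + b centered at P = (aA + bB)/p, multiplied by the constant K = exp(−ab|A−B|²/p). The short Python/NumPy check below verifies this identity at random points; the exponents and centers are arbitrary illustrative values, not taken from any published basis set. Because each pair of Gaussians collapses onto one center, a four-center two-electron integral reduces to an interaction between two Gaussians, which is what makes GTO integrals cheap compared with their Slater-type counterparts.

```python
import numpy as np

def s_gaussian(alpha, center, r):
    """Unnormalized s-type Gaussian exp(-alpha * |r - center|^2) evaluated at points r."""
    d = r - center
    return np.exp(-alpha * np.sum(d * d, axis=-1))

def gaussian_product(a, A, b, B):
    """Return (p, P, K) such that exp(-a|r-A|^2) * exp(-b|r-B|^2) = K * exp(-p|r-P|^2)."""
    p = a + b                                       # combined exponent
    P = (a * A + b * B) / p                         # new center on the line joining A and B
    K = np.exp(-a * b / p * np.sum((A - B) ** 2))   # constant prefactor
    return p, P, K

# Illustrative exponents (bohr^-2) and centers (bohr)
a, A = 0.8, np.array([0.0, 0.0, 0.0])
b, B = 1.3, np.array([0.0, 0.0, 1.4])
p, P, K = gaussian_product(a, A, b, B)

rng = np.random.default_rng(0)
points = rng.normal(size=(5, 3))
lhs = s_gaussian(a, A, points) * s_gaussian(b, B, points)   # product of two Gaussians
rhs = K * s_gaussian(p, P, points)                          # single Gaussian on center P
print(np.allclose(lhs, rhs))
```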

Key Methodological Advances

The transformation was marked by several critical developments:

- Systematic contraction schemes: Researchers developed contracted Gaussian basis sets in which fixed linear combinations of primitive Gaussian functions represent each atomic orbital, significantly reducing the number of basis functions and hence the number of integrals that must be stored and processed.

- Standardization for chemical elements: Basis sets were developed and optimized for atoms across the periodic table, enabling consistent application to diverse molecular systems.

- Balance between accuracy and cost: The 1960s saw the creation of basis sets of varying sizes (single-zeta, double-zeta, triple-zeta) and polarization functions, allowing chemists to select an appropriate level of theory for their specific problem.
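Contraction can be illustrated with the STO-3G hydrogen 1s function introduced in 1969 by Hehre, Stewart, and Pople, in which three primitive Gaussians with fixed coefficients mimic one Slater-type orbital. The Python sketch below assumes normalized primitives; it checks that the contracted function is normalized and counts the symmetry-unique two-electron integrals for a hypothetical basis of 10 contracted functions versus the 30 primitives behind them. The primitive integrals still have to be evaluated, but the SCF problem and the stored integral list shrink to the contracted size.

```python
import math

# STO-3G contraction of the hydrogen 1s orbital (Slater exponent zeta = 1.24):
# exponents (bohr^-2) and contraction coefficients for normalized primitives.
EXPONENTS = [3.42525091, 0.62391373, 0.16885540]
COEFFICIENTS = [0.15432897, 0.53532814, 0.44463454]

def primitive_overlap(a, b):
    """Overlap of two normalized s Gaussians sharing the same center."""
    return (2.0 * math.sqrt(a * b) / (a + b)) ** 1.5

def contracted_self_overlap(exponents, coefficients):
    """<chi|chi> for chi = sum_i c_i g_i with fixed (frozen) coefficients c_i."""
    return sum(
        ci * cj * primitive_overlap(ai, aj)
        for ai, ci in zip(exponents, coefficients)
        for aj, cj in zip(exponents, coefficients)
    )

def unique_eri_count(n):
    """Number of symmetry-unique two-electron integrals (ij|kl) over n real functions."""
    pairs = n * (n + 1) // 2
    return pairs * (pairs + 1) // 2

print(contracted_self_overlap(EXPONENTS, COEFFICIENTS))  # close to 1
# Hypothetical basis: 10 contracted functions, each built from 3 primitives
print(unique_eri_count(10), unique_eri_count(30))
```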

These developments were incorporated into software packages in the early 1970s, leading to what has been described as "an explosion in the literature of applications of computations to chemical problems" [11].

Table: Evolution of Basis Set Capabilities in the 1960s

| Period | Typical Systems | Basis Set Features | Computational Capabilities and Limitations |
|---|---|---|---|
| Pre-1960s | 1-2 atoms | Minimal sets, Slater-type orbitals | Hand calculations, limited to the smallest systems |
| Early 1960s | 2-5 atoms | Uncontracted Gaussians, minimal basis | Limited integral evaluation capabilities |
| Late 1960s | 5-10 atoms | Contracted Gaussians, double-zeta quality | Emerging capabilities for small polyatomics |
| Post-1960s | 10-20 atoms | Polarization functions, extended sets | Larger systems becoming feasible |

The Second Pillar: Solving the Electron Correlation Problem

Theoretical Significance of Electron Correlation

Electron correlation, often called the "chemical glue" of nature, is the correction to the Hartree-Fock approximation, in which each electron is treated as moving independently in the average field of all the others [29]. The electron correlation problem arises because electrons in fact correlate their motions to avoid one another as a result of their Coulomb repulsion. Löwdin formally defined the correlation energy as "the difference between the exact and the Hartree-Fock energy" [29].
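Written as an equation, Löwdin's definition is shown below together with a standard benchmark for the helium atom. The helium numbers are widely tabulated literature values (the Hartree-Fock complete-basis-set limit and the exact nonrelativistic energy, in hartree), quoted here for illustration rather than taken from this article's sources. Because the Hartree-Fock energy is variational, it lies above the exact nonrelativistic energy, so the correlation energy is negative; for helium it amounts to under 1.5% of the total energy, yet it is chemically significant.

```latex
\[
E_{\text{corr}} = E_{\text{exact}} - E_{\text{HF}}
\]
\[
E_{\text{corr}}(\mathrm{He}) \approx -2.90372\,E_h - (-2.86168\,E_h) = -0.04204\,E_h \approx -1.14\ \mathrm{eV}
\]
```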

The significance of this problem is hard to overstate: without proper accounting for electron correlation, theoretical predictions of molecular properties such as bond dissociation energies, reaction barriers, and electronic spectra are quantitatively unreliable and, for some systems, qualitatively incorrect. Early work on correlation dates to the 1930s with Wigner's studies of the uniform electron gas [29], but practical methods for molecular systems emerged only in the 1960s.

Practical Methodologies Developed in the 1960s

The 1960s witnessed the demonstration of reasonably accurate approximate solutions to the electron correlation problem [11]. Several key approaches emerged:

Configuration Interaction (CI): This method expands the wavefunction as a linear combination of Slater determinants representing different electron configurations. The full CI approach is exact within a given basis set but computationally intractable for larger systems. Truncated CI methods (CISD, CISDT) developed in this period provided practical compromises [29].

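Many-Body Perturbation Theory: Møller-Plesset perturbation theory, formulated in 1934, treats correlation as a perturbation of the Hartree-Fock solution; its second- and third-order variants (MP2, MP3) provide size-consistent correlation corrections at manageable computational cost.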

Multiconfiguration Self-Consistent Field (MCSCF): This approach allowed simultaneous optimization of orbital and configuration coefficients, essential for describing bond breaking and electronically excited states.
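Why full CI is intractable while truncated CI remains practical is essentially a counting argument. The Python sketch below counts Slater determinants with fixed numbers of α and β electrons, both for full CI and for CI truncated at a maximum excitation level (2 corresponds to CISD). It ignores spatial symmetry and spin adaptation, which reduce the numbers somewhat, and the orbital counts at half filling are arbitrary illustrative choices.

```python
from math import comb

def full_ci_determinants(n_orb, n_alpha, n_beta):
    """Number of Slater determinants with fixed numbers of alpha and beta electrons."""
    return comb(n_orb, n_alpha) * comb(n_orb, n_beta)

def truncated_ci_determinants(n_orb, n_alpha, n_beta, max_excitation):
    """Determinants reachable from the reference by at most max_excitation excitations."""
    total = 0
    for ka in range(n_alpha + 1):        # number of alpha electrons excited
        for kb in range(n_beta + 1):     # number of beta electrons excited
            if ka + kb <= max_excitation:
                # choose which occupied orbitals are vacated and which virtuals are filled
                total += (comb(n_alpha, ka) * comb(n_orb - n_alpha, ka)
                          * comb(n_beta, kb) * comb(n_orb - n_beta, kb))
    return total

for n_orb in (10, 20, 30):
    n_per_spin = n_orb // 2              # n_orb electrons in n_orb spatial orbitals
    cisd = truncated_ci_determinants(n_orb, n_per_spin, n_per_spin, 2)
    fci = full_ci_determinants(n_orb, n_per_spin, n_per_spin)
    print(f"{n_orb} orbitals: CISD {cisd:,} determinants, full CI {fci:,}")
```

The CISD count grows only polynomially with the number of orbitals, whereas the full CI count grows combinatorially, which is why truncation was the practical compromise.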

The landmark work of Kolos and Wolniewicz on the hydrogen molecule exemplifies the power of these developing correlation methods. Their increasingly accurate calculations revealed discrepancies with experimentally derived dissociation energies, ultimately prompting experimentalists to reexamine their measurements and methods [11]. This case demonstrated how theoretical chemistry could not just complement but actually guide experimental science.

Table: Electron Correlation Methods and Their Applications

| Method | Key Principle | Strengths | 1960s-Era Limitations |
|---|---|---|---|
| Configuration Interaction (CI) | Linear combination of determinants | Systematic improvability | Size inconsistency, exponential scaling |
| Møller-Plesset Perturbation Theory | Order-by-order perturbation correction | Size consistency, systematic | Divergence issues for some systems |
| Multiconfiguration SCF (MCSCF) | Self-consistent optimization of orbitals and CI coefficients | Handles quasidegeneracy | Choice of active space, convergence issues |
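The second-order Møller-Plesset correction can be written compactly in a spin-orbital basis as E(2) = ¼ Σ |⟨ij||ab⟩|² / (εi + εj − εa − εb), summed over occupied orbitals i, j and virtual orbitals a, b. The NumPy sketch below evaluates this expression; the orbital energies and the single nonzero antisymmetrized integral are hypothetical toy values chosen so the result can be checked by hand. Because occupied orbital energies lie below virtual ones, every denominator is negative and the correction lowers the energy; the expression is also a sum of pair contributions, one way to see why MP2 is size-consistent.

```python
import numpy as np

def mp2_energy(eps_occ, eps_vir, g_oovv):
    """Second-order Moller-Plesset correlation energy in a spin-orbital basis.

    g_oovv[i, j, a, b] holds the antisymmetrized integrals <ij||ab>.
    """
    denom = (eps_occ[:, None, None, None] + eps_occ[None, :, None, None]
             - eps_vir[None, None, :, None] - eps_vir[None, None, None, :])
    return 0.25 * np.sum(np.abs(g_oovv) ** 2 / denom)

# Toy, hypothetical input (hartree): 2 occupied and 2 virtual spin orbitals
# with a single independent integral <01||01> = 0.1.
eps_occ = np.array([-0.5, -0.5])
eps_vir = np.array([0.5, 0.5])
g = np.zeros((2, 2, 2, 2))
g[0, 1, 0, 1] = g[1, 0, 1, 0] = 0.1
g[1, 0, 0, 1] = g[0, 1, 1, 0] = -0.1   # antisymmetry under i<->j and a<->b
print(mp2_energy(eps_occ, eps_vir, g))  # about -0.005
```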

The Third Pillar: Analytic Derivatives of Energy

Theoretical Breakthrough and Mathematical Formulation

The derivation of formulas for analytic derivatives of the energy with respect to nuclear coordinates represented perhaps the most practically significant advance of the 1960s Trinity [11]. Before this development, molecular properties such as energy gradients and force constants had to be obtained by numerical differentiation of the energy, which required many energy evaluations and suffered from limited precision.
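The cost argument behind this advance can be sketched with a one-dimensional model. The Python below uses a Morse potential as a stand-in for an ab initio energy (the parameters are hypothetical, in atomic units) and compares its analytic derivative with a central finite difference. Numerically, each gradient component costs two extra energy evaluations, so a molecule with N atoms needs 6N energies per gradient, and the result depends on the chosen step size h; an analytic gradient yields all 3N components from a single calculation.

```python
import math

# Illustrative Morse potential (hartree, bohr); parameters are hypothetical.
D_E, A_M, R_E = 0.17, 1.0, 1.4

def energy(r):
    """Model potential-energy curve V(r) = D (1 - exp(-a (r - re)))^2."""
    return D_E * (1.0 - math.exp(-A_M * (r - R_E))) ** 2

def analytic_gradient(r):
    """dV/dr obtained by differentiating the model formula directly."""
    e = math.exp(-A_M * (r - R_E))
    return 2.0 * D_E * A_M * e * (1.0 - e)

def central_difference(f, r, h=1e-4):
    """Numerical derivative: two extra energy evaluations per coordinate."""
    return (f(r + h) - f(r - h)) / (2.0 * h)

def energies_for_numerical_gradient(n_atoms):
    """Central differences need 2 energies for each of the 3N Cartesian coordinates."""
    return 2 * 3 * n_atoms

r = 1.7
print(analytic_gradient(r), central_difference(energy, r))
print(energies_for_numerical_gradient(20))  # energy evaluations per numerical gradient
```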

The theoretical breakthrough involved formulating analytic expressions for first, second, and eventually third derivatives of the electronic energy [30]. This allowed direct calculation of:

- Energy gradients (first derivatives) for geometry optimization

- Force constants (second derivatives) for harmonic frequency analysis

- Higher-order derivatives for anharmonic corrections and properties

The mathematical foundation relied on the Hellmann-Feynman theorem and its extensions, coupled with efficient computational implementations for various wavefunction types, particularly for single-configuration self-consistent-field (SCF) wavefunctions [30].
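In its basic form, the Hellmann-Feynman theorem states that for an exact (or fully variational) normalized wavefunction, the derivative of the energy with respect to a parameter λ, such as a nuclear coordinate, is the expectation value of the derivative of the Hamiltonian (first equation below). For SCF wavefunctions expanded in atom-centered basis functions, the basis moves with the nuclei, and the standard closed-shell Hartree-Fock gradient acquires an additional term involving the energy-weighted density matrix W, often called the Pulay force after Pulay's 1969 work on analytic SCF gradients. In the equations, P is the total density matrix, h the core-Hamiltonian matrix, S the overlap matrix, C the molecular orbital coefficients with orbital energies ε, V_NN the nuclear repulsion, and a superscript X_A denotes differentiation of the integrals with respect to a Cartesian coordinate of atom A:

```latex
\[
\frac{\partial E}{\partial \lambda} = \left\langle \Psi \,\middle|\, \frac{\partial \hat{H}}{\partial \lambda} \,\middle|\, \Psi \right\rangle
\]
\[
\frac{\partial E_{\mathrm{RHF}}}{\partial X_A} = \sum_{\mu\nu} P_{\mu\nu}\, h_{\mu\nu}^{X_A}
+ \frac{1}{2} \sum_{\mu\nu\lambda\sigma} P_{\mu\nu} P_{\lambda\sigma}
\left[ (\mu\nu|\sigma\lambda)^{X_A} - \tfrac{1}{2} (\mu\lambda|\sigma\nu)^{X_A} \right]
- \sum_{\mu\nu} W_{\mu\nu}\, S_{\mu\nu}^{X_A} + \frac{\partial V_{NN}}{\partial X_A}
\]
\[
P_{\mu\nu} = 2 \sum_{i}^{\mathrm{occ}} C_{\mu i} C_{\nu i}, \qquad
W_{\mu\nu} = 2 \sum_{i}^{\mathrm{occ}} \varepsilon_i\, C_{\mu i} C_{\nu i}
\]
```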

Impact on Computational Workflows

The availability of analytic derivatives revolutionized computational chemistry workflows in several ways:

Efficient geometry optimization: Transition state location and equilibrium geometry determination became feasible through direct gradient methods rather than inefficient point-by-point potential energy surface mapping.

Vibrational frequency calculation: Analytic second derivatives enabled routine computation of harmonic frequencies, providing critical connection to spectroscopic experiments.

Molecular dynamics and reaction pathways: With efficient gradients, trajectory calculations and intrinsic reaction coordinate following became practical.
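The first two of these uses can be sketched together on a one-dimensional model. The Python below runs a Newton-Raphson geometry optimization (step = −gradient/Hessian) on a Morse curve and then converts the curvature at the minimum into a harmonic vibrational wavenumber, ω = √(k/μ). The Morse parameters are illustrative values loosely modeled on H₂, not results from this article, and a real molecule would require the full 3N-dimensional gradient and Hessian rather than a single bond coordinate.

```python
import math

# Morse parameters loosely modeled on H2 (atomic units); illustrative only.
D_E, A_M, R_E = 0.1745, 1.029, 1.401
MU = 1836.15267 / 2.0          # reduced mass of H2 in electron masses (approximate)
HARTREE_TO_CM1 = 219474.63     # 1 hartree expressed in cm^-1

def gradient(r):
    """First derivative dV/dr of the Morse potential."""
    e = math.exp(-A_M * (r - R_E))
    return 2.0 * D_E * A_M * e * (1.0 - e)

def hessian(r):
    """Second derivative d2V/dr2 of the Morse potential (the force constant)."""
    e = math.exp(-A_M * (r - R_E))
    return 2.0 * D_E * A_M ** 2 * e * (2.0 * e - 1.0)

def newton_optimize(r, tol=1e-10, max_steps=50):
    """Newton-Raphson minimization using analytic first and second derivatives."""
    for _ in range(max_steps):
        step = -gradient(r) / hessian(r)
        r += step
        if abs(step) < tol:
            break
    return r

r_opt = newton_optimize(1.6)                   # start from a stretched geometry
k = hessian(r_opt)                             # force constant at the minimum
omega_cm1 = math.sqrt(k / MU) * HARTREE_TO_CM1  # harmonic wavenumber
print(r_opt, omega_cm1)
```

Newton-Raphson converges quadratically near the minimum only when the Hessian is positive; practical optimizers add safeguards such as step-size control and approximate Hessian updates.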

These developments were particularly crucial for connecting computational results to experimental observables, bridging the gap between quantum mechanics and spectroscopy, kinetics, and thermodynamics.

Methodological Protocols and Experimental Frameworks

Standard Computational Workflow

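The integration of the three methodological pillars enabled a standardized workflow for computational chemical investigations: select a basis set, solve the self-consistent-field equations, add a treatment of electron correlation, and use analytic derivatives to optimize the geometry and characterize stationary points through vibrational frequencies.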

The Scientist's Toolkit: Essential Research Reagents

Table: Essential Computational "Reagents" of 1960s Quantum Chemistry

| Tool/Component | Function | Theoretical Basis |
|---|---|---|
| Gaussian-Type Basis Sets | Represent molecular orbitals | Linear combination of atomic orbitals |
| Configuration Interaction | Account for electron correlation | Multideterminantal wavefunction expansion |
| Analytic Gradient Methods | Optimize molecular geometry | Hellmann-Feynman theorem derivatives |
| Potential Energy Surface | Model nuclear motion | Born-Oppenheimer approximation |
| SCF Convergence Algorithms | Solve Hartree-Fock equations | Iterative matrix diagonalization |
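As a minimal, self-contained instance of the SCF step, the sketch below solves the closed-shell Hartree-Fock (Roothaan-Hall) equations for H₂ in the STO-3G basis by iterative diagonalization. It is illustrative and rests on stated assumptions: only s-type Gaussians are handled, the integrals use the standard closed-form expressions for s functions, the bond length is fixed at 1.4 bohr, and no convergence acceleration is used. Textbook treatments of this system report a total energy near −1.117 hartree.

```python
import itertools
import math
import numpy as np

# STO-3G hydrogen 1s contraction (zeta = 1.24): exponents and coefficients
ALPHA = [3.42525091, 0.62391373, 0.16885540]
COEF = [0.15432897, 0.53532814, 0.44463454]

def boys0(t):
    """Zeroth-order Boys function F0(t), used in Coulomb-type integrals."""
    if t < 1e-10:
        return 1.0 - t / 3.0
    return 0.5 * math.sqrt(math.pi / t) * math.erf(math.sqrt(t))

def basis_function(center):
    """Contracted s function as (exponent, normalized coefficient, center) triples."""
    return [(a, c * (2.0 * a / math.pi) ** 0.75, center) for a, c in zip(ALPHA, COEF)]

def product(a, A, b, B):
    """Gaussian product theorem: combined exponent, center, and prefactor."""
    p = a + b
    return p, (a * A + b * B) / p, math.exp(-a * b / p * np.dot(A - B, A - B))

def one_electron(bf1, bf2, nuclei):
    """Overlap S, kinetic T, and nuclear-attraction V integrals between s functions."""
    S = T = V = 0.0
    for a, ca, A in bf1:
        for b, cb, B in bf2:
            p, P, K = product(a, A, b, B)
            s = (math.pi / p) ** 1.5 * K
            S += ca * cb * s
            T += ca * cb * a * b / p * (3.0 - 2.0 * a * b / p * np.dot(A - B, A - B)) * s
            for Z, C in nuclei:
                V -= ca * cb * 2.0 * math.pi / p * Z * K * boys0(p * np.dot(P - C, P - C))
    return S, T, V

def two_electron(bf1, bf2, bf3, bf4):
    """Electron-repulsion integral (12|34) in chemists' notation."""
    total = 0.0
    for (a, ca, A), (b, cb, B), (c, cc, C), (d, cd, D) in itertools.product(bf1, bf2, bf3, bf4):
        p, P, Kab = product(a, A, b, B)
        q, Q, Kcd = product(c, C, d, D)
        pref = 2.0 * math.pi ** 2.5 / (p * q * math.sqrt(p + q))
        total += ca * cb * cc * cd * pref * Kab * Kcd * boys0(p * q / (p + q) * np.dot(P - Q, P - Q))
    return total

def rhf_h2(R=1.4, tol=1e-10, max_iter=50):
    """Closed-shell Hartree-Fock total energy of H2 at bond length R (bohr)."""
    centers = [np.array([0.0, 0.0, 0.0]), np.array([0.0, 0.0, R])]
    nuclei = [(1.0, C) for C in centers]
    basis = [basis_function(C) for C in centers]
    n = len(basis)
    S, T, V = (np.zeros((n, n)) for _ in range(3))
    for i, j in itertools.product(range(n), repeat=2):
        S[i, j], T[i, j], V[i, j] = one_electron(basis[i], basis[j], nuclei)
    eri = np.zeros((n, n, n, n))
    for i, j, k, l in itertools.product(range(n), repeat=4):
        eri[i, j, k, l] = two_electron(basis[i], basis[j], basis[k], basis[l])
    H = T + V                                    # core Hamiltonian
    s_val, s_vec = np.linalg.eigh(S)
    X = s_vec @ np.diag(s_val ** -0.5) @ s_vec.T  # symmetric orthogonalization S^(-1/2)

    def fock(D):
        J = np.einsum("ijkl,kl->ij", eri, D)     # Coulomb term
        K = np.einsum("ikjl,kl->ij", eri, D)     # exchange term
        return H + 2.0 * J - K

    D = np.zeros((n, n))                         # core-Hamiltonian starting guess
    for _ in range(max_iter):
        _eps, C_prime = np.linalg.eigh(X.T @ fock(D) @ X)  # diagonalize F' = X^T F X
        C = X @ C_prime
        D_new = np.outer(C[:, 0], C[:, 0])       # one doubly occupied orbital
        converged = np.max(np.abs(D_new - D)) < tol
        D = D_new
        if converged:
            break
    E_elec = np.sum(D * (H + fock(D)))
    return E_elec + 1.0 / R                      # add nuclear repulsion Z1*Z2/R

print(rhf_h2(1.4))
```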

Case Study: The Hydrogen Molecule Breakthrough

The power of the emerging computational chemistry methodology is well illustrated by the work of Kolos and Wolniewicz on the hydrogen molecule in the late 1960s [11]. Their systematically improved calculations incorporated:

- Explicitly correlated wavefunctions containing the interelectronic distance r₁₂, an approach introduced for the hydrogen molecule by James and Coolidge in 1933

- Advanced basis sets with careful optimization of exponential parameters

- An essentially complete treatment of electron correlation, supplemented by relativistic corrections

When their most refined calculation differed from the experimentally accepted dissociation energy by 3.8 cm⁻¹, experimentalists were prompted to reexamine their methods. New spectra recorded at higher resolution, analyzed with revised vibrational quantum number assignments, ultimately confirmed the theoretical prediction [11]. This case established the paradigm of theory guiding experiment rather than merely following it.

Impact and Legacy

Immediate Scientific Impact

The convergence of basis sets, correlation methods, and analytic derivatives in the 1960s created an immediate transformation in chemical research:

- Software dissemination: These methods were incorporated into software packages that became widely available to chemists in the early 1970s [11].

- Domain expansion: Useful quantitative results became obtainable for molecules with up to 10-20 atoms, compared to the 2-5 atom systems feasible at the decade's beginning [11].

- Methodological bridge-building: The derivatives facilitated connection between electronic structure theory and nuclear motion programs for classical, semiclassical, and quantum dynamics [11].

Long-Term Influence on Drug Discovery and Materials Design

The 1960s Trinity established the conceptual and methodological framework that continues to underpin computational chemistry in pharmaceutical and materials research:

- Molecular mechanics/dynamics: The analytic derivatives and potential energy surface concepts enabled the force field approaches that dominate biomolecular modeling today [11].

- Rational drug design: The ability to compute molecular properties quantitatively provided the foundation for structure-based drug design.

- Materials modeling: The methods developed for molecular systems extended to periodic systems, enabling computational materials science.

The legacy of these developments is particularly evident in molecular mechanics, which has become so prevalent that "many chemists now equate it with computational chemistry" [11], even though it rests on the potential energy surface and derivative concepts refined by the quantum mechanical advances of the 1960s.

The three interconnected advances of the 1960s—computationally feasible basis sets, practical electron correlation methods, and analytic energy derivatives—collectively transformed quantum chemistry from a primarily explanatory science to a predictive one. This "1960s Trinity" provided the essential foundation upon which modern computational chemistry has been built, enabling its application to problems ranging from fundamental chemical physics to rational drug design. The methodological framework established during this period continues to influence computational approaches today, even as hardware capabilities and algorithmic sophistication have advanced dramatically. Understanding these historical developments provides essential context for contemporary researchers applying computational methods to chemical problems in both academic and industrial settings.

The field of computational chemistry originated from fundamental quantum mechanics research in the early 20th century, beginning with pivotal work like the 1927 paper by Walter Heitler and Fritz London, which applied quantum mechanics to the hydrogen molecule and marked the first quantum-mechanical treatment of the chemical bond [12]. This theoretical foundation slowly began to be applied to chemical structure, reactivity, and bonding through the contributions of pioneers like Linus Pauling, Robert S. Mulliken, and John C. Slater [12]. However, for decades, progress was hampered by the tremendous computational complexity of solving quantum mechanical equations for molecular systems.